Machine Learning WS 2023/2024

Prof. Andreas Nürnberger, M.Sc. Marcus Thiel 03. December 2023

Assignment Sheet 8

Assignment 8.1

So far in this course, we have only discussed classifiers that work on non-sequential data (or, alternatively, on data with a fixed sequence).

a) Why might those algorithms have trouble predicting the correct output for sequential data (such as time series or text) in specific tasks? Give an example and use it to explain the problem.

b) To make better use of sequential data, the concept of Recurrent Neural Networks (RNNs) was introduced. How do they differ from Feed-Forward Neural Networks?

c) Briefly explain the general idea of Backpropagation Through Time for training such networks, along with the inherent problems of vanishing and exploding gradients.

d) How do LSTMs (Long Short-Term Memory networks) try to solve the vanishing gradient problem?

e) Summarize the main takeaways of this question in 4 sentences or bullet points.
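
As a rough illustration of the gradient issue in subtask c), the sketch below tracks how the gradient of the last hidden state with respect to the first one behaves in a scalar recurrence. The function name `bptt_gradient`, the scalar weight `w`, and the starting state `h0` are assumptions made for this example only; real RNNs use weight matrices, but the repeated multiplication along the unrolled time steps works in the same way.

```python
import math


def bptt_gradient(w, steps, h0=0.1, use_tanh=False):
    """Return |d h_T / d h_0| for h_t = f(w * h_{t-1}), with f = identity or tanh."""
    h, grad = h0, 1.0
    for _ in range(steps):
        pre = w * h
        if use_tanh:
            h = math.tanh(pre)
            grad *= w * (1.0 - h * h)  # chain rule: derivative of tanh(w * h_prev)
        else:
            h = pre
            grad *= w  # each unrolled time step contributes one factor of w
    return abs(grad)


if __name__ == "__main__":
    print(bptt_gradient(0.5, 50))  # |w| < 1: the gradient shrinks towards zero
    print(bptt_gradient(1.5, 50))  # |w| > 1: the gradient grows very large
```

With a weight below 1 in magnitude the product of factors vanishes, and with a weight above 1 the linear version explodes.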

Assignment 8.2

Explain in your own words how a Naive Bayes classifier works. Cover the following points in your discussion:

a) Which formulas are used in Naive Bayes, and how are they related to a Maximum-A-Posteriori hypothesis?

b) What is the “naive” assumption made here and why is it relevant?

c) How are the probabilities in a Naive Bayes classifier estimated and used for classification?

d) How can we deal with missing values in a data set and zero frequency problems? (These are two different problems!)
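
For orientation on subtasks a) and b), the standard textbook formulation can be written as follows. The notation is an assumption (it may differ from the lecture slides): $h$ denotes a hypothesis, $D$ the data, $v_j$ a class value, and $a_1, \dots, a_n$ the attribute values of the instance to classify.

```latex
% Maximum-A-Posteriori hypothesis via Bayes' theorem;
% P(D) is dropped because it does not depend on h.
h_{MAP} = \arg\max_{h} P(h \mid D)
        = \arg\max_{h} \frac{P(D \mid h)\, P(h)}{P(D)}
        = \arg\max_{h} P(D \mid h)\, P(h)

% Naive Bayes: attributes are assumed conditionally independent given the class,
% so the joint likelihood factorizes into a product.
v_{NB} = \arg\max_{v_j} P(v_j) \prod_{i=1}^{n} P(a_i \mid v_j)
```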

Due by 10. December 2023

Assignment 8.3

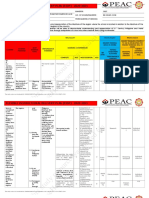

Consider the following PlayTennis example. The given training data is:

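| day | outlook | temperature | humidity | wind | play tennis |
|-----|----------|-------------|----------|--------|-------------|
| 1 | rain | mild | high | strong | no |
| 2 | overcast | hot | normal | weak | yes |
| 3 | overcast | mild | high | strong | yes |
| 4 | sunny | mild | normal | strong | yes |
| 5 | rain | mild | normal | weak | yes |
| 6 | sunny | cool | normal | weak | yes |
| 7 | sunny | mild | high | weak | no |
| 8 | overcast | cool | normal | strong | yes |
| 9 | rain | cool | normal | strong | no |
| 10 | rain | cool | normal | weak | yes |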

a) Classify the following two examples using Naive Bayes with maximum likelihood estimation:

i1 = (sunny, cool, high, strong)

i2 = (overcast, mild, normal, weak)

Also compute the posterior probabilities.

b) Consider the PlayTennis example from the previous subtask again. This time, create a Naive Bayes classifier using the formula on slide 34, with m = 1 and assuming a uniform distribution of values. What differences do you observe when classifying the instances from subtask a)?
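
As a rough illustration of subtasks a) and b), the following sketch computes normalized Naive Bayes posteriors on the training data given above. It rests on several assumptions: the formula on slide 34 is not reproduced in this sheet, so the code uses the standard m-estimate (n_c + m * p) / (n + m) with a uniform prior p = 1 / (number of distinct values of the attribute); only the conditional probabilities are smoothed, not the class priors; and the names `DATA` and `posteriors` are purely illustrative.

```python
from collections import Counter

# Training data from the sheet: (outlook, temperature, humidity, wind, play tennis)
DATA = [
    ("rain", "mild", "high", "strong", "no"),
    ("overcast", "hot", "normal", "weak", "yes"),
    ("overcast", "mild", "high", "strong", "yes"),
    ("sunny", "mild", "normal", "strong", "yes"),
    ("rain", "mild", "normal", "weak", "yes"),
    ("sunny", "cool", "normal", "weak", "yes"),
    ("sunny", "mild", "high", "weak", "no"),
    ("overcast", "cool", "normal", "strong", "yes"),
    ("rain", "cool", "normal", "strong", "no"),
    ("rain", "cool", "normal", "weak", "yes"),
]


def posteriors(instance, m=0.0):
    """Return P(class | instance); m = 0 is the maximum likelihood estimate."""
    class_counts = Counter(row[-1] for row in DATA)
    scores = {}
    for label, n_label in class_counts.items():
        score = n_label / len(DATA)  # class prior, left unsmoothed (assumption)
        for i, value in enumerate(instance):
            n_match = sum(1 for row in DATA if row[-1] == label and row[i] == value)
            n_values = len({row[i] for row in DATA})  # distinct values of attribute i
            # m-estimate with uniform prior p = 1 / n_values (assumed formula)
            score *= (n_match + m / n_values) / (n_label + m)
        scores[label] = score
    total = sum(scores.values())  # non-zero for the two instances of this task
    return {label: s / total for label, s in scores.items()}


if __name__ == "__main__":
    i1 = ("sunny", "cool", "high", "strong")
    i2 = ("overcast", "mild", "normal", "weak")
    for m in (0.0, 1.0):
        print(m, posteriors(i1, m), posteriors(i2, m))
```

Under these assumptions, the m = 0 run gives i2 a zero score for "no", because overcast never occurs together with "no" in the training data; with m = 1 that score becomes small but non-zero.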

Assignment 8.4

Consider using Naive Bayes to classify e-mails into spam and ham (not-spam). Very briefly discuss the following three points:

a) How can the probability of a word belonging to spam or not-spam be estimated?

b) How can the probability of the size of the mail with regard to spam or not-spam be calculated?

c) What problems might occur when using a regular Naive Bayes classification routine?
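
One possible way to approach subtasks a) and c) is sketched below: word probabilities are estimated from relative word frequencies per class, with add-one (Laplace) smoothing to avoid zero probabilities, and scores are summed in log space to avoid numerical underflow on long mails. The toy corpus, the smoothing constant `alpha`, and the function names are all invented for illustration and are not taken from the lecture.

```python
import math
from collections import Counter

# Hypothetical toy corpus, invented for illustration only.
SPAM = ["win money now", "free money offer"]
HAM = ["meeting schedule now", "project report attached"]


def word_log_probs(mails, vocabulary, alpha=1.0):
    """Estimate log P(word | class) from word counts with add-alpha smoothing."""
    counts = Counter(word for mail in mails for word in mail.split())
    total = sum(counts.values())
    denominator = total + alpha * len(vocabulary)
    return {w: math.log((counts[w] + alpha) / denominator) for w in vocabulary}


def classify(mail):
    """Return the class with the highest log posterior score."""
    vocabulary = {w for m in SPAM + HAM for w in m.split()}
    scores = {}
    for label, mails in (("spam", SPAM), ("ham", HAM)):
        log_p = word_log_probs(mails, vocabulary)
        score = math.log(len(mails) / (len(SPAM) + len(HAM)))  # log class prior
        # Words outside the vocabulary are ignored (a simplifying assumption).
        score += sum(log_p[w] for w in mail.split() if w in vocabulary)
        scores[label] = score
    return max(scores, key=scores.get)


if __name__ == "__main__":
    print(classify("free money"))
    print(classify("project meeting"))
```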

