Unit-IV Memory Organization

Computer Architecture and Organization

Ms. Vaishali Mishra

vaishali.mishra@viit.ac.in

Department of Computer Engineering

BRACT’s, Vishwakarma Institute of Information Technology, Pune-48

(An Autonomous Institute affiliated to Savitribai Phule Pune University)

(NBA and NAAC accredited, ISO 9001:2015 certified)

Ms. Vaishali Mishra, Department of Computer Engineering, VIIT , Pune-48 1

Contents

• Characteristics of Memory system

• The memory hierarchy

• Cache Memory

• I/O modules-Module function and I/O module structure

• Programmed I/O, Interrupt driven I/O.

Objective/s and Outcomes of this session

Objective/s of this session

1. To explain the function of each element of a memory hierarchy, identify and

compare different methods for computer I/O.

Learning Outcome /Course Outcome

1. Sketch the concepts of memory and I/O. (Apply)

Characteristics of Memory system

• Location

• Capacity

• Unit of transfer

• Access method

• Performance

• Physical type

• Physical characteristics

• Organisation

Characteristics of Memory system

Table 5.1 Key Characteristics of Computer Memory Systems

Location

• CPU

• Internal

• External

• Location refers to whether memory is internal or external

to the computer.

• Internal memory is often equated with main memory. But

there are other forms of internal memory. The processor

requires its own local memory, in the form of registers (CPU).

Cache is another form of internal memory.

• External memory consists of peripheral storage devices, such

as disk and tape, that are accessible to the processor via I/O

controllers.

Capacity

• Internal memory:- this is typically expressed in terms

of bytes (1 byte = 8 bits) or words. Common word

lengths are 8, 16, and 32 bits.

• External memory:- capacity is typically expressed in

terms of bytes.

• Internal memory:- the unit of transfer is equal to the

number of electrical lines into and out of the memory

module i.e. word.

• External memory:- data are often transferred in much

larger units than a word, and these are referred to as

blocks.

Unit of Transfer

• Internal

– Usually governed by data bus width

• External

– Usually a block which is much larger than a word

• Addressable unit

– Smallest location which can be uniquely addressed

– Word internally

– Cluster on Microsoft file systems (e.g., FAT or NTFS disks)

Access Methods (1)

• Sequential

– Start at the beginning and read through in order

– Access time depends on location of data and previous

location

– e.g. tape

• Direct

– Individual blocks have unique address

– Access is by jumping to vicinity plus sequential search

– Access time depends on location and previous location

– e.g. disk

Access Methods (2)

• Random

– Individual addresses identify locations exactly

– Access time is independent of location or previous

access

– e.g. RAM

• Associative

– Data is located by a comparison with contents of a

portion of the store

– Access time is independent of location or previous

access

– e.g. cache

Performance

• Access time(Latency)

– Time between presenting the address and getting

the valid data

• Memory Cycle time

– Time may be required for the memory to “recover”

before next access

– Cycle time is access + recovery

• Transfer Rate

– Rate at which data can be moved
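The three performance measures above can be related with a little arithmetic. The sketch below is illustrative only: the function names and the sample numbers are assumptions, not values from these notes. It uses the standard relations that, for random-access memory, the transfer rate is 1/(cycle time), and for non-random-access memory the time to read n bits is roughly the access time plus n divided by the transfer rate.

```python
def cycle_time_ns(access_time_ns, recovery_time_ns):
    # Cycle time is access time plus the recovery time before the next access.
    return access_time_ns + recovery_time_ns


def random_access_transfer_rate(cycle_ns):
    # For random-access memory: one transfer per memory cycle (transfers/second).
    return 1e9 / cycle_ns


def time_to_transfer_s(access_time_s, n_bits, rate_bits_per_s):
    # For non-random-access memory: T_n = T_A + n / R.
    return access_time_s + n_bits / rate_bits_per_s


# Hypothetical numbers, chosen only to make the arithmetic easy to follow.
cycle = cycle_time_ns(60, 40)              # 100 ns per memory cycle
rate = random_access_transfer_rate(cycle)  # 10 million transfers per second
disk_read = time_to_transfer_s(0.010, 8000, 1_000_000)  # 10 ms + 8 ms
```

The point of the last call is that for slow external devices the access time, not the transfer itself, often dominates the total.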

Physical Types

• Semiconductor

– RAM

• Magnetic

– Disk & Tape

• Optical

– CD & DVD

• Others

– Bubble

– Hologram

Physical Characteristics

• Volatility :-information decays naturally or is lost

when electrical power is switched off

• Non-Erasable :- cannot be altered, except by

destroying the storage unit.

• Power consumption

Memory Hierarchy

• Registers

– In CPU

• Internal or Main memory

– May include one or more levels of cache

– “RAM”

• External memory

– Backing store

The Bottom Line

• How much?

– Capacity

• How fast?

– Time is money

• How expensive?

Memory Hierarchy (Need)

• The following relationships hold among the

three key characteristics of memory namely,

capacity, access time, and cost :

• Faster access time, greater cost per bit

• Greater capacity, smaller cost per bit

• Greater capacity, slower access time

Memory Hierarchy - Diagram

Figure 5.1 The Memory Hierarchy

Memory Hierarchy - Diagram

• As one goes down the hierarchy, the following occur:

a. Decreasing cost per bit

b. Increasing capacity

c. Increasing access time

d. Decreasing frequency of access of the memory by

the processor
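Point (d) is what makes the hierarchy pay off: if most accesses are satisfied by the fast upper level, the average access time stays close to that level's speed. The sketch below assumes a simple two-level model in which a miss costs the upper-level access plus the lower-level access; the function name and the sample figures are illustrative assumptions.

```python
def average_access_time(hit_ratio, t_upper, t_lower):
    # Hits cost t_upper; misses cost t_upper + t_lower, because the word is
    # first looked for in the upper level and then fetched from the lower one.
    return hit_ratio * t_upper + (1.0 - hit_ratio) * (t_upper + t_lower)


# Hypothetical levels: 0.01 us upper level, 0.1 us lower level, 95% hits.
avg_us = average_access_time(0.95, 0.01, 0.1)  # about 0.015 us
```

With a 95% hit ratio the average is much closer to the fast level than to the slow one, even though the slow level holds most of the data.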

Hierarchy List

• Registers

• L1 Cache

• L2 Cache

• Main memory

• Disk cache

• Disk

• Optical

• Tape

Cache

• Small amount of fast memory

• Sits between normal main memory and CPU

• May be located on CPU chip or module

Cache and Main Memory

Figure 5.2 Cache and Main Memory

Cache Principles

• Figure 5.2a: there is a relatively large and slow main

memory together with a smaller, faster cache

memory.

• The cache contains a copy of portions of main

memory.

• When the processor attempts to read a word of

memory, a check is made to determine if the word is

in the cache.

• Figure 5.2b depicts the use of multiple levels of cache.

• The L2 cache is slower and typically larger than the

L1 cache, and the L3 cache is slower and typically

larger than the L2 cache

Cache/Main Memory Structure

Figure 5.3 Cache/Main Memory Structure

Cache operation – overview

• CPU requests contents of memory location

• Check cache for this data.

• If present, get from cache (fast)

• If not present, read required block from main

memory to cache.

• Then deliver from cache to CPU.

• Cache includes tags to identify which block of

main memory is in each cache slot.

Cache/Main Memory Structure

• Figure 5.3 depicts the structure of a cache/main-memory

system.

• Main memory consists of up to 2^n addressable words, with

each word having a unique n-bit address.

• For mapping purposes, this memory is considered to consist

of a number of fixed length blocks of K words each.

• That is, there are M = 2^n/K blocks in main memory.

• The cache consists of m blocks, called lines.

• Each line contains K words, plus a tag of a few bits.

• Each line also includes control bits (not shown), such as a bit

to indicate whether the line has been modified since being

loaded into the cache.
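The block arithmetic above can be checked with a few lines of code. This is a minimal sketch: the function names and the 16-bit, 4-words-per-block example are assumptions chosen for illustration.

```python
def num_blocks(n_address_bits, words_per_block):
    # M = 2^n / K: main memory viewed as fixed-length blocks of K words.
    return (2 ** n_address_bits) // words_per_block


def block_and_offset(address, words_per_block):
    # Which block a word address falls in, and its position inside that block.
    return divmod(address, words_per_block)


m_blocks = num_blocks(16, 4)                # 16384 blocks for n = 16, K = 4
block, offset = block_and_offset(0x1237, 4)  # block 1165, word 3 of the block
```

Because the cache has far fewer lines than main memory has blocks, each line needs a tag to record which block it currently holds.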

Cache Read Operation - Flowchart

Figure 5.4 Cache Read Operation

Cache Read Operation - Flowchart

• The processor generates the read address (RA) of a word to

be read.

• If the word is contained in the cache, it is delivered to the

processor.

• Otherwise, the block containing that word is loaded into the

cache, and the word is delivered to the processor.

• Figure 5.4 shows these last two operations occurring in

parallel and reflects the organization shown in Figure 5.5,

which is typical of contemporary cache organizations.
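The read flow described above can be sketched as a small simulation. Everything here is a simplifying assumption made for illustration: the class name `TinyCache` is hypothetical, main memory is a Python list of words, a block is placed in line (block number mod number of lines), and the full block number is stored as the tag, whereas real hardware stores only the high-order address bits.

```python
class TinyCache:
    """Toy model of the cache read operation (not a hardware-accurate design)."""

    def __init__(self, main_memory, num_lines, words_per_block):
        self.memory = main_memory
        self.num_lines = num_lines
        self.k = words_per_block
        self.tags = [None] * num_lines   # block number held by each line
        self.lines = [None] * num_lines  # copy of that block's words
        self.hits = 0
        self.misses = 0

    def read(self, ra):
        # Split the read address (RA) into block number and word offset.
        block, offset = divmod(ra, self.k)
        line = block % self.num_lines
        if self.tags[line] == block:
            self.hits += 1               # word is in the cache
        else:
            self.misses += 1             # load the whole block into the line
            start = block * self.k
            self.lines[line] = self.memory[start:start + self.k]
            self.tags[line] = block
        return self.lines[line][offset]  # deliver the word to the processor


memory = list(range(100, 164))           # 64 words of hypothetical data
cache = TinyCache(memory, num_lines=4, words_per_block=4)
values = [cache.read(a) for a in (5, 6, 21, 5)]
```

In this run address 6 hits because address 5 already loaded its block, while address 21 maps to the same line and evicts that block, so the second read of address 5 misses again.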

Cache Read Operation - Flowchart

Figure 5.5 Typical Cache Organization

Cache Read Operation - Flowchart

• In this organization, the cache connects to the processor via data, control,

and address lines.

• The data and address lines also attach to data and address buffers, which

attach to a system bus from which main memory is reached.

• When a cache hit occurs, the data and address buffers are disabled and

communication is only between processor and cache, with no system bus

traffic.

• When a cache miss occurs, the desired address is loaded onto the system

bus and the data are returned through the data buffer to both the cache and

the processor.

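• In other organizations, the cache is physically interposed between the processor and

main memory for all data, address, and control lines. In that case, on a cache miss, the

desired word is first read into the cache and then transferred from cache to processor.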

Elements of Cache Design

• This section provides an overview of cache design parameters and reports some

typical results.

• Caches are also used in high-performance computing (HPC).

• HPC deals with supercomputers and their software, especially for scientific

applications that involve large amounts of data, vector and matrix computation, and

the use of parallel algorithms.

• Cache design for HPC is quite different than for other hardware platforms and

applications.

• Table 5.2 lists key elements.

Elements of Cache Design

Table 5.2 Elements of Cache Design

Elements of Cache Design

1) Cache Addresses

• Virtual memory is a facility that allows programs to address memory from

a logical point of view, without regard to the amount of main memory

physically available.

• When virtual memory is used, the address fields of machine instructions

contain virtual addresses.

• For reads from and writes to main memory, a hardware memory management unit

(MMU) translates each virtual address into a physical address in main memory.

• When virtual addresses are used, the system designer may choose to place the

cache between the processor and the MMU or between the MMU and main

memory (Figure 5.6).

• A logical cache, also known as a virtual cache, stores data using virtual

addresses.

• The processor accesses the cache directly, without going through the MMU.

• A physical cache stores data using main memory physical addresses.

Elements of Cache Design

1) Cache Addresses

• One obvious advantage of the logical cache is that cache access speed is faster

than for a physical cache, because the cache can respond before the MMU performs

an address translation.

Elements of Cache Design

1) Cache Addresses

Figure 5.6 Logical and Physical Caches

Elements of Cache Design

2) Cache Size

• The larger the cache, the larger the number of gates involved in addressing the

cache.

• The result is that large caches tend to be slightly slower than small ones even when

built with the same integrated circuit technology and put in the same place on chip

and circuit board.

Elements of Cache Design

3) Mapping Function

• Since there are fewer cache lines than main memory blocks, an algorithm is

needed for mapping main memory blocks into cache lines.

• Further, a means is needed for determining which main memory block

currently occupies a cache line.

• The choice of the mapping function dictates how the cache is organized.

• Three techniques can be used: direct, associative, and set-associative.
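As an illustration of the simplest of the three techniques, direct mapping treats a memory address as three bit fields: a word offset within the block, a line number, and a tag that identifies which block occupies the line. The sketch below assumes hypothetical field widths (2 word bits and 14 line bits in a 24-bit address); associative and set-associative mapping divide the address differently and are not shown.

```python
def split_address(address, word_bits, line_bits):
    # Low-order bits select the word within the block.
    word = address & ((1 << word_bits) - 1)
    # The next bits select the cache line (block number mod number of lines).
    line = (address >> word_bits) & ((1 << line_bits) - 1)
    # The remaining high-order bits are stored in the line as the tag.
    tag = address >> (word_bits + line_bits)
    return tag, line, word


# Assumed layout: 24-bit address = 8-bit tag | 14-bit line | 2-bit word.
tag, line, word = split_address(0x16339C, word_bits=2, line_bits=14)
```

Two blocks whose addresses share the same line field compete for one cache line, and the stored tag is how the cache tells them apart.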

Elements of Cache Design

4) Replacement Algorithms

• Once the cache has been filled, when a new block is brought into the cache, one of

the existing blocks must be replaced.

• Least Recently Used (LRU):

• Replace that block in the set that has been in the cache longest with no reference to it.

• For a two-way set-associative cache, this is easily implemented: each line includes a USE bit.

• When a line is referenced, its USE bit is set to 1 and the USE bit of the other line in that

set is set to 0.

• When a block is to be read into the set, the line whose USE bit is 0 is used.

• Because we are assuming that more recently used memory locations are more likely to be

referenced, LRU should give the best hit ratio.

• For a fully associative cache, the cache mechanism maintains a separate list of indexes to all

the lines in the cache.

• When a line is referenced, it moves to the front of the list.

• For replacement, the line at the back of the list is used.

• Because of its simplicity of implementation, LRU is the most popular replacement

algorithm.
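The list-based bookkeeping described above can be sketched in a few lines. The class name `LRUList` and its methods are hypothetical, and a Python list stands in for whatever structure the hardware would actually use.

```python
class LRUList:
    """Tracks recency for cache lines: front = most recent, back = victim."""

    def __init__(self, num_lines):
        self.order = list(range(num_lines))

    def reference(self, line):
        # A referenced line moves to the front of the list.
        self.order.remove(line)
        self.order.insert(0, line)

    def victim(self):
        # For replacement, the line at the back of the list is used.
        return self.order[-1]


lru = LRUList(4)
lru.reference(2)
lru.reference(3)
first_victim = lru.victim()   # line 1: least recently referenced so far
lru.reference(1)
second_victim = lru.victim()  # line 0 is now the least recently referenced
```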

Elements of Cache Design

4) Replacement Algorithms

• First-in-first-out (FIFO): Replace that block in the set that has been in the cache

longest.

• FIFO is easily implemented as a round-robin or circular buffer technique.

• Least frequently used (LFU): Replace that block in the set that has experienced

the fewest references.

• LFU could be implemented by associating a counter with each line.

• A technique not based on usage (i.e., not LRU, LFU, FIFO, or some variant) is to

pick a line at random from among the candidate lines.
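The three alternatives above differ only in how the victim line is chosen, which the following sketch tries to make concrete. All names are hypothetical: FIFO is modelled as a round-robin pointer, LFU as a per-line reference counter, and random selection uses Python's standard `random` module.

```python
import random


class FifoPointer:
    """Round-robin pointer: lines are replaced in the order they were filled."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.next_line = 0

    def victim(self):
        line = self.next_line
        self.next_line = (self.next_line + 1) % self.num_lines
        return line


def lfu_victim(reference_counts):
    # Line with the fewest references; ties go to the lowest line number.
    return min(range(len(reference_counts)), key=reference_counts.__getitem__)


def random_victim(num_lines):
    # Not based on usage at all: any candidate line may be picked.
    return random.randrange(num_lines)


fifo = FifoPointer(3)
fifo_sequence = [fifo.victim() for _ in range(4)]  # wraps around after line 2
lfu_choice = lfu_victim([5, 2, 7, 2])
```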

Elements of Cache Design

5) Write Policy

• When a block that is resident in the cache is to be replaced, there are two

cases to consider.

• If the old block in the cache has not been altered, then it may be

overwritten with a new block without first writing out the old block.

• If at least one write operation has been performed on a word in that line of

the cache, then main memory must be updated by writing the line of cache

out to the block of memory before bringing in the new block.

• A variety of write policies, with performance and economic trade-offs, is

possible.

• There are two problems to contend with. First, more than one device may

have access to main memory. For example, an I/O module may be able to

read/write directly to memory.

Elements of Cache Design

5) Write Policy

• If a word has been altered only in the cache, then the corresponding

memory word is invalid.

• Further, if the I/O device has altered main memory, then the cache word is

invalid.

• A more complex problem occurs when multiple processors are attached to

the same bus and each processor has its own local cache.

• Then, if a word is altered in one cache, it could conceivably invalidate a

word in other caches.

• The simplest technique is called write through.

• Using this technique, all write operations are made to main memory as well as to

the cache, ensuring that main memory is always valid.

• Any other processor-cache module can monitor traffic to main memory to

maintain consistency within its own cache.

Elements of Cache Design

5) Write Policy

• The main disadvantage of this technique is that it generates substantial memory

traffic and may create a bottleneck.

• An alternative technique, known as write back, minimizes memory writes.

• With write back, updates are made only in the cache.

• When an update occurs, a dirty bit, or use bit, associated with the line is set.

• Then, when a block is replaced, it is written back to main memory if and only if the

dirty bit is set.

• The problem with write back is that portions of main memory are invalid, and hence

accesses by I/O modules can be allowed only through the cache.
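The traffic difference between the two policies can be illustrated by counting main-memory writes for a single cache line. This is a deliberately simplified model with hypothetical names: it treats every write-through update and every write-back of a dirty line as one memory write, although in practice the former moves a word and the latter a whole line.

```python
def count_memory_writes(operations, policy):
    """Count main-memory writes for ONE cache line.

    operations: sequence of "write" (processor updates the line) and
                "replace" (the line is evicted for a new block).
    policy:     "write_through" or "write_back".
    """
    if policy not in ("write_through", "write_back"):
        raise ValueError("unknown policy: " + policy)
    dirty = False
    memory_writes = 0
    for op in operations:
        if op == "write":
            if policy == "write_through":
                memory_writes += 1   # every write also goes to main memory
            else:
                dirty = True         # update only the cache, set the dirty bit
        elif op == "replace":
            if policy == "write_back" and dirty:
                memory_writes += 1   # written back only if the dirty bit is set
            dirty = False            # the incoming block starts out clean
        else:
            raise ValueError("unknown operation: " + op)
    return memory_writes


ops = ["write"] * 5 + ["replace", "replace"]
through = count_memory_writes(ops, "write_through")
back = count_memory_writes(ops, "write_back")
```

Five writes to the same line cost five memory writes under write through but only one under write back, and the second replacement costs nothing because the line is clean by then.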

Elements of Cache Design

6) Number of Caches

• Multilevel caches: As logic density has increased, it has become possible to

have a cache on the same chip as the processor: the on-chip cache.

• Compared with a cache reachable via an external bus, the on-chip cache

reduces the processor’s external bus activity and therefore speeds up

execution times and increases overall system performance.

• When the requested instruction or data is found in the on-chip cache, the

bus access is eliminated. Because of the short data paths internal to the

processor, compared with bus lengths, on-chip cache accesses will

complete appreciably faster than would even zero-wait state bus cycles.

• Furthermore, during this period the bus is free to support other transfers.

Elements of Cache Design

6) Number of Caches

• Unified versus split caches:

• More recently, it has become common to split the cache into two: one dedicated to

instructions and one dedicated to data.

• These two caches both exist at the same level, typically as two L1 caches.

• When the processor attempts to fetch an instruction from main memory, it first

consults the instruction L1 cache, and when the processor attempts to fetch data

from main memory, it first consults the data L1 cache.

Elements of Cache Design

6) Number of Caches

• The trend is toward split caches at the L1 and unified caches for higher

levels, particularly for superscalar machines, which emphasize parallel

instruction execution and the prefetching of predicted future instructions.

• The key advantage of the split cache design is that it eliminates contention

for the cache between the instruction fetch/decode unit and the execution

unit.

I/O Modules

• In addition to the processor and a set of memory modules, the third key

element of a computer system is a set of I/O modules.

• Each module interfaces to the system bus or central switch and controls one

or more peripheral devices.

• An I/O module is not simply a set of mechanical connectors that wire a

device into the system bus.

I/O Modules

• External Devices

• An external device attaches to the computer by a link to an I/O module

(Figure 5.7).

• The link is used to exchange control, status, and data between the I/O

module and the external device.

• An external device connected to an I/O module is often referred to as a

peripheral device or, simply, a peripheral.

• We can broadly classify external devices into three categories:

• Human readable: Suitable for communicating with the computer user;

• Machine readable: Suitable for communicating with equipment;

• Communication: Suitable for communicating with remote devices.

I/O Modules

• External Devices

Figure 5.8 Block Diagram of an External Device

I/O Modules

• External Devices

• The interface to the I/O module is in the form of control, data, and status

signals.

• Control signals determine the function that the device will perform, such as

send data to the I/O module (INPUT or READ), accept data from the I/O

module (OUTPUT or WRITE), report status, or perform some control

function particular to the device (e.g. position a disk head).

• Data are in the form of a set of bits to be sent to or received from the I/O

module.

• Status signals indicate the state of the device.

• Examples are READY/NOT-READY to show whether the device is ready

for data transfer.

• Control logic associated with the device controls the device’s operation in

response to direction from the I/O module.

I/O Modules

• External Devices

• The transducer converts data from electrical to other forms of energy

during output and from other forms to electrical during input.

• Typically, a buffer is associated with the transducer to temporarily hold

data being transferred between the I/O module and the external

environment.

• A buffer size of 8 to 16 bits is common for serial devices, whereas

block-oriented devices such as disk drive controllers may have much larger

buffers.

I/O Modules

• Module Function

• The major functions or requirements for an I/O module fall into the

following categories:

– Control and timing

– Processor communication

– Device communication

– Data buffering

– Error detection

• During any period of time, the processor may communicate with one or

more external devices in unpredictable patterns, depending on the

program’s need for I/O.

• The internal resources, such as main memory and the system bus, must be

shared among a number of activities, including data I/O.

I/O Modules

• Module Function

• The I/O function includes a control and timing requirement, to coordinate

the flow of traffic between internal resources and external devices.

• For example, the control of the transfer of data from an external device to

the processor might involve the following sequence of steps:

1. The processor interrogates the I/O module to check the status of the attached

device.

2. The I/O module returns the device status.

3. If the device is operational and ready to transmit, the processor requests the

transfer of data, by means of a command to the I/O module.

4. The I/O module obtains a unit of data (e.g., 8 or 16 bits) from the external

device.

5. The data are transferred from the I/O module to the processor.

I/O Modules

• Module Function

• Processor communication involves the following:

• Command decoding: The I/O module accepts commands from the

processor, typically sent as signals on the control bus. For example, an I/O

module for a disk drive might accept the following commands: READ

SECTOR, WRITE SECTOR, SEEK track number, and SCAN record ID.

• Data: Data are exchanged between the processor and the I/O module over

the data bus.

• Status reporting: Because peripherals are so slow, it is important to know

the status of the I/O module.

• For example, if an I/O module is asked to send data to the processor (read),

it may not be ready to do so because it is still working on the previous I/O

command. This fact can be reported with a status signal.

• Common status signals are BUSY and READY. There may also be signals

to report various error conditions.

I/O Modules

• Module Function

• Address recognition: Just as each word of memory has an address, so does

each I/O device. Thus, an I/O module must recognize one unique address

for each peripheral it controls.

I/O Module Structure

Figure 5.9 Block Diagram of an I/O Module

I/O Module Structure

• Figure 5.9 provides a general block diagram of an I/O module.

• The module connects to the rest of the computer through a set of signal

lines (e.g., system bus lines).

• Data transferred to and from the module are buffered in one or more data

registers.

• There may also be one or more status registers that provide current status

information.

• A status register may also function as a control register, to accept detailed

control information from the processor.

• The logic within the module interacts with the processor via a set of control

lines.

• The processor uses the control lines to issue commands to the I/O module.

• Some of the control lines may be used by the I/O module (e.g., for

arbitration and status signals).

I/O Module Structure

• The module must also be able to recognize and generate addresses

associated with the devices it controls.

• Each I/O module has a unique address or, if it controls more than one

external device, a unique set of addresses.

• Finally, the I/O module contains logic specific to the interface with each

device that it controls.

• An I/O module that takes on most of the detailed processing burden, presenting

a high-level interface to the processor, is usually referred to as an I/O channel or

I/O processor.

• An I/O module that is quite primitive and requires detailed control is usually

referred to as an I/O controller or device controller.

• I/O controllers are commonly seen on microcomputers, whereas I/O channels are

used on mainframes

Programmed I/O

• Three techniques are possible for I/O operations.

• With programmed I/O, data are exchanged between the

processor and the I/O module.

• The processor executes a program that gives it direct control of

the I/O operation, including sensing device status, sending a

read or write command, and transferring the data.

• When the processor issues a command to the I/O module, it

must wait until the I/O operation is complete.

• If the processor is faster than the I/O module, this is a waste of

processor time.

Programmed I/O

• With interrupt-driven I/O, the processor issues an I/O

command, continues to execute other instructions, and is

interrupted by the I/O module when the latter has completed

its work.

• With both programmed and interrupt I/O, the processor is

responsible for extracting data from main memory for output

and storing data in main memory for input.

• The alternative is known as direct memory access (DMA).

• In this mode, the I/O module and main memory exchange data

directly, without processor involvement.
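The essential idea of interrupt-driven I/O, that the processor keeps doing other work until the I/O module signals completion, can be mimicked with a callback. This is only a conceptual sketch: the function names, the "ticks" of useful work, and the handler invoked directly from Python are all assumptions, and no real interrupt mechanism is involved.

```python
def interrupt_driven_read(words, ticks_per_word=3):
    memory = []
    useful_work = 0

    def interrupt_handler(word):
        # The processor still moves the data into main memory itself.
        memory.append(word)

    for word in words:
        # The I/O command has been issued; the processor executes other
        # instructions while the module fetches the word.
        for _ in range(ticks_per_word):
            useful_work += 1
        # The module has finished and interrupts the processor.
        interrupt_handler(word)
    return memory, useful_work


stored, work_done = interrupt_driven_read([10, 20, 30])
```

The device delay is the same as it would be with polling, but the waiting time shows up as useful work rather than as repeated status checks.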

Programmed I/O

Table 5.3 I/O Techniques

Overview of Programmed I/O

• When the processor is executing a program and encounters an instruction

relating to I/O, it executes that instruction by issuing a command to the

appropriate I/O module.

• With programmed I/O, the I/O module will perform the requested action

and then set the appropriate bits in the I/O status register (Figure 5.9).

• The I/O module takes no further action to alert the processor.

• In particular, it does not interrupt the processor.

• Thus, it is the responsibility of the processor to periodically check the status of the

I/O module until it finds that the operation is complete.

• To explain the programmed I/O technique, we view it first from the point of view of

the I/O commands issued by the processor to the I/O module, and then from the

point of view of the I/O instructions executed by the processor.

I/O Commands

• To execute an I/O-related instruction, the processor issues an address,

specifying the particular I/O module and external device, and an I/O

command.

• There are four types of I/O commands that an I/O module may receive

when it is addressed by a processor:

• Control: Used to activate a peripheral and tell it what to do. For example, a

magnetic-tape unit may be instructed to rewind or to move forward one

record. These commands are tailored to the particular type of peripheral

device.

• Test: Used to test various status conditions associated with an I/O module

and its peripherals. The processor will want to know that the peripheral of

interest is powered on and available for use. It will also want to know if the

most recent I/O operation is completed and if any errors occurred.

I/O Commands

• Read: Causes the I/O module to obtain an item of data from the peripheral

and place it in an internal buffer (depicted as a data register in Figure 5.9).

The processor can then obtain the data item by requesting that the I/O

module place it on the data bus.

• Write: Causes the I/O module to take an item of data (byte or word) from

the data bus and subsequently transmit that data item to the peripheral.

I/O Commands

• Figure 5.10a gives an example of the use of programmed I/O to read in a

block of data from a peripheral device (e.g., a record from tape) into

memory.

• Data are read in one word (e.g., 16 bits) at a time.

• For each word that is read in, the processor must remain in a

status-checking cycle until it determines that the word is available in the I/O

module’s data register.

• This flowchart highlights the main disadvantage of this technique: it is a

time-consuming process that keeps the processor busy needlessly.
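The status-checking cycle can be made concrete with a small simulation. The device model below is hypothetical: `SimulatedIOModule`, its methods, and the assumption that each word needs three polls before it is ready are all invented for illustration, standing in for the read and test commands and the data register described above.

```python
class SimulatedIOModule:
    """Toy I/O module: each word becomes ready only after a few status polls."""

    def __init__(self, words, polls_per_word=3):
        self._pending = list(words)
        self._polls_per_word = polls_per_word
        self._polls_left = 0
        self.data_register = None

    def command_read(self):
        # Read command: start obtaining the next item from the peripheral.
        self._polls_left = self._polls_per_word

    def is_ready(self):
        # Test command: has the word arrived in the data register yet?
        if self._polls_left > 0:
            self._polls_left -= 1
            return False
        if self.data_register is None:
            self.data_register = self._pending.pop(0)
        return True


def programmed_io_read(module, word_count):
    memory = []
    wasted_polls = 0
    for _ in range(word_count):
        module.command_read()
        while not module.is_ready():     # the status-checking cycle
            wasted_polls += 1            # processor does nothing useful here
        memory.append(module.data_register)
        module.data_register = None
    return memory, wasted_polls


module = SimulatedIOModule([10, 20, 30], polls_per_word=3)
block, wasted = programmed_io_read(module, 3)
```

Reading three words costs nine unproductive polls in this model, which is the overhead that interrupt-driven I/O and DMA are meant to remove.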

Three Techniques for Input of a Block of Data

Figure 5.10 a Programmed I/O

Three Techniques for Input of a Block of Data

Figure 5.10 b Interrupt-driven I/O

Three Techniques for Input of a Block of Data

Figure 5.10 c Direct memory access

References

• W. Stallings, “Computer Organization and Architecture:

Designing for Performance”, Pearson Education/ Prentice Hall

of India, 2003, ISBN 978-93-325-1870-4, 7th Edition.


- 2016 EMT475 03 Cache MemoryDocument81 pages2016 EMT475 03 Cache MemorydemononesNo ratings yet

- Computer Organization & Architecture: Cache MemoryDocument52 pagesComputer Organization & Architecture: Cache Memorymuhammad farooqNo ratings yet

- EC206 CO Module5 Lecture2 1Document11 pagesEC206 CO Module5 Lecture2 1imranNo ratings yet

- Internal Chip Organization 1.3.4Document13 pagesInternal Chip Organization 1.3.4Shubham GoswamiNo ratings yet

- Computer Organization and Architecture C PDFDocument24 pagesComputer Organization and Architecture C PDFGemma Capilitan BoocNo ratings yet

- 2019-2020-CSE206-Week05-07-ch4-Cache MemoryDocument50 pages2019-2020-CSE206-Week05-07-ch4-Cache Memorytemhem racuNo ratings yet

- 04 Cache MemoryDocument36 pages04 Cache Memorymubarra shabbirNo ratings yet

- Lecture-04 & 05, Adv. Computer Architecture, CS-522Document63 pagesLecture-04 & 05, Adv. Computer Architecture, CS-522torabgullNo ratings yet

- Computer Architecture and Organization (Eeng 3192) : 4. The Memory UnitDocument25 pagesComputer Architecture and Organization (Eeng 3192) : 4. The Memory UnitEsuyawkal AdugnaNo ratings yet

- Lecture-04, Adv. Computer Architecture, CS-522Document39 pagesLecture-04, Adv. Computer Architecture, CS-522torabgullNo ratings yet

- Cache MemoryDocument37 pagesCache Memorysraism1303.04No ratings yet

- Hardware: Input, Processing, and Output Devices: Principles of Information Systems Eighth EditionDocument48 pagesHardware: Input, Processing, and Output Devices: Principles of Information Systems Eighth EditionNaman SachdevaNo ratings yet

- Computer Organization & Architecture: BITS PilaniDocument12 pagesComputer Organization & Architecture: BITS PilaniRashmitha RavichandranNo ratings yet

- R4 Ookwb 2 C 3 U PDo HL NZrsDocument15 pagesR4 Ookwb 2 C 3 U PDo HL NZrsShuvomoy rayNo ratings yet

- Overview of Operating System: Department of Information TechnologyDocument42 pagesOverview of Operating System: Department of Information TechnologyVIRAT KOLHINo ratings yet

- Principles of Information Systems Eighth Edition: Hardware: Input, Processing, and Output DevicesDocument46 pagesPrinciples of Information Systems Eighth Edition: Hardware: Input, Processing, and Output DevicesvamcareerNo ratings yet

- 5 Cache and Main MemoryDocument15 pages5 Cache and Main MemoryAKASH PAL100% (1)

- Computer Architecture and Organization: Chapter ThreeDocument26 pagesComputer Architecture and Organization: Chapter ThreeGebrie BeleteNo ratings yet

- Chapter 3Document31 pagesChapter 3s.kouadriNo ratings yet

- Chapter 3 EIS Charts For RevisionDocument12 pagesChapter 3 EIS Charts For RevisionnitishNo ratings yet

- CachesDocument56 pagesCachesAasma KhalidNo ratings yet

- Memory OrganizationDocument57 pagesMemory OrganizationvpriyacseNo ratings yet

- M.Tech (MVD)Document2 pagesM.Tech (MVD)rishi tejuNo ratings yet

- Chapter 04Document22 pagesChapter 04xuandai372005No ratings yet

- Cache Memory123Document15 pagesCache Memory12312202040701068No ratings yet

- L-4 (Cache Memory)Document61 pagesL-4 (Cache Memory)jubairahmed1678No ratings yet

- Computer Science Chap05Document108 pagesComputer Science Chap05joanne2704792No ratings yet

- Aca 1Document39 pagesAca 1Prateek SharmaNo ratings yet

- Computer Hardware Review: ProcessorsDocument8 pagesComputer Hardware Review: Processorsanggit julianingsihNo ratings yet

- Chapter 06Document35 pagesChapter 06NourhanGamalNo ratings yet

- Advanced Caching Techniques: Approaches To Improving Memory System PerformanceDocument18 pagesAdvanced Caching Techniques: Approaches To Improving Memory System PerformanceGagan SainiNo ratings yet

- Com Arch Lec Slide 3 2Document31 pagesCom Arch Lec Slide 3 2masum35-1180No ratings yet

- Chapter 3LDocument31 pagesChapter 3Ladane ararsoNo ratings yet

- Unit-2.1 Intro To VirtualizationDocument21 pagesUnit-2.1 Intro To VirtualizationHello HiNo ratings yet

- Unit-Iv: Memory ManagementDocument19 pagesUnit-Iv: Memory ManagementSainiNishrithNo ratings yet

- Introduction - : Artificial Neural NetworksDocument53 pagesIntroduction - : Artificial Neural NetworksArka SinhaNo ratings yet

- Interrupt and Memory HierarchyDocument32 pagesInterrupt and Memory HierarchyJam Farhad AtharNo ratings yet

- Memory CacheDocument18 pagesMemory CacheFunsuk VangduNo ratings yet

- CH04 COA11eDocument48 pagesCH04 COA11eShi TingNo ratings yet

- ICS 2101 Course OutlineDocument6 pagesICS 2101 Course OutlineAlly Sallim0% (1)

- Parallel Programming Using MPI: David Porter & Mark NelsonDocument81 pagesParallel Programming Using MPI: David Porter & Mark NelsonAsmatullah KhanNo ratings yet

- Chapter 2 - Memory-SystemDocument24 pagesChapter 2 - Memory-SystemYoung UtherNo ratings yet

- Computer Organization and Architecture (ECE2002)Document20 pagesComputer Organization and Architecture (ECE2002)Madhav Rav TripathiNo ratings yet

- Hardware - Input, Processing, and Output DevicesDocument11 pagesHardware - Input, Processing, and Output DevicesAditya Singh75% (4)

- William Stallings Computer Organization and Architecture 8th Edition Cache MemoryDocument43 pagesWilliam Stallings Computer Organization and Architecture 8th Edition Cache MemoryabbasNo ratings yet

- Assignment-Operating System 5014533Document2 pagesAssignment-Operating System 5014533عبدالرحمن يحي صلحNo ratings yet

- Chapter ThreeDocument63 pagesChapter ThreesileNo ratings yet

- Pravin Rajndra Zende: Associate Sap MM ConsultantDocument3 pagesPravin Rajndra Zende: Associate Sap MM ConsultantPravin ZendeNo ratings yet

- USB WatchDog Pro2 User Manual - Open Development LLCDocument16 pagesUSB WatchDog Pro2 User Manual - Open Development LLCJhonny NaranjoNo ratings yet

- Nested StructureDocument5 pagesNested StructureHardik GuptaNo ratings yet

- Using Microsoft Excel To Edit .DBF Tables: ProjectdbfaddinDocument2 pagesUsing Microsoft Excel To Edit .DBF Tables: ProjectdbfaddinMehak FatimaNo ratings yet

- Es5 120 73eb - DatDocument2 pagesEs5 120 73eb - DatCharmin CoronaNo ratings yet

- Claim Security Officer ProfileDocument9 pagesClaim Security Officer ProfileMatlale GraciousNo ratings yet

- European Pharmacopoeia 11th Edition Price ListDocument3 pagesEuropean Pharmacopoeia 11th Edition Price ListvvsshivaprasadNo ratings yet

- 1 Day Before InterviewDocument279 pages1 Day Before InterviewSomitha BalagamNo ratings yet

- Infot 1 - Chapter 1Document12 pagesInfot 1 - Chapter 1ArianneNo ratings yet

- 2021 06 02 13 28 11 DESKTOP-65R2BAK LogDocument365 pages2021 06 02 13 28 11 DESKTOP-65R2BAK LogDTNovaNo ratings yet

- University of Gondar: Web Based Student Information Management System For Edgetfeleg General Secondary SchoolDocument32 pagesUniversity of Gondar: Web Based Student Information Management System For Edgetfeleg General Secondary SchoolZemetu Gimjaw AlemuNo ratings yet

- Adobe Photoshop Urdu BookDocument53 pagesAdobe Photoshop Urdu BookAbdul Majid Bhuttah100% (5)

- DLP Etech 3Document11 pagesDLP Etech 3Dorie Fe ManseNo ratings yet

- ULI-224-Series DS (05sept2023) 20230907173502Document7 pagesULI-224-Series DS (05sept2023) 20230907173502PERABNo ratings yet

- Calculus Option NotesDocument10 pagesCalculus Option NotesMaitreeArtsy100% (1)

- USER 20211027180836 ScrubLogDocument10 pagesUSER 20211027180836 ScrubLogSpookyNo ratings yet

- Ds 3-7 Lab ProgramsDocument14 pagesDs 3-7 Lab Programsgaliti3096No ratings yet

- HF For All (Ir. Ridwan Syaaf - 1st Session)Document22 pagesHF For All (Ir. Ridwan Syaaf - 1st Session)HSE DepartmentNo ratings yet

- Introduction To LabVIEW 8Document88 pagesIntroduction To LabVIEW 8nemzinhoNo ratings yet

- MGMT 3003 Group 1 Case StudyDocument38 pagesMGMT 3003 Group 1 Case Studytwo doubleNo ratings yet

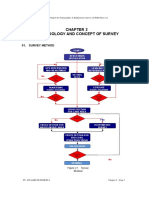

- Methodology and Concept of SurveyDocument20 pagesMethodology and Concept of SurveyCimanuk Cisanggarung riverNo ratings yet

- Design and Simulation of An Automated WH PDFDocument6 pagesDesign and Simulation of An Automated WH PDFta21rahNo ratings yet

- 6th Weekly ReportDocument3 pages6th Weekly ReportNgoc Minh Trang NguyenNo ratings yet

- WAP2100-T SeriesDocument5 pagesWAP2100-T SeriessuplexfarhanNo ratings yet