Kaushik Pasi - CV

Mobile: +917387666102

Email: kaushikpasi@gmail.com

LinkedIn: www.linkedin.com/in/kaushikpasi

Overview

Firm believer in ‘Big Data Science’: bringing the strengths of data science to big data scale. Responsible for building data capabilities at StockX. Enjoys building cutting-edge data solutions that deliver real-world impact for clients globally. Expertise lies in designing and developing cloud-based, high-performance data engineering, big data and analytics solutions that are scalable, secure, and compliant with industry best practices. Recent client work includes building big data lakes and AI platforms in hybrid and multi-cloud environments for FinTech, e-commerce and home-appliance companies, with use cases such as recommendation engines, risk and fraud analytics, and customer 360.

Work Experience

2022-Present Senior Cloud Data & AI Platform Engineer – StockX, Bengaluru

- Building Data & AI platform for Data Engineering and Machine Learning teams

- Architecting data solutions for business use cases

- Creating & managing the entire platform on AWS using IaC (Terraform)

- Implementing Data Catalog & Data Governance

2018-2021 Big Data & Analytics Lead – Oneture Technologies, Mumbai

- Consulting on big data and analytics platform solutions, including data science and pre-sales activities

- Architecting, developing, and fine-tuning big data lakes and various end-to-end big data applications

- Developing machine learning applications

- Handled various data sources, from structured and semi-structured to unstructured, with both batch and stream processing

- Handled more than 6 major clients with diverse problem statements

- Delivered 30+ successful POCs and 20+ projects

2016-2017 Security Consultant – IBM India Pvt. Ltd., Mumbai

- IBM QRadar implementation & integration of components in deployment

- Log-source integration and UDSMs

- Rules and Reports creation and fine-tuning

- Handled over 5 major clients

Education

Master of Technology (M.Tech.) Software Engineering

2016

CGPA: 7.75

Veermata Jijabai Technological Institute – Mumbai, MH

Bachelor of Engineering (B.E.) Computer Engineering

2014

Percentage: 68.13%

Mumbai University

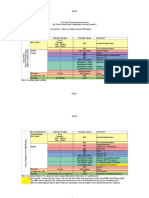

Technical Skills

- Big Data – Spark, Hive, Kafka, Oozie, Sqoop, Flume, Presto, NiFi, Airflow, Databricks

- AWS – EMR, Glue, Athena, Lambda, S3, VPC, EC2, SageMaker, QuickSight, Kinesis, Redshift, Lake Formation

- Azure – Databricks, Data Factory, Synapse, Azure ML, DevOps

- Clouds – AWS, Azure, Google Cloud

- Languages – Python, Scala, SQL/PLSQL, Java, C/C++

- Machine Learning, Neural Networks, Deep Learning

- Natural Language & Image Processing

- Visualization Tools – Tableau, Power BI, QuickSight, Qlik

- Others – Jenkins, Elasticsearch, Terraform

Projects

2022 Organizational Lakehouse Implementation

Responsibilities:

- Building Data & AI platform for Data Engineering and Machine Learning teams

- Architecting data solutions for business use cases

- Creating & managing the entire platform on AWS using IaC (Terraform)

2021 Project architecting & Center of Excellence

Responsibilities:

- Architect technical solutions for projects & initiatives

- Set up ways of working based on best practices

Outcomes:

- Successfully delivered technical solutions to teams

2020-21 BI platform solution

Responsibilities:

- Architected project solution

- Led the development team in implementing the designed solution.

- Worked with the business to develop BI solutions for various projects and their BI needs.

- Enhanced the platform in terms of features, self-service and governance.

Outcomes:

- Successfully rolled out the platform for organization-wide use

2020-21 Platform Security & Governance

Responsibilities:

- Developed a POC for unified security across big data and non-big-data platform components.

- Designed and implemented the solution.

Outcomes:

- Successfully completed POC

2020 Self Service & Data Discovery

Responsibilities:

- Developed a POC for a data discovery platform.

- Designed and implemented the solution.

Outcomes:

- Successfully completed POC

2020 Consumer experience and contact center enhancement

Responsibilities:

- Designed solution along with other architects.

- Led the development team in implementing the designed solution.

- Processed data from Genesys PureCloud and Google Dialogflow and integrated it into Clarabridge and Qlik Sense reports

- Computed KPIs to understand the consumer journey, drive direct sales, manage costs, and increase contact center efficiency.

Outcomes:

- Successfully completed & delivered the project to business.

- Improved sales and serviceability by delivering a better consumer journey

2020 IoT-enabled smart home appliance analytics

Responsibilities:

- Led the development team in implementing the designed solution.

- Computed KPIs related to product usage, defects, service, improvements, etc.

Outcomes:

- Successfully completed & delivered the project to business

2019 Fast semi-structured data processing pipelines

Responsibilities:

- Created big data applications to handle and process semi-structured data in JSON and XML.

- Converted the raw data into denormalized form to make it easy to query and to feed BI tools for insights.

Outcomes:

- Successfully completed POCs for 2 use cases with 1 in production

- Achieved a more than 1000x performance improvement over the existing application, allowing the client to get insights daily instead of once a quarter

2019 Content management platform

Responsibilities:

- Designed and implemented an end-to-end application that reads various documents and stores the key-value data in a NoSQL database

- Demonstrated a successful working POC for the same

Outcomes:

- Successfully completed POC; application in final development phases

- Application execution cost is more than 1000x lower than existing solutions

2018-19 Customer 360 (2)

Responsibilities:

- Designed and implemented the entire data backend for two Customer 360 projects

- Provided all required data components, along with key analytical insights from machine learning models of customers’ spending behavior, to improve up-selling and cross-selling of products

- Showcased a unified customer timeline across all available channels

- Recommended next-best actions to the client, personalized for each customer

Outcomes:

- Successfully completed POC for one client; the other is currently in UAT

2018-19 Various analytics application development (4+)

Responsibilities:

- Created ML models for the following use cases: customer segmentation, cross-sell and up-sell recommendations, suspicious transaction detection, and credit card fraud analytics

- Migrating 10+ existing trained ML models from on-premises to AWS

Outcomes:

- Successfully implemented all models, currently in UAT

- Migration activity in progress, currently in its initial phases

2018 Big data CI/CD pipeline

Responsibilities:

- Created a CI/CD pipeline for big data applications

- Automated the application lifecycle for UAT and production

Outcomes:

- Successfully developed the pipeline and handed it over to the client’s release team for operations and administration

2018-19 Big data lakes on cloud (3)

Responsibilities:

- Designed and implemented big data lakes on AWS and Azure

- Planned and implemented end-to-end big data ETL pipelines

Outcomes:

- Successfully implemented two data lakes in production, with a third in the initial phases of development

- The data lakes serve as the clients’ self-service platforms for all their data needs

2018-2021 Technical pre-sales & consulting

Responsibilities:

- Met new potential clients to discuss and understand their requirements

- Designed and proposed solutions

- Converted opportunities into clients

Outcomes:

- Won 5 new clients for my parent company

2016-17 SIEM Implementation (5)

Responsibilities:

- QRadar administrator for installation, integration and deployment of SIEM architecture in a banking security environment.

- Log source integration for supported devices and UDSMs for unsupported ones.

- Created and fine-tuned use cases and business/device-specific reports.

- Integration and setting up of QRM, QVM, QRIF in QRadar.

- Troubleshooting issues related to deployment and operations of QRadar.

Outcomes:

- Successfully deployed SIEM with total ownership.

- Created standard operating procedures for strategic/important tasks performed.

Certifications

Data Engineer

AWS Certified Solutions Architect – Professional

OpenHack: Modern Data Warehousing

Microsoft Certified: Azure Fundamentals

Machine Learning A-Z™: Hands-On Python & R in Data Science

Baseline: Data, ML, AI

BigQuery For Data Analysis

Data Science on the Google Cloud

Machine Learning for Engineering and Science Applications

AWS Certified Solutions Architect – Associate

Apache Spark 2.0 + Scala: DO Big Data Analytics & ML

Taming Big Data with Spark Streaming and Scala

Edureka - Big Data Hadoop Certification

Personal Information


Date of Birth 19 September 1992

Nationality Indian

Passport M1261485

Languages English, Hindi, Marathi

Marital Status Unmarried

Address 1601, Lotus A, Nisarg Greens – Phase 2, Morivali, Ambernath (East), Maharashtra, India - 421501

DISCLAIMER: I hereby declare that all the information provided in this CV is factual and correct to the best of my knowledge and belief.

Date: 21st March 2022 Kaushik Pasi
