Experts PseudoCode

Anna Choromanska, Claire Monteleoni

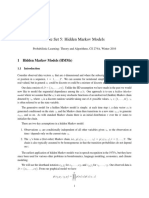

Algorithm 1 OCE: Online Clustering with Experts (3 variants)

Inputs: Clustering algorithms $\{a_1, a_2, \ldots, a_n\}$; $R$: large constant; Fixed-Share only: $\alpha \in [0, 1]$; Learn-$\alpha$ only: $\{\alpha_1, \alpha_2, \ldots, \alpha_m\} \in [0, 1]^m$

Initialization: $t = 1$; $p_1(i) = 1/n$, $\forall i \in \{1, \ldots, n\}$; Learn-$\alpha$ only: $p_1(j) = 1/m$, $\forall j \in \{1, \ldots, m\}$, and $p_{1,j}(i) = 1/n$, $\forall j \in \{1, \ldots, m\}$, $\forall i \in \{1, \ldots, n\}$

At $t$-th iteration:

Receive vector $c$, where $c^i$ is the output of algorithm $a_i$ at time $t$.

$\text{clust}(t) = \sum_{i=1}^{n} p_t(i)\, c^i$ // Learn-$\alpha$: $\text{clust}(t) = \sum_{j=1}^{m} p_t(j) \sum_{i=1}^{n} p_{t,j}(i)\, c^i$

Output: $\text{clust}(t)$ // Optional: additionally output $C \leftarrow \{c^{i^*}\}$, where $i^* = \arg\max_{i \in \{1, \ldots, n\}} p_t(i)$

View $x_t$ in the stream.

For each $i \in \{1, \ldots, n\}$:

$L(i, t) = \left\| \frac{x_t - c^i}{2R} \right\|^2$

---

1. Static-Expert

$p_{t+1}(i) = p_t(i)\, e^{-\frac{1}{2} L(i,t)}$

---

2. Fixed-Share

For each $i \in \{1, \ldots, n\}$:

$p_{t+1}(i) = \sum_{h=1}^{n} p_t(h)\, e^{-\frac{1}{2} L(h,t)}\, P(i \mid h; \alpha)$ // $P(i \mid h; \alpha) = (1 - \alpha)$ if $i = h$, $\frac{\alpha}{n-1}$ o.w.

---

3. Learn-$\alpha$

For each $j \in \{1, \ldots, m\}$:

$\text{lossPerAlpha}[j] = -\log \sum_{i=1}^{n} p_{t,j}(i)\, e^{-\frac{1}{2} L(i,t)}$

$p_{t+1}(j) = p_t(j)\, e^{-\text{lossPerAlpha}[j]}$

For each $i \in \{1, \ldots, n\}$:

$p_{t+1,j}(i) = \sum_{h=1}^{n} p_{t,j}(h)\, e^{-\frac{1}{2} L(h,t)}\, P(i \mid h; \alpha_j)$

Normalize $p_{t+1,j}$.

---

Normalize $p_{t+1}$.

$t = t + 1$
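The updates in Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: it assumes each expert outputs a single $d$-dimensional vector $c^i$ (stacked as the rows of `centers`), and the function names `transition_matrix`, `expert_losses`, `fixed_share_step`, and `learn_alpha_step` are hypothetical. Setting `alpha = 0` in `fixed_share_step` gives the Static-Expert update followed by normalization.

```python
import numpy as np

def transition_matrix(n, alpha):
    """P(i | h; alpha): 1 - alpha if i == h, alpha / (n - 1) otherwise."""
    if n == 1:
        return np.ones((1, 1))
    P = np.full((n, n), alpha / (n - 1))
    np.fill_diagonal(P, 1.0 - alpha)
    return P

def expert_losses(x, centers, R):
    """L(i, t) = ||(x_t - c^i) / (2R)||^2 for every expert i; centers is (n, d)."""
    diff = (x - centers) / (2.0 * R)
    return np.sum(diff ** 2, axis=1)

def fixed_share_step(p, x, centers, R, alpha):
    """One iteration: output clust(t), then apply the Fixed-Share update."""
    clust = p @ centers                               # sum_i p_t(i) c^i
    w = p * np.exp(-0.5 * expert_losses(x, centers, R))
    p_next = w @ transition_matrix(len(p), alpha)     # sum_h w[h] P(i | h)
    return clust, p_next / p_next.sum()               # normalize p_{t+1}

def learn_alpha_step(q, p_sub, x, centers, R, alphas):
    """One Learn-alpha iteration.

    q:     weights over the m candidate alphas, shape (m,)
    p_sub: per-alpha weights over the n experts, shape (m, n)
    """
    n = p_sub.shape[1]
    clust = (q @ p_sub) @ centers                     # sum_j p_t(j) sum_i p_{t,j}(i) c^i
    like = np.exp(-0.5 * expert_losses(x, centers, R))
    loss_per_alpha = -np.log(p_sub @ like)            # lossPerAlpha[j]
    q_next = q * np.exp(-loss_per_alpha)
    p_next = np.stack([(p_sub[j] * like) @ transition_matrix(n, a)
                       for j, a in enumerate(alphas)])
    p_next /= p_next.sum(axis=1, keepdims=True)       # normalize each p_{t+1,j}
    return clust, q_next / q_next.sum(), p_next
```

In a streaming loop, one would call the chosen step once per incoming point `x`, passing the weights returned by the previous call.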

the algorithm obeys the following bound, with respect to that of the best expert:

$$L_T(\text{alg}) \le L_T(a_{i^*}) + 2 \log n$$

The proof follows by an almost direct application of an analysis technique of [25]. We now provide regret bounds for our OCE Fixed-Share variant. First, we follow the analysis framework of [19] to bound the regret of the Fixed-Share algorithm (parameterized by $\alpha$) with respect to the best $s$-partition of the sequence.⁴

Theorem 3. For any sequence of length $T$, and for any $s < T$, consider the best partition, computed in hindsight, of the sequence into $s$ segments, mapping each segment to its best expert. Then, letting $\alpha_0 = s/(T-1)$:

$$L_T(\text{alg}) \le L_T(\text{best } s\text{-partition}) + 2\left[\log n + s \log(n-1) + (T-1)\left(H(\alpha_0) + D(\alpha_0 \,\|\, \alpha)\right)\right]$$

We also provide bounds on the regret with respect to the Fixed-Share algorithm running with the hindsight optimal value of $\alpha$. We extend analyses in [25, 26] to the clustering setting, which includes providing a more general bound in which the weight update over experts is parameterized by an arbitrary transition dynamics; Fixed-Share follows as a special case. Following [26, 25], we will express regret bounds for these algorithms in terms of the following log-loss:

$$L_t^{\log} = -\log \sum_{i=1}^{n} p_t(i)\, e^{-\frac{1}{2} L(x_t, c_t^i)}$$

This log-loss relates to our clustering loss (Definition 3) as follows.

Lemma 1. $L_t \le 2 L_t^{\log}$

Proof. By Theorem 1:

$$L_t = L(x_t, \text{clust}(t)) \le -2 \log \sum_{i=1}^{n} p_t(i)\, e^{-\frac{1}{2} L(x_t, c_t^i)} = 2 L_t^{\log}$$

Theorem 4. The cumulative log-loss of a generalized OCE algorithm that performs Bayesian updates with arbitrary transition dynamics, $\Theta$, a matrix where rows, $\Theta_i$, are stochastic vectors specifying an expert's distribution over transitioning to the other experts at the next time step, obeys⁵:

$$L_T^{\log}(\Theta) \le L_T^{\log}(\Theta^*) + (T-1) \sum_{i=1}^{n} \rho_i^*\, D(\Theta_i^* \,\|\, \Theta_i)$$

where $\Theta^*$ is the hindsight optimal (minimizing log-loss)

⁴ To evaluate this bound, one must specify $s$.

⁵ As in [26, 25], we express cumulative loss with respect to the algorithms' transition dynamics parameters.
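To make the quantities in Lemma 1 and Theorem 3 concrete, the sketch below computes the per-step log-loss and the additive regret term of Theorem 3. It rests on assumptions not stated in this excerpt: $H(\cdot)$ and $D(\cdot \,\|\, \cdot)$ are taken to be the entropy and relative entropy of Bernoulli distributions, logarithms are natural, and $n \ge 2$. All function names are hypothetical.

```python
import math

def log_loss(p, losses):
    """L_t^log = -log sum_i p_t(i) exp(-L(x_t, c_t^i) / 2)."""
    return -math.log(sum(pi * math.exp(-0.5 * li) for pi, li in zip(p, losses)))

def binary_entropy(a):
    """H(a) of a Bernoulli(a) variable in nats, with 0 log 0 taken as 0 (assumed form)."""
    return -sum(q * math.log(q) for q in (a, 1.0 - a) if q > 0.0)

def binary_kl(a, b):
    """D(a || b) between Bernoulli(a) and Bernoulli(b) in nats; needs 0 < b < 1 (assumed form)."""
    return sum(q * math.log(q / r)
               for q, r in ((a, b), (1.0 - a, 1.0 - b)) if q > 0.0)

def theorem3_regret_term(n, s, T, alpha):
    """2 [log n + s log(n - 1) + (T - 1)(H(a0) + D(a0 || alpha))], with a0 = s / (T - 1)."""
    a0 = s / (T - 1)
    return 2.0 * (math.log(n) + s * math.log(n - 1)
                  + (T - 1) * (binary_entropy(a0) + binary_kl(a0, alpha)))
```

For example, with two experts weighted equally, $R = 1$, $x_t = 0$, and expert outputs $0$ and $1$, the expert losses are $0$ and $0.25$, the loss of the weighted output $0.5$ is $0.0625$, and `2 * log_loss([0.5, 0.5], [0.0, 0.25])` is larger, consistent with Lemma 1. The regret term is smallest in `alpha` when `alpha` equals $\alpha_0$, since the relative-entropy term then vanishes.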

231

You might also like

- Bosch KE-Jetronic System DescriptionDocument3 pagesBosch KE-Jetronic System DescriptionJack Tang50% (2)

- Time Series SolutionDocument5 pagesTime Series Solutionbehrooz100% (1)

- OPENING & CLOSING PROGRAM NARRATIVE REPORT (Grade 7)Document4 pagesOPENING & CLOSING PROGRAM NARRATIVE REPORT (Grade 7)Leo Jun G. Alcala100% (1)

- Ib Psychology - Perfect Saq Examination Answers PDFDocument2 pagesIb Psychology - Perfect Saq Examination Answers PDFzeelaf siraj0% (2)

- ELEC221 HW04 Winter2023-1Document16 pagesELEC221 HW04 Winter2023-1Isha ShuklaNo ratings yet

- LBSEconometricsPartIIpdf Time SeriesDocument246 pagesLBSEconometricsPartIIpdf Time SeriesZoloft Zithromax ProzacNo ratings yet

- Random Process in Analog Communication SystemsDocument91 pagesRandom Process in Analog Communication SystemsEkaveera Gouribhatla100% (1)

- Econ 512 Box Jenkins SlidesDocument31 pagesEcon 512 Box Jenkins SlidesMithilesh KumarNo ratings yet

- Manometer Lab ReportwithrefDocument12 pagesManometer Lab ReportwithrefCH20B020 SHUBHAM BAPU SHELKENo ratings yet

- Signals & System Lecture by OppenheimDocument10 pagesSignals & System Lecture by OppenheimJeevith JeeviNo ratings yet

- Hidden Markov ModelsDocument5 pagesHidden Markov ModelssitukangsayurNo ratings yet

- A New Filtering Approach For Continuous Time Linear Sys - 2014 - IFAC ProceedingDocument6 pagesA New Filtering Approach For Continuous Time Linear Sys - 2014 - IFAC Proceeding彭力No ratings yet

- Pareto EfficiencyDocument47 pagesPareto Efficiencyসাদিক মাহবুব ইসলামNo ratings yet

- Transform Analysis of Linear Time-Invariant Systems: P P P P PDocument16 pagesTransform Analysis of Linear Time-Invariant Systems: P P P P PreneeshczNo ratings yet

- Kybernetika 39-2003-4 6Document11 pagesKybernetika 39-2003-4 6elgualo1234No ratings yet

- ELEC301 - Fall 2019 Homework 2Document3 pagesELEC301 - Fall 2019 Homework 2Rıdvan BalamurNo ratings yet

- Robustness MarginsDocument18 pagesRobustness MarginsMysonic NationNo ratings yet

- Ec3354 Signals and SystemsDocument57 pagesEc3354 Signals and SystemsParanthaman GNo ratings yet

- Lecture 2: Predictability of Asset Returns: T T+K T T T+K T+KDocument8 pagesLecture 2: Predictability of Asset Returns: T T+K T T T+K T+Kintel6064No ratings yet

- Topics in Analytic Number Theory, Lent 2013. Lecture 24: Selberg's TheoremDocument6 pagesTopics in Analytic Number Theory, Lent 2013. Lecture 24: Selberg's TheoremEric ParkerNo ratings yet

- EE402 Lecture 2Document10 pagesEE402 Lecture 2sdfgNo ratings yet

- For T 3, We HaveDocument32 pagesFor T 3, We Have517wangyiqiNo ratings yet

- Sample q2Document6 pagesSample q2Keerthana ChirumamillaNo ratings yet

- Dorcak Petras 2007 PDFDocument7 pagesDorcak Petras 2007 PDFVignesh RamakrishnanNo ratings yet

- Control Theory, Tsrt09, TSRT06 Exercises & Solutions: 23 Mars 2021Document184 pagesControl Theory, Tsrt09, TSRT06 Exercises & Solutions: 23 Mars 2021Adel AdenaneNo ratings yet

- Automatic Control ExercisesDocument183 pagesAutomatic Control ExercisesFrancesco Vasturzo100% (1)

- BluSTL Controller Synthesis From Signal Temporal Logic SpecificationsDocument9 pagesBluSTL Controller Synthesis From Signal Temporal Logic SpecificationsRaghavendra M BhatNo ratings yet

- Exam in Automatic Control II Reglerteknik II 5hp: Good Luck!Document10 pagesExam in Automatic Control II Reglerteknik II 5hp: Good Luck!Armando MaloneNo ratings yet

- TSSD 2009Document16 pagesTSSD 2009MongiBESBESNo ratings yet

- Lecture 1Document47 pagesLecture 1oswardNo ratings yet

- Stability Analysis of Periodically Switched Linear Systems Using Floquet TheoryDocument11 pagesStability Analysis of Periodically Switched Linear Systems Using Floquet TheoryTukesh SoniNo ratings yet

- Week5-LectureNotesDocument15 pagesWeek5-LectureNotesbarra alfaNo ratings yet

- 2020 01 07MidTerm2Document1 page2020 01 07MidTerm2pedroetomasNo ratings yet

- Assignment 2Document5 pagesAssignment 2Aarav 127No ratings yet

- Chapter 2 AgaDocument22 pagesChapter 2 AgaNina AmeduNo ratings yet

- Pseudocode For Krotov's Method: (Dated: December 11, 2019)Document3 pagesPseudocode For Krotov's Method: (Dated: December 11, 2019)Nelson Enrique BolivarNo ratings yet

- Hevia ARMA EstimationDocument6 pagesHevia ARMA EstimationAnthanksNo ratings yet

- Time Respons: Dasar Sistem KontrolDocument13 pagesTime Respons: Dasar Sistem KontrolitmyNo ratings yet

- MidTermQuestion Paper PDFDocument5 pagesMidTermQuestion Paper PDFUsama khanNo ratings yet

- Discretization of The Flow EquationsDocument5 pagesDiscretization of The Flow EquationsAnonymous 7apoTj1No ratings yet

- Problem Set No. 6: Sabancı University Faculty of Engineering and Natural Sciences Ens 211 - SignalsDocument6 pagesProblem Set No. 6: Sabancı University Faculty of Engineering and Natural Sciences Ens 211 - SignalsGarip KontNo ratings yet

- ECEG 5352 - Lecture - 6 PDFDocument29 pagesECEG 5352 - Lecture - 6 PDFmulukenNo ratings yet

- Complexity1 Quick SummaryDocument5 pagesComplexity1 Quick SummaryagNo ratings yet

- Spread Spectrum RangingDocument24 pagesSpread Spectrum RangingErik RayNo ratings yet

- Time Respons: Dasar Sistem KontrolDocument15 pagesTime Respons: Dasar Sistem KontrolitmyNo ratings yet

- Ec2 4Document40 pagesEc2 4masudul9islamNo ratings yet

- Unesco/Ansti Econtent Development Programme: Mathematical Methods For Scientists and EngineersDocument23 pagesUnesco/Ansti Econtent Development Programme: Mathematical Methods For Scientists and Engineerstinotenda nziramasangaNo ratings yet

- Kraus 2012Document21 pagesKraus 2012FlorinNo ratings yet

- MyOFDM Tutorial V 01Document22 pagesMyOFDM Tutorial V 01Khaya KhoyaNo ratings yet

- GPU Computing For Unsteady Compressible Flow Using Roe SchemeDocument5 pagesGPU Computing For Unsteady Compressible Flow Using Roe SchemeMichele TuttafestaNo ratings yet

- ELCE301 Lecture5 (LTIsystems Time2)Document31 pagesELCE301 Lecture5 (LTIsystems Time2)Little VoiceNo ratings yet

- Signal MidDocument1 pageSignal Midjfx8j568zjNo ratings yet

- Communicati N Systems: Prof. Wonjae ShinDocument33 pagesCommunicati N Systems: Prof. Wonjae Shin이동준No ratings yet

- ReviewDocument3 pagesReviewMohamedSamir ElbuniNo ratings yet

- XT Atxt TT: Systems & Control Theory Systems & Control TheoryDocument12 pagesXT Atxt TT: Systems & Control Theory Systems & Control TheoryWilber PinaresNo ratings yet

- Chapter - 5 - Part2 - DT Signals & SystemsDocument51 pagesChapter - 5 - Part2 - DT Signals & SystemsReddy BabuNo ratings yet

- Assignment 1Document7 pagesAssignment 1Umesh KumarNo ratings yet

- Signal and SystemDocument15 pagesSignal and Systemswap_k007100% (1)

- Solution Mid SemDocument4 pagesSolution Mid SemJyoti ranjan pagodaNo ratings yet

- The Spectral Theory of Toeplitz Operators. (AM-99), Volume 99From EverandThe Spectral Theory of Toeplitz Operators. (AM-99), Volume 99No ratings yet

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)From EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)No ratings yet

- Tables of Generalized Airy Functions for the Asymptotic Solution of the Differential Equation: Mathematical Tables SeriesFrom EverandTables of Generalized Airy Functions for the Asymptotic Solution of the Differential Equation: Mathematical Tables SeriesNo ratings yet

- (Word 365-2019) Mos Word MocktestDocument4 pages(Word 365-2019) Mos Word MocktestQuỳnh Anh Nguyễn TháiNo ratings yet

- Fiedler1950 - A Comparison of Therapeutic Relationships in PsychoanalyticDocument10 pagesFiedler1950 - A Comparison of Therapeutic Relationships in PsychoanalyticAnca-Maria CovaciNo ratings yet

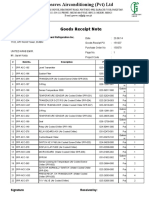

- Goods Receipt Note: Johnson Controls Air Conditioning and Refrigeration Inc. (YORK) DateDocument4 pagesGoods Receipt Note: Johnson Controls Air Conditioning and Refrigeration Inc. (YORK) DateSaad PathanNo ratings yet

- Shift Registers NotesDocument146 pagesShift Registers NotesRajat KumarNo ratings yet

- Antenna Systems - Standard Format For Digitized Antenna PatternsDocument32 pagesAntenna Systems - Standard Format For Digitized Antenna PatternsyokomaNo ratings yet

- Calculation ReportDocument157 pagesCalculation Reportisaacjoe77100% (3)

- Design of Footing R1Document8 pagesDesign of Footing R1URVESHKUMAR PATELNo ratings yet

- Drsent PT Practice Sba OspfDocument10 pagesDrsent PT Practice Sba OspfEnergyfellowNo ratings yet

- Harriet Tubman Lesson PlanDocument7 pagesHarriet Tubman Lesson PlanuarkgradstudentNo ratings yet

- DAY 3 STRESS Ielts NguyenhuyenDocument1 pageDAY 3 STRESS Ielts NguyenhuyenTĩnh HạNo ratings yet

- KSP Solutibilty Practice ProblemsDocument22 pagesKSP Solutibilty Practice ProblemsRohan BhatiaNo ratings yet

- ERP Solution in Hospital: Yangyang Shao TTU 2013Document25 pagesERP Solution in Hospital: Yangyang Shao TTU 2013Vishakh SubbayyanNo ratings yet

- GL Career Academy Data AnalyticsDocument7 pagesGL Career Academy Data AnalyticsDeveloper GuideNo ratings yet

- Engineering Geology: Wei-Min Ye, Yong-Gui Chen, Bao Chen, Qiong Wang, Ju WangDocument9 pagesEngineering Geology: Wei-Min Ye, Yong-Gui Chen, Bao Chen, Qiong Wang, Ju WangmazharNo ratings yet

- 8051 Programs Using Kit: Exp No: Date: Arithmetic Operations Using 8051Document16 pages8051 Programs Using Kit: Exp No: Date: Arithmetic Operations Using 8051Gajalakshmi AshokNo ratings yet

- Biosynthesis and Characterization of Silica Nanoparticles From RiceDocument10 pagesBiosynthesis and Characterization of Silica Nanoparticles From Riceanon_432216275No ratings yet

- National Pension System (NPS) - Subscriber Registration FormDocument3 pagesNational Pension System (NPS) - Subscriber Registration FormPratikJagtapNo ratings yet

- Classical Theories of Economic GrowthDocument16 pagesClassical Theories of Economic GrowthLearner8494% (32)

- Mathematics4 q4 Week4 v4Document11 pagesMathematics4 q4 Week4 v4Morales JinxNo ratings yet

- Summative Test in Foundation of Social StudiesDocument2 pagesSummative Test in Foundation of Social StudiesJane FajelNo ratings yet

- Dissertation MA History PeterRyanDocument52 pagesDissertation MA History PeterRyaneNo ratings yet

- Gates Crimp Data and Dies Manual BandasDocument138 pagesGates Crimp Data and Dies Manual BandasTOQUES00No ratings yet

- Dpb6013 HRM - Chapter 3 HRM Planning w1Document24 pagesDpb6013 HRM - Chapter 3 HRM Planning w1Renese LeeNo ratings yet

- Romano Uts Paragraph Writing (Sorry For The Late)Document7 pagesRomano Uts Paragraph Writing (Sorry For The Late)ទី ទីNo ratings yet

- With You: Full-Line CatalogDocument68 pagesWith You: Full-Line CatalogCOMINo ratings yet

- Aectp 300 3Document284 pagesAectp 300 3AlexNo ratings yet

- Human Development and Performance Throughout The Lifespan 2nd Edition Cronin Mandich Test BankDocument4 pagesHuman Development and Performance Throughout The Lifespan 2nd Edition Cronin Mandich Test Bankanne100% (28)