Professional Documents

Culture Documents

Human Activity Recognition Using Machine Learning

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Human Activity Recognition Using Machine Learning

Copyright:

Available Formats

Volume 9, Issue 4, April – 2024 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165 https://doi.org/10.38124/ijisrt/IJISRT24APR1530

Human Activity Recognition using

Machine Learning

Omkar Mandave1; Abhishek Phad2; Sameer Patekar3; Dr. Nandkishor Karlekar4

Mahatma Gandhi Mission’s College of Engineering and Technology, Navi Mumbai, Maharashtra

Abstract:- Working information and classification is one For instance, the MS-G3D network addresses some of

of the most important problems in computer science. these challenges by automatically learning features from

Recognizing and identifying actions or tasks performed skeleton sequences and incorporating 3D convolution and

by humans is the main goal of intelligent video systems. attention mechanisms [1]. To further enhance the

Human activity is used in many applications, from performance of action recognition systems, new methods like

human-machine interaction to surveillance, security and the 3D graph convolutional feature selection and dense pre-

healthcare. Despite continuous efforts, working estimation (3D-GSD) method have been proposed [1]. This

knowledge in a limitless field is still a difficult task and method leverages spatial and temporal attention mechanisms

faces many challenges. In this article, we focus on some of along with human prediction models to better capture local

the current research articles on various cognitive and global information of actions and analyse human poses

functions. This project includes three popular methods to comprehensively [1]. Meanwhile, in a separate line of

define projects: vision-based (using estimates), practical research, the use of Convolutional Neural Networks (CNNs)

devices, and smartphones. We will also discuss some for human behaviour recognition from different viewpoints

advantages and disadvantages of the above methods and has gained attention [2].

give a brief comparison of their accuracy. The results will

also show how the visualization method has become a CNNs are utilized for tasks such as object detection,

popular method for HAR research today. segmentation, and recognition in videos, aiming to identify

various classes of body movement [2]. In parallel, the

Keywords:- Artificial Intelligence (AI), Human Activity development of Human Activity Recognition (HAR) systems

Recognition (HAR), Computer Vision, Machine Learning. has been propelled by the demand for automation in various

fields including healthcare, security, entertainment, and

I. INTRODUCTION abnormal activity monitoring [3]. HAR systems rely on

computer vision-based technologies, particularly deep

Skeleton features are widely used in human action learning and machine learning, to recognize human actions or

recognition and human-computer interaction [1]. This abnormal behaviours [3]. These systems monitor human

technology involves detecting and tracking key points of a activities through visual monitoring or sensing technologies,

human skeleton from images or videos, typically utilizing categorizing actions into gestures, actions, interactions, and

depth cameras, sensors, and other equipment to capture group activities based on involved body parts and movements

movement trajectories and analyse them through computer [3]. The applications of HAR encompass diverse domains

vision and machine learning techniques. The applications of such as video processing, surveillance, education, and

skeleton behaviour recognition span across various domains healthcare [3].

such as games, virtual reality, healthcare, and security [1].

Similarly, action identification in videos remains a

However, recognizing actions from skeleton data poses crucial task in computer vision and artificial intelligence,

several challenges due to factors such as multiple characters with applications in intelligent environments, security

in videos, varying perspectives, and interactions between systems, and human-machine interfaces [4]. Deep learning

characters [1]. Early methods relied on hand-designed feature methods, including convolutional and recurrent neural

extraction and spatiotemporal modelling techniques, while networks, have been employed to address challenges such as

recent advancements have been made in deep learning-based focal point recognition, lighting conditions, motion

approaches, particularly focusing on skeleton key points or variations, and imbalanced datasets [4]. Proposed models

spatio-temporal feature analysis [1]. integrate object identification, skeleton articulation, and 3D

convolutional network approaches to enhance action

Deep learning methods have shown promise in recognition accuracy [4]. These models leverage deep

improving action recognition accuracy, but traditional learning architectures, including LSTM recurrent networks

approaches still face challenges in adapting to different and 3D CNNs, along with innovative techniques such as

scenarios and effectively handling pose changes and high feature extraction and object detection to classify activities in

motion complexity [1]. video sequences effectively [4].

IJISRT24APR1530 www.ijisrt.com 903

Volume 9, Issue 4, April – 2024 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165 https://doi.org/10.38124/ijisrt/IJISRT24APR1530

Human Activity Recognition System from Different Poses

with CNN:

Human Pose Detection: Utilizes HAAR Feature-based

Classifier for detecting human poses in videos or images.

Human Pose Segmentation: Segments human poses based on

the output of the HAAR feature-based classifier. Activity

Recognition: Uses Convolutional Neural Network (CNN) for

recognizing human activities from segmented human poses.

Human Pose Detection: Utilizes HAAR Feature-based

Classifier trained on grayscale images to detect human poses

accurately. Human Pose Segmentation: Segments human

poses based on the coordinates obtained from the HAAR

feature-based classifier and resizes the images to 64x64

resolution. Activity Recognition: Employs a CNN

architecture, specifically VGG19, for recognizing human

activities from the segmented human poses.

Effectiveness of Pre-Trained CNN Networks for Detecting

Abnormal Activities in Online Exams:

Detection of online exam cheating using deep learning,

motion-based keyframe extraction, COVID-19 dataset

collection, pre-trained models (YOLOv5, Inception_

ResNet_v2, DenseNet121, Inception-V3), and CNN fine-

tuning for enhanced performance. Analysis of cheating

activities during online exams using deep learning models.

Collection and preprocessing of a dataset containing

videos of various cheating behaviors. Use of pre-trained and

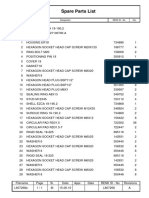

Fig 1 The Overview of General HAR System this is Basic fine-tuned deep learning models for classification. Focus on

Building Blocks of Almost Every System model accuracy, loss, precision, recall, and F1-score for

evaluation.

Problem Statement & Objectives

Human Activity Recognition (HAR) aims to develop A Real-Time 3-Dimensional Object Detection Based

algorithms and systems capable of identifying and classifying Human Action Recognition Model:

various human activities based on data collected from sensors Data fusion technique to merge datasets. Utilization of

or video streams. In fields such as healthcare, sports 3D Convolutional Neural Network (3DCNN) with

performance analysis, and human-computer interaction, multiplicative Long Short-Term Memory (LSTM) for feature

accurate HAR systems can provide valuable insights into extraction. Object identification using finetuned YOLOv6.

individual behaviors, health monitoring, and enhancing user Skeleton articulation technique for human pose estimation.

experiences. However, challenges arise due to variations in Combination of four different modules into a single neural

sensor data quality, diverse activity patterns, and real-time network. Integration of data fusion, feature extraction, object

processing requirements. Thus, the need arises for robust and detection, and skeleton articulation techniques. Utilization of

efficient HAR techniques that can accurately classify human multiplicative LSTM to improve classification accuracy.

activities in real-time, considering the complexities of

dynamic environments and diverse user contexts. Different Approaches to HAR:

A HAR system is necessary in order to accomplish the

II. LITERATURE REVIEW purpose of identifying human activity. For this reason,

sensor-based and vision-based activity recognition are the

3D Graph Convolutional Feature Selection and Dense two most widely utilized methods. We are able to categorize

Pre-Estimation for Skeleton Action Recognition: them.

The proposed method utilizes a 3D graph convolutional

feature selection (3DSKNet) to adaptively learn important Pose Based Approach:

features in skeleton sequences. Additionally, a DensePose Pose-based approaches focus on analysing the human

algorithm is introduced to detect complex key points of body's posture or pose to recognize activities. This approach

human body postures and optimize the accuracy and often involves using computer vision techniques to extract

interpretability of action recognition. 3DSKNet focuses on key joint positions or body configurations from images or

key skeletal parts, improving the accuracy and robustness of videos. One common technique in pose-based HAR is using

bone recognition by selecting important features adaptively. pose estimation algorithms, such as OpenPose or PoseNet, to

DensePose provides more detailed pose and shape detect and track key points on the human body. These

information, enhancing the accuracy of human motion algorithms can estimate the positions of joints like elbows,

analysis. shoulders, hips, and knees. Once the pose information is

IJISRT24APR1530 www.ijisrt.com 904

Volume 9, Issue 4, April – 2024 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165 https://doi.org/10.38124/ijisrt/IJISRT24APR1530

obtained, machine learning or deep learning models can be The relationship between the growth of HAR and AI is

trained to classify different activities based on the detected symbiotic. As AI techniques evolve, they enable more

poses. Features derived from pose data, such as joint angles sophisticated and accurate methods for extracting meaningful

or body part velocities, can be used as input features for these information from raw sensor data, giving rise to improved

models. Pose-based approaches are particularly useful when HAR systems. In the past, HAR models relied on single

sensor data is not available or when activities can be reliably images or short image sequences. These advanced models,

inferred from visual cues, such as in surveillance or human- such as CNNs combined with LSTMs, have made HAR

computer interaction applications. designs more robust and adaptable.

Sensor Based: Human activities are diverse and can encompass a wide

Sensor-based approaches involve using various types of range of motions and positions. HAR has diverse

sensors, such as accelerometers, gyroscopes, magnetometers, applications, including healthcare, surveillance, monitoring,

or inertial measurement units (IMUs), to capture motion or and assistance for the elderly and people with disabilities. It

physiological signals associated with human activities. These is imperative to note that while the field is making great

sensors can be embedded in wearable devices like strides, there are challenges that need to be addressed. These

smartwatches, fitness trackers, or smartphones, or they can challenges include the selection of appropriate sensors, data

be placed in the environment (e.g., on walls or floors) to collection, recognition performance, energy efficiency,

capture activity data. Data collected from sensors typically processing capacity, and system flexibility.

include time-series measurements of acceleration, rotation, or

other physical quantities. Machine learning algorithms, such Our goal in this project is to create an efficient and

as decision trees, support vector machines (SVMs), or deep effective human recognition system that can process video

neural networks, can then be trained on this data to recognize and image data to detect tasks performed. The system is

different activities. Sensor-based approaches are widely used versatile and can find applications in many areas, such as

in applications like activity tracking, fall detection, sports caring for and helping people with special needs.

performance analysis, and healthcare monitoring due to their

versatility and non-intrusiveness. III. METHODOLOGY

Proposed System This application harnesses MediaPipe's human pose

Human Activity Recognition (HAR) is a burgeoning estimation pipeline, powered by machine learning models

field with myriad applications, driven by the proliferation of like convolutional neural networks (CNNs) or similar

devices equipped with sensors and cameras, and the rapid architectures. These models enable real-time detection and

advancements in Artificial Intelligence (AI). HAR involves estimation of key points on the human body, resulting in

the art of identifying and naming various human activities graphical representations of skeletal structures. These visuals

using AI algorithms on data collected from these devices. serve as a roadmap of a person's posture and movements,

The data from these sources, including smartphones, video offering valuable insights into their activities. At its core are

cameras, RFID, and Wi-Fi, provides a rich source of pose landmarks, individual coordinates corresponding to

information that can be harnessed for recognizing human specific joints in the body, pivotal for accurately depicting a

activities. person's pose and tracking their movements over time.

Furthermore, the connections between these key points,

known as pairs, contribute to a deeper understanding of

human motion dynamics.

Fig 2 Conventional Machine Learning Architecture for Human Activity Recognition

IJISRT24APR1530 www.ijisrt.com 905

Volume 9, Issue 4, April – 2024 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165 https://doi.org/10.38124/ijisrt/IJISRT24APR1530

Fig 3 Basic Architecture of the CNN-LSTM Network

Another critical component of the HAR system is its

user interface (UI), acting as the primary point of interaction

between the user and the application. Leveraging the Tkinter

library, we have crafted an intuitive and visually appealing

UI that facilitates seamless navigation and interaction. The Fig 5 Selecting Video

interface prominently features labeled buttons for various

functionalities, neatly categorized under Camera and Video

options, empowering users to effortlessly select their

preferred mode for HAR.

Working

The project boasts a Graphical User Interface (GUI)

crafted with Tkinter, facilitating human tracking while

leveraging Mediapipe's pose estimation model for exercise

supervision. Through Tkinter, the GUI provides users with a

variety of exercise tracking options, including fighting,

smoking, walking, reading, and playing. It offers the

flexibility of choosing between real-time camera recognition

or video input, with distinct buttons to toggle between the

two modes.

IV. RESULT

These are the results we achieved after developing the

application. The result shows the GUI, Human Activity Fig 6 Video Output

Recognition through real-time video feed and prerecorded

video.

Fig 4 Graphical user Interface Fig 7 Real-Time Camera Output

IJISRT24APR1530 www.ijisrt.com 906

Volume 9, Issue 4, April – 2024 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165 https://doi.org/10.38124/ijisrt/IJISRT24APR1530

V. FUTURE SCOPE VI. CONCLUSION

The future holds a wealth of opportunities for further In an era dominated by the advancements in computer

enhancing and extending Human Activity Recognition vision, the development and implementation of Human

systems. Here are some promising areas of development and Activity Recognition (HAR) systems have emerged as a

exploration: compelling and indispensable technology. These systems has

proven a remarkably effective in addressing multitude of

Multimodal Integration: real-world applications, from surveillance and monitoring to

Incorporating data from diverse sensor types, such as providing invaluable assistance to elderly and visually

audio, accelerometers, and more, can enable richer context impaired individuals. The project's CNN-LSTM-based HAR

and improved recognition accuracy. system represents a significant stride in this direction,

offering a versatile and accurate approach to recognize and

Privacy and Ethics: categorize a wide array of human activities. This system's

Researching and implementing robust privacy measures adaptability to video streams and image data showcases its

and ethical guidelines is essential for deploying HAR potential in addressing the diverse needs of various industries

systems in sensitive or public environments. and domains.

Real-World Deployment: REFERENCES

Scaling up the usage of HAR systems in practical

domains like smart homes, healthcare, and personalized [1]. J. Zhang, A. Yang, C. Miao, X. Li, R. Zhang and D.

assistance offers a wide array of benefits. N. H. Thanh, "3D Graph Convolutional Feature

Selection and Dense Pre-Estimation for Skeleton

Edge Computing: Action Recognition," in IEEE Access, vol. 12, pp.

Investigating the feasibility of deploying HAR models 11733-11742, 2024, doi:

on edge devices can lead to more responsive real-time 10.1109/ACCESS.2024.3353622.

applications without heavy reliance on cloud resources. [2]. M. Atikuzzaman, T. R. Rahman, E. Wazed, M. P.

Hossain and M. Z. Islam, "Human Activity

Incremental Learning: Recognition System from Different Poses with CNN,"

Developing techniques for incremental learning allows 2020 2nd International Conference on Sustainable

systems to adapt to new activities and environments over Technologies for Industry 4.0 (STI), Dhaka,

time without complete retraining. Bangladesh, 2020, pp. 1-5, doi:

10.1109/STI50764.2020.9350508.

Environment Robustness: [3]. M. Ramzan, A. Abid, M. Bilal, K. M. Aamir, S. A.

Enhancing system robustness to challenging Memon and T. -S. Chung, "Effectiveness of Pre-

environments, including adverse weather and lighting Trained CNN Networks for Detecting Abnormal

conditions, is a crucial research direction. Activities in Online Exams," in IEEE Access, vol. 12,

pp. 21503-21519, 2024, doi:

User Interaction: 10.1109/ACCESS.2024.3359689.

Exploring natural and intuitive user interaction [4]. S. Win and T. L. L. Thein, "Real-Time Human

methods, like gesture recognition or voice commands, can Motion Detection, Tracking and Activity Recognition

enhance the usability of HAR systems. with Skeletal Model," 2020 IEEE Conference on

Computer Applications(ICCA), Yangon, Myanmar,

In conclusion, the future of Human Activity 2020, pp. 1-5, doi:

Recognition is filled with exciting possibilities. The continual 10.1109/ICCA49400.2020.9022822.

development of these systems is set to unlock new levels of [5]. A. Gupta, K. Gupta, K. Gupta and K. Gupta, "A

accuracy, versatility, and applicability in various domains. Survey on Human Activity Recognition and

These advancements will contribute to the ongoing evolution Classification," 2020 International Conference on

of intelligent systems that can understand and respond to Communication and Signal Processing (ICCSP),

human actions across diverse scenarios, making a significant Chennai, India, 2020, pp. 0915-0919, doi:

impact on society and industries. 10.1109/ICCSP48568.2020.9182416.

[6]. https://www.mdpi.com/2078-2489/13/6/275

IJISRT24APR1530 www.ijisrt.com 907

You might also like

- Hide Tanning PDF - FINAL7.23Document32 pagesHide Tanning PDF - FINAL7.23Kevin100% (1)

- Mobile Phone CloningDocument38 pagesMobile Phone CloningDevansh KumarNo ratings yet

- Steel Forgings, Carbon and Alloy, For Pinions, Gears and Shafts For Reduction GearsDocument4 pagesSteel Forgings, Carbon and Alloy, For Pinions, Gears and Shafts For Reduction Gearssharon blushteinNo ratings yet

- Ancient Indian ArchitectureDocument86 pagesAncient Indian ArchitectureRishika100% (2)

- Radhu's Recipes - 230310 - 180152 PDFDocument123 pagesRadhu's Recipes - 230310 - 180152 PDFl1a2v3 C4No ratings yet

- 01-13 PWE3 ConfigurationDocument97 pages01-13 PWE3 ConfigurationRoger ReisNo ratings yet

- Fold N Slide Hardware BrochureDocument52 pagesFold N Slide Hardware Brochurecavgsi16vNo ratings yet

- Review paper-HARDocument3 pagesReview paper-HARJhalak DeyNo ratings yet

- Final Selected ReportDocument4 pagesFinal Selected Reportaly869556No ratings yet

- 2023efficient Deep Learning Approach To Recognize Person Attributes by Using Hybrid Transformers For Surveillance ScenariosDocument13 pages2023efficient Deep Learning Approach To Recognize Person Attributes by Using Hybrid Transformers For Surveillance ScenariosEng-Ali Al-madaniNo ratings yet

- Human Activity Detection Using Deep - 2-1Document8 pagesHuman Activity Detection Using Deep - 2-1Riky Tri YunardiNo ratings yet

- A Review On Machine Learning Styles in Computer VisionTechniques and Future DirectionsDocument37 pagesA Review On Machine Learning Styles in Computer VisionTechniques and Future DirectionsSonyNo ratings yet

- Identification of Human & Various Objects Through Image Processing Based SystemDocument4 pagesIdentification of Human & Various Objects Through Image Processing Based SystemAnjanaNo ratings yet

- Computer Vision MidDocument2 pagesComputer Vision MidHuzair NadeemNo ratings yet

- Violence Detection Paper 1Document6 pagesViolence Detection Paper 1Sheetal SonawaneNo ratings yet

- Human RecognitionDocument8 pagesHuman RecognitionKampa LavanyaNo ratings yet

- Literature Survey On Criminal Identification System Using Facial Detection and IdentificationDocument7 pagesLiterature Survey On Criminal Identification System Using Facial Detection and IdentificationAdministerNo ratings yet

- 2 PBDocument5 pages2 PBJulianto SaputroNo ratings yet

- 14166-Article Text-25243-1-10-20231018Document7 pages14166-Article Text-25243-1-10-20231018ahmedalhaswehNo ratings yet

- YoloDocument30 pagesYoloPrashanth KumarNo ratings yet

- Action RecognitionDocument14 pagesAction RecognitionJ SANDHYANo ratings yet

- Sensors: New Sensor Data Structuring For Deeper Feature Extraction in Human Activity RecognitionDocument26 pagesSensors: New Sensor Data Structuring For Deeper Feature Extraction in Human Activity RecognitionkirubhaNo ratings yet

- Human Activity Recognition Using Convolutional Neural NetworkDocument19 pagesHuman Activity Recognition Using Convolutional Neural NetworkKaniz FatemaNo ratings yet

- EasyChair Preprint 2297Document5 pagesEasyChair Preprint 2297apurv shuklaNo ratings yet

- Capstone PPT 4Document17 pagesCapstone PPT 4Shweta BhargudeNo ratings yet

- C13 Research Paper 3-2Document7 pagesC13 Research Paper 3-2SakethNo ratings yet

- Object Detection Using CNNDocument6 pagesObject Detection Using CNNInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Journal Pre-Proofs: MeasurementDocument28 pagesJournal Pre-Proofs: MeasurementG.R.Mahendrababau AP - ECE departmentNo ratings yet

- Irjet V10i822Document5 pagesIrjet V10i822dk singhNo ratings yet

- CNN LSTMDocument5 pagesCNN LSTMEngr. Naveed MazharNo ratings yet

- Vision Based Anomalous Human Behaviour Detection Using CNN and Transfer LearningDocument6 pagesVision Based Anomalous Human Behaviour Detection Using CNN and Transfer LearningIJRASETPublicationsNo ratings yet

- Batch 6Document28 pagesBatch 6Prashanth KumarNo ratings yet

- Emotion Classification of Facial Images Using Machine Learning ModelsDocument6 pagesEmotion Classification of Facial Images Using Machine Learning ModelsIJRASETPublicationsNo ratings yet

- Skeleton Based Human Action Recognition Using A Structured-Tree Neural NetworkDocument6 pagesSkeleton Based Human Action Recognition Using A Structured-Tree Neural NetworkArs Arifur RahmanNo ratings yet

- Actions in The Eye - Dynamic Gaze Datasets and Learnt Saliency Models For Visual RecognitionDocument15 pagesActions in The Eye - Dynamic Gaze Datasets and Learnt Saliency Models For Visual Recognitionsiva shankaranNo ratings yet

- IJReal-Time Object Detection by Using Deep Learning A SurveyDocument6 pagesIJReal-Time Object Detection by Using Deep Learning A SurveyInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Orientation-Discriminative Feature Representation For Decentralized Pedestrian TrackingDocument8 pagesOrientation-Discriminative Feature Representation For Decentralized Pedestrian Trackingbukhtawar zamirNo ratings yet

- Research Paper PDFDocument6 pagesResearch Paper PDFKhushi UdasiNo ratings yet

- Iris Recognition With Off-the-Shelf CNN Features: A Deep Learning PerspectiveDocument8 pagesIris Recognition With Off-the-Shelf CNN Features: A Deep Learning PerspectivepedroNo ratings yet

- Full TextDocument6 pagesFull TextglidingseagullNo ratings yet

- Detection of Objects in Motion-A Survey of Video SurveillanceDocument6 pagesDetection of Objects in Motion-A Survey of Video SurveillanceARTHURNo ratings yet

- Deep Neural Network Approachesfor Video Based Human Activity RecognitionDocument4 pagesDeep Neural Network Approachesfor Video Based Human Activity RecognitionInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Innovative Face Detection Using Artificial IntelligenceDocument4 pagesInnovative Face Detection Using Artificial IntelligenceInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Implementation of Human Tracking Using Back Propagation AlgorithmDocument4 pagesImplementation of Human Tracking Using Back Propagation Algorithmpurushothaman sinivasanNo ratings yet

- Human Body Orientation Estimation Using Convolutional Neural NetworkDocument5 pagesHuman Body Orientation Estimation Using Convolutional Neural Networkbukhtawar zamirNo ratings yet

- Weapon Detection Using Yolov4, CNNDocument7 pagesWeapon Detection Using Yolov4, CNNIJRASETPublicationsNo ratings yet

- Fin Irjmets1686037369Document5 pagesFin Irjmets1686037369bhanuprakash15440No ratings yet

- Sensors XyzxyzDocument14 pagesSensors Xyzxyzmasty dastyNo ratings yet

- ST ND RD: NtroductionDocument4 pagesST ND RD: NtroductionsufikasihNo ratings yet

- Performance of Hasty and Consistent Multi Spectral Iris Segmentation Using Deep LearningDocument5 pagesPerformance of Hasty and Consistent Multi Spectral Iris Segmentation Using Deep LearningEditor IJTSRDNo ratings yet

- Artificial Intelligence in Object Detection-ReportDocument6 pagesArtificial Intelligence in Object Detection-ReportDimas PradanaNo ratings yet

- Real Time Object Detection System Using Deep Learning: University Institute of Engineering, Chandigarh UniversityDocument6 pagesReal Time Object Detection System Using Deep Learning: University Institute of Engineering, Chandigarh UniversityShanu Naval SinghNo ratings yet

- Review of Violence Detection System.Document4 pagesReview of Violence Detection System.Vishwajit DandageNo ratings yet

- ICSCSS 177 Final ManuscriptDocument6 pagesICSCSS 177 Final ManuscriptdurgatempmailNo ratings yet

- A Person Re-Identification System For Mobile DevicesDocument6 pagesA Person Re-Identification System For Mobile DevicesDebopriyo BanerjeeNo ratings yet

- Smart Surveillance Using Computer VisionDocument4 pagesSmart Surveillance Using Computer VisionInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Survey of Object Detection Approaches in Embedded Platforms: Ii. Literature ReviewDocument5 pagesSurvey of Object Detection Approaches in Embedded Platforms: Ii. Literature ReviewEshwar KamsaliNo ratings yet

- Multi-Stream Siamese and Faster Region-Based Neural Network For Real-Time Object TrackingDocument14 pagesMulti-Stream Siamese and Faster Region-Based Neural Network For Real-Time Object TrackingAnanya SinghNo ratings yet

- 23 AI Crowd A - Cloud-Based - Deep - Learning - Framework - For - Early - Detection - of - Pushing - at - Crowded - Event - EntrancesDocument14 pages23 AI Crowd A - Cloud-Based - Deep - Learning - Framework - For - Early - Detection - of - Pushing - at - Crowded - Event - Entrances20cs068No ratings yet

- Synopsis 1Document4 pagesSynopsis 1Hrishikesh GaikwadNo ratings yet

- Development of A Hand Pose Recognition System On An Embedded Computer Using Artificial IntelligenceDocument4 pagesDevelopment of A Hand Pose Recognition System On An Embedded Computer Using Artificial IntelligenceGraceSevillanoNo ratings yet

- Design & Implementation of Real Time Detection System Based On SSD & OpenCVDocument17 pagesDesign & Implementation of Real Time Detection System Based On SSD & OpenCVDr-Anwar ShahNo ratings yet

- Human Emotions Reader by Medium of Digital Image ProcessingDocument8 pagesHuman Emotions Reader by Medium of Digital Image ProcessingAKULA AIT20BEAI046No ratings yet

- Human Action Recognition On Raw Depth MapsDocument13 pagesHuman Action Recognition On Raw Depth Mapsjacekt89No ratings yet

- Literature Review - SCDocument2 pagesLiterature Review - SCprateek.singh.20cseNo ratings yet

- Ullah 2019Document6 pagesUllah 2019Dinar TASNo ratings yet

- Reading Intervention Through “Brigada Sa Pagbasa”: Viewpoint of Primary Grade TeachersDocument3 pagesReading Intervention Through “Brigada Sa Pagbasa”: Viewpoint of Primary Grade TeachersInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- A Study to Assess the Knowledge Regarding Teratogens Among the Husbands of Antenatal Mother Visiting Obstetrics and Gynecology OPD of Sharda Hospital, Greater Noida, UpDocument5 pagesA Study to Assess the Knowledge Regarding Teratogens Among the Husbands of Antenatal Mother Visiting Obstetrics and Gynecology OPD of Sharda Hospital, Greater Noida, UpInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Application of Game Theory in Solving Urban Water Challenges in Ibadan-North Local Government Area, Oyo State, NigeriaDocument9 pagesApplication of Game Theory in Solving Urban Water Challenges in Ibadan-North Local Government Area, Oyo State, NigeriaInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Firm Size as a Mediator between Inventory Management Andperformance of Nigerian CompaniesDocument8 pagesFirm Size as a Mediator between Inventory Management Andperformance of Nigerian CompaniesInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- PHREEQ C Modelling Tool Application to Determine the Effect of Anions on Speciation of Selected Metals in Water Systems within Kajiado North Constituency in KenyaDocument71 pagesPHREEQ C Modelling Tool Application to Determine the Effect of Anions on Speciation of Selected Metals in Water Systems within Kajiado North Constituency in KenyaInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Exploring the Post-Annealing Influence on Stannous Oxide Thin Films via Chemical Bath Deposition Technique: Unveiling Structural, Optical, and Electrical DynamicsDocument7 pagesExploring the Post-Annealing Influence on Stannous Oxide Thin Films via Chemical Bath Deposition Technique: Unveiling Structural, Optical, and Electrical DynamicsInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Consistent Robust Analytical Approach for Outlier Detection in Multivariate Data using Isolation Forest and Local Outlier FactorDocument5 pagesConsistent Robust Analytical Approach for Outlier Detection in Multivariate Data using Isolation Forest and Local Outlier FactorInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- The Impact of Music on Orchid plants Growth in Polyhouse EnvironmentsDocument5 pagesThe Impact of Music on Orchid plants Growth in Polyhouse EnvironmentsInternational Journal of Innovative Science and Research Technology100% (1)

- Esophageal Melanoma - A Rare NeoplasmDocument3 pagesEsophageal Melanoma - A Rare NeoplasmInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Mandibular Mass Revealing Vesicular Thyroid Carcinoma A Case ReportDocument5 pagesMandibular Mass Revealing Vesicular Thyroid Carcinoma A Case ReportInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Vertical Farming System Based on IoTDocument6 pagesVertical Farming System Based on IoTInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Sustainable Energy Consumption Analysis through Data Driven InsightsDocument16 pagesSustainable Energy Consumption Analysis through Data Driven InsightsInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Detection of Phishing WebsitesDocument6 pagesDetection of Phishing WebsitesInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Detection and Counting of Fake Currency & Genuine Currency Using Image ProcessingDocument6 pagesDetection and Counting of Fake Currency & Genuine Currency Using Image ProcessingInternational Journal of Innovative Science and Research Technology100% (9)

- Osho Dynamic Meditation; Improved Stress Reduction in Farmer Determine by using Serum Cortisol and EEG (A Qualitative Study Review)Document8 pagesOsho Dynamic Meditation; Improved Stress Reduction in Farmer Determine by using Serum Cortisol and EEG (A Qualitative Study Review)International Journal of Innovative Science and Research TechnologyNo ratings yet

- Review on Childhood Obesity: Discussing Effects of Gestational Age at Birth and Spotting Association of Postterm Birth with Childhood ObesityDocument10 pagesReview on Childhood Obesity: Discussing Effects of Gestational Age at Birth and Spotting Association of Postterm Birth with Childhood ObesityInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Realigning Curriculum to Simplify the Challenges of Multi-Graded Teaching in Government Schools of KarnatakaDocument5 pagesRealigning Curriculum to Simplify the Challenges of Multi-Graded Teaching in Government Schools of KarnatakaInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- An Efficient Cloud-Powered Bidding MarketplaceDocument5 pagesAn Efficient Cloud-Powered Bidding MarketplaceInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Entrepreneurial Creative Thinking and Venture Performance: Reviewing the Influence of Psychomotor Education on the Profitability of Small and Medium Scale Firms in Port Harcourt MetropolisDocument10 pagesEntrepreneurial Creative Thinking and Venture Performance: Reviewing the Influence of Psychomotor Education on the Profitability of Small and Medium Scale Firms in Port Harcourt MetropolisInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- An Overview of Lung CancerDocument6 pagesAn Overview of Lung CancerInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Impact of Stress and Emotional Reactions due to the Covid-19 Pandemic in IndiaDocument6 pagesImpact of Stress and Emotional Reactions due to the Covid-19 Pandemic in IndiaInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Influence of Principals’ Promotion of Professional Development of Teachers on Learners’ Academic Performance in Kenya Certificate of Secondary Education in Kisii County, KenyaDocument13 pagesInfluence of Principals’ Promotion of Professional Development of Teachers on Learners’ Academic Performance in Kenya Certificate of Secondary Education in Kisii County, KenyaInternational Journal of Innovative Science and Research Technology100% (1)

- Auto Tix: Automated Bus Ticket SolutionDocument5 pagesAuto Tix: Automated Bus Ticket SolutionInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Digital Finance-Fintech and it’s Impact on Financial Inclusion in IndiaDocument10 pagesDigital Finance-Fintech and it’s Impact on Financial Inclusion in IndiaInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Ambulance Booking SystemDocument7 pagesAmbulance Booking SystemInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Utilization of Waste Heat Emitted by the KilnDocument2 pagesUtilization of Waste Heat Emitted by the KilnInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Designing Cost-Effective SMS based Irrigation System using GSM ModuleDocument8 pagesDesigning Cost-Effective SMS based Irrigation System using GSM ModuleInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Effect of Solid Waste Management on Socio-Economic Development of Urban Area: A Case of Kicukiro DistrictDocument13 pagesEffect of Solid Waste Management on Socio-Economic Development of Urban Area: A Case of Kicukiro DistrictInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Examining the Benefits and Drawbacks of the Sand Dam Construction in Cadadley RiverbedDocument8 pagesExamining the Benefits and Drawbacks of the Sand Dam Construction in Cadadley RiverbedInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Predictive Analytics for Motorcycle Theft Detection and RecoveryDocument5 pagesPredictive Analytics for Motorcycle Theft Detection and RecoveryInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- 1.1.DeFacto Academy enDocument7 pages1.1.DeFacto Academy enYahya AçafNo ratings yet

- The Young Muslim Muharram 1429 January 2008Document12 pagesThe Young Muslim Muharram 1429 January 2008Amthullah Binte YousufNo ratings yet

- The Top Level Diagram Is A Use Case Diagram That Shows The Actors andDocument5 pagesThe Top Level Diagram Is A Use Case Diagram That Shows The Actors andsin2pNo ratings yet

- (E) Chapter 1 - Origin of Soil and Grain SizeDocument23 pages(E) Chapter 1 - Origin of Soil and Grain SizeLynas Beh TahanNo ratings yet

- Finalizing The Accounting ProcessDocument2 pagesFinalizing The Accounting ProcessMilagro Del ValleNo ratings yet

- Geometry Sparkcharts Geometry Sparkcharts: Book Review Book ReviewDocument3 pagesGeometry Sparkcharts Geometry Sparkcharts: Book Review Book ReviewAyman BantuasNo ratings yet

- CHED - NYC PresentationDocument19 pagesCHED - NYC PresentationMayjee De La CruzNo ratings yet

- The Dedicated - A Biography of NiveditaDocument384 pagesThe Dedicated - A Biography of NiveditaEstudante da Vedanta100% (2)

- PHP - Google Maps - How To Get GPS Coordinates For AddressDocument4 pagesPHP - Google Maps - How To Get GPS Coordinates For AddressAureliano DuarteNo ratings yet

- HET Neoclassical School, MarshallDocument26 pagesHET Neoclassical School, MarshallDogusNo ratings yet

- A Sensorless Direct Torque Control Scheme Suitable For Electric VehiclesDocument9 pagesA Sensorless Direct Torque Control Scheme Suitable For Electric VehiclesSidahmed LarbaouiNo ratings yet

- Math Achievement Gr11GenMathDocument3 pagesMath Achievement Gr11GenMathMsloudy VilloNo ratings yet

- Management of Developing DentitionDocument51 pagesManagement of Developing Dentitionahmed alshaariNo ratings yet

- Ebook PDF Understanding Nutrition 2th by Eleanor Whitney PDFDocument41 pagesEbook PDF Understanding Nutrition 2th by Eleanor Whitney PDFedward.howard102100% (31)

- Dr. Shikha Baskar: CHE154: Physical Chemistry For HonorsDocument22 pagesDr. Shikha Baskar: CHE154: Physical Chemistry For HonorsnishitsushantNo ratings yet

- Exercises - PronounsDocument3 pagesExercises - PronounsTerritório PBNo ratings yet

- Grammar Translation MethodDocument22 pagesGrammar Translation MethodCeyinNo ratings yet

- Unidad 1 - Paco (Tema 3 - Paco Is Wearing A New Suit) PDFDocument24 pagesUnidad 1 - Paco (Tema 3 - Paco Is Wearing A New Suit) PDFpedropruebaNo ratings yet

- Analogy - 10 Page - 01 PDFDocument10 pagesAnalogy - 10 Page - 01 PDFrifathasan13No ratings yet

- Industrial RobotDocument32 pagesIndustrial RobotelkhawadNo ratings yet

- Biodiversity ReportDocument52 pagesBiodiversity ReportAdrian HudsonNo ratings yet

- Pyneng Readthedocs Io en LatestDocument702 pagesPyneng Readthedocs Io en LatestNgọc Duy VõNo ratings yet

- L807268EDocument1 pageL807268EsjsshipNo ratings yet