
Program Evaluation

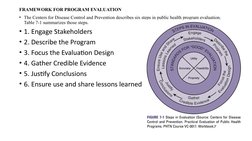

Program evaluation is important for public health initiatives because it determines their effectiveness and impact. There are two main types of evaluation: formative evaluation, which occurs during implementation to identify opportunities for improvement, and summative evaluation, which occurs after implementation to assess outcomes against objectives. Evaluations should use both quantitative and qualitative methods to provide a comprehensive assessment and to determine whether a program should be continued, expanded, or improved. Evaluation results support quality improvement and the effective use of resources.

Uploaded by Geneto Rosario

Copyright © All Rights Reserved

