Eigenvalue Vineet

Introduction

• Definition

• Why do we study it?

• Is the behavior system-based or nodal-based?

• What are the real-time applications?

• How do we calculate these values?

• What are the applications and applicable areas?

• Many definitions and new ways of looking at things

• Matrix theories and spaces: an overview

Mar 08 2002: Numerical approach for large-scale Eigenvalue problems

Understanding with Mechanical Engineering

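[Figure: (a) three beads of mass $m$ on a string under tension $T$, with segment lengths $l$, $2l$, $2l$, $l$; (b) the forces on bead 1, with transverse components $T \sin\theta_1$ and $T \sin\theta_2$; (c) the approximation for $\sin\theta$.]

Vibration of beads perpendicular to the string

For small displacements, with the triangle ABC of panel (c),

$\sin\theta = \dfrac{AB}{AC} \approx \dfrac{AB}{BC} = \dfrac{x_{i+1} - x_i}{a}$

where $a$ is the spacing along the string between beads $i$ and $i+1$.

Governing equations are

$m \dfrac{d^2 x_1}{dt^2} = -\dfrac{T x_1}{l} + \dfrac{T (x_2 - x_1)}{2l}$

$m \dfrac{d^2 x_2}{dt^2} = -\dfrac{T (x_2 - x_1)}{2l} + \dfrac{T (x_3 - x_2)}{2l}$

$m \dfrac{d^2 x_3}{dt^2} = -\dfrac{T (x_3 - x_2)}{2l} - \dfrac{T x_3}{l}$

Harmonic solutions are sought in the form $X_i = x_i e^{i \omega t}$, where $x_i$ now denotes the amplitude of bead $i$.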


Physical Interpretation

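With $\lambda = \dfrac{2 \omega^2 m l}{T}$, the amplitudes satisfy

$$\begin{pmatrix} 3 & -1 & 0 \\ -1 & 2 & -1 \\ 0 & -1 & 3 \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = \lambda \begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix}$$

The eigenvalues are $\lambda = 1, 3, 4$, with eigenvectors

$v_1 = (1, 2, 1)^T, \quad v_2 = (1, 0, -1)^T, \quad v_3 = (1, -1, 1)^T$

Physical interpretation: for $\lambda = 1$ there is a free vibration with angular frequency $\omega$ given by $\omega^2 = \dfrac{T}{2ml}$. Corresponding to this frequency of vibration there exists a mode of oscillation such that $x_1 : x_2 : x_3 = 1 : 2 : 1$.

[Figure: modes of vibration for $\lambda = 1$ (1 : 2 : 1), $\lambda = 3$ (1 : 0 : -1) and $\lambda = 4$ (1 : -1 : 1).]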
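The three-bead eigenvalues and mode shapes can be checked numerically. The sketch below is only an illustration: it assumes NumPy is available, and the helper name `angular_frequency` and the unit values for T, m and l are choices made here, not part of the slides.

```python
import numpy as np

# Nondimensional matrix of the three-bead system, with lambda = 2 w^2 m l / T.
A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])

# eigh suits a real symmetric matrix; eigenvalues are returned in ascending order.
eigenvalues, modes = np.linalg.eigh(A)

def angular_frequency(lam, T=1.0, m=1.0, l=1.0):
    """Invert lambda = 2 w^2 m l / T. T, m, l default to illustrative unit values."""
    return np.sqrt(lam * T / (2.0 * m * l))

for lam, mode in zip(eigenvalues, modes.T):
    # Rescale each mode so its first component is 1, to read off x1 : x2 : x3.
    print(f"lambda = {lam:.3f}, w = {angular_frequency(lam):.3f}, mode = {mode / mode[0]}")
```

Each column of `modes` is an eigenvector, so dividing by its first component gives the amplitude ratios directly.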


General Formulation - discussions

In many physical applications, studying the behavior of a system leads to an equation of the form

$A u = \lambda u$

This problem is called the eigenvalue problem for the matrix A.

$\lambda$ is called an eigenvalue (proper value or characteristic value) of A.

$u$ is called an eigenvector of A.

The system can be written as $(A - \lambda I) u = 0$.

To have a non-trivial solution for $u$, we need $\det(A - \lambda I) = 0$.

The degree of this polynomial in $\lambda$ equals the order of A.
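As a small numerical illustration of the determinant condition, the sketch below evaluates det(A - λI) for a 3×3 example matrix (the three-bead matrix, used here as an assumption for illustration). The function name `char_det` is hypothetical.

```python
import numpy as np

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])

def char_det(lam):
    """Evaluate det(A - lam * I), the characteristic polynomial at lam."""
    return np.linalg.det(A - lam * np.eye(A.shape[0]))

# The determinant vanishes at the eigenvalues 1, 3, 4 and nowhere else.
for lam in (1.0, 2.0, 3.0, 4.0):
    print(f"det(A - {lam} I) = {char_det(lam):+.6f}")

# At an eigenvalue, (A - lam I) u = 0 has a non-trivial solution u.
u = np.array([1.0, 2.0, 1.0])
residual = (A - 1.0 * np.eye(3)) @ u
```

For a 3×3 matrix the determinant is a cubic in λ, so it has three roots counted with multiplicity.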


Classification

A has distinct eigenvalues:

• Eigenvectors are linearly independent: semi-simple (diagonalizable) matrix

A has multiple eigenvalues:

• Eigenvectors are linearly independent: semi-simple (diagonalizable) matrix

• Eigenvectors are linearly dependent (fewer than n independent eigenvectors exist): non-semi-simple (non-diagonalizable) matrix


Spectral Approximation

Set of all eigenvalues: spectrum of A

Single Vector Iteration

• Power method

• Shifted power method

• Inverse power method

• Rayleigh quotient iteration

Jacobi method

Subspace iteration technique

Deflation Techniques

• Wielandt deflation with one vector

• Deflation with several vectors

• Schur-Wielandt deflation

• Practical deflation procedures

Projection methods

• Orthogonal projection methods

• Rayleigh-Ritz procedure

• Oblique projection methods


Power method

• It is a single vector iteration technique.

• This method generates only the dominant eigenpair $(\lambda, v)$.

• It generates the sequence of vectors $A^k v_0$, where $v_0$ is some non-zero initial vector.

This sequence of vectors, when normalized properly, converges under reasonably mild conditions to a dominant eigenvector, that is, an eigenvector associated with the eigenvalue of largest modulus.

Methodology

Start: Choose a nonzero initial vector $v_0$.

Iterate: for k = 1, 2, ... until convergence, compute

$v_k = \dfrac{1}{\alpha_k} A v_{k-1}$

where $\alpha_k$ is the component of the vector $A v_{k-1}$ which has the maximum modulus.
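The Start/Iterate scheme above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production routine: the function name `power_method`, the stopping rule on successive values of α and the test matrix are assumptions made here.

```python
import numpy as np

def power_method(A, v0, tol=1e-10, max_iter=1000):
    """Power iteration scaled by the component of largest modulus.

    Returns (alpha, v, iterations), where alpha approximates the dominant
    eigenvalue and v is scaled so that its dominant component equals 1.
    """
    v = np.asarray(v0, dtype=float)
    alpha = 0.0
    for k in range(1, max_iter + 1):
        w = A @ v
        alpha_new = w[np.argmax(np.abs(w))]   # component of maximum modulus
        if alpha_new == 0.0:
            raise ValueError("A v vanished; choose a different starting vector")
        v = w / alpha_new
        if abs(alpha_new - alpha) < tol:      # assumed stopping rule
            return alpha_new, v, k
        alpha = alpha_new
    return alpha, v, max_iter

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])
lam, v, iterations = power_method(A, [1.0, 0.0, 0.0])
print(lam, v, iterations)
```

Scaling by the largest component keeps the iterates bounded, and the scale factor itself converges to the dominant eigenvalue.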


Why and What's happening in power method

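To apply the power method, we make two assumptions.

Assumption 1: $|\lambda_1|$ is strictly greater than $|\lambda_i|$ for $i = 2, 3, \ldots, n$.

Assumption 2: A has n eigenvectors $v_1, v_2, \ldots, v_n$ (where $A v_i = \lambda_i v_i$) which are a basis for n-space.

Iterate: $v_k = A^k v_0$ for $k = 1, 2, 3, \ldots$

In view of assumption 2, $v_0$ can be expressed as

$v_0 = \alpha_1 v_1 + \alpha_2 v_2 + \cdots + \alpha_n v_n$

But $A v_i = \lambda_i v_i$, hence $A^k v_i = \lambda_i^k v_i$ for $k \ge 1$, so in view of assumption 1,

$A^k v_0 = \alpha_1 \lambda_1^k v_1 + \alpha_2 \lambda_2^k v_2 + \cdots + \alpha_n \lambda_n^k v_n$

$= \lambda_1^k \left[ \alpha_1 v_1 + \alpha_2 \left( \dfrac{\lambda_2}{\lambda_1} \right)^k v_2 + \cdots + \alpha_n \left( \dfrac{\lambda_n}{\lambda_1} \right)^k v_n \right]$

$\approx \alpha_1 \lambda_1^k v_1$ for large values of k, provided that $\alpha_1 \ne 0$, because every ratio $|\lambda_i / \lambda_1|$ is less than 1.

Since $\alpha_1 \lambda_1^k v_1$ is a scalar multiple of $v_1$, $v_k = A^k v_0$ will approach an eigenvector for the dominant eigenvalue $\lambda_1$ (i.e., $A v_k \approx \lambda_1 v_k$).

So if $v_k$ is scaled so that its dominant component is 1, then

(dominant component of $A v_k$) $\approx \lambda_1 \cdot$ (dominant component of $v_k$) $= \lambda_1$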


Shifted Power method

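Instead of iterating with A, iterate with $B = A - \sigma I$, where $\sigma$ is an arbitrary value (taken positive here).

The scalars $\sigma$ are called shifts of origin.

$(\lambda, v)$ is an eigenpair of A $\iff$ $(\lambda - \sigma, v)$ is an eigenpair of $A - \sigma I$.

Advantages

1. Fewer iterations are needed for convergence when the shift is chosen well, since the convergence rate is governed by the ratio of the two largest values of $|\lambda_i - \sigma|$.

2. Shifting does not alter the eigenvectors, but the eigenvalues are shifted by $\sigma$.

Eigenvalues of A: $\lambda_1, \lambda_2, \lambda_3, \lambda_4, \lambda_5, \ldots, \lambda_n$

Eigenvalues of $(A - \sigma I)$, $\sigma > 0$: $(\lambda_1 - \sigma), (\lambda_2 - \sigma), (\lambda_3 - \sigma), (\lambda_4 - \sigma), (\lambda_5 - \sigma), \ldots, (\lambda_n - \sigma)$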
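The effect of a shift on the iteration count can be observed with a short experiment. The sketch below is illustrative only: `power_method` is a hypothetical helper using max-component scaling, and the matrix (eigenvalues 1, 3, 4) and the shift σ = 1 are assumptions chosen so that the convergence ratio improves from 3/4 to 2/3.

```python
import numpy as np

def power_method(A, v0, tol=1e-10, max_iter=1000):
    """Power iteration scaled by the component of largest modulus."""
    v = np.asarray(v0, dtype=float)
    alpha = 0.0
    for k in range(1, max_iter + 1):
        w = A @ v
        alpha_new = w[np.argmax(np.abs(w))]
        v = w / alpha_new
        if abs(alpha_new - alpha) < tol:
            return alpha_new, v, k
        alpha = alpha_new
    return alpha, v, max_iter

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])
v0 = [1.0, 0.0, 0.0]
sigma = 1.0                                   # illustrative shift of origin

lam_plain, v_plain, iters_plain = power_method(A, v0)
mu, v_shifted, iters_shifted = power_method(A - sigma * np.eye(3), v0)
lam_shifted = mu + sigma                      # undo the shift to recover lambda

print("unshifted:", lam_plain, iters_plain)
print("shifted:  ", lam_shifted, iters_shifted)
```

Both runs return the same eigenvector; only the eigenvalue has to be shifted back by σ.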


Inverse power method-Shifted Inverse power method

The basic idea is that

$(\lambda, v)$ is an eigenpair of A $\iff$ $(1/\lambda, v)$ is an eigenpair of $A^{-1}$.

So the dominant eigenvalue of $A^{-1}$ is the reciprocal of the least dominant eigenvalue of A.

If $\lambda_n \ne 0$ and $|\lambda_n| < |\lambda_i|$ for $i \ne n$, we can find $\dfrac{1}{\lambda_n}$ and an associated $v_n$ by applying the same iterative method to $A^{-1}$.

If A is singular, we shall be unable to find $A^{-1}$, indicating $\lambda_n = 0$.

Advantages

1. Finds the least dominant eigenpair of A

2. Faster convergence rate

Shifted Inverse power method:

The same mechanism applies as in the shifted power method; the only difference is that we achieve faster convergence rates in comparison.
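A minimal sketch of (shifted) inverse iteration follows. It solves a linear system at each step instead of forming the inverse, normalizes by the 2-norm and reads the eigenvalue from the Rayleigh quotient; those three choices, the function name `inverse_power_method` and the test matrix are assumptions made here for robustness and illustration, not details taken from the slides.

```python
import numpy as np

def inverse_power_method(A, v0, sigma=0.0, tol=1e-12, max_iter=500):
    """Inverse iteration on (A - sigma I); converges to the eigenpair of A
    whose eigenvalue is closest to sigma.

    np.linalg.solve raises LinAlgError if A - sigma I is exactly singular.
    """
    A = np.asarray(A, dtype=float)
    M = A - sigma * np.eye(A.shape[0])
    v = np.asarray(v0, dtype=float)
    v = v / np.linalg.norm(v)
    lam = v @ A @ v
    for k in range(1, max_iter + 1):
        w = np.linalg.solve(M, v)        # w = (A - sigma I)^{-1} v
        v = w / np.linalg.norm(w)
        lam_new = v @ A @ v              # Rayleigh quotient estimate
        if abs(lam_new - lam) < tol:
            return lam_new, v, k
        lam = lam_new
    return lam, v, max_iter

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])
lam_min, v_min, k_plain = inverse_power_method(A, [1.0, 0.0, 0.0])
lam_mid, v_mid, k_shifted = inverse_power_method(A, [1.0, 0.0, 0.0], sigma=2.9)
print(lam_min, k_plain, lam_mid, k_shifted)
```

With σ = 0 the iteration finds the least dominant eigenvalue; with σ close to an interior eigenvalue it finds that one, typically in fewer steps.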


Rayleigh Quotient

The basic idea is to keep changing the shifts so that faster convergence occurs.

Start: Choose an initial vector $v_0$ such that $\|v_0\|_2 = 1$.

Iterate: for k = 1, 2, ..., until convergence, compute

$\sigma_k = (A v_{k-1}, v_{k-1})$

$v_k = \dfrac{1}{\alpha_k} (A - \sigma_k I)^{-1} v_{k-1}$

where $\alpha_k$ is chosen so that the 2-norm of the vector $v_k$ is one.

According to Rayleigh, if we know any eigenvector of the system, we can calculate the corresponding eigenvalue.

Suppose $v_j$ is an eigenvector we know in the system.

The basic formulation is $A v_j = \lambda_j v_j$.

Pre-multiplying by $v_j^T$ gives $v_j^T A v_j = \lambda_j v_j^T v_j$, so the corresponding eigenvalue is $\lambda_j = \dfrac{v_j^T A v_j}{v_j^T v_j}$.
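The iteration above can be sketched as follows for a real symmetric matrix. The function name `rayleigh_quotient_iteration`, the residual-based stopping test and the starting vector are assumptions for illustration. Which eigenpair the iteration converges to depends on the starting vector, so the sketch only reports the pair it finds.

```python
import numpy as np

def rayleigh_quotient_iteration(A, v0, tol=1e-10, max_iter=50):
    """Rayleigh quotient iteration for a real symmetric matrix A."""
    A = np.asarray(A, dtype=float)
    I = np.eye(A.shape[0])
    v = np.asarray(v0, dtype=float)
    v = v / np.linalg.norm(v)                 # ||v_0||_2 = 1
    for _ in range(max_iter):
        sigma = v @ A @ v                     # sigma_k = (A v_{k-1}, v_{k-1})
        if np.linalg.norm(A @ v - sigma * v) < tol:
            break                             # residual small enough: converged
        try:
            w = np.linalg.solve(A - sigma * I, v)
        except np.linalg.LinAlgError:
            break                             # shift is numerically an eigenvalue
        v = w / np.linalg.norm(w)             # alpha_k chosen so ||v_k||_2 = 1
    return v @ A @ v, v

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])
lam, v = rayleigh_quotient_iteration(A, [1.0, 0.5, 0.2])
print(lam, v)
```

Because the shift is updated at every step, a new linear system has to be solved each time, which is the price paid for the faster convergence.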


Deflation Techniques

Definition: Manipulate the system

After finding the largest eigenvalue of the system, displace it in such a way that the next largest value becomes the largest value in the system, and apply the power method again.

Wielandt deflation technique

It is a single vector deflation technique.

According to Wielandt, the deflated matrix is of the form

$A_1 = A - \sigma u_1 v^H$

where $v$ is an arbitrary vector such that $v^H u_1 = 1$ and $\sigma$ is an appropriate shift. The eigenvalues of $A_1$ are those of A, except for the eigenvalue $\lambda_1$, which is transformed to $\lambda_1 - \sigma$.

Basically, the spectrum of $A_1$ would be

$\Lambda(A_1) = \{ \lambda_1 - \sigma, \lambda_2, \lambda_3, \ldots, \lambda_p \}$
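A small sketch can confirm how the spectrum moves under Wielandt deflation. It assumes real data (so that the Hermitian transpose is a plain transpose); the function name `wielandt_deflation`, the default v = u1 / (u1ᵀu1) and the default shift σ = λ1 are illustrative choices made here.

```python
import numpy as np

def wielandt_deflation(A, lam1, u1, v=None, sigma=None):
    """Return A1 = A - sigma * u1 v^T for real data, with v^T u1 = 1."""
    u1 = np.asarray(u1, dtype=float)
    if v is None:
        v = u1 / (u1 @ u1)        # illustrative choice satisfying v^T u1 = 1
    if sigma is None:
        sigma = lam1              # illustrative shift: moves lambda_1 to zero
    return A - sigma * np.outer(u1, v)

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])

# Dominant eigenpair of A, assumed known (for example from the power method).
lam1, u1 = 4.0, np.array([1.0, -1.0, 1.0])

A1 = wielandt_deflation(A, lam1, u1)
spectrum_A = np.sort(np.linalg.eigvals(A).real)
spectrum_A1 = np.sort(np.linalg.eigvals(A1).real)
print(spectrum_A, spectrum_A1)
```

Only the dominant eigenvalue moves, so the next largest eigenvalue of A becomes the dominant one of the deflated matrix.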


Deflation with several vectors:

It uses the Schur decomposition.

The basic idea is that any vector of 2-norm one can be completed by (n - 1) additional vectors to form an orthonormal basis of $\mathbb{C}^n$.

That is achieved by writing the matrix in Schur form.

Let $q_1, q_2, q_3, \ldots, q_j$ be a set of Schur vectors associated with the eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_j$, such that $Q_j = [q_1, q_2, q_3, \ldots, q_j]$ is a matrix with orthonormal columns, whose columns form a basis of the eigenspace associated with the eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_j$.

So the generalization would be:

Let $\Sigma_j = \mathrm{Diag}(\sigma_1, \sigma_2, \ldots, \sigma_j)$.

Then the eigenvalues of the matrix $\tilde{A}_j = A - Q_j \Sigma_j Q_j^H$ are $\tilde{\lambda}_i = \lambda_i - \sigma_i$ for $i \le j$ and $\tilde{\lambda}_i = \lambda_i$ for $i > j$.


Schur-Wielandt Deflation

For $i = 0, 1, 2, \ldots, j-1$:

1. Define $\tilde{A}_i = \tilde{A}_{i-1} - \sigma_{i-1} q_{i-1} q_{i-1}^H$ (initially define $\tilde{A}_0 = A$) and compute the dominant eigenvalue $\lambda_i$ of $\tilde{A}_i$ and the corresponding eigenvector $\tilde{u}_i$.

2. Orthonormalize $\tilde{u}_i$ against $q_1, q_2, \ldots, q_{i-1}$ to get the vector $q_i$.

Practical deflation procedure:

The more general ways to compute $\lambda_2, u_2$ differ in the choice of the vector $v$:

1. $v = w_1$, the left eigenvector. The disadvantage is that both left and right eigenvectors are required; on the other hand, the right and left eigenvectors of $A_1$ are preserved.

2. $v = u_1$, which is often nearly optimal and preserves the Schur vectors.

3. Using a block of Schur vectors instead of a single vector.

Usually the steps to be followed are

1) get the vector $y = A x$

2) get the scalar $t = \sigma v^H x$

3) compute $y = y - t u_1$

The above procedure requires only that the vectors $u_1$ and $v$ be kept in memory along with the matrix A. We deflate $A_1$ again into $A_2$, and then into $A_3$, etc.

At each step of the process we have $\tilde{A}_i = \tilde{A}_{i-1} - \sigma \tilde{u}_i v_i^H$.
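The three-step product with the deflated matrix can be sketched without ever forming that matrix. In the illustration below, the function name `deflated_matvec`, the choice v = u1, the shift σ = λ1, the fixed iteration count and the test matrix are all assumptions made here. A plain power iteration on the deflated operator then picks up the second eigenvalue.

```python
import numpy as np

def deflated_matvec(A, u1, v, sigma, x):
    """Return (A - sigma * u1 v^H) x using only A, u1 and v."""
    y = A @ x                     # 1) y := A x
    t = sigma * np.vdot(v, x)     # 2) t := sigma * v^H x (vdot conjugates v)
    return y - t * u1             # 3) y := y - t u1

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])

# Dominant eigenpair, assumed already computed; u1 has unit 2-norm.
lam1 = 4.0
u1 = np.array([1.0, -1.0, 1.0]) / np.sqrt(3.0)
v = u1.copy()                     # choice v = u1, so that v^H u1 = 1

x = np.array([1.0, 0.0, 0.0])
for _ in range(100):              # fixed count keeps the sketch short
    y = deflated_matvec(A, u1, v, lam1, x)
    x = y / np.linalg.norm(y)

lam2 = x @ (A @ x)                # Rayleigh quotient with the original A
print(lam2, x)
```

Only two extra vectors are stored, which matters when A is large and sparse and an explicit rank-one update would destroy the sparsity.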


Projection methods

Suppose the matrix A is real and its dominant eigenvalues are complex, and consider the power method.

Although the usual sequence $x_{j+1} = \alpha_j A x_j$, where $\alpha_j$ is a normalizing factor, does not converge, a simple analysis shows that the subspace spanned by the last two iterates $x_{j+1}, x_j$ will contain converging approximations to the complex pair of eigenvectors. A simple projection technique onto those vectors will extract the eigenvalues and eigenvectors.

Approximate the exact eigenvector $u$ by a vector $\tilde{u}$ belonging to some subspace K of approximants, by imposing the Petrov-Galerkin condition that the residual vector of $\tilde{u}$ be orthogonal to some subspace L.

In an orthogonal projection technique, the subspace L is the same as K.

In an oblique projection technique, L is different from K and need not be related to it.


Orthogonal projection methods

Let A be an $n \times n$ matrix and K a subspace of dimension m.

Eigenvalue problem: find $u \in \mathbb{C}^n$ and $\lambda \in \mathbb{C}$ such that $A u = \lambda u$.

In an orthogonal projection technique onto the subspace K, we seek approximations $\tilde{u}$ and $\tilde{\lambda}$, with $\tilde{\lambda}$ in $\mathbb{C}$ and $\tilde{u}$ in K, such that $A \tilde{u} - \tilde{\lambda} \tilde{u} \perp K$, that is,

$(A \tilde{u} - \tilde{\lambda} \tilde{u}, v) = 0 \quad \forall v \in K$

Assume some orthonormal basis $\{v_1, v_2, \ldots, v_m\}$ of K is available and denote by V the matrix with column vectors $v_1, v_2, \ldots, v_m$. Then we can solve the approximate problem numerically by translating it into this basis. Letting $\tilde{u} = V y$, our equation becomes

$(A V y - \tilde{\lambda} V y, v_j) = 0, \quad j = 1, 2, \ldots, m$

So $y$ and $\tilde{\lambda}$ must satisfy $B_m y = \tilde{\lambda} y$ with $B_m = V^H A V$.

If we denote by $A_m$ the linear transformation of rank m defined by $A_m = P_K A P_K$, then we observe that the restriction of this operator to the subspace K is represented by the matrix $B_m$ with respect to the basis V.


What exactly is happening in orthogonal projection

Suppose $v$ is the guess vector; take two successive iterates of the power method, $v$ and $Av$.

Form the matrix X = [v | Av].

Apply the Gram-Schmidt process to obtain the QR factorization of X.

If $v$ is $n \times 1$, then $Av$ is also $n \times 1$, so X is an $n \times 2$ matrix.

Q = [q1 | q2], where q1 = v / norm(v) and q2 is Av with its projection onto q1 removed, then normalized.

Now Q has orthonormal columns.

q1 and q2 form an orthonormal basis of the subspace spanned by the two iterates, which contains approximations to the corresponding eigenvectors as the iteration converges.

Since the columns of $Q_k$ are orthonormal, the projection of a vector $v$ onto the subspace spanned by the columns of $Q_k$ is given by $Q_k Q_k^T v$. Write $v_{k+1} = A Q_k$. Since the space spanned by the columns of $v_{k+1}$ is nearly equal to the space spanned by $Q_k$, it follows that

$Q_k Q_k^T v_{k+1} \approx v_{k+1} = A Q_k$

Replacing $Q_k^T v_{k+1}$ by its diagonalization $P \Lambda P^{-1}$, we have $Q_k P \Lambda P^{-1} \approx A Q_k$; post-multiplying by P yields

$Q_k P \Lambda \approx A Q_k P$

The diagonal elements of $\Lambda$ approximate the dominant eigenvalues and the columns of $Q_k P$ approximate the eigenvectors.


Rayleigh - Ritz procedure

Step 1: Compute an orthonormal basis $\{v_i\}_{i = 1, 2, \ldots, m}$ of the subspace K. Let $V = [v_1, v_2, \ldots, v_m]$.

Step 2: Compute $B_m = V^H A V$.

Step 3: Compute the eigenvalues of $B_m$ and select the k desired ones $\tilde{\lambda}_i$, $i = 1, 2, \ldots, k$, where $k \le m$.

Step 4: Compute the eigenvectors $y_i$, $i = 1, 2, \ldots, k$, of $B_m$ associated with $\tilde{\lambda}_i$, $i = 1, 2, \ldots, k$, and the corresponding approximate eigenvectors of A, $\tilde{u}_i = V y_i$, $i = 1, 2, \ldots, k$.

The Rayleigh-Ritz procedure rests on the following fact:

If K is invariant under A, then every approximate eigenvalue / eigenvector pair obtained from the orthogonal projection method onto K is exact.

Reason: if K is invariant under A, there exist an orthonormal basis Q of K and an $m \times m$ matrix C such that $A Q = Q C$. Every eigenpair $(\lambda, y)$ of C is then such that $(\lambda, Q y)$ is an eigenpair of A, since $A Q y = Q C y = \lambda Q y$.
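The four steps can be sketched compactly with NumPy. The sketch assumes A is real symmetric (so that `numpy.linalg.eigh` applies to the projected matrix) and obtains the orthonormal basis from a QR factorization; the function name `rayleigh_ritz` and the example subspace are illustrative choices. The subspace is deliberately spanned by two eigenvectors of the test matrix, so that it is invariant and the Ritz pairs come out exact.

```python
import numpy as np

def rayleigh_ritz(A, X):
    """Rayleigh-Ritz approximation from the subspace K = span(columns of X).

    Assumes A is real symmetric or Hermitian, so that B_m is Hermitian too.
    """
    V, _ = np.linalg.qr(X)            # step 1: orthonormal basis V of K
    B = V.conj().T @ A @ V            # step 2: B_m = V^H A V
    theta, Y = np.linalg.eigh(B)      # steps 3-4: eigenpairs of B_m
    return theta, V @ Y               # Ritz values, Ritz vectors u_i = V y_i

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])

# Columns are combinations of the eigenvectors (1, 2, 1) and (1, -1, 1),
# so span(X) is invariant under A.
X = np.array([[2.0, -1.0],
              [1.0, 4.0],
              [2.0, -1.0]])

theta, U = rayleigh_ritz(A, X)
residual = np.linalg.norm(A @ U - U * theta)   # U * theta scales column i by theta_i
print(theta, residual)
```

For a subspace that is not invariant, the same function returns approximations whose residual is non-zero.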


Oblique projection method

We have two subspaces K and L and seek an approximation $\tilde{u} \in K$ and an element $\tilde{\lambda}$ of $\mathbb{C}$ that satisfy the Petrov-Galerkin condition

$((A - \tilde{\lambda} I) \tilde{u}, v) = 0 \quad \forall v \in L$

We have two bases, V of K and W of L, and we assume that these two bases are biorthogonal, that is, $(v_i, w_j) = \delta_{ij}$ or $W^H V = I$.

Writing $\tilde{u} = V y$, $B_m$ would now be defined as $B_m = W^H A V$.

A condition for such biorthogonal bases to exist is $\det(W^H V) \ne 0$.

We can now express our oblique projector as $Q_L$ such that $Q_L x \in K$ and $(x - Q_L x) \perp L$.

With this projector, the Petrov-Galerkin condition can be written as

$Q_L (A \tilde{u} - \tilde{\lambda} \tilde{u}) = 0$, or $Q_L A \tilde{u} = \tilde{\lambda} \tilde{u}$


Jacobi method

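The Jacobi method finds all the eigenvalues and eigenvectors at the same time.

The inverse of an orthogonal matrix is its transpose.

Suppose we have two matrices A and B and an orthogonal matrix U. If we can write $B = U^T A U$, then B is said to be orthogonally similar to A.

We know that every symmetric matrix is orthogonally similar to a diagonal matrix.

So the basic idea is that if A is symmetric, then there is an orthogonal matrix U such that

$U^T A U = \mathrm{diag}(\lambda_1, \lambda_2, \ldots, \lambda_n)$

Consider the orthogonal matrix

$U = \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix}$

and take the symmetric matrix $A = \begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix}$ with $a_{21} = a_{12}$. Applying the orthogonal similarity gives

$U^T A U = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix} \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix}$

$= \begin{pmatrix} a_{11} \cos^2\theta + a_{22} \sin^2\theta - a_{12} \sin 2\theta & a_{12} \cos 2\theta + \tfrac{1}{2} (a_{11} - a_{22}) \sin 2\theta \\ a_{12} \cos 2\theta + \tfrac{1}{2} (a_{11} - a_{22}) \sin 2\theta & a_{11} \sin^2\theta + a_{22} \cos^2\theta + a_{12} \sin 2\theta \end{pmatrix}$

Now if $\theta$ is chosen in such a way that $a_{12} \cos 2\theta + \tfrac{1}{2} (a_{11} - a_{22}) \sin 2\theta = 0$, the off-diagonal entries vanish. For a general pair of indices (i, j) this means

$\theta = \tfrac{1}{2} \tan^{-1} \left( \dfrac{-2 a_{ij}}{a_{ii} - a_{jj}} \right)$ if $a_{ii} \ne a_{jj}$

$\theta = \dfrac{\pi}{4}$ if $a_{ii} = a_{jj}$

Then the matrix $B = R_{ij}(\theta)^T A R_{ij}(\theta)$ will satisfy $b_{ij} = b_{ji} = 0$.

So in general, if A is an $n \times n$ matrix, we need $\dfrac{n(n-1)}{2}$ rotations to visit every off-diagonal pair once. Because a later rotation can disturb an entry that was zeroed earlier, such sweeps are repeated until the off-diagonal entries are negligible.

The diagonal entries then approximate the eigenvalues, and the corresponding eigenvectors are the corresponding columns of $U_k$.

$U_k$ can be accumulated each time we rotate.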
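The rotation scheme can be sketched as a cyclic Jacobi sweep for a real symmetric matrix. This is a minimal illustration under stated assumptions: the rotation is taken as R[i,i] = R[j,j] = cos θ, R[i,j] = sin θ, R[j,i] = -sin θ, with the angle chosen so that the (i, j) entry of RᵀAR vanishes. The function name `jacobi_eigen`, the tolerance and the sweep limit are illustrative, and full n×n rotation matrices are formed for clarity rather than efficiency.

```python
import numpy as np

def jacobi_eigen(A, tol=1e-12, max_sweeps=50):
    """Cyclic Jacobi method for a real symmetric matrix.

    Returns (eigenvalues, U), with eigenvectors in the columns of U.
    """
    A = np.array(A, dtype=float)
    n = A.shape[0]
    U = np.eye(n)
    for _ in range(max_sweeps):
        off_diagonal = np.linalg.norm(A - np.diag(np.diag(A)))
        if off_diagonal < tol:
            break
        for i in range(n - 1):              # one sweep: n(n-1)/2 index pairs
            for j in range(i + 1, n):
                if A[i, j] == 0.0:
                    continue
                if A[i, i] == A[j, j]:
                    theta = np.pi / 4.0
                else:
                    theta = 0.5 * np.arctan(-2.0 * A[i, j] / (A[i, i] - A[j, j]))
                c, s = np.cos(theta), np.sin(theta)
                R = np.eye(n)
                R[i, i] = c
                R[j, j] = c
                R[i, j] = s
                R[j, i] = -s
                A = R.T @ A @ R             # zeroes the entries (i, j) and (j, i)
                U = U @ R                   # accumulate the eigenvectors
    return np.diag(A).copy(), U

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])
values, U = jacobi_eigen(A)
print(np.sort(values))
```

The eigenvalues appear on the diagonal in no particular order, so they are sorted before printing.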


Subspace Iteration Techniques

• It is one of the most important methods in structural engineering.

Bauer's method

Take an initial system of m vectors forming the $n \times m$ matrix $X_0 = [x_1, x_2, \ldots, x_m]$ and compute $X_k = A^k X_0$. If we normalize the column vectors separately, then in typical cases each of them will converge to the same eigenvector, the one associated with the dominant eigenvalue.

The system $X_k$ will progressively lose its linear independence.

According to Bauer, we re-establish the linear independence of these vectors by a process such as QR factorization.

Algorithm

1. Start: Choose an initial system of vectors $X_0 = [x_1, x_2, \ldots, x_m]$.

2. Iterate: Until convergence do,

a) Compute $X_k = A X_{k-1}$

b) Compute the QR factorization $X_k = QR$ of $X_k$ and set $X_k := Q$
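The algorithm above can be sketched as follows. The multiply-then-QR loop follows the two steps listed; the final projection step that extracts eigenvalue estimates from the converged basis is an addition made here for illustration and assumes a real symmetric A. The function name `subspace_iteration`, the fixed iteration count and the starting block are likewise assumptions.

```python
import numpy as np

def subspace_iteration(A, X0, num_iter=200):
    """Simple subspace iteration with QR re-orthonormalization."""
    Q, _ = np.linalg.qr(np.asarray(X0, dtype=float))
    for _ in range(num_iter):
        X = A @ Q                     # a) X_k = A X_{k-1}
        Q, _ = np.linalg.qr(X)        # b) X_k = QR, then X_k := Q
    # Added step (assumes A symmetric): project A onto span(Q) for estimates.
    B = Q.T @ A @ Q
    theta, Y = np.linalg.eigh(B)
    return theta, Q @ Y

A = np.array([[3.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 3.0]])
X0 = np.array([[1.0, 0.0],
               [0.0, 1.0],
               [0.0, 0.0]])
theta, U = subspace_iteration(A, X0)
print(theta)
```

With m = 2 starting vectors the iteration captures the two dominant eigenpairs at once, instead of letting both columns collapse onto the dominant eigenvector.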


Applications and Applicable areas

Problems related to the analysis of vibrations

• usually symmetric generalized eigenvalue problems

Problems related to stability analysis

• usually generate non-symmetric matrices

• Structural dynamics

• Electrical networks

• Combustion processes

• Macroeconomics

• Normal mode techniques

• Quantum chemistry

• Chemical reactions

• Magnetohydrodynamics

Mar 08 2002 Numerical approach for large-scale E 23

igenvalue problems

You might also like

- Electronic Properties of Crystalline Solids: An Introduction to FundamentalsFrom EverandElectronic Properties of Crystalline Solids: An Introduction to FundamentalsNo ratings yet

- Ch1 Principle of Sound and VibrationDocument11 pagesCh1 Principle of Sound and VibrationTsz Chun YuNo ratings yet

- Physics Max Marks: 100 (Single Correct Answer Type) : MSR LCDocument41 pagesPhysics Max Marks: 100 (Single Correct Answer Type) : MSR LCSiddharth SinghNo ratings yet

- The Spectral Theory of Toeplitz Operators. (AM-99), Volume 99From EverandThe Spectral Theory of Toeplitz Operators. (AM-99), Volume 99No ratings yet

- BMM3553 Mechanical Vibrations: Chapter 3: Damped Vibration of Single Degree of Freedom System (Part 1)Document37 pagesBMM3553 Mechanical Vibrations: Chapter 3: Damped Vibration of Single Degree of Freedom System (Part 1)Alooy MohamedNo ratings yet

- PHYS220 - Lecture 1.2Document6 pagesPHYS220 - Lecture 1.2Savio KhouryNo ratings yet

- Planned Sequence: Examine Classical WavesDocument17 pagesPlanned Sequence: Examine Classical Waveskasun1237459No ratings yet

- ISVR6130Document600 pagesISVR6130LIM SHANYOUNo ratings yet

- 110448Document28 pages110448Balu ChanderNo ratings yet

- Mechanical vibrations damped free vibration analysisDocument16 pagesMechanical vibrations damped free vibration analysisFong Wei JunNo ratings yet

- I XT XT VXT XT T MX E N: Incident Transmitted ReflectedDocument11 pagesI XT XT VXT XT T MX E N: Incident Transmitted ReflectedsajalgiriNo ratings yet

- I XT XT VXT XT T MX E N: Incident Transmitted ReflectedDocument11 pagesI XT XT VXT XT T MX E N: Incident Transmitted ReflectedRomi ApriantoNo ratings yet

- I XT XT VXT XT T MX E N: Incident Transmitted ReflectedDocument11 pagesI XT XT VXT XT T MX E N: Incident Transmitted ReflectedAmin SanimanNo ratings yet

- 17339-28-07 Morning Maths 2022Document11 pages17339-28-07 Morning Maths 2022princekumarg013No ratings yet

- Unit IV Convolutional CodesDocument33 pagesUnit IV Convolutional Codestejal ranaNo ratings yet

- ECE 3512 Lecture 38: State Space Representations and SolutionsDocument12 pagesECE 3512 Lecture 38: State Space Representations and Solutionsahmed_nassef2004No ratings yet

- Least Squares & Pseudo InverseDocument12 pagesLeast Squares & Pseudo InversemisscomaNo ratings yet

- 9 Schrodinger EquationDocument23 pages9 Schrodinger EquationRahajengNo ratings yet

- Fourier Series Analysis of Sawtooth WaveDocument31 pagesFourier Series Analysis of Sawtooth WaveRupalNo ratings yet

- Ece 232 Discrete-Time Signals and Systems Solved Problems I: DT e T X T C and e C T XDocument4 pagesEce 232 Discrete-Time Signals and Systems Solved Problems I: DT e T X T C and e C T XistegNo ratings yet

- 2 2 T T T T M T 1 1 T 2 T 2 2 2 2 2 1 2 1 T 1 T 3 T 1 2 1! 2 2! 4 3!Document48 pages2 2 T T T T M T 1 1 T 2 T 2 2 2 2 2 1 2 1 T 1 T 3 T 1 2 1! 2 2! 4 3!AndersonHaydarNo ratings yet

- Oscillation Motion: T Cos A T XDocument10 pagesOscillation Motion: T Cos A T XNoviNo ratings yet

- QBPT Test - Ii, - 05.04.2020Document13 pagesQBPT Test - Ii, - 05.04.2020DrNaresh SahuNo ratings yet

- Teoría de Mecanismos Tema 3. VibracionesDocument18 pagesTeoría de Mecanismos Tema 3. VibracionestxabiNo ratings yet

- Fourier Series, Fourier Integral, Fourier TransformDocument29 pagesFourier Series, Fourier Integral, Fourier Transformvasu_koneti5124No ratings yet

- Objectives:: The State EquationsDocument12 pagesObjectives:: The State EquationsHasan AljabaliNo ratings yet

- Solving the Schrödinger Equation for the Infinite Square Well PotentialDocument18 pagesSolving the Schrödinger Equation for the Infinite Square Well PotentialKusio YusitoNo ratings yet

- Chapter 1: Problem Solutions: Review of Signals and SystemsDocument36 pagesChapter 1: Problem Solutions: Review of Signals and SystemsSangeet NambiNo ratings yet

- Department of Ece: Agnel Technical Educational Complex Assagao, Bardez-Goa. 403 507Document5 pagesDepartment of Ece: Agnel Technical Educational Complex Assagao, Bardez-Goa. 403 507Vinay RaiNo ratings yet

- Linear Time Varying State Equation Solutions: EE-601: Linear System TheoryDocument16 pagesLinear Time Varying State Equation Solutions: EE-601: Linear System TheorysunilsahadevanNo ratings yet

- Arc Length and Surface Area (Level 1 Solutions)Document3 pagesArc Length and Surface Area (Level 1 Solutions)vincesee85No ratings yet

- Free Vibration of Single Degree of Freedom (SDOF)Document107 pagesFree Vibration of Single Degree of Freedom (SDOF)Mahesh LohanoNo ratings yet

- Dynamic Analysis: T F KX X C X MDocument9 pagesDynamic Analysis: T F KX X C X MRed JohnNo ratings yet

- 5.5 Heat Capacity and Magnetic Susceptibility of The Ising Ferromagnet in The Mean-Field Approximation. Critical ExponentsDocument10 pages5.5 Heat Capacity and Magnetic Susceptibility of The Ising Ferromagnet in The Mean-Field Approximation. Critical ExponentsAnderson Garcia PovedaNo ratings yet

- Computational Fluid Dynamics : February 21Document37 pagesComputational Fluid Dynamics : February 21Tatenda NyabadzaNo ratings yet

- Computational Fluid Dynamics : February 28Document68 pagesComputational Fluid Dynamics : February 28Tatenda NyabadzaNo ratings yet

- Notes 3 6382 Complex IntegrationDocument46 pagesNotes 3 6382 Complex IntegrationMariam MugheesNo ratings yet

- Relativity: The Principle of Newtonian RelativityDocument13 pagesRelativity: The Principle of Newtonian RelativityNoviNo ratings yet

- ET8304 Lecture10 2022Document22 pagesET8304 Lecture10 2022Rizwan RafiqueNo ratings yet

- Vibrations of Continuous SystemsDocument27 pagesVibrations of Continuous SystemslalbaghNo ratings yet

- Lecture 1cDocument15 pagesLecture 1cYusuf GulNo ratings yet

- Physics Times March 19: Methods of Finding Time of Meeting for Oscillating BodiesDocument68 pagesPhysics Times March 19: Methods of Finding Time of Meeting for Oscillating BodiesEdney Melo100% (1)

- Introduction To ROBOTICSDocument35 pagesIntroduction To ROBOTICSVistor VondarthNo ratings yet

- Classification by EigenvaluesDocument6 pagesClassification by EigenvaluessandorNo ratings yet

- Teoría de Mecanismos Tema 3. VibracionesDocument37 pagesTeoría de Mecanismos Tema 3. VibracionestxabiNo ratings yet

- Lecture 04Document13 pagesLecture 04Shiju RamachandranNo ratings yet

- M H X S M X C: Formulas (Phys-270)Document3 pagesM H X S M X C: Formulas (Phys-270)Shaidul IkramNo ratings yet

- Week04Module03 FourierTransformsDocument13 pagesWeek04Module03 FourierTransformsrra127No ratings yet

- Lattice DynamicsDocument23 pagesLattice DynamicsFrankNo ratings yet

- MECH4450 Introduction To Finite Element MethodsDocument17 pagesMECH4450 Introduction To Finite Element MethodsAnimesh Kumar JhaNo ratings yet

- MP QM - Part 2 - 2021Document36 pagesMP QM - Part 2 - 2021sama akramNo ratings yet

- Block Diagram and Transfer FunctionsDocument20 pagesBlock Diagram and Transfer FunctionsBatuhan Mutlugil 'Duman'No ratings yet

- Yn Ayn Ayn N BXN BXN N: Recursive FiltersDocument28 pagesYn Ayn Ayn N BXN BXN N: Recursive FiltersnikshithNo ratings yet

- 3.0 Fourier SeriesDocument17 pages3.0 Fourier SeriesKarmughillaan ManimaranNo ratings yet

- GEM 601 Lecture Slides (Solving PDE by Separation of Variables)Document5 pagesGEM 601 Lecture Slides (Solving PDE by Separation of Variables)Aly Jr ArquillanoNo ratings yet

- The Mathematics of VibrationDocument22 pagesThe Mathematics of VibrationFatima NusserNo ratings yet

- State Variable Analysis: Birla Vishwakarma MahavidyalayaDocument17 pagesState Variable Analysis: Birla Vishwakarma Mahavidyalayavani100% (1)

- Free vibration of SDOF systemsDocument107 pagesFree vibration of SDOF systemssenthilcae100% (1)

- 9 IirDocument28 pages9 IirMekonen AberaNo ratings yet

- Model Order Reduction Vlsi CktsDocument28 pagesModel Order Reduction Vlsi CktsVSNo ratings yet

- 5C RV Simulation NewDocument189 pages5C RV Simulation NewVSNo ratings yet

- Digital Systems Design OverviewDocument12 pagesDigital Systems Design OverviewVSNo ratings yet

- Beamersimulating RVs PDFDocument115 pagesBeamersimulating RVs PDFVSNo ratings yet

- Vector Space Groups Rings FieldsDocument10 pagesVector Space Groups Rings FieldsVSNo ratings yet

- Coreconnect AShutosh Full PaperDocument6 pagesCoreconnect AShutosh Full PaperVSNo ratings yet

- Beamersimulating RVs PDFDocument115 pagesBeamersimulating RVs PDFVSNo ratings yet

- Model Order RedcnDocument10 pagesModel Order RedcnVSNo ratings yet

- Challenges & Implications of VLSI Architectures for Multimedia ProcessingDocument35 pagesChallenges & Implications of VLSI Architectures for Multimedia ProcessingVSNo ratings yet

- Reduced Order ModelingDocument65 pagesReduced Order ModelingVSNo ratings yet

- Ridhi Vidhan 24 Page-Shri-VidyasagarDocument24 pagesRidhi Vidhan 24 Page-Shri-VidyasagarVSNo ratings yet

- SampleChancery PDFDocument1 pageSampleChancery PDFVSNo ratings yet

- Vineet Vlsi For MutlimediaDocument2 pagesVineet Vlsi For MutlimediaVSNo ratings yet

- SVM Baysian PatilDocument7 pagesSVM Baysian PatilVSNo ratings yet

- Abstract Is CA 95Document2 pagesAbstract Is CA 95VSNo ratings yet

- Evaluating Cost and Time-to-Market Requirements of MCM DesignsDocument28 pagesEvaluating Cost and Time-to-Market Requirements of MCM DesignsVSNo ratings yet

- A Study of Low Power Design Techniques For Application Specific ProcessorsDocument2 pagesA Study of Low Power Design Techniques For Application Specific ProcessorsVSNo ratings yet

- Chancery Job AidDocument1 pageChancery Job AidFamila Sujanee SampathNo ratings yet

- 2 Sem II Mtech Ete 27 May 2017Document2 pages2 Sem II Mtech Ete 27 May 2017VSNo ratings yet

- IMAPS International Conf. On Emerging Microelectronics & Interconnection Technology 2000Document6 pagesIMAPS International Conf. On Emerging Microelectronics & Interconnection Technology 2000VSNo ratings yet

- Electric Circuits: General & Particular SolutionsDocument13 pagesElectric Circuits: General & Particular SolutionsVSNo ratings yet

- Enabling Technologies CMOS & Beyond Jan Apr 2016 IITJ PDFDocument94 pagesEnabling Technologies CMOS & Beyond Jan Apr 2016 IITJ PDFVSNo ratings yet

- 2 MTech 1 MTE 8 Feb 2017Document3 pages2 MTech 1 MTE 8 Feb 2017VSNo ratings yet

- Optimal Interconnects: Modelling and Synthesis: Vineet Sahula C.P. Ravikumar D. NagchoudhuryDocument8 pagesOptimal Interconnects: Modelling and Synthesis: Vineet Sahula C.P. Ravikumar D. NagchoudhuryVSNo ratings yet

- The Art of Calligraphy by David Harris PDFDocument128 pagesThe Art of Calligraphy by David Harris PDFamandabraunNo ratings yet

- CKT Transients N Tellegen TheoremDocument32 pagesCKT Transients N Tellegen TheoremVSNo ratings yet

- Graph Matrix Representations ExplainedDocument36 pagesGraph Matrix Representations ExplainedVSNo ratings yet

- ECE Dept. Faculty Research Metrics MNIT JaipurDocument1 pageECE Dept. Faculty Research Metrics MNIT JaipurVSNo ratings yet

- Vector Spaces OrhtogonalizationDocument31 pagesVector Spaces OrhtogonalizationVSNo ratings yet

- 1 Order TransientDocument13 pages1 Order TransientVSNo ratings yet

- Cherry Mx1A-Lxxa/B: Housing Colour: KeymoduleDocument5 pagesCherry Mx1A-Lxxa/B: Housing Colour: KeymoduleArtur MartinsNo ratings yet

- Hershey's Milk Shake ProjectDocument20 pagesHershey's Milk Shake ProjectSarvesh MundhadaNo ratings yet

- Unit 3 Secondary MarketDocument21 pagesUnit 3 Secondary MarketGhousia IslamNo ratings yet

- BN68-13792A-01 - Leaflet-Remote - QLED LS03 - MENA - L02 - 220304.0Document2 pagesBN68-13792A-01 - Leaflet-Remote - QLED LS03 - MENA - L02 - 220304.0Bikram TiwariNo ratings yet

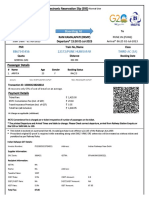

- 22172/pune Humsafar Third Ac (3A)Document2 pages22172/pune Humsafar Third Ac (3A)VISHAL SARSWATNo ratings yet

- An Overview of Penetration TestingDocument20 pagesAn Overview of Penetration TestingAIRCC - IJNSANo ratings yet

- Research Article: Deep Learning-Based Real-Time AI Virtual Mouse System Using Computer Vision To Avoid COVID-19 SpreadDocument8 pagesResearch Article: Deep Learning-Based Real-Time AI Virtual Mouse System Using Computer Vision To Avoid COVID-19 SpreadRatan teja pNo ratings yet

- HUT-A Hydraulic Universal Testing Machine 2018.6.26 PDFDocument6 pagesHUT-A Hydraulic Universal Testing Machine 2018.6.26 PDFSoup PongsakornNo ratings yet

- ChatroomsDocument4 pagesChatroomsAhmad NsNo ratings yet

- List of Private Medical PractitionersDocument7 pagesList of Private Medical PractitionersShailesh SahniNo ratings yet

- Company Profile: Our BeginningDocument1 pageCompany Profile: Our BeginningSantoshPaul CleanlandNo ratings yet

- 545-950 ThermaGuard 2021Document2 pages545-950 ThermaGuard 2021AbelNo ratings yet

- Decoled FR Sas: Account MovementsDocument1 pageDecoled FR Sas: Account Movementsnatali vasylNo ratings yet

- Bo EvansDocument37 pagesBo EvanskgrhoadsNo ratings yet

- Solution Manual For C Programming From Problem Analysis To Program Design 4th Edition Barbara Doyle Isbn 10 1285096266 Isbn 13 9781285096261Document19 pagesSolution Manual For C Programming From Problem Analysis To Program Design 4th Edition Barbara Doyle Isbn 10 1285096266 Isbn 13 9781285096261Gerald Digangi100% (32)

- BS 3921-1985 Specification For Clay Bricks PDFDocument31 pagesBS 3921-1985 Specification For Clay Bricks PDFLici001100% (4)

- Sante Worklist Server QSG 1Document25 pagesSante Worklist Server QSG 1adamas77No ratings yet

- Inorganic Growth Strategies: Mergers, Acquisitions, RestructuringDocument15 pagesInorganic Growth Strategies: Mergers, Acquisitions, RestructuringHenil DudhiaNo ratings yet

- ICAP Pakistan Final Exams Business Finance Decisions RecommendationsDocument4 pagesICAP Pakistan Final Exams Business Finance Decisions RecommendationsImran AhmadNo ratings yet

- Infotech JS2 Eclass Computer VirusDocument2 pagesInfotech JS2 Eclass Computer VirusMaria ElizabethNo ratings yet

- Simplex 4081-0002Document2 pagesSimplex 4081-0002vlaya1984No ratings yet

- XML Programming With SQL/XML and Xquery: Facto Standard For Retrieving and ExchangingDocument24 pagesXML Programming With SQL/XML and Xquery: Facto Standard For Retrieving and ExchangingdkovacevNo ratings yet

- Mid Test (OPEN BOOK) : Swiss German UniversityDocument5 pagesMid Test (OPEN BOOK) : Swiss German Universityaan nug rohoNo ratings yet

- Install Fresh & Hot Air AttenuatorsDocument3 pagesInstall Fresh & Hot Air Attenuatorsmazumdar_satyajitNo ratings yet

- Checklist Whitepaper EDocument3 pagesChecklist Whitepaper EMaria FachiridouNo ratings yet

- iC60L Circuit Breakers (Curve B, C, K, Z)Document1 pageiC60L Circuit Breakers (Curve B, C, K, Z)Diego PeñaNo ratings yet

- What Makes Online Content ViralDocument15 pagesWhat Makes Online Content ViralHoda El HALABINo ratings yet

- Sdoc 09 13 SiDocument70 pagesSdoc 09 13 SiJhojo Tesiorna FalcutilaNo ratings yet

- Udit Sharma: Summary of Skills and ExperienceDocument2 pagesUdit Sharma: Summary of Skills and ExperienceDIMPI CHOPRANo ratings yet