Numerical Optimization: Lecture Notes #24 Nonlinear Least Squares - Orthogonal Distance Regression

Summary

Orthogonal Distance Regression

Numerical Optimization

Lecture Notes #24

Nonlinear Least Squares - Orthogonal Distance Regression

Peter Blomgren,

blomgren.peter@gmail.com

Department of Mathematics and Statistics

Dynamical Systems Group

Computational Sciences Research Center

San Diego State University

San Diego, CA 92182-7720

http://terminus.sdsu.edu/

Fall 2013

Peter Blomgren, blomgren.peter@gmail.com Orthogonal Distance Regression (1/21)

Outline

1. Summary
   - Linear Least Squares
   - Nonlinear Least Squares

2. Orthogonal Distance Regression
   - Error Models
   - Weighted Least Squares / Orthogonal Distance Regression
   - ODR = Nonlinear Least Squares, Exploiting Structure

Summary: Linear Least Squares

Our study of non-linear least squares problems started with a look at linear least squares, where each residual $r_j(x)$ is linear, and the Jacobian $J$ is therefore constant. The objective of interest is

$$f(x) = \frac{1}{2}\,\|Jx + r_0\|_2^2, \qquad r_0 = r(0).$$

Solving for the stationary point, $\nabla f(x^*) = 0$, gives the normal equations

$$J^T J\, x^* = -J^T r_0.$$

We have three approaches to solving the normal equations for $x^*$, in increasing order of computational complexity and stability:

(i) Cholesky factorization of $J^T J$,

(ii) QR-factorization of $J$, and

(iii) Singular Value Decomposition of $J$.
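The three approaches can be compared side by side on a small random problem. This is a minimal NumPy sketch, assuming a full-column-rank $J$; the sizes, the random data, and all variable names are illustrative and not part of the notes.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n = 50, 3
J = rng.standard_normal((m, n))   # constant Jacobian, assumed full column rank
r0 = rng.standard_normal(m)       # residual at x = 0

# (i) Cholesky factorization of J^T J (cheapest; squares the condition number)
L = np.linalg.cholesky(J.T @ J)   # J^T J = L L^T
z = np.linalg.solve(L, -J.T @ r0)
x_chol = np.linalg.solve(L.T, z)

# (ii) QR factorization of J: J = Q R, so R x = -Q^T r0
Q, R = np.linalg.qr(J)            # reduced QR, Q is m x n
x_qr = np.linalg.solve(R, -Q.T @ r0)

# (iii) SVD of J: x = -V diag(1/s) U^T r0 (most expensive, most robust)
U, s, Vt = np.linalg.svd(J, full_matrices=False)
x_svd = -Vt.T @ ((U.T @ r0) / s)

# The gradient J^T (J x + r0) should vanish at the solution
grad = J.T @ (J @ x_qr + r0)
```

On a well-conditioned problem like this one all three solutions agree to rounding error; the differences only show up as $J$ becomes ill-conditioned.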

Summary: Nonlinear Least Squares 1 of 4

Problem: Nonlinear Least Squares

$$x^* = \arg\min_{x\in\mathbb{R}^n} [f(x)] = \arg\min_{x\in\mathbb{R}^n} \left[\frac{1}{2}\sum_{j=1}^{m} r_j(x)^2\right], \qquad m \ge n,$$

where the residuals $r_j(x)$ are of the form $r_j(x) = y_j - \phi(x; t_j)$. Here, $y_j$ are the measurements taken at the locations/times $t_j$, and $\phi(x; t_j)$ is our model.

The key approximation for the Hessian:

$$\nabla^2 f(x) = J(x)^T J(x) + \sum_{j=1}^{m} r_j(x)\,\nabla^2 r_j(x) \approx J(x)^T J(x).$$
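To see how good the approximation is when the residuals are small, the sketch below compares $J^T J$ with the full Hessian for a hypothetical two-parameter exponential model $x_0 e^{x_1 t}$. The model, the noise level, and the function names are assumptions chosen for illustration only.

```python
import numpy as np

def phi(x, t):
    # Hypothetical model: x0 * exp(x1 * t)
    return x[0] * np.exp(x[1] * t)

def residual(x, t, y):
    return y - phi(x, t)

def jacobian(x, t):
    # Row j holds d r_j / d x_i
    e = np.exp(x[1] * t)
    return np.column_stack([-e, -x[0] * t * e])

def second_order_term(x, t, y):
    # sum_j r_j(x) * Hessian(r_j)(x), written out for the model above
    e = np.exp(x[1] * t)
    r = residual(x, t, y)
    S = np.zeros((2, 2))
    S[0, 1] = S[1, 0] = np.sum(r * (-t * e))
    S[1, 1] = np.sum(r * (-x[0] * t**2 * e))
    return S

t = np.linspace(0.0, 1.0, 20)
x_true = np.array([2.0, -1.0])
rng = np.random.default_rng(1)
y = phi(x_true, t) + 1e-3 * rng.standard_normal(t.size)  # small residuals

J = jacobian(x_true, t)
H_gn = J.T @ J                                   # Gauss-Newton approximation
H_full = H_gn + second_order_term(x_true, t, y)  # exact Hessian of f
rel_err = np.linalg.norm(H_full - H_gn) / np.linalg.norm(H_full)
```

With small noise the relative difference `rel_err` is tiny; increasing the noise (larger residuals) makes the dropped term matter.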

Summary: Nonlinear Least Squares 2 of 4

Line-search algorithm: Gauss-Newton, with the subproblem:

$$\left[J(x_k)^T J(x_k)\right] p_k^{GN} = -\nabla f(x_k).$$

Guaranteed descent direction, fast convergence (as long as the Hessian approximation holds up), and equivalence to a linear least squares problem (used for efficient, stable solution).
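A minimal sketch of a Gauss-Newton iteration with a backtracking line search follows. It is not the notes' implementation: the exponential model, the Armijo constant, the tolerances, and the function names are assumptions. The step is computed from the equivalent linear least squares problem rather than from the normal equations.

```python
import numpy as np

def phi(x, t):
    # Hypothetical model: x0 * exp(x1 * t)
    return x[0] * np.exp(x[1] * t)

def residual(x, t, y):
    return y - phi(x, t)

def jacobian(x, t):
    e = np.exp(x[1] * t)
    return np.column_stack([-e, -x[0] * t * e])

def gauss_newton(x0, t, y, tol=1e-10, max_iter=50):
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        r = residual(x, t, y)
        J = jacobian(x, t)
        # Gauss-Newton step: solve min_p ||J p + r||_2 (avoids forming J^T J)
        p, *_ = np.linalg.lstsq(J, -r, rcond=None)
        # Backtracking (Armijo) line search on f = 0.5 * ||r||^2
        f0 = 0.5 * (r @ r)
        slope = (J.T @ r) @ p          # directional derivative, <= 0
        alpha = 1.0
        while alpha > 1e-8:
            r_new = residual(x + alpha * p, t, y)
            if 0.5 * (r_new @ r_new) <= f0 + 1e-4 * alpha * slope:
                break
            alpha *= 0.5
        x = x + alpha * p
        if np.linalg.norm(alpha * p) < tol:
            break
    return x

t = np.linspace(0.0, 2.0, 30)
x_true = np.array([2.0, -1.0])
y = phi(x_true, t)                     # noise-free, zero-residual problem
x_hat = gauss_newton([1.0, 0.0], t, y)
```

On this zero-residual problem the Hessian approximation is exact at the solution, which is the regime where fast local convergence is expected.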

Summary: Nonlinear Least Squares 3 of 4

Trust-region algorithm: Levenberg-Marquardt, with the subproblem:

$$p_k^{LM} = \arg\min_{p\in\mathbb{R}^n} \frac{1}{2}\,\|J(x_k)\,p + r_k\|_2^2, \quad \text{subject to } \|p\| \le \Delta_k.$$

Slight advantage over Gauss-Newton (global convergence), same local convergence properties; also (locally) equivalent to a linear least squares problem.
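In practice one rarely codes the trust-region logic by hand. As a hedged usage sketch, SciPy's `scipy.optimize.least_squares` with `method="lm"` calls a MINPACK Levenberg-Marquardt routine; the model, data, and starting point below are assumptions made for illustration.

```python
import numpy as np
from scipy.optimize import least_squares

def phi(x, t):
    # Hypothetical model: x0 * exp(x1 * t)
    return x[0] * np.exp(x[1] * t)

def residual(x, t, y):
    return y - phi(x, t)

rng = np.random.default_rng(0)
t = np.linspace(0.0, 2.0, 30)
x_true = np.array([2.0, -1.0])
y = phi(x_true, t) + 0.01 * rng.standard_normal(t.size)

# method="lm" selects the MINPACK Levenberg-Marquardt implementation;
# the Jacobian is approximated by finite differences when none is given.
sol = least_squares(residual, x0=[1.0, 0.0], args=(t, y), method="lm")
x_lm = sol.x
```

The trust-region radius is managed inside the library, so only the residual function and a starting point are supplied here.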

Summary: Nonlinear Least Squares 4 of 4

Hybrid Algorithms:

When implementing Gauss-Newton or Levenberg-Marquardt, we should implement a safeguard for the large residual case, where the Hessian approximation fails.

If, after some reasonable number of iterations, we realize that the residuals are not going to zero, then we are better off switching to a general-purpose algorithm for non-linear optimization, such as a quasi-Newton (BFGS), or Newton method.
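One possible shape for such a safeguard is sketched below. The switching rule (residual norm still above a fixed fraction of its starting value), the evaluation budget, and the helper name `hybrid_fit` are hypothetical choices, not something the notes prescribe; the sketch uses SciPy's `least_squares` and `minimize`.

```python
import numpy as np
from scipy.optimize import least_squares, minimize

def hybrid_fit(residual, x0, args=(), max_nfev=200, rel_residual_tol=0.1):
    """Run Levenberg-Marquardt first; fall back to BFGS if residuals stay large."""
    r_start = residual(np.asarray(x0, dtype=float), *args)
    sol = least_squares(residual, x0, args=args, method="lm", max_nfev=max_nfev)
    # Hypothetical "large residual" test: ||r|| has not dropped enough.
    if np.linalg.norm(sol.fun) <= rel_residual_tol * np.linalg.norm(r_start):
        return sol.x, "lm"
    # General-purpose quasi-Newton method on f(x) = 0.5 * ||r(x)||^2
    f = lambda x: 0.5 * np.sum(residual(x, *args) ** 2)
    res = minimize(f, sol.x, method="BFGS")
    return res.x, "bfgs"

model = lambda x, t: x[0] * np.exp(x[1] * t)       # hypothetical model
res_fn = lambda x, t, y: y - model(x, t)

t = np.linspace(0.0, 2.0, 30)
y_small = model([2.0, -1.0], t)                    # zero-residual data
y_large = model([2.0, -1.0], t) + np.cos(7.0 * t)  # model cannot fit the cosine

x_a, used_a = hybrid_fit(res_fn, [1.0, 0.0], args=(t, y_small))
x_b, used_b = hybrid_fit(res_fn, [1.0, 0.0], args=(t, y_large))
```

A production code would more likely monitor the residuals during the iteration instead of after it, but the control flow is the same.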

Fixed Regressor Models vs. Errors-In-Variables Models

So far we have assumed that there are no errors in the variables describing where / when the measurements are made. That is, in the data set $\{t_j, y_j\}$, where $t_j$ denote times of measurement and $y_j$ the measured values, we have assumed that the $t_j$ are exact and that the measurement errors are in $y_j$.

Under this assumption, the discrepancies between the model and the measured data are

$$\epsilon_j = y_j - \phi(x; t_j), \qquad j = 1, 2, \dots, m.$$

Next, we will take a look at the situation where we take errors in $t_j$ into account. These models are known as errors-in-variables models, and their solutions in the linear case are referred to as total least squares optimization, or in the non-linear case as orthogonal distance regression.
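For the linear case, a straight-line total least squares fit can be obtained from an SVD of the centered data. The sketch below assumes equal error scales in $t$ and $y$ and a non-vertical line; the helper name `tls_line` and the synthetic data are illustrative.

```python
import numpy as np

def tls_line(t, y):
    """Fit y = a*t + b by minimizing orthogonal distances to the line."""
    t_mean, y_mean = t.mean(), y.mean()
    # Rows are centered data points; the right singular vector belonging to
    # the smallest singular value is the normal of the best-fit line.
    A = np.column_stack([t - t_mean, y - y_mean])
    _, _, Vt = np.linalg.svd(A, full_matrices=False)
    n_t, n_y = Vt[-1]
    a = -n_t / n_y              # assumes the line is not vertical (n_y != 0)
    b = y_mean - a * t_mean
    return a, b

rng = np.random.default_rng(0)
t_true = np.linspace(0.0, 5.0, 50)
t_obs = t_true + 0.05 * rng.standard_normal(50)            # errors in t
y_obs = 2.0 * t_true + 1.0 + 0.05 * rng.standard_normal(50)  # errors in y
a_tls, b_tls = tls_line(t_obs, y_obs)
```

Unlike ordinary least squares, this fit treats both coordinates symmetrically, which is the point of the errors-in-variables model.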

Least Squares vs. Orthogonal Distance Regression

[Figure: two panels, each with a horizontal axis from 1 to 5 and a vertical axis from 0.9 to 1.4.]

Figure: (left) An illustration of how the error is measured in standard (fixed regressor) least squares optimization. (right) An example of orthogonal distance regression, where we measure the shortest distance to the model curve. [The right figure is actually not correct, why?]

Orthogonal Distance Regression

For the mathematical formulation of orthogonal distance regression we introduce perturbations (errors) $\delta_j$ for the variables $t_j$, in addition to the errors $\epsilon_j$ for the $y_j$'s.

We relate the measurements and the model in the following way

$$\epsilon_j = y_j - \phi(x; t_j + \delta_j),$$

and define the minimization problem:

$$(x^*, \delta^*) = \arg\min_{x,\delta}\; \frac{1}{2}\sum_{j=1}^{m}\left[ w_j^2\,\big(y_j - \phi(x; t_j + \delta_j)\big)^2 + d_j^2\,\delta_j^2 \right],$$

where $d$ and $w$ are two vectors of weights which denote the relative significance of the error terms.
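The objective translates almost line by line into code. In this sketch the exponential model and the function names `phi` and `odr_objective` are assumptions; only the formula itself comes from the notes.

```python
import numpy as np

def phi(x, t):
    # Hypothetical model: x0 * exp(x1 * t)
    return x[0] * np.exp(x[1] * t)

def odr_objective(x, delta, t, y, w, d):
    """0.5 * sum_j [ w_j^2 (y_j - phi(x; t_j + delta_j))^2 + d_j^2 delta_j^2 ]."""
    eps = y - phi(x, t + delta)
    return 0.5 * np.sum(w**2 * eps**2 + d**2 * delta**2)

m = 10
t = np.linspace(0.0, 1.0, m)
x = np.array([2.0, -1.0])
w = np.ones(m)
d = np.full(m, 0.5)

# Data generated with known shifts in t and no error in y
delta_true = 0.01 * np.arange(m)
y = phi(x, t + delta_true)

f_shifted = odr_objective(x, delta_true, t, y, w, d)   # only the delta term remains
f_fixed = odr_objective(x, np.zeros(m), t, y, w, d)    # fixed-regressor objective
```

Setting all $\delta_j = 0$ recovers the ordinary weighted least squares objective, so the fixed-regressor model is the special case in which the perturbations are not allowed to move.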

Orthogonal Distance Regression: The Weights

The weight-vectors $d$ and $w$ must either be supplied by the modeler, or estimated in some clever way.

If all the weights are the same, $w_j = d_j = C$, then each term in the sum is simply (up to the constant factor $C^2$) the squared shortest distance between the point $(t_j, y_j)$ and the curve $\phi(x; t)$ (as illustrated in the previous figure).

In order to get the orthogonal-looking figure, I set $w_j = 1/0.5$ and $d_j = 1/4$, thus adjusting for the different scales in the $t$- and $y$-directions.

The shortest path between the point and the curve will be normal (orthogonal) to the curve at the point of intersection.

We can think of the scaling (weighting) as adjusting for measuring time in fortnights, seconds, milli-seconds, micro-seconds, or nano-seconds...
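The orthogonality claim can be checked numerically for a single data point. The sketch below assumes equal weights $w_j = d_j = 1$, a hypothetical curve $\phi(s) = s^2$ with the model parameters held fixed, and an arbitrary data point; it uses SciPy's `minimize_scalar` to find the best shift.

```python
import numpy as np
from scipy.optimize import minimize_scalar

phi = lambda s: s**2        # hypothetical model curve, parameters held fixed
dphi = lambda s: 2.0 * s    # derivative of the curve

t_j, y_j = 1.0, 0.5         # one data point that is not on the curve

# With w_j = d_j = 1 the j-th term is the squared Euclidean distance from
# (t_j, y_j) to the curve point (t_j + delta, phi(t_j + delta)).
dist2 = lambda delta: (y_j - phi(t_j + delta))**2 + delta**2
delta_star = minimize_scalar(dist2).x

s = t_j + delta_star
connector = np.array([s - t_j, phi(s) - y_j])   # data point -> foot point
tangent = np.array([1.0, dphi(s)])              # curve tangent at the foot point
dot = connector @ tangent                       # should be (numerically) zero
```

With unequal weights the same computation minimizes a scaled distance, and the connecting segment is orthogonal only in the rescaled coordinates.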

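Orthogonal Distance Regression: In Terms of Residuals $r_j$

By identifying the $2m$ residuals

$$r_j(x, \delta) = \begin{cases} w_j\,\big[y_j - \phi(x; t_j + \delta_j)\big], & j = 1, 2, \dots, m, \\ d_{j-m}\,\delta_{j-m}, & j = (m+1), (m+2), \dots, 2m, \end{cases}$$

we can rewrite the optimization problem

$$(x^*, \delta^*) = \arg\min_{x,\delta}\; \frac{1}{2}\sum_{j=1}^{m}\left[ w_j^2\,\big(y_j - \phi(x; t_j + \delta_j)\big)^2 + d_j^2\,\delta_j^2 \right],$$

in terms of the $2m$-vector $r(x, \delta)$:

$$(x^*, \delta^*) = \arg\min_{x,\delta}\; \frac{1}{2}\sum_{j=1}^{2m} r_j(x, \delta)^2 = \arg\min_{x,\delta}\; \frac{1}{2}\,\|r(x, \delta)\|_2^2.$$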

Orthogonal Distance Regression → Least Squares

If we take a cold hard stare at the expression

$$(x^*, \delta^*) = \arg\min_{x,\delta}\; \frac{1}{2}\sum_{j=1}^{2m} r_j(x, \delta)^2 = \arg\min_{x,\delta}\; \frac{1}{2}\,\|r(x, \delta)\|_2^2,$$

we realize that this is now a standard (nonlinear) least squares problem with $2m$ terms and $(n + m)$ unknowns $\{x, \delta\}$.

We can use any of the techniques we have previously explored for the solution of the nonlinear least squares problem.

However, a straightforward implementation of these strategies may prove to be quite expensive, since the number of residuals has doubled to $2m$ and the number of unknowns has grown from $n$ to $(n + m)$. Recall that usually $m \gg n$, so this is a drastic growth of the problem.
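The straightforward approach can be sketched by stacking the unknowns into one vector and handing the $2m$ residuals to a generic solver. The model, noise levels, unit weights, and names below are assumptions; `scipy.optimize.least_squares` is used with its default settings and knows nothing about the special structure of the problem.

```python
import numpy as np
from scipy.optimize import least_squares

def phi(x, t):
    # Hypothetical model: x0 * exp(x1 * t)
    return x[0] * np.exp(x[1] * t)

def odr_residual(z, t, y, w, d, n):
    # z stacks the n model parameters and the m perturbations delta
    x, delta = z[:n], z[n:]
    return np.concatenate([w * (y - phi(x, t + delta)), d * delta])

rng = np.random.default_rng(2)
m, n = 40, 2
t_true = np.linspace(0.0, 2.0, m)
x_true = np.array([2.0, -1.0])
t_obs = t_true + 0.02 * rng.standard_normal(m)               # errors in t
y_obs = phi(x_true, t_true) + 0.02 * rng.standard_normal(m)  # errors in y

w = np.ones(m)
d = np.ones(m)
z0 = np.concatenate([[1.0, 0.0], np.zeros(m)])   # start with delta = 0

sol = least_squares(odr_residual, z0, args=(t_obs, y_obs, w, d, n))
x_odr, delta_odr = sol.x[:n], sol.x[n:]
```

Here the solver works with a dense $2m \times (n+m)$ finite-difference Jacobian, which is exactly the cost growth described above.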

Orthogonal Distance Regression → Least Squares: Problem Size

[Figure: diagram with the dimension labels $m$, $n$, $(n+m)$, and $2m$.]

Figure: We recast ODR as a much larger standard nonlinear least squares problem.

Standard LSQ-solution via QR/SVD costs $\sim O(mn^2)$, for $m \ge n$; the recast problem slows down by a factor of $2(1 + m/n)^2$.
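The slowdown factor follows from a one-line calculation, assuming the cost of the dense solve scales as (rows) times (columns) squared with the same constant for both problems. The numbers in the example are illustrative.

```latex
\frac{\text{cost}_{\text{ODR}}}{\text{cost}_{\text{LSQ}}}
  \sim \frac{(2m)\,(n+m)^2}{m\,n^2}
  = 2\left(\frac{n+m}{n}\right)^2
  = 2\left(1 + \frac{m}{n}\right)^2,
\qquad \text{e.g. } m = 1000,\ n = 10:\quad 2\,(1 + 100)^2 = 20402.
```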

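ODR → Least Squares: Exploiting Structure

Fortunately we can save a lot of work by exploiting the structure of the Jacobian of the least squares problem originating from the orthogonal distance regression: many entries are zero!

$$\frac{\partial r_j}{\partial \delta_i} = \frac{\partial\, \big(w_j\,[y_j - \phi(x; t_j + \delta_j)]\big)}{\partial \delta_i} = 0, \qquad i, j \le m,\; i \ne j,$$

$$\frac{\partial r_j}{\partial \delta_i} = \frac{\partial\, [d_{j-m}\,\delta_{j-m}]}{\partial \delta_i} = \begin{cases} 0, & i \ne (j-m),\; j > m, \\ d_{j-m}, & i = (j-m),\; j > m, \end{cases}$$

$$\frac{\partial r_j}{\partial x_i} = \frac{\partial\, [d_{j-m}\,\delta_{j-m}]}{\partial x_i} = 0, \qquad i = 1, 2, \dots, n,\; j > m.$$

Let

$$v_j = \frac{\partial\, \big(w_j\,[y_j - \phi(x; t_j + \delta_j)]\big)}{\partial \delta_j},$$

and let $D = \mathrm{diag}(d)$, and $V = \mathrm{diag}(v)$, then we can write the Jacobian of the residual function in matrix form...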

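ODR → Least Squares: The Jacobian

We now have

$$J(x, \delta) = \begin{bmatrix} \hat{J} & V \\ 0 & D \end{bmatrix},$$

where $D$ and $V$ are $m \times m$ diagonal matrices, $D = \mathrm{diag}(d)$, and $V = \mathrm{diag}(v)$, and $\hat{J}$ is the $m \times n$ matrix defined by

$$\hat{J} = \left[ \frac{\partial\, \big(w_j\,(y_j - \phi(x; t_j + \delta_j))\big)}{\partial x_i} \right], \qquad j = 1, 2, \dots, m, \quad i = 1, 2, \dots, n.$$

We can now use this matrix in e.g. the Levenberg-Marquardt algorithm...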
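As a sanity check on the block structure, the sketch below assembles the Jacobian from its blocks for a hypothetical exponential model and compares it with central finite differences of the stacked residual. The model, its derivatives, the weights, and all function names are assumptions.

```python
import numpy as np

def phi(x, s):
    # Hypothetical model: x0 * exp(x1 * s)
    return x[0] * np.exp(x[1] * s)

def odr_residual(x, delta, t, y, w, d):
    return np.concatenate([w * (y - phi(x, t + delta)), d * delta])

def odr_jacobian(x, delta, t, w, d):
    s = t + delta
    e = np.exp(x[1] * s)
    # J_hat[j, i] = d/dx_i of w_j (y_j - phi(x; s_j))
    J_hat = -w[:, None] * np.column_stack([e, x[0] * s * e])
    # v_j = d/d(delta_j) of w_j (y_j - phi(x; s_j)) = -w_j * dphi/ds
    V = np.diag(-w * x[0] * x[1] * e)
    D = np.diag(d)
    m, n = t.size, x.size
    return np.block([[J_hat, V], [np.zeros((m, n)), D]])

def fd_jacobian(x, delta, t, y, w, d, h=1e-6):
    # Central differences with respect to the stacked unknowns (x, delta)
    z = np.concatenate([x, delta])
    n = x.size
    cols = []
    for k in range(z.size):
        e_k = np.zeros_like(z)
        e_k[k] = h
        zp, zm = z + e_k, z - e_k
        rp = odr_residual(zp[:n], zp[n:], t, y, w, d)
        rm = odr_residual(zm[:n], zm[n:], t, y, w, d)
        cols.append((rp - rm) / (2.0 * h))
    return np.column_stack(cols)

rng = np.random.default_rng(3)
m = 5
t = np.linspace(0.0, 1.0, m)
x = np.array([2.0, -1.0])
delta = 0.05 * rng.standard_normal(m)
y = rng.standard_normal(m)
w = np.full(m, 2.0)
d = np.full(m, 0.25)

J = odr_jacobian(x, delta, t, w, d)
J_fd = fd_jacobian(x, delta, t, y, w, d)
```

Only $\hat{J}$ is dense; the other three blocks are diagonal or zero, so an implementation would store two vectors instead of the $m \times m$ matrices built here for the comparison.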

ODR → Least Squares: The Jacobian Structure

[Figure: the $2m \times (n+m)$ Jacobian, with the dimensions $m$, $n$, $(n+m)$, and $2m$ marked.]

Figure: If we exploit the structure of the Jacobian, the problem is still somewhat tractable.

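ODR → Least Squares → Levenberg-Marquardt 1 of 2

If we partition the step vector $p$, and the residual vector $r$ into

$$p = \begin{bmatrix} p_x \\ p_\delta \end{bmatrix}, \qquad r = \begin{bmatrix} r_1 \\ r_2 \end{bmatrix},$$

where $p_x \in \mathbb{R}^n$, $p_\delta \in \mathbb{R}^m$, and $r_1, r_2 \in \mathbb{R}^m$, then e.g. we can write the Levenberg-Marquardt subproblem in partitioned form

$$\begin{bmatrix} \hat{J}^T \hat{J} + \lambda I_n & \hat{J}^T V \\ V \hat{J} & V^2 + D^2 + \lambda I_m \end{bmatrix} \begin{bmatrix} p_x \\ p_\delta \end{bmatrix} = -\begin{bmatrix} \hat{J}^T r_1 \\ V r_1 + D r_2 \end{bmatrix}.$$

Since the $(2, 2)$-block $V^2 + D^2 + \lambda I_m$ is diagonal, we can eliminate the $p_\delta$ variables from the system...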

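ODR → Least Squares → Levenberg-Marquardt 2 of 2

$$\begin{bmatrix} \hat{J}^T \hat{J} + \lambda I_n & \hat{J}^T V \\ V \hat{J} & V^2 + D^2 + \lambda I_m \end{bmatrix} \begin{bmatrix} p_x \\ p_\delta \end{bmatrix} = -\begin{bmatrix} \hat{J}^T r_1 \\ V r_1 + D r_2 \end{bmatrix}$$

$$p_\delta = -\left[ V^2 + D^2 + \lambda I_m \right]^{-1} \Big[ (V r_1 + D r_2) + V \hat{J}\, p_x \Big]$$

This leads to the $n \times n$-system $A\, p_x = b$, where

$$A = \hat{J}^T \hat{J} + \lambda I_n - \hat{J}^T V \left[ V^2 + D^2 + \lambda I_m \right]^{-1} V \hat{J},$$

$$b = -\hat{J}^T r_1 + \hat{J}^T V \left[ V^2 + D^2 + \lambda I_m \right]^{-1} \big( V r_1 + D r_2 \big).$$

Hence, the total cost of finding the LM-step is only marginally more expensive than for the standard least squares problem.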
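The elimination can be written compactly by storing the diagonal matrices as vectors. The sketch below is one way to code it, checked against a direct solve of the full $(n+m) \times (n+m)$ system; the function name `lm_step_eliminated`, the random test data, and the value of $\lambda$ are assumptions.

```python
import numpy as np

def lm_step_eliminated(J_hat, v, d, r1, r2, lam):
    """Solve the partitioned LM system by eliminating p_delta.

    v and d hold the diagonals of V and D.
    """
    n = J_hat.shape[1]
    w_diag = v**2 + d**2 + lam          # diagonal of V^2 + D^2 + lam * I_m
    g2 = v * r1 + d * r2                # V r1 + D r2
    VJ = v[:, None] * J_hat             # V J_hat, without forming V
    A = J_hat.T @ J_hat + lam * np.eye(n) - VJ.T @ (VJ / w_diag[:, None])
    b = -J_hat.T @ r1 + VJ.T @ (g2 / w_diag)
    p_x = np.linalg.solve(A, b)         # only an n x n solve
    p_delta = -(g2 + VJ @ p_x) / w_diag # back-substitution, O(m n) work
    return p_x, p_delta

rng = np.random.default_rng(4)
m, n, lam = 30, 3, 0.1
J_hat = rng.standard_normal((m, n))
v = rng.standard_normal(m)
d = rng.uniform(0.5, 2.0, m)
r1 = rng.standard_normal(m)
r2 = rng.standard_normal(m)

p_x, p_delta = lm_step_eliminated(J_hat, v, d, r1, r2, lam)

# Reference: assemble the full Jacobian and solve (J^T J + lam I) p = -J^T r
J = np.block([[J_hat, np.diag(v)], [np.zeros((m, n)), np.diag(d)]])
r = np.concatenate([r1, r2])
p_full = np.linalg.solve(J.T @ J + lam * np.eye(n + m), -J.T @ r)
```

Apart from forming $\hat{J}^T \hat{J}$, every extra operation is a diagonal scaling, which is why the step costs about the same as in the fixed-regressor problem.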

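ODR → LSQ ($2m \times (n + m)$) → Levenberg-Marquardt → LSQ ($m \times n$)

The derived system may be ill-conditioned since we have formed a modified version of the normal equations, $\hat{J}^T \hat{J}$ + "stuff"... With some work we can recast it as an $m \times n$ linear least squares problem

$$p_x = \arg\min_{p}\; \|\tilde{A}\, p - \tilde{b}\|_2,$$

where

$$\tilde{A} = \hat{J} + \lambda\,[\hat{J}^T]^\dagger - V \left[ V^2 + D^2 + \lambda I_m \right]^{-1} V \hat{J},$$

$$\tilde{b} = -r_1 + V \left[ V^2 + D^2 + \lambda I_m \right]^{-1} \big( V r_1 + D r_2 \big).$$

Here the mystery factor $[\hat{J}^T]^\dagger$ is the pseudo-inverse of $\hat{J}^T$. Expressed in terms of the (reduced) QR-factorization $QR = \hat{J}$, we have

$$\hat{J}^T = R^T Q^T, \qquad [\hat{J}^T]^\dagger = Q R^{-T},$$

since $R^T Q^T Q R^{-T} = I_n$, while $Q R^{-T} R^T Q^T = Q Q^T$ acts as the identity on the range of $\hat{J}$.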
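The pseudo-inverse identity, and the relation between this formulation and the $n \times n$ system of the previous slide, can be checked numerically. The sketch below assumes a full-column-rank $\hat{J}$ and a reduced QR factorization; the random data and variable names are illustrative. It verifies only the algebraic identities $\hat{J}^T \tilde{A} = A$ and $\hat{J}^T \tilde{b} = b$, not the conditioning claim.

```python
import numpy as np

rng = np.random.default_rng(5)
m, n, lam = 12, 3, 0.1
J_hat = rng.standard_normal((m, n))     # assumed full column rank
v = rng.standard_normal(m)              # diagonal of V
d = rng.uniform(0.5, 2.0, m)            # diagonal of D
r1 = rng.standard_normal(m)
r2 = rng.standard_normal(m)

# Reduced QR: Q is m x n with orthonormal columns, R is n x n upper triangular
Q, R = np.linalg.qr(J_hat)
pinv_JT = Q @ np.linalg.inv(R).T        # Q R^{-T}

w_diag = v**2 + d**2 + lam              # diagonal of V^2 + D^2 + lam * I_m
g2 = v * r1 + d * r2                    # V r1 + D r2

# m x n least squares form
A_tilde = J_hat + lam * pinv_JT - (v**2 / w_diag)[:, None] * J_hat
b_tilde = -r1 + v * g2 / w_diag

# n x n system obtained by eliminating p_delta
VJ = v[:, None] * J_hat
A = J_hat.T @ J_hat + lam * np.eye(n) - VJ.T @ (VJ / w_diag[:, None])
b = -J_hat.T @ r1 + VJ.T @ (g2 / w_diag)
```

Multiplying the $m \times n$ quantities by $\hat{J}^T$ from the left reproduces $A$ and $b$, which is how the two formulations are linked.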

Software and References

MINPACK: Implements the Levenberg-Marquardt algorithm. Available for free from http://www.netlib.org/minpack/.

ODRPACK: Implements the orthogonal distance regression algorithm. Available for free from http://www.netlib.org/odrpack/.

Other: The NAG (Numerical Algorithms Group) library and HSL (formerly the Harwell Subroutine Library) provide several robust nonlinear least squares implementations.

GvL: Golub and Van Loan's Matrix Computations, 4th edition (chapters 5-6), has a comprehensive discussion on orthogonalization and least squares, explaining in gory detail much of the linear algebra (e.g. the SVD and QR-factorization) we swept under the rug.
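ODRPACK can also be reached from Python: the `scipy.odr` module is a wrapper around it. The usage sketch below assumes a SciPy version that ships `scipy.odr`; the model function, synthetic data, and starting guess are illustrative, and default (unit) weights are used.

```python
import numpy as np
from scipy import odr

def model(beta, t):
    # scipy.odr calls the model as f(beta, x); hypothetical exponential model
    return beta[0] * np.exp(beta[1] * t)

rng = np.random.default_rng(6)
t_true = np.linspace(0.0, 2.0, 40)
beta_true = np.array([2.0, -1.0])
t_obs = t_true + 0.02 * rng.standard_normal(t_true.size)   # errors in t
y_obs = model(beta_true, t_true) + 0.02 * rng.standard_normal(t_true.size)

data = odr.Data(t_obs, y_obs)                 # unweighted data set
fit = odr.ODR(data, odr.Model(model), beta0=[1.0, 0.0]).run()
beta_odr = fit.beta                           # estimated model parameters
```

The returned object also carries the estimated perturbations of the independent variable, so both halves of the unknown vector $(x, \delta)$ are available after the fit.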

Peter Blomgren, blomgren.peter@gmail.com Orthogonal Distance Regression (21/21)

You might also like

- A-level Maths Revision: Cheeky Revision ShortcutsFrom EverandA-level Maths Revision: Cheeky Revision ShortcutsRating: 3.5 out of 5 stars3.5/5 (8)

- Differential Quadrature MethodDocument13 pagesDifferential Quadrature MethodShannon HarrisNo ratings yet

- A Scaled Gradient Projection Method For Constrained Image DeblurringDocument28 pagesA Scaled Gradient Projection Method For Constrained Image DeblurringBogdan PopaNo ratings yet

- Understanding Vector Calculus: Practical Development and Solved ProblemsFrom EverandUnderstanding Vector Calculus: Practical Development and Solved ProblemsNo ratings yet

- Bad GeometryDocument5 pagesBad GeometryMilica PopovicNo ratings yet

- Distance Regression by Gauss-Newton-type Methods and Iteratively Re-Weighted Least-SquaresDocument15 pagesDistance Regression by Gauss-Newton-type Methods and Iteratively Re-Weighted Least-SquaresMárcioBarbozaNo ratings yet

- Undergraduate EconometricDocument34 pagesUndergraduate EconometricAcho JieNo ratings yet

- Supporting Random Wave Models A QuantumDocument7 pagesSupporting Random Wave Models A QuantumAlejandro SaenzNo ratings yet

- Development of Empirical Models From Process Data: - An Attractive AlternativeDocument27 pagesDevelopment of Empirical Models From Process Data: - An Attractive AlternativeJamel CayabyabNo ratings yet

- Gravitational Waves From An Axi-Symmetric Source in The Nonsymmetric Gravitational TheoryDocument26 pagesGravitational Waves From An Axi-Symmetric Source in The Nonsymmetric Gravitational TheoryEduardo Salgado EnríquezNo ratings yet

- Adaptive Simulation of Turbulent Flow Past A Full Car Model: Niclas Jansson Johan Hoffman Murtazo NazarovDocument7 pagesAdaptive Simulation of Turbulent Flow Past A Full Car Model: Niclas Jansson Johan Hoffman Murtazo NazarovLuiz Fernando T. VargasNo ratings yet

- Nonlinear Programming UnconstrainedDocument182 pagesNonlinear Programming UnconstrainedkeerthanavijayaNo ratings yet

- Society For Industrial and Applied MathematicsDocument12 pagesSociety For Industrial and Applied MathematicsluisNo ratings yet

- BASIC ITERATIVE METHODS FOR SOLVING LINEAR SYSTEMSDocument33 pagesBASIC ITERATIVE METHODS FOR SOLVING LINEAR SYSTEMSradoevNo ratings yet

- Numerical Study of Nonlinear Sine-Gordon Equation by Using The Modified Cubic B-Spline Differential Quadrature MethodDocument15 pagesNumerical Study of Nonlinear Sine-Gordon Equation by Using The Modified Cubic B-Spline Differential Quadrature Methodvarma g s sNo ratings yet

- P 1963 Marquardt AN ALGORITHM FOR LEAST-SQUARES ESTIMATION OF NONLINEAR PARAMETERS PDFDocument12 pagesP 1963 Marquardt AN ALGORITHM FOR LEAST-SQUARES ESTIMATION OF NONLINEAR PARAMETERS PDFrobertomenezessNo ratings yet

- Yang Gobbert PDFDocument19 pagesYang Gobbert PDFSadek AhmedNo ratings yet

- A Finite Element Approach To Burgers' Equation: J. Caldwell and P. WanlessDocument5 pagesA Finite Element Approach To Burgers' Equation: J. Caldwell and P. WanlesschrissbansNo ratings yet

- Power System State Estimation Based On Nonlinear ProgrammingDocument6 pagesPower System State Estimation Based On Nonlinear ProgrammingBrenda Naranjo MorenoNo ratings yet

- Optimize Statistical Process Control with Variable SamplingDocument29 pagesOptimize Statistical Process Control with Variable SamplingjuanivazquezNo ratings yet

- Beam On Elastic FoundationDocument8 pagesBeam On Elastic Foundationgorakdias50% (2)

- Application of The Upper and Lower-Bound Theorems To Three-Dimensional Stability of SlopesDocument8 pagesApplication of The Upper and Lower-Bound Theorems To Three-Dimensional Stability of SlopesJeanOscorimaCelisNo ratings yet

- SIO239 Conductivity of The Deep Earth Iterative Inverse ModelingDocument9 pagesSIO239 Conductivity of The Deep Earth Iterative Inverse Modelingforscribd1981No ratings yet

- Mujeeb Ur Rehman and Umer Saeed: C 2015 Korean Mathematical SocietyDocument28 pagesMujeeb Ur Rehman and Umer Saeed: C 2015 Korean Mathematical SocietyVe LopiNo ratings yet

- Module 8: Numerical Methods: TopicsDocument20 pagesModule 8: Numerical Methods: TopicsMáximo A. SánchezNo ratings yet

- An Adaptive Nonlinear Least-Squares AlgorithmDocument21 pagesAn Adaptive Nonlinear Least-Squares AlgorithmOleg ShirokobrodNo ratings yet

- Mirror Descent and Nonlinear Projected Subgradient Methods For Convex OptimizationDocument9 pagesMirror Descent and Nonlinear Projected Subgradient Methods For Convex OptimizationfurbyhaterNo ratings yet

- Restricted Delaunay Triangulations and Normal Cycle: David Cohen-Steiner Jean-Marie MorvanDocument10 pagesRestricted Delaunay Triangulations and Normal Cycle: David Cohen-Steiner Jean-Marie MorvanCallMeChironNo ratings yet

- Discretization of EquationDocument14 pagesDiscretization of Equationsandyengineer13No ratings yet

- Nonlinear Least Squares Optimization and Data Fitting MethodsDocument20 pagesNonlinear Least Squares Optimization and Data Fitting MethodsMeriska ApriliadaraNo ratings yet

- Augmentad LagrangeDocument45 pagesAugmentad LagrangeMANOEL BERNARDES DE JESUSNo ratings yet

- Heat Equation in Partial DimensionsDocument27 pagesHeat Equation in Partial DimensionsRameezz WaajidNo ratings yet

- Nonlinear curve fitting with the Levenberg-Marquardt methodDocument15 pagesNonlinear curve fitting with the Levenberg-Marquardt methodelmayi385No ratings yet

- Julio Garralon, Francisco Rus and Francisco R. Villatora - Radiation in Numerical Compactons From Finite Element MethodsDocument6 pagesJulio Garralon, Francisco Rus and Francisco R. Villatora - Radiation in Numerical Compactons From Finite Element MethodsPomac232No ratings yet

- 6-A Prediction ProblemDocument31 pages6-A Prediction ProblemPrabhjot KhuranaNo ratings yet

- A Sharp Version of Mahler's Inequality For Products of PolynomialsDocument13 pagesA Sharp Version of Mahler's Inequality For Products of PolynomialsLazar MihailNo ratings yet

- The Levenberg-Marquardt Method For Nonlinear Least Squares Curve-Fitting ProblemsDocument15 pagesThe Levenberg-Marquardt Method For Nonlinear Least Squares Curve-Fitting Problemsbooksreader1No ratings yet

- Vector Arithmetic and GeometryDocument10 pagesVector Arithmetic and GeometryrodwellheadNo ratings yet

- Computation of Parameters in Linear Models - Linear RegressionDocument26 pagesComputation of Parameters in Linear Models - Linear RegressioncegarciaNo ratings yet

- Notes For Experimental Physics: Dimensional AnalysisDocument12 pagesNotes For Experimental Physics: Dimensional AnalysisJohn FerrarNo ratings yet

- The Conjugate Gradient Method: Tom LycheDocument23 pagesThe Conjugate Gradient Method: Tom Lychett186No ratings yet

- Ecole Polytechnique: Centre de Math Ematiques Appliqu EES Umr Cnrs 7641Document22 pagesEcole Polytechnique: Centre de Math Ematiques Appliqu EES Umr Cnrs 7641harshal161987No ratings yet

- Application of GDQM in Solving Burgers' EquationsDocument7 pagesApplication of GDQM in Solving Burgers' EquationssamuelcjzNo ratings yet

- Numerical Solution of 2D Heat EquationDocument25 pagesNumerical Solution of 2D Heat Equationataghassemi67% (3)

- Computers and Mathematics With Applications: Maddu Shankar, S. SundarDocument11 pagesComputers and Mathematics With Applications: Maddu Shankar, S. SundarAT8iNo ratings yet

- Finite Difference MethodsDocument15 pagesFinite Difference MethodsRami Mahmoud BakrNo ratings yet

- Claims Reserving Using Tweedie'S Compound Poisson Model: BY Ario ÜthrichDocument16 pagesClaims Reserving Using Tweedie'S Compound Poisson Model: BY Ario ÜthrichAdamNo ratings yet

- Tolerances in Geometric Constraint ProblemsDocument16 pagesTolerances in Geometric Constraint ProblemsVasudeva Singh DubeyNo ratings yet

- A Simple Method For Calculating Clebsch-Gordan CoefficientsDocument11 pagesA Simple Method For Calculating Clebsch-Gordan CoefficientsAna Maria Rodriguez VeraNo ratings yet

- International Journal of C Numerical Analysis and Modeling Computing and InformationDocument7 pagesInternational Journal of C Numerical Analysis and Modeling Computing and Informationtomk2220No ratings yet

- Accurate and Efficient Evaluation of Modal Green's FunctionsDocument12 pagesAccurate and Efficient Evaluation of Modal Green's Functionsbelacqua2No ratings yet

- Least Squares Approximations of Power Series: 1 20 10.1155/IJMMS/2006/53474Document21 pagesLeast Squares Approximations of Power Series: 1 20 10.1155/IJMMS/2006/53474MS schNo ratings yet

- Cam17 12Document17 pagesCam17 12peter panNo ratings yet

- Torsion of Orthotropic Bars With L-Shaped or Cruciform Cross-SectionDocument15 pagesTorsion of Orthotropic Bars With L-Shaped or Cruciform Cross-SectionRaquel CarmonaNo ratings yet

- Seismic Source Inversion Using Discontinuous Galerkin Methods A Bayesian ApproachDocument16 pagesSeismic Source Inversion Using Discontinuous Galerkin Methods A Bayesian ApproachJuan Pablo Madrigal CianciNo ratings yet

- KIL1005: Numerical Methods For Engineering: Semester 2, Session 2019/2020 7 May 2020Document16 pagesKIL1005: Numerical Methods For Engineering: Semester 2, Session 2019/2020 7 May 2020Safayet AzizNo ratings yet

- Directional Secant Method For Nonlinear Equations: Heng-Bin An, Zhong-Zhi BaiDocument14 pagesDirectional Secant Method For Nonlinear Equations: Heng-Bin An, Zhong-Zhi Baisureshpareth8306No ratings yet

- MCMC BriefDocument69 pagesMCMC Briefjakub_gramolNo ratings yet

- Comentarios RevisoresDocument3 pagesComentarios RevisoresemwlupeNo ratings yet

- TeoriaDispersion PDFDocument22 pagesTeoriaDispersion PDFPedro Luis CarroNo ratings yet

- Switch Mode RF Amplifier 02Document5 pagesSwitch Mode RF Amplifier 02Henry TseNo ratings yet

- 2329Document57 pages2329emwlupeNo ratings yet

- Switch Mode RF Amplifier 02Document5 pagesSwitch Mode RF Amplifier 02Henry TseNo ratings yet

- PSD Sos SurveyDocument28 pagesPSD Sos SurveyemwlupeNo ratings yet

- SosDocument10 pagesSosemwlupeNo ratings yet

- QRDocument8 pagesQRemwlupeNo ratings yet

- Antenna Synthesis: Given A Radiation Pattern, Find The Required Current Distribution To Realize ItDocument15 pagesAntenna Synthesis: Given A Radiation Pattern, Find The Required Current Distribution To Realize ItemwlupeNo ratings yet

- UCT APM M2 U1 - TP Leadership QuestionnaireDocument4 pagesUCT APM M2 U1 - TP Leadership QuestionnaireLincolyn MoyoNo ratings yet

- RADEMAKER) Sophrosyne and The Rhetoric of Self-RestraintDocument392 pagesRADEMAKER) Sophrosyne and The Rhetoric of Self-RestraintLafayers100% (2)

- Block Ice Machine Bk50tDocument6 pagesBlock Ice Machine Bk50tWisermenNo ratings yet

- Technical Report Writing For Ca2 ExaminationDocument6 pagesTechnical Report Writing For Ca2 ExaminationAishee DuttaNo ratings yet

- Agricrop9 ModuleDocument22 pagesAgricrop9 ModuleMaria Daisy ReyesNo ratings yet

- EPFO Declaration FormDocument4 pagesEPFO Declaration FormSiddharth PednekarNo ratings yet

- Professional Industrial Engineering Program: Technical EnglishDocument15 pagesProfessional Industrial Engineering Program: Technical EnglishFabio fernandezNo ratings yet

- 3.4 Linearization of Nonlinear State Space Models: 1 F X Op 1 F X Op 2 F U Op 1 F U Op 2Document3 pages3.4 Linearization of Nonlinear State Space Models: 1 F X Op 1 F X Op 2 F U Op 1 F U Op 2Ilija TomicNo ratings yet

- SMAN52730 - Wiring Diagram B11RaDocument58 pagesSMAN52730 - Wiring Diagram B11RaJonathan Nuñez100% (1)

- Telstra Strategic Issues and CEO Leadership Briefing PaperDocument16 pagesTelstra Strategic Issues and CEO Leadership Briefing PaperIsabel Woods100% (1)

- Electrical Tools, Eq, Sup and MatDocument14 pagesElectrical Tools, Eq, Sup and MatXylene Lariosa-Labayan100% (2)

- Economics Not An Evolutionary ScienceDocument17 pagesEconomics Not An Evolutionary SciencemariorossiNo ratings yet

- Cesp 105 - Foundation Engineering and Retaining Wall Design Lesson 11. Structural Design of Spread FootingDocument7 pagesCesp 105 - Foundation Engineering and Retaining Wall Design Lesson 11. Structural Design of Spread FootingJadeNo ratings yet

- Speed Control Methods of 3-Phase Induction MotorsDocument3 pagesSpeed Control Methods of 3-Phase Induction MotorsBenzene diazonium saltNo ratings yet

- Test Bank For Close Relations An Introduction To The Sociology of Families 4th Canadian Edition McdanielDocument36 pagesTest Bank For Close Relations An Introduction To The Sociology of Families 4th Canadian Edition Mcdanieldakpersist.k6pcw4100% (44)

- GE Café™ "This Is Really Big" RebateDocument2 pagesGE Café™ "This Is Really Big" RebateKitchens of ColoradoNo ratings yet

- MCC-2 (Intermediate & Finishing Mill)Document17 pagesMCC-2 (Intermediate & Finishing Mill)Himanshu RaiNo ratings yet

- Herbarium Specimen Preparation and Preservation GuideDocument9 pagesHerbarium Specimen Preparation and Preservation GuideJa sala DasNo ratings yet

- Sa Sem Iv Assignment 1Document2 pagesSa Sem Iv Assignment 1pravin rathodNo ratings yet

- Blood Smear PreparationDocument125 pagesBlood Smear PreparationKim RuizNo ratings yet

- Develop, Implement and Maintain WHS Management System Task 2Document4 pagesDevelop, Implement and Maintain WHS Management System Task 2Harry Poon100% (1)

- Bi006008 00 02 - Body PDFDocument922 pagesBi006008 00 02 - Body PDFRamon HidalgoNo ratings yet

- Talento 371/372 Pro Talento 751/752 Pro: Installation and MountingDocument1 pageTalento 371/372 Pro Talento 751/752 Pro: Installation and MountingFareeha IrfanNo ratings yet

- In2it: A System For Measurement of B-Haemoglobin A1c Manufactured by BIO-RADDocument63 pagesIn2it: A System For Measurement of B-Haemoglobin A1c Manufactured by BIO-RADiq_dianaNo ratings yet

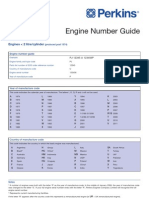

- Perkins Engine Number Guide PP827Document6 pagesPerkins Engine Number Guide PP827Muthu Manikandan100% (1)

- Executive CommitteeDocument7 pagesExecutive CommitteeMansur ShaikhNo ratings yet

- RallinAneil 2019 CHAPTER2TamingQueer DreadsAndOpenMouthsLiDocument10 pagesRallinAneil 2019 CHAPTER2TamingQueer DreadsAndOpenMouthsLiyulianseguraNo ratings yet

- Challan FormDocument2 pagesChallan FormSingh KaramvirNo ratings yet

- Manual HandlingDocument14 pagesManual Handlingkacang mete100% (1)

- L. M. Greenberg - Architects of The New Sorbonne. Liard's Purpose and Durkheim's RoleDocument19 pagesL. M. Greenberg - Architects of The New Sorbonne. Liard's Purpose and Durkheim's Rolepitert90No ratings yet