Professional Documents

Culture Documents

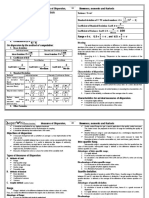

Testing Difference of Means

Uploaded by bo

Dependent Means

There are two possible cases when testing two population means: the dependent case and the independent case. Most books treat the independent case first, but I'm putting the dependent case first because it follows immediately from the test for a single population mean in the previous chapter.

The Mean of the Difference:

The idea with the dependent case is to create a new variable, D, which is the difference between the paired values. You will then be testing the mean of this new variable.

Here are some steps to help you carry out the hypothesis test:

1. Write down the original claim in simple terms. For example: After > Before.

2. Move everything to one side: After - Before > 0.

3. Call the difference you have on the left side D: D = After - Before > 0.

4. Convert to proper notation: $\mu_D > 0$.

5. Compute the new variable D, and be sure to follow the order you defined in step 3. Do not simply take the smaller away from the larger. From this point, you can think of having a new set of values. Technically, they are called D, but you can think of them as x. The original values from the two samples can be discarded.

6. Find the mean and standard deviation of the variable D. Use these as the values in the t-test from chapter 9.
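
The steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming the chapter 9 test is the usual one-sample t statistic, t = (mean of D - hypothesized mean) / (standard deviation of D / sqrt(n)). The function name `paired_t_statistic` and the sample data are made up for the example and do not come from the text.

```python
import math
import statistics


def paired_t_statistic(before, after, mu0=0.0):
    """Return (mean_d, sd_d, t, df) for the paired differences D = after - before.

    Assumes the one-sample t statistic; mu0 is the hypothesized mean of D.
    """
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")

    # Step 5: compute D in the order fixed in step 3 (After - Before),
    # never "larger minus smaller".
    d = [a - b for a, b in zip(after, before)]
    n = len(d)

    # Step 6: mean and sample standard deviation of D.
    mean_d = statistics.mean(d)
    sd_d = statistics.stdev(d)

    # One-sample t statistic applied to D, with n - 1 degrees of freedom.
    t = (mean_d - mu0) / (sd_d / math.sqrt(n))
    return mean_d, sd_d, t, n - 1


# Hypothetical paired measurements, for illustration only.
before = [10, 12, 9, 11, 13]
after = [12, 14, 10, 13, 14]

mean_d, sd_d, t, df = paired_t_statistic(before, after)
print(f"mean of D = {mean_d:.3f}, sd of D = {sd_d:.3f}, t = {t:.3f}, df = {df}")
```

Once D has been computed, the original two samples play no further role: everything downstream uses only the list `d`.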

Independent Means

Sums and Differences of Independent Variables

Independent variables can be combined to form new variables. The mean and variance of the combination can be found from the means and the variances of the original variables.

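| Combination of Variables | In English (Melodic Mathematics) |
| --- | --- |
| $\mu_{x+y} = \mu_x + \mu_y$ | The mean of a sum is the sum of the means. |
| $\mu_{x-y} = \mu_x - \mu_y$ | The mean of a difference is the difference of the means. |
| $\sigma^2_{x+y} = \sigma^2_x + \sigma^2_y$ | The variance of a sum is the sum of the variances. |
| $\sigma^2_{x-y} = \sigma^2_x + \sigma^2_y$ | The variance of a difference is the sum of the variances. |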
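
The four rules can be checked exactly on a small example. The sketch below assumes two independent variables that each take a few equally likely values, so that every (x, y) pair is equally likely; the values and the helper names `mean` and `variance` are chosen only for illustration.

```python
from fractions import Fraction
from itertools import product
import math


def mean(values):
    """Population mean of equally likely integer values, as an exact fraction."""
    return Fraction(sum(values), len(values))


def variance(values):
    """Population variance of equally likely values, as an exact fraction."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)


# Hypothetical independent variables: every (x, y) pair is equally likely.
x_values = [1, 2, 3]
y_values = [0, 4]
pairs = list(product(x_values, y_values))

sums = [x + y for x, y in pairs]
diffs = [x - y for x, y in pairs]

# Means: sum of the means, difference of the means.
print(mean(sums) == mean(x_values) + mean(y_values))    # True
print(mean(diffs) == mean(x_values) - mean(y_values))   # True

# Variances: the sum of the variances in both cases.
print(variance(sums) == variance(x_values) + variance(y_values))   # True
print(variance(diffs) == variance(x_values) + variance(y_values))  # True

# Standard deviations do not add.
sd_diff = math.sqrt(variance(diffs))
sd_added = math.sqrt(variance(x_values)) + math.sqrt(variance(y_values))
print(math.isclose(sd_diff, sd_added))  # False
```

Exact fractions are used so the equalities hold without rounding. The rules for the variances rely on the independence assumption; the rules for the means do not.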

The Difference of the Means:

Since we are combining two variables by subtraction, the important rules from the table above are that the mean of the difference is the difference of the means and the variance of the difference is the sum of the variances.

It is important to note that it is the variances that add, not the standard deviations: the variance of the difference is the sum of the variances, but the standard deviation of the difference is not the sum of the standard deviations. When we go to find the standard error, we must combine variances to do so. You're probably also wondering why the variance of the difference is the sum of the variances instead of the difference of the variances. Since the values are squared, the negative sign associated with the second variable becomes positive, so the variances are added. Also, a variance can't be negative, and the difference of two variances could be.
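
As a rough sketch of what "combine variances" means for the standard error, the snippet below assumes the usual unpooled form for two independent samples, in which the variance of each sample mean is s²/n and the two are added before taking the square root. The function name `standard_error_of_difference` and the data are hypothetical, and the text may go on to use a different (for example, pooled) formula.

```python
import math
import statistics


def standard_error_of_difference(sample_1, sample_2):
    """Standard error of (mean_1 - mean_2) for two independent samples.

    Assumes the unpooled form: add the variances of the two sample means,
    then take the square root of the total.
    """
    var_1 = statistics.variance(sample_1)  # sample variance, n - 1 divisor
    var_2 = statistics.variance(sample_2)
    return math.sqrt(var_1 / len(sample_1) + var_2 / len(sample_2))


# Hypothetical independent samples, for illustration only.
sample_1 = [2, 4, 6, 8]
sample_2 = [1, 3, 5]

se = standard_error_of_difference(sample_1, sample_2)

# The tempting but incorrect approach: add the two standard errors directly.
se_wrong = (statistics.stdev(sample_1) / math.sqrt(len(sample_1))
            + statistics.stdev(sample_2) / math.sqrt(len(sample_2)))

print(f"combining variances: {se:.4f}")
print(f"adding standard deviations (incorrect): {se_wrong:.4f}")
```

Adding the standard deviations overstates the spread of the difference here, which is why the variances are combined first and the square root is taken last.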
