
Determining the Right Sample Size for an MSA Study

Laura Lancaster and Chris Gotwalt

JMP Research & Development

SAS Institute

Discovery Summit Europe 2017

Laura Lancaster (SAS Institute) Discovery Summit 2017 1 / 33

Outline

1 Measurement Systems Analysis

2 Previous Study

3 Current Study Design

4 Results

Two Factors Crossed

Two Factors Nested

5 Conclusions

Measurement Systems Analysis (MSA)

An MSA study is a designed experiment that helps determine how much measurement variation contributes to overall process variation.

These studies use random effects models to estimate variance components that assess the sources of variation in the measurement process.

The variance components are typically estimated using one of three methods:

- Average and Range Method
- Expected Mean Squares (EMS)
- Restricted Maximum Likelihood (REML)

Problem: These methods can produce negative variance component estimates, which do not make sense in MSA studies.

Typical Solution: Set negative variance components to zero.

Some practitioners were not happy with zeroed variance components either!
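To make the negative-estimate problem concrete, here is a minimal Python sketch of the EMS (ANOVA) estimator for the simplest case: a one-way random effects model in which each part is measured r times. It is an illustration under that simplified model, not the Variability platform's implementation; the function name and the data are hypothetical.

```python
import numpy as np

def ems_one_way(y):
    """EMS (ANOVA) variance components for y with shape (parts, replicates).

    Assumed model: y_ij = mu + P_i + e_ij, with
    E[MS_between] = var_error + r * var_part and E[MS_within] = var_error.
    """
    a, r = y.shape
    part_means = y.mean(axis=1)
    ms_between = r * np.sum((part_means - y.mean()) ** 2) / (a - 1)
    ms_within = np.sum((y - part_means[:, None]) ** 2) / (a * (r - 1))
    var_part = (ms_between - ms_within) / r  # nothing stops this from going negative
    return var_part, ms_within

# Hypothetical data: the part means are identical, so the within-part spread
# dominates and the raw part-variance estimate is negative.
y = np.array([[10.0, 12.0],
              [11.0, 11.0],
              [12.0, 10.0]])
raw_part, error = ems_one_way(y)
zeroed_part = max(raw_part, 0.0)  # the "typical solution": truncate at zero
print(raw_part, error, zeroed_part)  # about -0.667, 1.333, 0.0
```

Here MS_between is 0 and MS_within is 4/3, so the EMS part variance is (0 - 4/3)/2 = -2/3; truncating it to zero is exactly the step some practitioners objected to.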

MSA - Bayesian Estimate Method

New Solution: We found a Bayesian estimation method that produces strictly positive variance components using a non-informative prior.

We generalized Portnoy and Sahai's modified Jeffreys prior and implemented it in JMP's Variability platform.

JMP's default behavior is to use the REML estimates if no variance components have been zeroed and to use the Bayesian estimates otherwise. We will refer to this as the Hybrid method.
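The Hybrid selection rule can be sketched as a small decision function. This is a hypothetical helper written only to illustrate the rule as stated on the slide; it does not compute either set of estimates, and the component names and numbers are made up.

```python
def hybrid_estimates(reml, bayes):
    """Choose between REML and Bayesian variance components (hypothetical helper).

    reml, bayes: dicts mapping component name -> estimate for the same model.
    If any REML component was zeroed, report the Bayesian estimates;
    otherwise report the REML estimates.
    """
    if any(value <= 0.0 for value in reml.values()):
        return "Bayesian", dict(bayes)
    return "REML", dict(reml)

# Hypothetical numbers: REML zeroed the operator component.
reml = {"operator": 0.0, "part": 4.8, "residual": 0.6}
bayes = {"operator": 0.02, "part": 4.7, "residual": 0.6}
method, components = hybrid_estimates(reml, bayes)
print(method, components)  # Bayesian {'operator': 0.02, 'part': 4.7, 'residual': 0.6}
```

Because the switch is all-or-nothing, a single zeroed component replaces every reported component with its Bayesian counterpart, not just the zeroed one.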

Previous Study

1 Compared the bias and variability of the Bayesian and Hybrid estimates to those of the REML estimates.

2 Compared each estimation method's ability to correctly classify measurement systems using:

- Don Wheeler's Evaluating the Measurement Process (EMP) method
- Automotive Industry Action Group's (AIAG) Gauge R&R method

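EMP Classification System

Intraclass Correlation Coefficient (ICC), ρ: the ratio of product variance to total variance,

$$\rho = \frac{\sigma_p^2}{\sigma_p^2 + \sigma_e^2} = \frac{\sigma_p^2}{\sigma_x^2}$$

where $\sigma_p^2$ is the product (part) variance, $\sigma_e^2$ is the measurement error variance, and $\sigma_x^2 = \sigma_p^2 + \sigma_e^2$ is the total variance.

EMP Classifications:

Classification   ρ̂             Probability of Warning*
First Class      0.80 - 1.00   0.99 - 1.00
Second Class     0.50 - 0.80   0.88 - 0.99
Third Class      0.20 - 0.50   0.40 - 0.88
Fourth Class     0.00 - 0.20   0.03 - 0.40

* Probability of a warning for a $3\sigma_p$ shift within 10 subgroups using Test 1.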
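The ICC formula and the EMP cut points can be combined into a short classification sketch. The function names are hypothetical, and the table does not say which class receives a value exactly on a boundary (0.80, 0.50, 0.20); the sketch assumes the higher class.

```python
def icc(var_part, var_error):
    """Intraclass correlation: product variance over total variance."""
    return var_part / (var_part + var_error)

def emp_class(rho):
    """Map an ICC estimate to an EMP class using the table's cut points.

    Assumption: a value exactly on a boundary goes to the higher class.
    """
    if rho >= 0.80:
        return "First Class"
    if rho >= 0.50:
        return "Second Class"
    if rho >= 0.20:
        return "Third Class"
    return "Fourth Class"

# Part variance 5 and measurement error variance 0.5 give rho = 5 / 5.5, about 0.909.
print(emp_class(icc(5.0, 0.5)))  # First Class
```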

AIAG's Classification System

AIAG uses Percent Gauge R&R (%GRR) to classify the health of a measurement system:

$$\%\mathrm{GRR} = 100\sqrt{\frac{\hat\sigma_e^2}{\hat\sigma_p^2 + \hat\sigma_e^2}} = 100\,\frac{\hat\sigma_e}{\hat\sigma_x} = 100\sqrt{1 - \hat\rho}$$

AIAG Classifications:

Classification   %GRR         ρ̂
Acceptable       0% - 10%     0.99 - 1.00
Marginal         10% - 30%    0.91 - 0.99
Unacceptable     30% - 100%   0.00 - 0.91
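The %GRR formula and the AIAG cut points translate directly into code. This is a hedged sketch with hypothetical function names; the table does not specify whether exactly 10% or 30% falls in the lower or higher class, so assigning the boundary to the better class is an assumption.

```python
import math

def percent_grr(var_part, var_error):
    """%GRR = 100 * sigma_e / sigma_x = 100 * sqrt(1 - rho)."""
    return 100.0 * math.sqrt(var_error / (var_part + var_error))

def aiag_class(grr):
    """Map %GRR to an AIAG class (boundary handling is an assumption)."""
    if grr <= 10.0:
        return "Acceptable"
    if grr <= 30.0:
        return "Marginal"
    return "Unacceptable"

# A system with rho = 0.96 has %GRR = 100 * sqrt(0.04) = 20%.
grr = 100.0 * math.sqrt(1.0 - 0.96)
print(round(grr, 6), aiag_class(grr))  # 20.0 Marginal
```

The square root explains why the AIAG ρ̂ cut points (0.99 and 0.91) sit so much closer to 1 than the %GRR cut points suggest: 10% and 30% correspond to 1 - ρ̂ of 0.01 and 0.09.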

Previous Simulation Study

Three typical MSA designs:

- Two Factors Crossed (balanced) with 3 operators, 10 parts, 3 replications
- Two Factors Nested (balanced) with 3 operators, 20 parts, 2 replications
- Three Factors Staggered Nested (highly unbalanced) with 120 measurements: performance was very bad!

Previous Study Results

Bias: REML and Hybrid estimates are generally less biased than Bayesian estimates.

Variability: Bayesian estimates almost always have smaller RMSE and standard deviations than REML and Hybrid estimates.

EMP classifications:

- Were generally reasonable for all methods and designs, but we thought they could be improved by increasing the sample size.
- The worst case was 40% incorrect classification by all methods for a two factors crossed design with a class 3 system.

AIAG classifications:

- Were generally good for two factors crossed designs but very poor for two factors nested designs, especially for acceptable systems.
- We hoped that increasing the sample size would improve these classifications.
- The worst case was 100% incorrect classification by the Bayesian method for the two factors nested design with an acceptable system.
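The bias and variability comparisons above summarize each method's estimates across simulation runs. A minimal sketch of those summaries for one variance component follows; the function name is hypothetical, and the slides do not say whether the standard deviation divides by n or n - 1 (n - 1 is assumed here).

```python
import numpy as np

def summarize_estimates(estimates, true_value):
    """Bias, standard deviation, and RMSE of simulated estimates of one component."""
    est = np.asarray(estimates, dtype=float)
    bias = est.mean() - true_value
    sd = est.std(ddof=1)  # sample standard deviation (n - 1 divisor, an assumption)
    # RMSE measures spread around the TRUE value, so it combines bias and variance:
    # RMSE^2 = bias^2 + (population variance of the estimates).
    rmse = np.sqrt(np.mean((est - true_value) ** 2))
    return bias, sd, rmse

bias, sd, rmse = summarize_estimates([4.0, 5.0, 6.0, 7.0], 5.0)
print(bias, sd, rmse)
```

Because RMSE folds in bias, a somewhat biased estimator (such as the Bayesian one) can still have the smaller RMSE when its variance is much smaller, which is consistent with the pattern reported above.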

Current Research - Sample Size

How does sample size affect our ability to estimate the variance components and to classify systems with the EMP and AIAG methods?

Simulation Study Design

Studied 2 Factors Crossed and 2 Factors Nested designs.

Range of bad to good measurement systems (using ICC as the metric):

- ICC values in the middle of the EMP classifications: 0.1, 0.35, 0.65, 0.9
- ICC values in the middle of AIAG's top 2 classifications (%GRR of 20% and 5%): 0.96 and 0.9975

Part variance value: 5

250 simulations
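Because ICC = σp² / (σp² + σe²), fixing the part variance at 5 determines the total measurement error variance needed for each target ICC. The slides do not spell this step out; the sketch below assumes it is how the error variance was set, and the function name is hypothetical.

```python
PART_VARIANCE = 5.0  # part variance used in the study

def error_variance_for_icc(rho, var_part=PART_VARIANCE):
    """Solve rho = var_part / (var_part + var_error) for var_error."""
    return var_part * (1.0 - rho) / rho

for rho in (0.1, 0.35, 0.65, 0.9, 0.96, 0.9975):
    print(f"ICC {rho:<7} -> error variance {error_variance_for_icc(rho):.4f}")
```

The error variance shrinks quickly as the ICC approaches 1: it is 45 at ICC 0.1 but only about 0.0125 at ICC 0.9975, which is the regime where the acceptable-system AIAG classifications are made.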

Simulation Study Design

We used JSL (JMP Scripting Language) in JMP Pro 13 to run the simulations.

We used the new JMP Pro 13 Simulate function, which makes simulating statistics in a JMP report very easy.

We called the following estimation methods in the Variability platform:

- REML
- Bayesian (Portnoy-Sahai)
- Hybrid (JMP default setting): if any variance components are zeroed ⇒ Bayesian estimates; otherwise ⇒ REML estimates.

Two Factors Crossed Design

Balanced design:

- Number of operators: 3, 6, 9, 12
- Number of parts: 5, 10, 15
- Number of replications: 2, 3

Measurement error variance ($\sigma_e^2$) breakdown:

- Operator variance = 0.45 $\sigma_e^2$
- Operator*Part variance = 0.1 $\sigma_e^2$
- Residual variance = 0.45 $\sigma_e^2$
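One cell of the crossed simulation can be sketched in Python. This is a hedged illustration, not the authors' JSL: it uses EMS (ANOVA) estimates with negative values set to zero rather than JMP's REML, Bayesian, or Hybrid estimates, and the function names, the seed, and the chosen cell (10 parts, 3 operators, 3 replications, target ICC 0.65) are hypothetical. It also assumes the estimated ICC puts all three error components in the denominator.

```python
import numpy as np

def simulate_crossed(n_parts, n_ops, n_reps, var_part, var_e, rng):
    """One balanced crossed data set with shape (parts, operators, replicates).

    var_e is split 0.45 / 0.10 / 0.45 into operator, operator*part, and residual.
    """
    part = rng.normal(0.0, np.sqrt(var_part), size=(n_parts, 1, 1))
    op = rng.normal(0.0, np.sqrt(0.45 * var_e), size=(1, n_ops, 1))
    op_part = rng.normal(0.0, np.sqrt(0.10 * var_e), size=(n_parts, n_ops, 1))
    resid = rng.normal(0.0, np.sqrt(0.45 * var_e), size=(n_parts, n_ops, n_reps))
    return part + op + op_part + resid

def ems_crossed(y):
    """EMS estimates for the two-factor crossed random model, negatives set to zero."""
    p, o, r = y.shape
    grand = y.mean()
    m_part = y.mean(axis=(1, 2))
    m_op = y.mean(axis=(0, 2))
    m_cell = y.mean(axis=2)
    ms_part = o * r * np.sum((m_part - grand) ** 2) / (p - 1)
    ms_op = p * r * np.sum((m_op - grand) ** 2) / (o - 1)
    inter = m_cell - m_part[:, None] - m_op[None, :] + grand
    ms_inter = r * np.sum(inter ** 2) / ((p - 1) * (o - 1))
    ms_resid = np.sum((y - m_cell[:, :, None]) ** 2) / (p * o * (r - 1))
    raw = {
        "part": (ms_part - ms_inter) / (o * r),
        "operator": (ms_op - ms_inter) / (p * r),
        "operator*part": (ms_inter - ms_resid) / r,
        "residual": ms_resid,
    }
    return {k: max(v, 0.0) for k, v in raw.items()}

def icc_hat(est):
    """Estimated ICC: part variance over part plus all measurement error components."""
    var_e = est["operator"] + est["operator*part"] + est["residual"]
    return est["part"] / (est["part"] + var_e)

rng = np.random.default_rng(2017)
var_part = 5.0
var_e = var_part * (1 - 0.65) / 0.65  # target ICC 0.65, an EMP Second Class system
n_sims = 250
hits = 0
for _ in range(n_sims):
    y = simulate_crossed(10, 3, 3, var_part, var_e, rng)
    if 0.50 <= icc_hat(ems_crossed(y)) < 0.80:  # classified as Second Class
        hits += 1
print(f"Second Class recovered in {100 * hits / n_sims:.1f}% of runs")
```

Looping this over the grid of operators, parts, replications, and target ICCs gives percent-correct tables of the kind summarized in the following slides, although the numbers will differ from the study's because the estimator differs.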

Two Factors Crossed - EMP Classifications (figures)

Two Factors Crossed - AIAG Classifications (figures)

Two Factors Crossed - Summary

EMP Classifications

- All methods classify correctly at about the same rate.
- Increasing the number of parts helps the most, except for very bad systems (class 4).

AIAG Classifications

- All methods classify correctly at about the same rate.
- Increasing the number of parts helps the most. Increasing the number of operators does not have much impact.

Recommendation: Use more than 3 operators (especially for EMP classifications) and at least 10 parts.

Two Factors Nested Design

Balanced design:

- Number of operators: 3, 6, 9, 12, 15
- Number of parts: 5, 10, 15, 20, 25
- Number of replications: 2, 3

Measurement error variance ($\sigma_e^2$) breakdown:

- Operator variance = 0.5 $\sigma_e^2$
- Residual variance = 0.5 $\sigma_e^2$
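In the nested design each part is measured by only one operator, so the operator component has to be separated from part-to-part variation. The sketch below again uses EMS (ANOVA) estimates rather than JMP's methods, assumes the listed number of parts is per operator (the slides do not say), and uses hypothetical names and seed. Note that the operator estimate is the difference of two mean squares that both contain the part variance.

```python
import numpy as np

def ems_nested(y):
    """EMS variance components for parts nested within operators.

    y has shape (operators, parts per operator, replicates). Assumed model:
    y_ijk = mu + O_i + P_j(i) + e_ijk, with E[MS_resid] = s2_e,
    E[MS_part] = s2_e + r*s2_p, E[MS_op] = s2_e + r*s2_p + n*r*s2_o.
    Returns (raw estimates, estimates with negatives set to zero).
    """
    a, n, r = y.shape
    grand = y.mean()
    m_op = y.mean(axis=(1, 2))   # operator means
    m_cell = y.mean(axis=2)      # part-within-operator means
    ms_op = n * r * np.sum((m_op - grand) ** 2) / (a - 1)
    ms_part = r * np.sum((m_cell - m_op[:, None]) ** 2) / (a * (n - 1))
    ms_resid = np.sum((y - m_cell[:, :, None]) ** 2) / (a * n * (r - 1))
    raw = {
        "operator": (ms_op - ms_part) / (n * r),
        "part": (ms_part - ms_resid) / r,
        "residual": ms_resid,
    }
    return raw, {k: max(v, 0.0) for k, v in raw.items()}

# Hypothetical acceptable-system cell: part variance 5, target ICC 0.9975,
# measurement error variance split 0.5 / 0.5 between operator and residual.
rng = np.random.default_rng(7)
a, n, r = 3, 10, 2
var_part = 5.0
var_e = var_part * (1 - 0.9975) / 0.9975
y = (rng.normal(0.0, np.sqrt(0.5 * var_e), size=(a, 1, 1))     # operator effects
     + rng.normal(0.0, np.sqrt(var_part), size=(a, n, 1))      # parts nested in operators
     + rng.normal(0.0, np.sqrt(0.5 * var_e), size=(a, n, r)))  # replicate error
raw, est = ems_nested(y)
print(raw["operator"], est)
```

With the part variance about 400 times the total error variance, the tiny operator component is recovered from two large, noisy mean squares, which makes the %GRR for acceptable systems hard to estimate with few operators.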

Two Factors Nested - EMP Classifications (figures)

Two Factors Nested - AIAG Classifications (figures)

Two Factors Nested - Marginal AIAG Classifications (figure)

Two Factors Nested - Acceptable AIAG Classifications (figure)

Two Factors Nested - Mean Operator Variance Bias (figure, with a zoomed view)

Two Factors Nested - Mean Part Variance Bias (figure)

Two Factors Nested - Summary

EMP Classifications

- All methods classify correctly at about the same rate.
- Increasing the number of parts helps the most for good systems (classes 1 and 2), and increasing the number of operators helps the most for bad systems (classes 3 and 4).
- Recommendation: Use more than 3 operators and at least 10 parts.

AIAG Classifications

- REML performs the best and is far superior for acceptable systems. (Bayesian and Hybrid do well for marginal systems with larger sample sizes but very poorly for acceptable systems.)
- Increasing the number of parts helps the most; it has more impact for marginal systems.
- Recommendation: Use REML, especially if you think your system is acceptable! Sample sizes with more than 3 operators and at least 10 parts are best. Caution: REML was still correct only 73.2% of the time with 15 operators and 25 parts.

Conclusions

2 Factors Crossed: The sample sizes of typical 2 factors crossed designs seem to be adequate for both classification systems.

2 Factors Nested: The sample sizes of typical 2 factors nested designs are adequate for EMP classifications but not for AIAG classifications, especially for acceptable systems.

Future Research

Fine-tune the sample sizes between 3 and 6 operators and between 5 and 10 parts.

Study more types of MSA designs.

Try different breakdowns of the error variance.

References

Automotive Industry Action Group (2002), Measurement Systems Analysis Reference Manual, 3rd Edition.

Portnoy (1971), “Formal Bayes Estimation With Application to a Random Effect Model,” Annals of Mathematical Statistics, 42, 1379-1402.

Sahai (1974), “Some Formal Bayes Estimators of Variance Components in Balanced Three-Stage Nested Random Effects Model,” Communications in Statistics, 3, 233-242.

Laura.Lancaster@jmp.com

ChristopherM.Gotwalt@jmp.com

Laura Lancaster (SAS Institute) Discovery Summit 2017 33 / 33

You might also like

- Msa PDFDocument111 pagesMsa PDFClaudiu NicolaeNo ratings yet

- CPK Vs PPK 4Document15 pagesCPK Vs PPK 4Seenivasagam Seenu100% (2)

- MSA Case StudiesDocument10 pagesMSA Case StudiesMuthuswamyNo ratings yet

- 1 CP-CPK and PP PPK FormulasDocument4 pages1 CP-CPK and PP PPK FormulasJulion2009No ratings yet

- Case Study Dfmea PfmeaDocument2 pagesCase Study Dfmea PfmeabhuvaneshNo ratings yet

- Measurement System Analysis GuideDocument19 pagesMeasurement System Analysis Guiderollickingdeol100% (1)

- The Application of Gage R&R Analysis in S Six Sigma Case of ImproDocument83 pagesThe Application of Gage R&R Analysis in S Six Sigma Case of ImproDLNo ratings yet

- SPC ManualDocument49 pagesSPC Manualchteo1976No ratings yet

- MSR-Columns hidden or deletedDocument2 pagesMSR-Columns hidden or deleted57641100% (1)

- Shainin AmeliorDocument40 pagesShainin AmeliorOsman Tig100% (1)

- Manual MSA.3.Ingles PDFDocument240 pagesManual MSA.3.Ingles PDFIram ChaviraNo ratings yet

- Improvement Initiatives Presents: Team-Oriented Problem SolvingDocument44 pagesImprovement Initiatives Presents: Team-Oriented Problem SolvingCARPENTIERIMAZZANo ratings yet

- 2.3.4 Variable and Attribute MSA PDFDocument0 pages2.3.4 Variable and Attribute MSA PDFAlpha SamadNo ratings yet

- Advanced Product Quality Planning and Control Plan PDFDocument13 pagesAdvanced Product Quality Planning and Control Plan PDFCesarNo ratings yet

- GRR Study MSA TemplateDocument21 pagesGRR Study MSA TemplateaadmaadmNo ratings yet

- APQP GoodDocument70 pagesAPQP Goodtrung100% (1)

- Teaching DoE With Paper Helicopters and MinitabDocument17 pagesTeaching DoE With Paper Helicopters and MinitabKumar SwamiNo ratings yet

- An Honest Gauge R&R Study PDFDocument19 pagesAn Honest Gauge R&R Study PDFAbi ZuñigaNo ratings yet

- Warm-Up - Day 2: Place Self Others Team Purpose AgendaDocument85 pagesWarm-Up - Day 2: Place Self Others Team Purpose AgendaSanjeev SharmaNo ratings yet

- How To Construct A FMEA Boundary Diagram - CrippsDocument6 pagesHow To Construct A FMEA Boundary Diagram - Crippstehky63100% (1)

- APQP FormsDocument23 pagesAPQP FormsJOECOOL670% (1)

- CPK CalculatorDocument2 pagesCPK CalculatorNumas SalazarNo ratings yet

- MsaDocument34 pagesMsaRohit Arora100% (1)

- Measurement Unit Analysis % Tolerance AnalysisDocument7 pagesMeasurement Unit Analysis % Tolerance AnalysisCLEV2010No ratings yet

- Supplier PPAP Manual 2Document25 pagesSupplier PPAP Manual 2roparn100% (1)

- Statistical Process Control FundamentalsDocument32 pagesStatistical Process Control FundamentalsEd100% (1)

- Gage R&RDocument1 pageGage R&Rshobhit2310No ratings yet

- Register Forum Home Page Post Attachment Files All Help Lost PasswordDocument3 pagesRegister Forum Home Page Post Attachment Files All Help Lost PasswordKirthivasanNo ratings yet

- Measurement Systems Analysis - How ToDocument72 pagesMeasurement Systems Analysis - How Towawawa1100% (1)

- GR&R Training DraftDocument53 pagesGR&R Training DraftLOGANATHAN VNo ratings yet

- Shain in TaguchiDocument8 pagesShain in TaguchisdvikkiNo ratings yet

- Sample DFMEA - Full PackageDocument7 pagesSample DFMEA - Full Packageabhisheksen.asindNo ratings yet

- Valeo IATF Communication To SuppliersDocument33 pagesValeo IATF Communication To SuppliersubllcNo ratings yet

- MSA StudyDocument22 pagesMSA StudyNarayanKavitakeNo ratings yet

- Measurement System Analysis (MSADocument10 pagesMeasurement System Analysis (MSAMark AntonyNo ratings yet

- Strategy Diagram ExamplesDocument8 pagesStrategy Diagram ExamplesmanuelNo ratings yet

- Measurement System Analysis How-To Guide - Workbook: August 2013Document25 pagesMeasurement System Analysis How-To Guide - Workbook: August 2013trsmrsNo ratings yet

- MSA-R&R Training Program GuideDocument25 pagesMSA-R&R Training Program GuideHarshad KulkarniNo ratings yet

- PFMEA ExampleDocument14 pagesPFMEA Examplekalebasveggie100% (1)

- Root Cause Analysis - ShaininapproachDocument6 pagesRoot Cause Analysis - ShaininapproachRaghavendra KalyanNo ratings yet

- Msa Guide MDocument114 pagesMsa Guide MRohit SoniNo ratings yet

- ASQ Auto Webinar Core Tools Slides 101203Document83 pagesASQ Auto Webinar Core Tools Slides 101203David SigalinggingNo ratings yet

- Variable MSA 4th Edition Blank TemplateDocument1 pageVariable MSA 4th Edition Blank TemplateSachin RamdurgNo ratings yet

- Measurement System AnalysisDocument42 pagesMeasurement System Analysisazadsingh1No ratings yet

- Complete PPAP OverviewDocument166 pagesComplete PPAP OverviewblkdirtymaxNo ratings yet

- Poke Yoke Solution Selection MatrixDocument1 pagePoke Yoke Solution Selection Matrixrgrao85No ratings yet

- Perform a Machine Capability Study in 7 StepsDocument10 pagesPerform a Machine Capability Study in 7 StepsAbhishek RanjanNo ratings yet

- 15 Mistake ProofingDocument4 pages15 Mistake ProofingSteven Bonacorsi100% (2)

- Control Plan 1st Edition - AnalysisDocument9 pagesControl Plan 1st Edition - AnalysisYassin Serhani100% (1)

- Quality & HSE SpecDocument73 pagesQuality & HSE SpecEdmarNo ratings yet

- Msa 4th Edition ChangesDocument3 pagesMsa 4th Edition ChangesNasa00No ratings yet

- DOEDocument126 pagesDOEsuryaksvidyuth0% (1)

- Core Tools: Measurement Systems Analysis (MSA)Document6 pagesCore Tools: Measurement Systems Analysis (MSA)Salvador Hernandez ColoradoNo ratings yet

- Catalogue 2021 Height Gauges J ENDocument35 pagesCatalogue 2021 Height Gauges J ENVikram BillalNo ratings yet

- ISO 17025 Accredited Lab Calibration ServicesDocument129 pagesISO 17025 Accredited Lab Calibration ServicesVikram BillalNo ratings yet

- Northlab HosurDocument25 pagesNorthlab HosurVikram BillalNo ratings yet

- KR 100 Roughness Tester Manual 1Document16 pagesKR 100 Roughness Tester Manual 1Hepi Sibolang99No ratings yet

- Precise Roughness Measurement: Surface Texture Parameters in PracticeDocument14 pagesPrecise Roughness Measurement: Surface Texture Parameters in PracticeVikram BillalNo ratings yet

- Roots Metrology Laboratory CalibrationsDocument105 pagesRoots Metrology Laboratory CalibrationsVikram BillalNo ratings yet

- Unqie Measurement 07.2021Document18 pagesUnqie Measurement 07.2021Karthik MadhuNo ratings yet

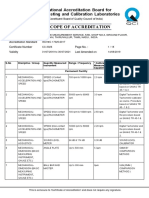

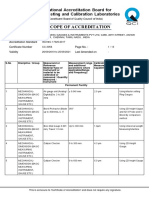

- Laboratory Name: Accreditation Standard Certificate Number Page No Validity Last Amended OnDocument35 pagesLaboratory Name: Accreditation Standard Certificate Number Page No Validity Last Amended OnVikram BillalNo ratings yet

- Calibrating Non-Indicating ScalesDocument10 pagesCalibrating Non-Indicating ScalesVikram BillalNo ratings yet

- Laboratory NameDocument13 pagesLaboratory NameVikram BillalNo ratings yet

- R111 E94Document24 pagesR111 E94ArifNurjayaNo ratings yet

- Contingency Planning Standards and GuidelinesDocument60 pagesContingency Planning Standards and GuidelinesVikram BillalNo ratings yet

- Laboratory Name: Accreditation Standard Certificate Number Page No Validity Last Amended OnDocument31 pagesLaboratory Name: Accreditation Standard Certificate Number Page No Validity Last Amended OnVikram BillalNo ratings yet

- Laboratory Name: Accreditation Standard Certificate Number Page No Validity Last Amended OnDocument75 pagesLaboratory Name: Accreditation Standard Certificate Number Page No Validity Last Amended OnVikram BillalNo ratings yet

- 2 Handout IATF 16949 Clause Map 2016 To 2009 PDFDocument5 pages2 Handout IATF 16949 Clause Map 2016 To 2009 PDFMohini Marathe100% (2)

- Tesa Tsms Kampagne Evb Ohne Basteltipps RZ x4Document134 pagesTesa Tsms Kampagne Evb Ohne Basteltipps RZ x4Hadeel mohammadNo ratings yet

- InstacalDocument82 pagesInstacalVikram BillalNo ratings yet

- QL800 SeriesDocument4 pagesQL800 SeriesVikram BillalNo ratings yet

- Jis B 7503-2011Document34 pagesJis B 7503-2011Kvanan7867% (6)

- Jis B 7503-2011Document34 pagesJis B 7503-2011Kvanan7867% (6)

- Chapter 15. Monitoring and Measurement Resources Related: (Clause Description-Paraphrase)Document12 pagesChapter 15. Monitoring and Measurement Resources Related: (Clause Description-Paraphrase)Vikram BillalNo ratings yet

- Recommended Environments For Standards LaboratoriesDocument32 pagesRecommended Environments For Standards LaboratoriesGanesh TigadeNo ratings yet

- Oiml R76-1Document144 pagesOiml R76-1Andrés Pacompía100% (1)

- Chapter 15. Monitoring and Measurement Resources Related: (Clause Description-Paraphrase)Document12 pagesChapter 15. Monitoring and Measurement Resources Related: (Clause Description-Paraphrase)Vikram BillalNo ratings yet

- 2 Handout IATF 16949 Clause Map 2016 To 2009 PDFDocument5 pages2 Handout IATF 16949 Clause Map 2016 To 2009 PDFMohini Marathe100% (2)

- Euramet Calibration Guide No18Document120 pagesEuramet Calibration Guide No18Jesika Andilia Setya WardaniNo ratings yet

- Isa-Tr52.00.01-2006 Recommended Environments For Standards LaboratoriesDocument37 pagesIsa-Tr52.00.01-2006 Recommended Environments For Standards LaboratoriesEdison SaflaNo ratings yet

- Measurement System Analysis (MSA)Document125 pagesMeasurement System Analysis (MSA)Vikram BillalNo ratings yet

- D-Book E2020 1125Document608 pagesD-Book E2020 1125Vikram BillalNo ratings yet

- Inside Micrometers Measurement GuideDocument10 pagesInside Micrometers Measurement GuideVikram BillalNo ratings yet

- Journal of Baltic Science Education, Vol. 11, No. 2, 2012Document89 pagesJournal of Baltic Science Education, Vol. 11, No. 2, 2012Scientia Socialis, Ltd.No ratings yet

- Sexual Orientation Identity and Romantic Relations PDFDocument17 pagesSexual Orientation Identity and Romantic Relations PDFushha2No ratings yet

- Encyclopedia of Medical Decision MakingDocument1,266 pagesEncyclopedia of Medical Decision MakingAgam Reddy M100% (3)

- RNFL AsymmetryDocument24 pagesRNFL Asymmetryhakimu10No ratings yet

- Jimaging 08 00111Document8 pagesJimaging 08 00111Simi Florin CiraNo ratings yet

- Daily Living Self Efficacy ScaleDocument8 pagesDaily Living Self Efficacy Scalespamemail00No ratings yet

- Translating The Research Diagnostic Criteria For Temporomandibular Disorders Into Malay: Evaluation of Content and ProcessDocument0 pagesTranslating The Research Diagnostic Criteria For Temporomandibular Disorders Into Malay: Evaluation of Content and ProcessUniversity Malaya's Dental Sciences ResearchNo ratings yet

- Confidence Levels - Failure Rates - Confidence IntervalsDocument25 pagesConfidence Levels - Failure Rates - Confidence Intervalsgoldpanr8222No ratings yet

- Measurement System Analyses Gauge Repeatability and Reproducibility Methods PDFDocument8 pagesMeasurement System Analyses Gauge Repeatability and Reproducibility Methods PDFFortune FireNo ratings yet

- Optometry: Comparison of Four Different Binocular Balancing TechniquesDocument4 pagesOptometry: Comparison of Four Different Binocular Balancing TechniquesQonita Aizati QomaruddinNo ratings yet

- Periodontal Phenotype AlveolarDocument13 pagesPeriodontal Phenotype Alveolar김문규No ratings yet

- Reliability Analysis According To APA - Kinza Saher BasraDocument30 pagesReliability Analysis According To APA - Kinza Saher BasraKinza Saher BasraNo ratings yet

- Montgomery-Asberg Depression Rating Scale in Clinical Practice: Psychometric Properties On Serbian PatientsDocument7 pagesMontgomery-Asberg Depression Rating Scale in Clinical Practice: Psychometric Properties On Serbian PatientsjhuNo ratings yet

- AayaDocument8 pagesAayaLovely nuyNo ratings yet

- Selection For FM Residency Training in CanadaDocument6 pagesSelection For FM Residency Training in CanadaJasmik SinghNo ratings yet

- Graduate Employability and Higher Education S Con - 2021 - Journal of HospitalitDocument11 pagesGraduate Employability and Higher Education S Con - 2021 - Journal of HospitalitANDI SETIAWAN [AB]No ratings yet

- Outpatient Satisfaction With Primary Health Care Services in Vietnam - Multilevel Analysis Results From The Vietnam Health Facilities Assessment 2015Document11 pagesOutpatient Satisfaction With Primary Health Care Services in Vietnam - Multilevel Analysis Results From The Vietnam Health Facilities Assessment 2015ctthang44No ratings yet

- Csurvey2 ManualDocument40 pagesCsurvey2 Manualafa81No ratings yet

- Analysis of Gonial Angle in Relation To Age GenderDocument5 pagesAnalysis of Gonial Angle in Relation To Age GenderhabeebNo ratings yet

- Correlation CoefficientDocument7 pagesCorrelation CoefficientDimple PatelNo ratings yet

- Evaluation of Breathing Pattern: Comparison of A Manual Assessment of Respiratory Motion (MARM) and Respiratory Induction PlethysmographyDocument10 pagesEvaluation of Breathing Pattern: Comparison of A Manual Assessment of Respiratory Motion (MARM) and Respiratory Induction PlethysmographySamuelNo ratings yet

- Validation of The Standardized Version of RQLQ Juniper1999Document6 pagesValidation of The Standardized Version of RQLQ Juniper1999IchsanNo ratings yet

- Scarlata 2019Document19 pagesScarlata 2019Daniel PredaNo ratings yet

- Weir 2005 JSCR Reliability PDFDocument10 pagesWeir 2005 JSCR Reliability PDFhenriqueNo ratings yet

- Vertigo Sympton ScaleDocument9 pagesVertigo Sympton ScaleIvan HoNo ratings yet

- Hop Testing Provides A Reliable and Valid Outcome Measure During Rehabilitation After Anterior Cruciate Ligament Reconstruction PDFDocument13 pagesHop Testing Provides A Reliable and Valid Outcome Measure During Rehabilitation After Anterior Cruciate Ligament Reconstruction PDFCleber PimentaNo ratings yet

- Hospital Strategic Management Training PDFDocument9 pagesHospital Strategic Management Training PDFkartikaNo ratings yet

- Reliability and Learning Styles QuestionnaireDocument10 pagesReliability and Learning Styles Questionnairefriska anjaNo ratings yet

- Range of Motion and Lordosis of The Lumbar SpineDocument8 pagesRange of Motion and Lordosis of The Lumbar SpinekhaledNo ratings yet

- Assessment properties Brazilian versions FSS-ICU FIM critically ill ICU patientsDocument8 pagesAssessment properties Brazilian versions FSS-ICU FIM critically ill ICU patientsrpcostta8952No ratings yet