Eigen Values - Snapshots

Uploaded by Vidya V

Objective

Build a predictive model, reduce multicollinearity (adjusted R-squared of 42%), and derive a promotional strategy.

Menu path: View > Principal components, then read the output.

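Use the eigenvalue approach: keep the components whose eigenvalues are greater than 1. This reduces the 21 variables to 6 components, so there will be only 6 promotional strategies. As long as the eigenvalue is greater than 1, the component's contribution is treated as significant.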

When you rebuild the model, use only these 6 components. Combining PCA with regression gives principal component regression (PCR).
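The PCR idea can be sketched as follows. This is a minimal illustration on synthetic data, not the dataset or software used in these notes: the function name `pcr_fit`, the 9 made-up predictors, and the 3 latent factors are all assumptions chosen for the example. It standardizes the predictors, takes the eigen-decomposition of their correlation matrix, keeps the components with eigenvalue above 1, and regresses the response on those component scores.

```python
import numpy as np

def pcr_fit(X, y, threshold=1.0):
    """Principal component regression keeping components with eigenvalue > threshold."""
    # Standardize so that PCA works on the correlation matrix.
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    R = np.corrcoef(Z, rowvar=False)
    # eigh returns eigenvalues in ascending order; sort them descending.
    eigvals, eigvecs = np.linalg.eigh(R)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Eigenvalue-greater-than-1 rule for the number of retained components.
    k = int(np.sum(eigvals > threshold))
    scores = Z @ eigvecs[:, :k]
    # Ordinary least squares of y on an intercept plus the retained scores.
    design = np.column_stack([np.ones(len(y)), scores])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return eigvals, k, scores, coef

# Hypothetical data: 3 latent factors, each observed through 3 noisy predictors.
rng = np.random.default_rng(0)
n = 400
factors = rng.normal(size=(n, 3))
X = np.repeat(factors, 3, axis=1) + 0.3 * rng.normal(size=(n, 9))
y = factors @ np.array([1.0, -0.5, 0.25]) + rng.normal(size=n)

eigvals, k, scores, coef = pcr_fit(X, y)
print("retained components:", k)
print("eigenvalues:", np.round(eigvals, 3))
print("intercept and slopes:", np.round(coef, 3))
```

In the notes' setting the same steps would be applied to the 21 predictors, with the eigenvalue rule selecting 6 components.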

Principal Components Analysis

n = 400

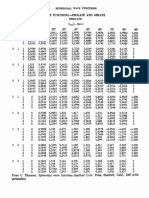

Eigenanalysis of the Correlation Matrix

Stop at the last component whose eigenvalue is above 1, i.e. component 6 (eigenvalue 1.6168).

The proportions show a decreasing trend (22% > 16% > 11% > 9%); if he is close to 9 he will purchase it. The model works under the assumption of normality; with 6 components, 79% of the variance can be explained.

Component Eigenvalue Proportion Cumulative

1 4.7276 0.2251 0.2251

2 3.5495 0.1690 0.3941

3 2.4162 0.1151 0.5092

4 2.3757 0.1131 0.6223

5 2.0085 0.0956 0.7180

6 1.6168 0.0770 0.7950

7 0.7860 0.0374 0.8324

8 0.7179 0.0342 0.8666

9 0.5592 0.0266 0.8932

10 0.3938 0.0188 0.9120

11 0.3506 0.0167 0.9287

12 0.3376 0.0161 0.9447

13 0.2666 0.0127 0.9574

14 0.2453 0.0117 0.9691

15 0.1671 0.0080 0.9771

16 0.1398 0.0067 0.9837

17 0.0945 0.0045 0.9882

18 0.0810 0.0039 0.9921

19 0.0742 0.0035 0.9956

20 0.0533 0.0025 0.9981

21 0.0389 0.0019 1.0000
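The Proportion and Cumulative columns, and the count of components with eigenvalue above 1, can be re-derived from the eigenvalues alone. The sketch below copies the 21 eigenvalues from the table; the function name `retained_by_eigenvalue_rule` is an illustrative choice, not part of the original output.

```python
# Eigenvalues copied from the eigenanalysis table (correlation matrix, 21 variables).
eigenvalues = [
    4.7276, 3.5495, 2.4162, 2.3757, 2.0085, 1.6168, 0.7860,
    0.7179, 0.5592, 0.3938, 0.3506, 0.3376, 0.2666, 0.2453,
    0.1671, 0.1398, 0.0945, 0.0810, 0.0742, 0.0533, 0.0389,
]

def retained_by_eigenvalue_rule(values, threshold=1.0):
    """Number of components whose eigenvalue exceeds the threshold."""
    return sum(1 for v in values if v > threshold)

# For a correlation matrix the eigenvalues sum to the number of variables.
total = sum(eigenvalues)
proportions = [v / total for v in eigenvalues]

# Running sum of the proportions gives the Cumulative column.
cumulative = []
running = 0.0
for p in proportions:
    running += p
    cumulative.append(running)

n_keep = retained_by_eigenvalue_rule(eigenvalues)
print("components kept:", n_keep)
print("variance explained by kept components:", round(cumulative[n_keep - 1], 4))
```

The eigenvalues sum to about 21, as expected for 21 standardized variables, so each proportion is simply the eigenvalue divided by 21.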

Eigenvectors (component loadings)

PC1 PC2 PC3 PC4 PC5 PC6 PC7

X1 0.013 -0.306 0.145 -0.431 0.029 0.047 -0.310

X2 0.025 -0.302 0.149 -0.433 0.015 0.041 -0.301

X3 0.037 -0.301 0.118 -0.376 -0.020 0.001 0.175

X4 0.016 -0.262 0.066 -0.275 -0.035 0.048 0.618

X5 0.410 0.024 -0.028 0.006 0.039 0.034 -0.251

X6 0.414 0.032 -0.013 0.007 0.011 0.023 -0.239

X7 0.429 0.027 -0.039 0.008 0.017 0.042 -0.103

X8 0.430 0.011 -0.030 -0.001 0.021 0.019 -0.029

X9 0.401 0.006 -0.012 0.001 -0.040 0.002 0.265

X10 0.351 -0.005 -0.001 0.010 -0.025 -0.051 0.431

X11 -0.013 -0.159 -0.534 -0.054 0.145 -0.001 -0.009

X12 -0.026 -0.168 -0.563 -0.033 0.092 -0.001 -0.026

X13 -0.026 -0.135 -0.528 -0.089 0.080 -0.040 0.012

X14 0.023 0.021 0.026 -0.074 0.079 -0.695 -0.015

X15 0.023 0.031 0.039 -0.062 0.104 -0.691 -0.017

X16 -0.012 -0.255 0.125 0.219 0.491 0.042 0.047

X17 0.007 -0.260 0.141 0.232 0.486 0.049 0.023

X18 0.031 -0.201 0.129 0.235 0.366 0.001 -0.016

X19 0.017 -0.382 0.019 0.281 -0.329 -0.070 -0.050

X20 0.022 -0.376 0.014 0.301 -0.324 -0.079 -0.053

X21 0.015 -0.348 0.003 0.248 -0.339 -0.097 -0.054

PC8 PC9 PC10 PC11 PC12 PC13 PC14

X1 -0.226 -0.020 0.140 0.138 0.066 0.044 -0.027

X2 -0.249 -0.036 0.127 0.151 0.057 0.002 -0.065

X3 0.169 0.191 -0.519 -0.527 -0.294 -0.079 0.103

X4 0.499 -0.082 0.313 0.282 0.172 0.027 -0.023

X5 0.320 -0.024 0.009 -0.074 -0.014 0.275 -0.295

X6 0.324 -0.015 0.011 -0.071 -0.008 0.285 -0.219

X7 0.122 -0.014 -0.013 0.037 0.067 -0.280 0.329

X8 -0.025 -0.049 0.035 0.089 0.048 -0.411 0.353

X9 -0.352 -0.009 -0.016 0.051 -0.013 -0.085 0.060

X10 -0.500 0.052 -0.015 -0.043 -0.067 0.305 -0.298

X11 0.041 -0.046 -0.058 0.300 -0.500 -0.106 -0.063

X12 -0.033 0.030 -0.021 0.105 -0.130 0.096 -0.009

X13 -0.064 0.071 0.092 -0.432 0.639 0.021 0.044

X14 0.058 -0.010 -0.511 0.373 0.301 -0.006 -0.088

X15 0.016 -0.044 0.540 -0.324 -0.307 -0.033 0.091

X16 -0.039 -0.378 -0.021 -0.060 0.051 -0.014 -0.018

X17 -0.012 -0.285 -0.106 -0.081 -0.003 0.091 0.081

X18 0.026 0.843 0.129 0.137 0.031 -0.042 -0.022

X19 0.007 -0.055 0.018 -0.048 0.023 -0.281 -0.289

X20 0.010 -0.037 0.022 -0.057 0.010 -0.257 -0.258

X21 -0.013 0.016 -0.023 0.101 -0.033 0.556 0.587

PC15 PC16 PC17 PC18 PC19 PC20 PC21

X1 -0.002 -0.023 -0.192 0.623 -0.273 0.034 -0.051

X2 -0.022 0.010 0.155 -0.608 0.309 -0.032 0.073

X3 0.043 -0.005 0.061 0.001 -0.027 -0.002 -0.002

X4 0.019 -0.001 -0.037 -0.015 0.003 0.010 0.008

X5 -0.028 0.105 0.076 -0.120 -0.202 0.641 -0.095

X6 0.053 0.071 -0.009 0.081 0.093 -0.707 0.083

X7 -0.080 -0.343 -0.086 0.244 0.604 0.195 0.007

X8 -0.005 -0.144 0.045 -0.266 -0.622 -0.155 0.026

X9 0.254 0.724 0.017 0.098 0.152 0.040 -0.073

X10 -0.198 -0.450 -0.027 -0.012 -0.033 -0.012 0.043

X11 -0.516 0.163 0.075 0.054 0.017 -0.044 0.003

X12 0.728 -0.240 -0.110 -0.056 0.001 0.039 -0.004

X13 -0.243 0.107 0.023 -0.003 -0.007 -0.023 0.001

X14 -0.001 0.002 0.002 0.010 -0.007 0.001 0.004

X15 0.034 0.008 -0.022 -0.002 0.027 0.014 -0.014

X16 0.107 -0.078 0.650 0.185 0.010 -0.017 0.001

X17 -0.082 0.084 -0.675 -0.189 0.013 0.003 0.001

X18 -0.004 0.025 0.051 0.001 0.000 -0.008 -0.013

X19 -0.005 -0.072 -0.041 -0.038 0.040 -0.097 -0.686

X20 0.031 0.043 -0.052 0.063 -0.017 0.091 0.705

X21 -0.089 0.031 0.117 -0.015 -0.030 0.017 -0.026

Go to +; if you now check, the 6 components will have been added.

Eliminate PC6 and rebuild the model.

Now check for collinearity and run the other analyses: the value (presumably the VIF) is 1, so the components are not dependent on each other.
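A reading of 1 in a collinearity check is what a variance inflation factor (VIF) shows for uncorrelated predictors; assuming that is the statistic meant here, the sketch below illustrates why principal component scores always give it. The data are synthetic and the helper `vif` is a hypothetical name; it uses the standard identity that the VIFs are the diagonal of the inverse correlation matrix.

```python
import numpy as np

def vif(M):
    """VIF of each column: diagonal of the inverse correlation matrix."""
    R = np.corrcoef(M, rowvar=False)
    return np.diag(np.linalg.inv(R))

# Hypothetical predictors: two pairs of nearly duplicated variables.
rng = np.random.default_rng(1)
n = 400
base = rng.normal(size=(n, 2))
X = np.column_stack([
    base[:, 0], base[:, 0] + 0.1 * rng.normal(size=n),
    base[:, 1], base[:, 1] + 0.1 * rng.normal(size=n),
])

# Component scores from the eigenvectors of the correlation matrix.
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
eigvals, eigvecs = np.linalg.eigh(np.corrcoef(Z, rowvar=False))
scores = Z @ eigvecs

vif_raw = vif(X)       # large: the original variables are collinear
vif_pc = vif(scores)   # all 1: component scores are mutually uncorrelated
print("VIF of original variables:", np.round(vif_raw, 1))
print("VIF of component scores:  ", np.round(vif_pc, 6))
```

Because the eigenvectors are orthogonal, the scores are uncorrelated by construction, which is why regressing on components removes the multicollinearity present in the raw variables.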

Looking at the eigenvector entries for X5, X6, X7, X8 and X9: they all load on the same component, so they all depend on some common underlying factor, such as financials. Check each component's eigenvector for high loadings in this way. Then give a promotional strategy for each component, based on the variables that load most heavily on it.
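One way to organize this loading check is to assign each variable to the retained component on which its absolute loading is largest. The sketch below does that with the PC1 to PC6 loadings copied from the eigenvector table; the assignment rule and the name `group_by_dominant_component` are illustrative assumptions, not a method stated in the notes.

```python
# Loadings on PC1..PC6, copied from the eigenvector table.
loadings = {
    "X1":  [0.013, -0.306, 0.145, -0.431, 0.029, 0.047],
    "X2":  [0.025, -0.302, 0.149, -0.433, 0.015, 0.041],
    "X3":  [0.037, -0.301, 0.118, -0.376, -0.020, 0.001],
    "X4":  [0.016, -0.262, 0.066, -0.275, -0.035, 0.048],
    "X5":  [0.410, 0.024, -0.028, 0.006, 0.039, 0.034],
    "X6":  [0.414, 0.032, -0.013, 0.007, 0.011, 0.023],
    "X7":  [0.429, 0.027, -0.039, 0.008, 0.017, 0.042],
    "X8":  [0.430, 0.011, -0.030, -0.001, 0.021, 0.019],
    "X9":  [0.401, 0.006, -0.012, 0.001, -0.040, 0.002],
    "X10": [0.351, -0.005, -0.001, 0.010, -0.025, -0.051],
    "X11": [-0.013, -0.159, -0.534, -0.054, 0.145, -0.001],
    "X12": [-0.026, -0.168, -0.563, -0.033, 0.092, -0.001],
    "X13": [-0.026, -0.135, -0.528, -0.089, 0.080, -0.040],
    "X14": [0.023, 0.021, 0.026, -0.074, 0.079, -0.695],
    "X15": [0.023, 0.031, 0.039, -0.062, 0.104, -0.691],
    "X16": [-0.012, -0.255, 0.125, 0.219, 0.491, 0.042],
    "X17": [0.007, -0.260, 0.141, 0.232, 0.486, 0.049],
    "X18": [0.031, -0.201, 0.129, 0.235, 0.366, 0.001],
    "X19": [0.017, -0.382, 0.019, 0.281, -0.329, -0.070],
    "X20": [0.022, -0.376, 0.014, 0.301, -0.324, -0.079],
    "X21": [0.015, -0.348, 0.003, 0.248, -0.339, -0.097],
}

def group_by_dominant_component(table):
    """Map each component label to the variables whose largest |loading| is on it."""
    groups = {}
    for name, row in table.items():
        # Index of the component with the largest absolute loading for this variable.
        best = max(range(len(row)), key=lambda j: abs(row[j]))
        groups.setdefault(f"PC{best + 1}", []).append(name)
    return groups

groups = group_by_dominant_component(loadings)
for pc in sorted(groups):
    print(pc, groups[pc])
```

Several variables (X19 to X21, for example) also carry sizeable loadings on a second component, so the grouping is a starting point for naming the components rather than a strict partition.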
