
Regression (14 pages)

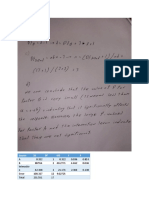

The document discusses different types of regression analysis. It begins with linear regression, covering simple linear regression (one independent variable) and multiple linear regression (several independent variables). It then outlines the steps involved in regression analysis and gives the formula for simple linear regression. Next, it covers ordinary least squares (OLS) estimation and the coefficient of determination (R²) as a measure of goodness of fit. It concludes by introducing five main types of regression (linear, logistic, polynomial, stepwise, and multivariate adaptive regression splines) with brief descriptions of their properties.
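As a rough illustration of the simple linear regression formula, least squares estimation, and the goodness-of-fit measure mentioned above, the sketch below fits y = a + b·x to a handful of points and computes the coefficient of determination. It is a minimal sketch under stated assumptions, not the document's own worked example: the function names `fit_simple_ols` and `r_squared` and the sample data are hypothetical and chosen only for illustration.

```python
def fit_simple_ols(xs, ys):
    """Return (intercept, slope) of the least squares line y = a + b*x.

    Hypothetical helper: closed-form estimates for one independent variable.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally sized samples with at least 2 points")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Sum of squared deviations of x, and cross-deviations of x and y.
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope


def r_squared(xs, ys, intercept, slope):
    """Return 1 - SS_res / SS_tot for the fitted line."""
    y_mean = sum(ys) / len(ys)
    ss_tot = sum((y - y_mean) ** 2 for y in ys)
    if ss_tot == 0:
        raise ValueError("all y values are identical; R^2 is undefined")
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return 1 - ss_res / ss_tot


if __name__ == "__main__":
    # Illustrative data only (not taken from the document).
    xs = [0.0, 1.0, 2.0]
    ys = [0.0, 1.0, 1.0]
    a, b = fit_simple_ols(xs, ys)
    print(f"intercept={a:.4f}, slope={b:.4f}, R^2={r_squared(xs, ys, a, b):.4f}")
```

An R² close to 1 means the line explains most of the variation in y, while a value near 0 means it explains little.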

Uploaded by Andleeb Razzaq

Copyright © All Rights Reserved
