Univ Auckland Lecture03

Typical Complexity Curves

$T(n) \propto \log n$ logarithmic

$T(n) \propto n$ linear

$T(n) \propto n \log n$ “n log n”

$T(n) \propto n^2$ quadratic

$T(n) \propto n^3$ cubic

$T(n) \propto n^k$ polynomial

$T(n) \propto 2^n$ exponential

Lecture 3 COMPSCI 220 - AP G Gimel'farb 1

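Relative growth: $g(n) = f(n) / f(5)$

| Function $f(n)$ | Input size $n = 5$ | $n = 25$ | $n = 125$ | $n = 625$ |
|---|---|---|---|---|
| Constant: $1$ | 1 | 1 | 1 | 1 |
| Logarithm: $\log_5 n$ | 1 | 2 | 3 | 4 |
| Linear: $n$ | 1 | 5 | 25 | 125 |
| “n log n”: $n \log_5 n$ | 1 | 10 | 75 | 500 |
| Quadratic: $n^2$ | 1 | 25 ($5^2$) | 625 ($5^4$) | 15,625 ($5^6$) |
| Cubic: $n^3$ | 1 | 125 ($5^3$) | 15,625 ($5^6$) | 1,953,125 ($5^9$) |
| Exponential: $2^n$ | 1 | $2^{20} \approx 10^6$ | $2^{120} \approx 10^{36}$ | $2^{620} \approx 10^{187}$ |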
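The relative-growth figures can be recomputed with a short script. The following is a minimal sketch, not part of the original slides: the names `log5`, `FUNCTIONS` and `relative_growth` are illustrative, and the integer logarithm assumes n is a power of 5, as in the table.

```python
def log5(n):
    """Exact base-5 logarithm, assuming n is a power of 5."""
    k = 0
    while n > 1:
        n //= 5
        k += 1
    return k

# Growth functions from the table (the dictionary itself is an illustrative choice).
FUNCTIONS = {
    "constant": lambda n: 1,
    "logarithm": log5,
    "linear": lambda n: n,
    "n log n": lambda n: n * log5(n),
    "quadratic": lambda n: n ** 2,
    "cubic": lambda n: n ** 3,
    "exponential": lambda n: 2 ** n,
}

def relative_growth(f, n, base=5):
    """Return g(n) = f(n) / f(base); the ratio is an exact integer for these inputs."""
    return f(n) // f(base)

for name, f in FUNCTIONS.items():
    row = [relative_growth(f, n) for n in (5, 25, 125, 625)]
    print(name, row)
```

Integer arithmetic is used throughout so that the exponential row is computed exactly rather than as a rounded floating-point value.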

“Big-Oh” O(…) : Formal Definition

Let f(n) and g(n) be nonnegative-valued functions defined on nonnegative integers n

The function g(n) is O(f(n)) (read: g(n) is Big-Oh of f(n)) iff there exist a positive real constant c and a positive integer $n_0$ such that $g(n) \le c f(n)$ for all $n > n_0$

– Notation “iff” is an abbreviation of “if and only if”

– Example 1.9 (p.15): $g(n) = 100 \log_{10} n$ is O(n) ⇐ $g(n) < n$ if $n > 238$, or $g(n) < 0.3n$ if $n > 1000$
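The two inequalities quoted for Example 1.9 can be spot-checked numerically. This is only a sketch: a finite scan does not prove the bound for all n, and the cut-off `LIMIT` is an arbitrary assumption.

```python
import math

def g(n):
    """Function from Example 1.9: g(n) = 100 * log10(n)."""
    return 100 * math.log10(n)

LIMIT = 100_000  # arbitrary finite cut-off for the spot check (assumption)

# c = 1, n0 = 238: g(n) < n for every tested n > 238
holds_c1 = all(g(n) < n for n in range(239, LIMIT))

# c = 0.3, n0 = 1000: g(n) < 0.3 * n for every tested n > 1000
holds_c03 = all(g(n) < 0.3 * n for n in range(1001, LIMIT))

print(holds_c1, holds_c03)
```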

g(n) is O(f(n)), or g(n) = O(f(n))

g(n) is O(f(n)) if constants c > 0 and $n_0$ exist such that $c f(n) \ge g(n)$ for all $n > n_0$

To prove that some function g(n) is O(f(n)) means to show that such constants c and $n_0$ exist for g and f

The constants c and $n_0$ are interdependent: a smaller c generally requires a larger $n_0$
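How the threshold moves with the constant can be seen by scanning for the last n at which the bound fails. The sketch below reuses the function $100 \log_{10} n$ from Example 1.9; the helper `last_violation` and the scan limit are assumptions made for illustration, and a finite scan only suggests a valid $n_0$.

```python
import math

def last_violation(g, f, c, limit):
    """Largest n in [1, limit] with g(n) > c * f(n), or 0 if there is none."""
    last = 0
    for n in range(1, limit + 1):
        if g(n) > c * f(n):
            last = n
    return last

g = lambda n: 100 * math.log10(n)
f = lambda n: n

for c in (1.0, 0.5, 0.3):
    n0 = last_violation(g, f, c, limit=10_000)
    print(f"c = {c}: g(n) <= c * f(n) for all tested n > {n0}")
```

Shrinking c pushes the reported threshold upwards, which is the interdependence noted above.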

“Big-Oh” O(…) : Informal Meaning

• If g(n) is O(f(n)), the running time g(n) of an algorithm grows asymptotically (i.e. for large n) at most as fast, to within a constant factor, as the running time f(n) of another algorithm

O(f(n)) specifies an asymptotic upper bound, i.e. g(n) for large n may come closer and closer to cf(n) but does not exceed it

Notation g(n) = O(f(n)) actually means g(n) ∈ O(f(n)), i.e. g(n) is a member of the set O(f(n)) of functions increasing at the same or a lesser rate as n → ∞

Big Omega Ω(…)

• The function g(n) is Ω(f(n)) iff there exist a positive real constant c and a positive integer $n_0$ such that $g(n) \ge c f(n)$ for all $n > n_0$

Ω(…) is the opposite of O(…) and specifies an asymptotic lower bound: if g(n) is Ω(f(n)) then f(n) is O(g(n))

Example 1: $5n^2$ is Ω(n) ⇐ $5n^2 \ge 5n$ for $n \ge 1$

Example 2: $0.01n$ is Ω(log n) ⇐ $0.01n \ge 0.5 \log_{10} n$ for $n \ge 100$
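The link between Ω and O in Example 2 can be illustrated numerically: the same witnesses that give a lower bound on g give an upper bound on f with constant 1/c. This is a hedged sketch over a finite range; the choice $n_0 = 100$ (strict inequality $n > n_0$) and the scan limit are assumptions.

```python
import math

def g(n):
    return 0.01 * n          # g(n) = 0.01 n

def f(n):
    return math.log10(n)     # f(n) = log10 n

c, n0 = 0.5, 100             # witnesses taken from Example 2
tested = range(n0 + 1, 50_000)

# g is Omega(f): g(n) >= c * f(n) for n > n0
lower_bound_holds = all(g(n) >= c * f(n) for n in tested)

# equivalently f is O(g): f(n) <= (1 / c) * g(n) for n > n0
upper_bound_holds = all(f(n) <= (1 / c) * g(n) for n in tested)

print(lower_bound_holds, upper_bound_holds)
```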

Big Theta Θ(…)

• The function g(n) is Θ(f(n)) iff there exist two positive real constants $c_1$ and $c_2$ and a positive integer $n_0$ such that $c_1 f(n) \le g(n) \le c_2 f(n)$ for all $n > n_0$

g(n) is Θ(f(n)) ⇒ g(n) is O(f(n)) AND f(n) is O(g(n))

Ex.: the same rate of increase for $g(n) = n + 5n^{0.5}$ and $f(n) = n$ ⇒ $n \le n + 5n^{0.5} \le 6n$ for $n > 1$
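The two-sided bound in the example can be checked over a finite range. A minimal sketch, assuming a hypothetical helper `is_sandwiched` and an arbitrary scan limit; it does not replace the algebraic argument.

```python
import math

def is_sandwiched(g, f, c1, c2, n0, limit):
    """True if c1*f(n) <= g(n) <= c2*f(n) for every n with n0 < n <= limit."""
    return all(c1 * f(n) <= g(n) <= c2 * f(n) for n in range(n0 + 1, limit + 1))

g = lambda n: n + 5 * math.sqrt(n)   # g(n) = n + 5 n^0.5
f = lambda n: n                      # f(n) = n

print(is_sandwiched(g, f, c1=1, c2=6, n0=1, limit=100_000))
```

With $c_2 = 1$ the check fails, since g(n) is strictly larger than n for every positive n.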

Comparisons: Two Crucial Ideas

• The exact running time function is unimportant since it can be multiplied by an arbitrary positive constant.

• Two functions are compared asymptotically, for large n, and not near the origin

– If the constants c involved are very large, then the asymptotic behaviour is of no practical interest!

– To prove that g(n) is not O(f(n)), Ω(f(n)), or Θ(f(n)) we have to show that the desired constants do not exist, i.e. that assuming they exist leads to a contradiction
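As an illustration of such a contradiction argument, consider the claim that $n^2$ is not O(n). This example is chosen here for illustration and does not appear in the slides.

```latex
\begin{align*}
&\text{Assume } n^2 \text{ is } O(n):\ \exists\, c > 0,\ n_0 \ge 1 \text{ such that } n^2 \le c\,n \text{ for all } n > n_0. \\
&\text{Dividing by } n > 0 \text{ gives } n \le c \text{ for all } n > n_0. \\
&\text{Take } n = \max(n_0, \lceil c \rceil) + 1:\ \text{then } n > n_0 \text{ and } n > c, \text{ a contradiction.}
\end{align*}
```

Hence no pair of constants c and $n_0$ can satisfy the definition.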

Example 1.12, p.17

Linear function $g(n) = an + b$; $a > 0$, is O(n)

To prove, we form a chain of inequalities:

$g(n) \le an + |b| \le (a + |b|) \cdot n$ for all $n \ge 1$

Do not write O(2n) or O(an + b) as this still means O(n)!

O(n) running time:

$T(n) = 3n + 1$; $T(n) = 10^8 + n$

$T(n) = 50 + 10^{-8} n$; $T(n) = 10^6 n + 1$

Remember that “Big-Oh” describes “asymptotic behaviour” for large problem sizes

Example 1.13, p.17

Polynomial $P_k(n) = a_k n^k + a_{k-1} n^{k-1} + \dots + a_1 n + a_0$; $a_k > 0$, is $O(n^k)$ ⇐ $P_k(n) \le (a_k + |a_{k-1}| + \dots + |a_0|)\, n^k$; $n \ge 1$

Do not write $O(P_k(n))$ as this still means $O(n^k)$!

$O(n^k)$ running time:

• $T(n) = 3n^2 + 5n + 1$ is $O(n^2)$. Is it also $O(n^3)$?

• $T(n) = 10^{-8} n^3 + 10^8 n^2 + 30$ is $O(n^3)$

• $T(n) = 10^{-8} n^8 + 1000n + 1$ is $O(n^8)$

$T(n) = P_k(n)$ ⇒ T(n) is $O(n^m)$ for $m \ge k$; $\Theta(n^k)$; $\Omega(n^m)$ for $m \le k$
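The constant used in the polynomial bound is simply the leading coefficient plus the absolute values of the remaining ones. The sketch below computes it for the first running-time example and spot-checks the inequality; the helper names `poly` and `big_oh_constant` are illustrative assumptions, and the scan range is arbitrary.

```python
def poly(coeffs, n):
    """Evaluate a_k n^k + ... + a_0 for coeffs = [a_k, ..., a_0] (Horner's rule)."""
    value = 0
    for a in coeffs:
        value = value * n + a
    return value

def big_oh_constant(coeffs):
    """c = a_k + |a_{k-1}| + ... + |a_0|, as in the bound above."""
    return coeffs[0] + sum(abs(a) for a in coeffs[1:])

coeffs = [3, 5, 1]               # T(n) = 3n^2 + 5n + 1
k = len(coeffs) - 1              # degree of the polynomial
c = big_oh_constant(coeffs)      # 3 + 5 + 1

# Spot check of T(n) <= c * n^k for 1 <= n < 10,000
bound_holds = all(poly(coeffs, n) <= c * n ** k for n in range(1, 10_000))
print(c, bound_holds)
```

The same check passes with $n^3$ in place of $n^2$, which answers the question posed in the first bullet: a function that is $O(n^2)$ is also $O(n^3)$.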

Example 1.14, p.17

Exponential $g(n) = 2^{n+k}$ is $O(2^n)$: $2^{n+k} = 2^k \cdot 2^n$ for all n

Exponential $g(n) = m^{n+k}$ is $O(l^n)$, $l \ge m > 1$: $m^{n+k} \le l^{n+k} = l^k \cdot l^n$ for all n, k

A “brute-force” search for the best combination of n interdependent binary decisions by exhausting all the $2^n$ possible combinations has exponential time complexity!

Therefore, try to find a more efficient way of solving the decision problem with n ≥ 20 … 30
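A brute-force search over n binary decisions might look like the sketch below. The objective function `score` is purely hypothetical (it rewards chosen items but penalises adjacent pairs, so that decisions interact); only the enumeration of all $2^n$ combinations reflects the text.

```python
from itertools import product

def brute_force_best(n, score):
    """Try all 2**n assignments of n binary decisions; return (best, tried)."""
    best, tried = None, 0
    for bits in product((0, 1), repeat=n):
        tried += 1
        if best is None or score(bits) > score(best):
            best = bits
    return best, tried

def score(bits):
    """Hypothetical objective: +1 per chosen item, -2 per adjacent chosen pair."""
    return sum(bits) - 2 * sum(a & b for a, b in zip(bits, bits[1:]))

for n in (4, 8, 12):
    best, tried = brute_force_best(n, score)
    print(n, tried, best)
```

The counter `tried` doubles with every extra decision, which is why this approach becomes impractical for the problem sizes mentioned above.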

Example 1.15, p.17

• Logarithmic function $g(n) = \log_m n$ has the same rate of increase as $\log_2 n$ because $\log_m n = \log_m 2 \cdot \log_2 n$ for all n > 0 and m > 1

Do not write $O(\log_m n)$ as this still means O(log n)!

You will find later that the most efficient search for data in an ordered array has logarithmic time complexity
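The slides do not name the search algorithm at this point; binary search is the standard example of a logarithmic-time search in an ordered array and is assumed here. The sketch counts loop iterations so that the step count can be compared with $\log_2 n$.

```python
def binary_search(items, target):
    """Return (index or None, loop iterations) for a list sorted in ascending order."""
    lo, hi, steps = 0, len(items) - 1, 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid, steps
        if items[mid] < target:
            lo = mid + 1      # target can only be in the upper half
        else:
            hi = mid - 1      # target can only be in the lower half
    return None, steps

for size in (1_000, 1_000_000):
    data = list(range(size))
    _, steps = binary_search(data, -1)   # target absent from the array
    # size.bit_length() equals floor(log2(size)) + 1
    print(size, steps, size.bit_length())
```

Growing the array a thousandfold adds only about ten iterations, in line with the change-of-base identity: the base of the logarithm affects the count by a constant factor only.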

Lecture 3 COMPSCI 220 - AP G Gimel'farb 12

You might also like

- CS 253: Algorithms: Growth of FunctionsDocument22 pagesCS 253: Algorithms: Growth of FunctionsVishalkumar BhattNo ratings yet

- Analyzing Algorithm Complexity with Big-Oh, Big Omega, and Big Theta NotationDocument21 pagesAnalyzing Algorithm Complexity with Big-Oh, Big Omega, and Big Theta Notationsanandan_1986No ratings yet

- DAA ADEG Asymptotic Notation Minggu 4 - 11Document40 pagesDAA ADEG Asymptotic Notation Minggu 4 - 11Andika NugrahaNo ratings yet

- DAA ADEG Asymptotic Notation Minggu 4 11Document40 pagesDAA ADEG Asymptotic Notation Minggu 4 11Agra ArimbawaNo ratings yet

- CSC 305 VTL Lecture 04 20211Document26 pagesCSC 305 VTL Lecture 04 20211Bello TaiwoNo ratings yet

- Algorithms AnalysisDocument21 pagesAlgorithms AnalysisYashika AggarwalNo ratings yet

- Landau Notation: Dejvuth SuwimonteerabuthDocument13 pagesLandau Notation: Dejvuth SuwimonteerabuthNaomi ChandraNo ratings yet

- Bahan Presentasi. Chapter 03 - MITDocument23 pagesBahan Presentasi. Chapter 03 - MITAhmad Lukman HakimNo ratings yet

- AsymptoticNotation PDFDocument31 pagesAsymptoticNotation PDFMiguel Angel PiñonNo ratings yet

- Asymptotic NotationDocument66 pagesAsymptotic NotationFitriyah SulistiowatiNo ratings yet

- Big ODocument28 pagesBig OChandan kumar MohantaNo ratings yet

- Lect03 102 2019Document24 pagesLect03 102 2019Mukesh KumarNo ratings yet

- CS148 Algorithms Asymptotic AnalysisDocument41 pagesCS148 Algorithms Asymptotic AnalysisSharmaine MagcantaNo ratings yet

- The Growth of Functions and Big-O NotationDocument9 pagesThe Growth of Functions and Big-O Notationethio 90's music loverNo ratings yet

- Asymptotic Notation, Review of Functions & SummationsDocument45 pagesAsymptotic Notation, Review of Functions & Summationsnikhilesh walde100% (1)

- Big ODocument27 pagesBig ONeha SinghNo ratings yet

- AAA-5Document35 pagesAAA-5Muhammad Khaleel AfzalNo ratings yet

- Computer Sciences Department Bahria University (Karachi Campus)Document35 pagesComputer Sciences Department Bahria University (Karachi Campus)AbdullahNo ratings yet

- Data Structures 12 Asymptotic NotationsDocument29 pagesData Structures 12 Asymptotic NotationsSaad AlamNo ratings yet

- Section 2.2 of Rosen: Cse235@cse - Unl.eduDocument6 pagesSection 2.2 of Rosen: Cse235@cse - Unl.eduDibyendu ChakrabortyNo ratings yet

- 2IL50 Data Structures: 2018-19 Q3 Lecture 2: Analysis of AlgorithmsDocument39 pages2IL50 Data Structures: 2018-19 Q3 Lecture 2: Analysis of AlgorithmsJhonNo ratings yet

- CSD 205 – Design and Analysis of Algorithms: Asymptotic Notations and AnalysisDocument44 pagesCSD 205 – Design and Analysis of Algorithms: Asymptotic Notations and Analysisinstance oneNo ratings yet

- AdaDocument62 pagesAdasagarkondamudiNo ratings yet

- Analysis of Algorithms: Asymptotic Analysis and Big-O NotationDocument48 pagesAnalysis of Algorithms: Asymptotic Analysis and Big-O NotationAli Sher ShahidNo ratings yet

- Intro to Algorithms Chapter 3 Growth FunctionsDocument20 pagesIntro to Algorithms Chapter 3 Growth FunctionsAyesha shehzadNo ratings yet

- Algorithms and Data Structures I: Asymptotic Notations Θ, O, o, Ω, and ωDocument19 pagesAlgorithms and Data Structures I: Asymptotic Notations Θ, O, o, Ω, and ωMykhailo ShubinNo ratings yet

- Asymptotic Definitions For The Analysis of AlgorithmsDocument26 pagesAsymptotic Definitions For The Analysis of AlgorithmsSalman AhmadNo ratings yet

- Asymptotic Notation, Review of Functions & Summations: 12 June 2012 Comp 122, Spring 2004Document39 pagesAsymptotic Notation, Review of Functions & Summations: 12 June 2012 Comp 122, Spring 2004Deepa ArunNo ratings yet

- Understanding Asymptotic NotationDocument39 pagesUnderstanding Asymptotic NotationEr Umesh ThoriyaNo ratings yet

- Asymptotic Notation Review: Functions, Summations & ComplexityDocument39 pagesAsymptotic Notation Review: Functions, Summations & ComplexityMunawar AhmedNo ratings yet

- Department of M.Tech-Cse: Course: Design and Analysis of Algorithms Module: Fundamentals of Algorithm AnalysisDocument29 pagesDepartment of M.Tech-Cse: Course: Design and Analysis of Algorithms Module: Fundamentals of Algorithm AnalysissreerajNo ratings yet

- Week2 - Growth of FunctionsDocument64 pagesWeek2 - Growth of FunctionsFahama Bin EkramNo ratings yet

- Asymptotic NotationsDocument24 pagesAsymptotic NotationsMUHAMMAD USMANNo ratings yet

- Algorithms: Run Time Calculation Asymptotic NotationDocument38 pagesAlgorithms: Run Time Calculation Asymptotic NotationamanNo ratings yet

- Lect 6 - Asymptotic Notation-1Document24 pagesLect 6 - Asymptotic Notation-1kaushik.grNo ratings yet

- Analysis of Algorithms: Asymptotic NotationDocument25 pagesAnalysis of Algorithms: Asymptotic Notationhariom12367855No ratings yet

- Analysis of Algorithms: Asymptotic NotationDocument25 pagesAnalysis of Algorithms: Asymptotic Notationhariom12367855No ratings yet

- Asymptotic Notation, Review of Functions & SummationsDocument39 pagesAsymptotic Notation, Review of Functions & SummationsAshish SharmaNo ratings yet

- 02 AsympDocument39 pages02 AsympReaper UnseenNo ratings yet

- Mathematical Foundation of AlgorithmsDocument23 pagesMathematical Foundation of Algorithmsarup sarkerNo ratings yet

- CS 203 Data Structure & Algorithms: Performance AnalysisDocument26 pagesCS 203 Data Structure & Algorithms: Performance AnalysisSreejaNo ratings yet

- Growth of FunctionDocument8 pagesGrowth of FunctionjitensoftNo ratings yet

- CS 702 Lec10Document9 pagesCS 702 Lec10Muhammad TausifNo ratings yet

- Asymptotic Notation, Review of Functions & SummationsDocument39 pagesAsymptotic Notation, Review of Functions & SummationsLalita LuthraNo ratings yet

- 1-Complexity Analysis Basics-23-24Document74 pages1-Complexity Analysis Basics-23-24walid annadNo ratings yet

- CSD 205 - Algorithms Analysis and Asymptotic NotationsDocument47 pagesCSD 205 - Algorithms Analysis and Asymptotic NotationsHassan Sharjeel JoiyaNo ratings yet

- 03 - Inha Asymptotic NotationsDocument28 pages03 - Inha Asymptotic Notationsrashid.shorustamov2003No ratings yet

- Lec - 2 - Analysis of Algorithms (Part 2)Document45 pagesLec - 2 - Analysis of Algorithms (Part 2)Ifat NixNo ratings yet

- Asymptotic Notation - Analysis of AlgorithmsDocument31 pagesAsymptotic Notation - Analysis of AlgorithmsManya KhaterNo ratings yet

- Asymptotic NotationDocument39 pagesAsymptotic NotationthornemusauNo ratings yet

- Performance Analysis: Order of Growth AnalysisDocument20 pagesPerformance Analysis: Order of Growth AnalysisSteve LukeNo ratings yet

- Asymptotic Analysis-1/KICSIT/Mr. Fahim Khan/1Document29 pagesAsymptotic Analysis-1/KICSIT/Mr. Fahim Khan/1Sanwal abbasiNo ratings yet

- Time ComplexityDocument13 pagesTime Complexityapi-3814408100% (1)

- Algorithms and Data Structures Lecture Slides: Asymptotic Notations and Growth Rate of Functions, Brassard Chap. 3Document41 pagesAlgorithms and Data Structures Lecture Slides: Asymptotic Notations and Growth Rate of Functions, Brassard Chap. 3Phạm Gia DũngNo ratings yet

- COMP2230 Introduction To Algorithmics: Lecture OverviewDocument19 pagesCOMP2230 Introduction To Algorithmics: Lecture OverviewMrZaggyNo ratings yet

- Analysis of Algorithms: Dr. Ijaz HussainDocument61 pagesAnalysis of Algorithms: Dr. Ijaz HussainUmarNo ratings yet

- Asymptotic Not SEM IVDocument19 pagesAsymptotic Not SEM IVRishabh RaiNo ratings yet

- Lecture2 CleanDocument39 pagesLecture2 CleanAishah MabayojeNo ratings yet

- Asymptotic Notations ExplainedDocument29 pagesAsymptotic Notations Explainedabood jalladNo ratings yet

- Academics HandbookDocument57 pagesAcademics HandbookSubraNo ratings yet

- Aspect NewsDocument13 pagesAspect NewsEr Biswajit ChatterjeeNo ratings yet

- Problems with Fishes, Dice, Credit Cards, and MoreDocument8 pagesProblems with Fishes, Dice, Credit Cards, and MoreEr Biswajit ChatterjeeNo ratings yet

- Exercises for the continuation flightDocument4 pagesExercises for the continuation flightEr Biswajit ChatterjeeNo ratings yet

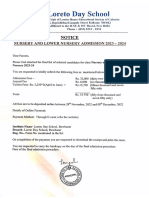

- Guidelinefore Care Proof Loreto Day School BowbazarDocument2 pagesGuidelinefore Care Proof Loreto Day School BowbazarEr Biswajit ChatterjeeNo ratings yet

- Payment Notice For Nursery and Lower Nursery 23-24 - 28novvDocument2 pagesPayment Notice For Nursery and Lower Nursery 23-24 - 28novvEr Biswajit ChatterjeeNo ratings yet

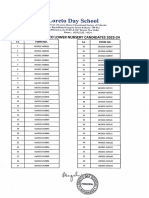

- List of Selected Lower Nursery Candidates 2023 2024Document3 pagesList of Selected Lower Nursery Candidates 2023 2024Er Biswajit ChatterjeeNo ratings yet

- List of Selected Nursery Candidates 2023 2024Document3 pagesList of Selected Nursery Candidates 2023 2024Er Biswajit ChatterjeeNo ratings yet

- Final PG 23 24 638204347Document21 pagesFinal PG 23 24 638204347Er Biswajit ChatterjeeNo ratings yet

- Mathzc234 Course HandoutDocument5 pagesMathzc234 Course HandoutAnandababuNo ratings yet

- Gravity Gradient TorqueDocument7 pagesGravity Gradient TorquePomPhongsatornSaisutjaritNo ratings yet

- Infix To PostfixDocument27 pagesInfix To PostfixbrainyhamzaNo ratings yet

- A Linear ProgramDocument58 pagesA Linear Programgprakash_mNo ratings yet

- Riemann Surface of Complex Logarithm and Multiplicative CalculusDocument19 pagesRiemann Surface of Complex Logarithm and Multiplicative Calculussameer denebNo ratings yet

- Drying Shrinkage of Concrete With Blended Cementitious Binders: Experimental Study and Application of ModelsDocument18 pagesDrying Shrinkage of Concrete With Blended Cementitious Binders: Experimental Study and Application of ModelsNM2104TE06 PRATHURI SUMANTHNo ratings yet

- Numerical Methods ReviewerDocument2 pagesNumerical Methods ReviewerKim Brian CarboNo ratings yet

- Elementary Theory of StructuresDocument112 pagesElementary Theory of StructuresGodwin AcquahNo ratings yet

- Bill Gaede: Science 341 (2014) What Is Physics?Document13 pagesBill Gaede: Science 341 (2014) What Is Physics?Herczegh TamasNo ratings yet

- Economics Question on Fixed and Variable CostsDocument3 pagesEconomics Question on Fixed and Variable CostskishellaNo ratings yet

- Objective Questions: Yx Xy X Xy XDocument10 pagesObjective Questions: Yx Xy X Xy XPragati DuttaNo ratings yet

- Chapter 10 Differential EquationsDocument22 pagesChapter 10 Differential EquationsAloysius Raj50% (2)

- Vo MAT 267 ONLINE B Fall 2022.ddeskinn - Section 12.1Document2 pagesVo MAT 267 ONLINE B Fall 2022.ddeskinn - Section 12.1NHƯ NGUYỄN THANHNo ratings yet

- Assignment 1Document7 pagesAssignment 1robert_tanNo ratings yet

- Digital Signal Processing With Mathlab Examples, Vol 1Document649 pagesDigital Signal Processing With Mathlab Examples, Vol 1Toti Caceres100% (3)

- Dr. Muhammad Shahzad Department of Computing (DOC), SEECS, NUSTDocument45 pagesDr. Muhammad Shahzad Department of Computing (DOC), SEECS, NUSTMaham JahangirNo ratings yet

- DFS C program to perform depth-first search on an undirected graphDocument5 pagesDFS C program to perform depth-first search on an undirected graphom shirdhankarNo ratings yet

- D 1780 - 99 Rde3odaDocument4 pagesD 1780 - 99 Rde3odaMarceloNo ratings yet

- Morphometric Analysis of Kabini River Basin in H.D.Kote Taluk, Mysore, Karnataka, IndiaDocument11 pagesMorphometric Analysis of Kabini River Basin in H.D.Kote Taluk, Mysore, Karnataka, IndiaEditor IJTSRDNo ratings yet

- Consistent inflow turbulence generator for LES evaluation of wind-induced responses for tall buildingsDocument19 pagesConsistent inflow turbulence generator for LES evaluation of wind-induced responses for tall buildingsHaitham AboshoshaNo ratings yet

- Condensed Matter PhysicsDocument10 pagesCondensed Matter PhysicsChristopher BrownNo ratings yet

- ME501 L3 Stress-Strain Relations Oct 2017Document8 pagesME501 L3 Stress-Strain Relations Oct 2017Firas RocktNo ratings yet

- Determinants of Costs and Cost FunctionDocument9 pagesDeterminants of Costs and Cost FunctionAsta YunoNo ratings yet

- Summative Test Q2W3&W4Document4 pagesSummative Test Q2W3&W4Querubee Donato Diolula50% (2)

- Multiple-Choice Test Eigenvalues and Eigenvectors: Complete Solution SetDocument7 pagesMultiple-Choice Test Eigenvalues and Eigenvectors: Complete Solution SetMegala SeshaNo ratings yet

- Dynamics of MachineDocument24 pagesDynamics of Machinesara vanaNo ratings yet

- Research Methodology: by Asaye Gebrewold (PHD Candidate)Document17 pagesResearch Methodology: by Asaye Gebrewold (PHD Candidate)natnael haileNo ratings yet

- Lecture 2 Bearing and Punching Stress, StrainDocument16 pagesLecture 2 Bearing and Punching Stress, StrainDennisVigoNo ratings yet

- Lesson Plans ALGEBRASeptember 20221Document2 pagesLesson Plans ALGEBRASeptember 20221Dev MauniNo ratings yet

- FAQs in C LanguageDocument256 pagesFAQs in C LanguageShrinivas A B0% (2)