2019 Hattendorf Theorem-2

Uploaded by

hanCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

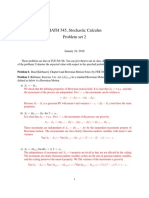

2019 Hattendorf Theorem-2

Uploaded by

hanCopyright:

Available Formats

Hattendorf’s Theorem

Let

$$L = v^{T_x} b_{T_x} - \int_0^{T_x} v^t \pi_t \, dt$$

be the future loss (prospective loss) random variable in a general fully-continuous life insurance model.

Theorem: $\operatorname{Var}[L] = \mathrm{E}\big[\big(v^{T_x}(b_{T_x} - {}_{T_x}V)\big)^2\big]$.
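As a quick numerical sanity check of the theorem, the sketch below assumes a unit continuous whole life insurance with a constant force of mortality $\mu$, a constant force of interest $\delta$ and a level premium rate $\pi$ that need not be the equivalence premium. Under these assumptions $\bar{A}_x = \mu/(\mu+\delta)$, $\bar{a}_x = 1/(\mu+\delta)$ and the reserve is the constant ${}_tV = (\mu - \pi)/(\mu + \delta)$, so the theorem predicts $\operatorname{Var}[L] = (1 - {}_tV)^2 \, {}^2\bar{A}_x$. The function name `variance_two_ways` is hypothetical, not from the source.

```python
import math


def variance_two_ways(mu: float, delta: float, pi: float) -> tuple[float, float]:
    """Var[L] for a unit fully-continuous whole life insurance, computed
    directly and via Hattendorf's Theorem.

    Assumes a constant force of mortality mu, a constant force of interest
    delta and a level premium rate pi (not necessarily the equivalence
    premium, so the reserve 0V need not be zero).
    """
    A1 = mu / (mu + delta)      # \bar A_x
    A2 = mu / (mu + 2 * delta)  # {}^2\bar A_x (force of interest 2*delta)

    # Direct route: L = v^T - pi (1 - v^T)/delta = (1 + pi/delta) v^T - pi/delta
    direct = (1 + pi / delta) ** 2 * (A2 - A1 ** 2)

    # The reserve is the same at every duration: tV = \bar A - pi * \bar a
    V = (mu - pi) / (mu + delta)
    # Hattendorf: Var[L] = E[(v^T (1 - V))^2] = (1 - V)^2 * {}^2\bar A_x
    hattendorf = (1 - V) ** 2 * A2
    return direct, hattendorf
```

For example, `variance_two_ways(0.02, 0.05, 0.03)` returns two values that agree up to floating-point rounding, even though ${}_0V \ne 0$ here.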

Proof: It follows from the following form of Thiele's differential equation,

$$v^t \pi_t \, dt = d\big(v^t \, {}_tV\big) + v^t (b_t - {}_tV)\, \mu_{x+t} \, dt,$$

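that

$$L = v^{T_x} b_{T_x} - \int_0^{T_x} d\big(v^t \, {}_tV\big) - \int_0^{T_x} v^t (b_t - {}_tV)\, \mu_{x+t} \, dt,$$

or, since $\int_0^{T_x} d\big(v^t \, {}_tV\big) = v^{T_x} \, {}_{T_x}V - {}_0V$,

$$L - {}_0V = v^{T_x}(b_{T_x} - {}_{T_x}V) - \int_0^{T_x} v^t (b_t - {}_tV)\, \mu_{x+t} \, dt.$$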
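The decomposition of $L - {}_0V$ holds path by path, for every value of $T_x$. A minimal check under a hypothetical constant-force setup (unit benefit, force of mortality $\mu$, force of interest $\delta$, level premium rate $\pi$), where the reserve is the constant $V = (\mu - \pi)/(\mu + \delta)$ and the integral has the closed form $(1 - V)\,\mu\,(1 - v^{T_x})/\delta$; the helper name `both_sides` is ours:

```python
import math


def both_sides(T: float, mu: float, delta: float, pi: float) -> tuple[float, float]:
    """Return (L - 0V, right-hand side of the decomposition) for one lifetime T.

    Hypothetical setup: unit benefit, constant force of mortality mu,
    constant force of interest delta, level premium rate pi.
    """
    vT = math.exp(-delta * T)
    V = (mu - pi) / (mu + delta)          # constant reserve tV for all t
    L = vT - pi * (1 - vT) / delta        # v^T b_T - int_0^T v^t pi dt
    # v^T (b_T - T V) - int_0^T v^t (b_t - tV) mu dt, integral in closed form
    rhs = vT * (1 - V) - (1 - V) * mu * (1 - vT) / delta
    return L - V, rhs
```

Evaluating `both_sides` at any $T \ge 0$ returns two equal numbers, which is the pathwise identity above.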

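Because ${}_0V = \mathrm{E}[L]$, the theorem is proved if we can show that

$$\mathrm{E}\Big[\Big(v^{T_x}(b_{T_x} - {}_{T_x}V) - \int_0^{T_x} v^t (b_t - {}_tV)\, \mu_{x+t} \, dt\Big)^2\Big] = \mathrm{E}\Big[\big(v^{T_x}(b_{T_x} - {}_{T_x}V)\big)^2\Big],$$

or that

$$\mathrm{E}\Big[\Big(\int_0^{T_x} v^t (b_t - {}_tV)\, \mu_{x+t} \, dt\Big)^2\Big] = 2\,\mathrm{E}\Big[v^{T_x}(b_{T_x} - {}_{T_x}V) \times \int_0^{T_x} v^t (b_t - {}_tV)\, \mu_{x+t} \, dt\Big], \qquad (*)$$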

which we now show by means of integration by parts. By the law of the unconscious statistician, and because

$$f_{T_x}(s)\, ds = -d\, {}_sp_x,$$

the LHS of (*) is

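$$
\begin{aligned}
-\int_{s=0}^{s=\infty} \Big[\int_0^s v^t (b_t - {}_tV)\, \mu_{x+t}\, dt\Big]^2 d\,{}_sp_x
&= -\Big[\int_0^s v^t (b_t - {}_tV)\, \mu_{x+t}\, dt\Big]^2 \times {}_sp_x \,\Big|_{s=0}^{s=\infty} + \int_{s=0}^{s=\infty} {}_sp_x \times d\Big[\int_0^s v^t (b_t - {}_tV)\, \mu_{x+t}\, dt\Big]^2 \\
&= \int_{s=0}^{s=\infty} {}_sp_x \times d\Big[\int_0^s v^t (b_t - {}_tV)\, \mu_{x+t}\, dt\Big]^2 \\
&= \int_{s=0}^{s=\infty} {}_sp_x \times 2\Big[\int_0^s v^t (b_t - {}_tV)\, \mu_{x+t}\, dt\Big]^{2-1} \times d\Big[\int_0^s v^t (b_t - {}_tV)\, \mu_{x+t}\, dt\Big] \\
&= 2\int_{s=0}^{s=\infty} {}_sp_x \Big[\int_0^s v^t (b_t - {}_tV)\, \mu_{x+t}\, dt\Big] \times v^s (b_s - {}_sV)\, \mu_{x+s}\, ds,
\end{aligned}
$$

where the boundary term vanishes because the inner integral is zero at $s = 0$ and ${}_sp_x \to 0$ as $s \to \infty$ (with the inner integral staying bounded). This is the RHS of (*), as ${}_sp_x \times \mu_{x+s} = f_{T_x}(s)$. Q.E.D.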
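Identity (*) can also be checked by quadrature. The sketch below assumes the same hypothetical constant-force setup as before (unit benefit, force of mortality $\mu$, force of interest $\delta$, level premium rate $\pi$, so $b_t - {}_tV = 1 - V$ with $V = (\mu - \pi)/(\mu + \delta)$), truncates the lifetime at `t_max`, and applies the trapezoid rule both to the inner integral and to the two expectations. For this setup both sides equal $2(1-V)^2\mu^2/\big((\mu+\delta)(\mu+2\delta)\big)$; the function name `star_sides` is ours.

```python
import math


def star_sides(mu: float, delta: float, pi: float,
               t_max: float = 800.0, dt: float = 0.01) -> tuple[float, float]:
    """Trapezoid-rule approximations of the LHS and RHS of (*).

    Hypothetical setup: unit benefit, constant force of mortality mu,
    constant force of interest delta, level premium rate pi. The lifetime
    is truncated at t_max, where the survival probability is negligible.
    """
    V = (mu - pi) / (mu + delta)   # constant reserve, so b_t - tV = 1 - V
    n = int(round(t_max / dt))
    G = 0.0        # inner integral  int_0^s v^t (b_t - tV) mu dt
    h_prev = 0.0   # inner integrand at the previous grid point
    lhs = rhs = 0.0
    for i in range(n + 1):
        s = i * dt
        v_s = math.exp(-delta * s)
        h = v_s * (1 - V) * mu              # v^s (b_s - sV) mu_{x+s}
        if i > 0:
            G += 0.5 * (h_prev + h) * dt    # cumulative trapezoid step
        h_prev = h
        f = mu * math.exp(-mu * s)          # density f_{T_x}(s) = sp_x * mu
        w = 0.5 * dt if i in (0, n) else dt  # outer trapezoid weight
        lhs += w * G * G * f                    # E[G(T)^2]
        rhs += w * 2 * v_s * (1 - V) * G * f    # 2 E[v^T (b_T - TV) G(T)]
    return lhs, rhs
```

With the default grid, `star_sides(0.05, 0.04, 0.03)` gives two numbers that agree to several significant digits; a finer `dt` tightens the agreement.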

Because we do not need to assume ${}_0V$ to be zero, we obtain the following corollary.

The variance of the time-$t$ future loss (prospective loss) r.v.,

$${}_tL = v^{T_{x+t}} b_{t+T_{x+t}} - \int_0^{T_{x+t}} v^s \pi_{t+s}\, ds,$$

is

$$\operatorname{Var}[{}_tL] = \mathrm{E}\Big[\big(v^{T_{x+t}}(b_{t+T_{x+t}} - {}_{t+T_{x+t}}V)\big)^2\Big].$$

Applications: AM Exercise 8.24, Exercise 6.6. For Exercise 6.6, first note that if $\mu_{x+\tau} = \mu$ for all $\tau > 0$, then ${}_tV(\bar{A}_x) = 0$ for all $t$. Hence, by Hattendorf's Theorem,

$$\operatorname{Var}[L] = \mathrm{E}\big[\big(v^{T_x}(1 - 0)\big)^2\big] = {}^2\bar{A}_x = \frac{\mu}{\mu + 2\delta}.$$
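A Monte Carlo sketch of the same result, assuming $T_x$ is exponential with rate $\mu$ (constant force), a unit benefit and the equivalence premium rate $\pi = \mu$, under which every reserve is zero. The sample size, seed and function name `simulate_var_L` are arbitrary choices for illustration.

```python
import math
import random


def simulate_var_L(mu: float, delta: float, n: int = 200_000, seed: int = 1) -> float:
    """Sample variance of L = v^T - pi * abar_{T|} with T ~ Exponential(mu)
    and the equivalence premium rate pi = mu (constant force of mortality)."""
    rng = random.Random(seed)
    pi = mu  # equivalence premium rate: \bar A_x / \bar a_x = mu
    losses = []
    for _ in range(n):
        T = rng.expovariate(mu)                     # future lifetime T_x
        vT = math.exp(-delta * T)
        losses.append(vT - pi * (1 - vT) / delta)   # abar_{T|} = (1 - v^T)/delta
    mean = sum(losses) / n                          # close to 0V = 0
    return sum((x - mean) ** 2 for x in losses) / n
```

With $\mu = 0.02$ and $\delta = 0.05$ the simulated variance should land close to $\mu/(\mu + 2\delta) = 1/6$, up to sampling error.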

Remarks (i) Hattendorf's Theorem for the fully discrete case has an extra factor:

$$\operatorname{Var}\Big[v^{K_x+1} b_{K_x+1} - \sum_{j=0}^{K_x} v^j \pi_j\Big] = \mathrm{E}\Big[\big(v^{K_x+1}(b_{K_x+1} - {}_{K_x+1}V)\big)^2 \times p_{x+K_x}\Big].$$

Again, it is not necessary to assume ${}_0V$ to be zero. You may want to try to derive the formula by summation by parts; it is not easy.
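To see the extra factor $p_{x+K_x}$ at work, the sketch below compares the variance computed directly from the distribution of $K_x$ with the right-hand side of the discrete formula. It assumes a small hypothetical life table (the last entry of `q` equals 1), benefits `b[k]` $= b_{k+1}$, premiums `prem[k]` $= \pi_k$, and reserves from the usual backward recursion ${}_kV = v\,(q_{x+k} b_{k+1} + p_{x+k}\,{}_{k+1}V) - \pi_k$ with ${}_nV = 0$. The premiums are deliberately not equivalence premiums, so ${}_0V \ne 0$.

```python
def discrete_variances(q, b, prem, v):
    """Return (direct Var[L], discrete Hattendorf sum, E[L], 0V).

    q[k] = q_{x+k} (last entry 1), b[k] = b_{k+1}, prem[k] = pi_k.
    """
    n = len(q)
    V = [0.0] * (n + 1)  # V[k] = kV; V[n] = 0 is only ever multiplied by p = 0
    for k in range(n - 1, -1, -1):
        V[k] = v * (q[k] * b[k] + (1 - q[k]) * V[k + 1]) - prem[k]

    surv = 1.0      # k p_x
    pv_prem = 0.0   # sum_{j<=k} v^j pi_j
    m1 = m2 = hatt = 0.0
    for k in range(n):
        pv_prem += v ** k * prem[k]
        prob = surv * q[k]                           # P(K_x = k)
        loss = v ** (k + 1) * b[k] - pv_prem         # L given K_x = k
        m1 += prob * loss
        m2 += prob * loss ** 2
        # E[(v^{K+1}(b_{K+1} - {K+1}V))^2 p_{x+K}], term for K_x = k
        hatt += prob * (v ** (k + 1) * (b[k] - V[k + 1])) ** 2 * (1 - q[k])
        surv *= 1 - q[k]
    return m2 - m1 ** 2, hatt, m1, V[0]
```

The first two returned values coincide, and the third equals the fourth, i.e. $\mathrm{E}[L] = {}_0V$.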

(ii) Consider the last part of Exercise 6.12 of AM. Here, $K_x$ is a geometric random variable. Then $p_{x+k} = r$ for all integers $k$, and ${}_jV_x = 0$ for all integers $j$. Hence,

$$\operatorname{Var}[L] = \mathrm{E}\big[\big(v^{K_x+1}(1 - 0)\big)^2 \times r\big] = {}^2A_x \times r,$$

which immediately gives the solution to HW Set 6, Problem 1(ii).
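A short check of the geometric case against the familiar formula $\operatorname{Var}[L] = (1 + P/d)^2\,({}^2A_x - A_x^2)$, assuming $\Pr(K_x = k) = r^k(1 - r)$ for $k = 0, 1, 2, \ldots$, a unit death benefit and the level net premium $P = A_x/\ddot{a}_x$ with $\ddot{a}_x = (1 - A_x)/d$. The function name is hypothetical.

```python
def geometric_check(r: float, v: float) -> tuple[float, float]:
    """Compare the textbook variance formula with Hattendorf's Theorem when
    K_x is geometric, P(K_x = k) = r^k (1 - r); unit benefit, level net
    premium P paid at the start of each year."""
    d = 1 - v
    A1 = (1 - r) * v / (1 - r * v)             # A_x
    A2 = (1 - r) * v ** 2 / (1 - r * v ** 2)   # {}^2A_x
    P = d * A1 / (1 - A1)                      # A_x / \ddot a_x
    standard = (1 + P / d) ** 2 * (A2 - A1 ** 2)
    hattendorf = A2 * r                        # every reserve jV_x is zero
    return standard, hattendorf
```

For instance, with $r = 0.9$ and $v = 0.95$ both routes give the same variance; algebraically, $(1 + P/d)^2 = \big((1 - rv)/(1 - v)\big)^2$ and the product simplifies to $r \cdot {}^2A_x$.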

(iii) Professor Hans U. Gerber would illustrate the decomposition

$$v^t \pi_t \, dt = d\big(v^t \, {}_tV\big) + v^t (b_t - {}_tV)\, \mu_{x+t} \, dt$$

as follows:
