Drones and spatial AI for improving crop yield

Uploaded by

gopika hari

Drones and Spatial Artificial Intelligence for Improving Crop Yield: An Implementation Concept

Carson Farmer and Dr. Hector Medina

Abstract

With total agricultural yield expected to increase by 50%-100% by the year 2050, agricultural producers are looking for innovative methods for increasing crop yield. By utilizing computer vision and artificial intelligence, researchers have shown that computer algorithms can distinguish weeds from crop growth. With recent innovations in low-cost hardware allowing for depth perception alongside high-resolution imaging, new network architectures capable of detecting and recognizing weeds, pests, and other potential crop issues are in development. The solution we are proposing to develop utilizes a vision processing unit to perform accelerated machine learning on camera images to achieve near real-time results without incurring extra computational time on the host computer. The system allows for off-network or edge applications to be developed which are capable of performing object recognition and classification without the use of powerful computers. Furthermore, with the average farm in Virginia being approximately 180 acres, autonomous mapping and planning are required for accurate estimation of crop health. For this application, a prior unmanned aerial vehicle (UAV), which was used for social distance monitoring, provides a suitable vehicle for performing autonomous mapping and crop analysis of the field. By combining the autonomous UAV with the advanced camera system, obtaining accurate crop health information is now in the realm of possibility. Furthermore, farmers will benefit from the additional crop information provided by the system and can implement custom pest prevention and herbicide deployment protocols. With these new advances, future improvements to how farming is conducted are expected. The results expected to be achieved are shown in Fig. 1.

Figure 1. Visual Representation of Drone-based Spatial AI for Crop Improvement. Combining cutting-edge low-power computer vision hardware and autonomous flight, the goal of the research is to develop a flight system capable of gathering a large amount of information from a field for processing on an HPC system to provide insight to farmers on the best actions to take to produce an increase in total yield.

Background and Introduction

In Virginia, a significant amount of income results from agriculture and related industries [1]. According to the Virginia Department of Agriculture and Consumer Services, agriculture comprises approximately $70 billion of the total state income and provides more than 334,000 jobs in the Commonwealth of Virginia [1]. Five of the top ten nationwide agricultural commodities are produced in Virginia [1]. With recent interest in increasing the total yield of farms to meet growing demand around the world, farmers are beginning to turn to “smart” techniques to further improve their total yield [2, 3, 4]. With artificial intelligence (AI) and computer vision (CV) becoming more accessible and deployable, the agriculture industry is beginning to utilize these advances in unique ways [5-7]. One such example is the Microsoft FarmBeats project [6]. The FarmBeats project combined advances in wireless communication with distributed sensors to determine soil moisture levels for farms [6]. In examinations of other external factors on crop growth and yield, weeds were shown to have a significant impact on the growth of plants and the distribution of nutrients, and metrics have been developed for measuring the impact of weeds [8]. These metrics predict that as the density of weeds in the field increases, the total crop yield will rapidly decrease. Likewise, it has been shown that the influence of rodents can decrease crop yield by at least 12% [9]. Furthermore, diseases, such as Sooty Mold, have been found to create widespread losses to farms (see Fig. 2) [10]. While these “smart” systems have proven useful in increasing total yield, the average farm in Virginia is 181 acres [1], which creates a challenge in deploying sensors and cameras to monitor the whole field. The use of a drone and additional information about crops will benefit farmers by helping them increase the total yield. In utilizing autonomous drones, the research aims to combine the power of advanced computer vision and machine learning with autonomous systems to gather information about the field and the distribution of plant diseases, weeds, and insects.

Figure 2. Annotations of Sooty Mold on Leaves. In performing the research, a diverse dataset will be gathered which will include annotations of plant type, disease, pest, and weeds. The diverse dataset will be a challenge for the research, both to gather and to annotate the images for training. With recent advances in annotation software, the process can be done easily, but human interaction will be required to process a large number of images.

Expected Challenges and Results

Expected Challenges:

1. Diverse Dataset and Annotation/Labeling: With the research focused on directly helping farmers, considerations for the types of crop, possible pests and diseases, and the variety of weeds need to be accounted for when designing the system. The training data developed for the system will require a diverse grouping of different plants and diseases to be present. One issue that could arise is a dataset that is too small, so that the network cannot be trained to accurately recognize diseases or inaccurately characterizes plants. In performing data gathering flights, images from the camera can be directly taken and stored. The human aspect of the project will involve a manual process of annotating each image and labeling plants, diseases, weeds, and pests for detection. The labeled images will look similar to Fig. 2. Furthermore, the fusion of depth data with the optical image will be introduced, which will require further logging of information alongside the images (see Fig. 4).

2. Route Planning and Navigation: With the drone flying over different fields and crop types, a suitable navigation system is required. The implementation of the navigation scheme will need to make use of visual cues from the field to determine crop boundaries. Since UAVs have a tradeoff between battery life and weight, achieving optimal flight paths will allow for coverage of the field while minimizing the flight time of the drone.

3. Data Interpretation: After a neural network similar to Fig. 5 has processed the image and depth data from the drone, the actions and recommendations to the farmer need to be determined. These actions are formulated from analysis of historical data informed by a literature review of the appropriate methods for mitigating the loss incurred.

Expected Results:

1. Informed Decision Process for Mitigating Crop Losses: Ultimately, the research aims to produce an integrated system capable of determining the impacts of external factors on crop growth. Furthermore, the system will be designed to be scalable to a large number of producers to aid in increasing crop yield. With the informed decision process, producers are expected to see an increase in total yield as a result of targeted actions.

2. Crop Type, Pest, Disease, and Weed Dataset with Depth Information: A byproduct of the research will be a dataset containing information on different crop types, pests, diseases, and weeds correlated with depth information gathered from the cameras. The new dataset will be required for training the neural networks responsible for determining the locations, sizes, and shapes of the external factors.

3. Large-Scale Crop Management System: The process of deploying the neural network demonstrates a new era in integrating HPC and cloud resources into agriculture. The crop management system developed provides a key milestone for producers in seeing an increase in crop yield from technological interfaces.
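The route planning challenge (covering the field while minimizing flight time) can be illustrated with a simple back-and-forth "lawnmower" coverage pattern. The sketch below is not the navigation scheme proposed in this work. It assumes a rectangular field described in a local metric frame, passes that run along the y axis (taken here to be the planting direction), and a fixed camera swath width. The function names and parameters are hypothetical.

```python
import math


def lawnmower_waypoints(width_m, length_m, swath_m):
    """Return (x, y) waypoints covering a width_m x length_m rectangle.

    Passes run parallel to the y axis (assumed planting direction) and are
    spaced so that camera swaths of width swath_m cover the whole field.
    """
    if width_m <= 0 or length_m <= 0 or swath_m <= 0:
        raise ValueError("all dimensions must be positive")
    n_passes = math.ceil(width_m / swath_m)
    spacing = width_m / n_passes  # never larger than swath_m, so no gaps
    waypoints = []
    for i in range(n_passes):
        x = (i + 0.5) * spacing  # centre line of pass i
        # Alternate direction on each pass to avoid flying back empty.
        start_y, end_y = (0.0, length_m) if i % 2 == 0 else (length_m, 0.0)
        waypoints.append((x, start_y))
        waypoints.append((x, end_y))
    return waypoints


def path_length(waypoints):
    """Total straight-line distance flown through the waypoints, in metres."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


if __name__ == "__main__":
    wps = lawnmower_waypoints(width_m=100.0, length_m=200.0, swath_m=30.0)
    print(len(wps), "waypoints,", path_length(wps), "m flown")
```

Aligning the passes with the long side of the field reduces the number of turns, which is one reason identifying the planting direction matters for battery-limited flights.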

Methods

Major Milestones:

1. Dataset Creation

2. Field Navigation

3. Model Architecture Selection and Training for Images and Long-Term Yield

4. Development of Crop Treatment Recommendations

5. Deployment for User Testing

Figure 3. Currently Developed Drone. The drone that is currently being used by TRACER Labs was developed to detect people for enforcing social distancing at outdoor gatherings. Drawing from the lessons learned during the prior research, the future agriculture project builds upon the drone by adding low-power computer vision modules for gathering depth and image information.

Figure 4. Depth Map (left) and Image (right) of a Bush. The advanced camera system to be mounted on the drone is capable of capturing 4K images along with performing stereoscopic depth calculations in close to real-time. Moreover, the camera is equipped with a vision processing unit which can perform convolutional neural network calculations in close to real-time on the images and depth data. These advances allow the drone to autonomously navigate and capture images of the field and log areas of interest.

Description of Milestones

1. Dataset Creation: Resulting from an extensive literature review of plant diseases and crop changes, a dataset of labelled and annotated images will be created. Furthermore, the dataset needs to include images of the host plant and crop. These images will aid in locating the individual plants from aerial images.

2. Field Navigation: To limit power consumption of the drone while achieving optimal field coverage, the drone will be required to identify the planting direction of the crop and create an optimal flight path while covering the area. The design of the algorithm provides a method for performing full field navigation autonomously in locations where GPS coordinates may not be known for the field. The drone used for the tests is shown in Fig. 3.

3. Model Architecture Selection and Training for Images and Long-Term Yield: With a dataset of crop images along with weeds, pests, and diseases, the implementation of a neural network trained to recognize the key characteristics is required. In modern CV, the problem is decomposed into two tasks: object detection and classification (see Fig. 5). With several major models existing to accomplish these tasks, the training of such a model for the agriculture application will require multiple iterations and testing to achieve an accurately trained model.

4. Development of Crop Treatment Recommendations: After using a machine learning approach on the large-scale data collected from the drones, a target set of actions, such as the application of pesticides and fertilizers to specific areas, will be produced. The goal of the actions will be to help farmers actively work to increase crop yield during the growing cycle. The information from the 3rd Milestone will determine the appropriate actions to be taken to improve the overall yield.

5. Deployment for User Testing: The final stage of the research involves incorporating all of the prior milestones into a coherent user interface that is accessible to the farmer. As part of the research, the plan is to connect both local and international farms to the proposed system to receive feedback on the system. In processing the data of multiple farms of varying sizes, high performance computing (HPC) along with cloud-based resources will be utilized to provide farm- and field-specific corrective actions. The deployment of the functional system will provide new opportunities for crop providers to improve crop yield during the growth cycle.

Figure 5. Network Architecture for Object Detection and Classification in Image Only Convolutional Neural Networks. The process of creating an image processing neural network involves taking the input image and first determining regions of interest. Next, the regions of interest are combined with a predictor to determine if the region is a classifiable object or the background. Then, depending on the type of detector, a final stage can be added to perform advanced predictions on the image at the cost of increasing the number of training parameters and computational time. With the research being conducted, the depth data can be formatted as an extra channel in the image. Image from [11].

Conclusion

As a result of the research, innovations in the agriculture industry are expected. With producers experiencing an increased demand, measures to improve the total crop yield are required. By combining low-power cutting-edge computer vision hardware and autonomous drones, the work is expected to produce a trained neural network for locating plants, weeds, diseases, and pests from aerial images. Moreover, the work is expected to produce a machine learning approach for determining appropriate actions to increase crop yield. As the system is deployed, user testing and feedback will aid in further refinement of the system to produce actionable information for the producers. The entire process is part of a new revolution in agriculture where technology and traditional farming methods are merging to produce informed and improved agricultural processes.

References and/or Acknowledgments

[1] Agriculture facts and figures. Virginia Department of Agriculture and Consumer Services, 2021.

[2] Navin Ramankutty, Zia Mehrabi, Katharina Waha, Larissa Jarvis, Claire Kremen, Mario Herrero, and Loren H Rieseberg. Trends in global agricultural land use: implications for environmental health and food security. Annual Review of Plant Biology, 69:789–815, 2018.

[3] Muhammad Ayaz, Mohammad Ammad-Uddin, Zubair Sharif, Ali Mansour, and El-Hadi M Aggoune. Internet-of-things (iot)-based smart agriculture: Toward making the fields talk. IEEE Access, 7:129551–129583, 2019.

[4] N Suma, Sandra Rhea Samson, S Saranya, G Shanmugapriya, and R Subhashri. Iot based smart agriculture monitoring system. International Journal on Recent and Innovation Trends in Computing and Communication, 5(2):177–181, 2017.

[5] Mang Tik Chiu, Xingqian Xu, Yunchao Wei, Zilong Huang, Alexander G Schwing, Robert Brunner, Hrant Khachatrian, Hovnatan Karapetyan, Ivan Dozier, Greg Rose, et al. Agriculture-vision: A large aerial image database for agricultural pattern analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2828–2838, 2020.

[6] Deepak Vasisht, Zerina Kapetanovic, Jongho Won, Xinxin Jin, Ranveer Chandra, Sudipta Sinha, Ashish Kapoor, Madhusudhan Sudarshan, and Sean Stratman. Farmbeats: An iot platform for data-driven agriculture. In 14th USENIX Symposium on Networked Systems Design and Implementation (NSDI 17), pages 515–529, 2017.

[7] Maanak Gupta, Mahmoud Abdelsalam, Sajad Khorsandroo, and Sudip Mittal. Security and privacy in smart farming: Challenges and opportunities. IEEE Access, 8:34564–34584, 2020.

[8] HDJ Van Heemst. The influence of weed competition on crop yield. Agricultural Systems, 18(2):81–93, 1985.

[9] Peter R Brown, Neil I Huth, Peter B Banks, and Grant R Singleton. Relationship between abundance of rodents and damage to agricultural crops. Agriculture, Ecosystems & Environment, 120(2-4):405–415, 2007.

[10] W L Tedders and J S Smith. Shading effect on pecan by sooty mold growth. Journal of Economic Entomology, 69(4):551–553, 1976.

[11] A Bochkovskiy, C Y Wang, and H Y M Liao. Yolov4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934, 2020.
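The Figure 5 caption notes that depth data can be formatted as an extra channel in the image. A minimal sketch of that idea follows. The function name `stack_rgbd`, the nested-list image layout, and the 10 m clipping range are illustrative assumptions rather than details from this work, and an array library would normally replace the nested lists.

```python
def stack_rgbd(rgb, depth_m, max_depth_m=10.0):
    """Combine an RGB image and a depth map into one 4-channel image.

    rgb      : H x W nested list of (r, g, b) tuples with values 0-255
    depth_m  : H x W nested list of depths in metres
    Returns an H x W nested list of (r, g, b, d) tuples scaled to [0, 1].
    max_depth_m is an assumed clipping range, not a value from the source.
    """
    same_height = len(rgb) == len(depth_m)
    same_width = all(len(r) == len(d) for r, d in zip(rgb, depth_m))
    if not (same_height and same_width):
        raise ValueError("rgb and depth must have the same height and width")
    rgbd = []
    for rgb_row, depth_row in zip(rgb, depth_m):
        row = []
        for (r, g, b), d in zip(rgb_row, depth_row):
            # Clip depth to [0, max_depth_m], then scale to [0, 1].
            d_norm = min(max(d, 0.0), max_depth_m) / max_depth_m
            row.append((r / 255.0, g / 255.0, b / 255.0, d_norm))
        rgbd.append(row)
    return rgbd


if __name__ == "__main__":
    image = [[(255, 0, 0), (0, 255, 0)]]  # 1 x 2 RGB image
    depth = [[5.0, 20.0]]                 # matching depths in metres
    print(stack_rgbd(image, depth))
```

Scaling all four channels to the same range keeps the depth values from dominating the colour values when the stacked image is fed to a convolutional network whose first layer accepts four input channels.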

You might also like

- Introduction To Augmented Reality Hardware: Augmented Reality Will Change The Way We Live Now: 1, #1From EverandIntroduction To Augmented Reality Hardware: Augmented Reality Will Change The Way We Live Now: 1, #1No ratings yet

- Applied Cognitive Informatics and Cognitive Computing On Data ManagementDocument2 pagesApplied Cognitive Informatics and Cognitive Computing On Data ManagementMuthuram RNo ratings yet

- 04-2022 WIFS Y BelousovDocument6 pages04-2022 WIFS Y BelousovRémi CogranneNo ratings yet

- WHO EMP MAR 2012.3 Eng PDFDocument78 pagesWHO EMP MAR 2012.3 Eng PDFonovNo ratings yet

- Synopsis On Real Estate PHPDocument11 pagesSynopsis On Real Estate PHPDrSanjeev K ChaudharyNo ratings yet

- 70th Annual Conference of IAOH Mumbai - Delegate Brochure - 09-10apr2022Document4 pages70th Annual Conference of IAOH Mumbai - Delegate Brochure - 09-10apr2022Karthik SNo ratings yet

- Welcome To Your Digital Edition Of: Medical Design BriefsDocument42 pagesWelcome To Your Digital Edition Of: Medical Design Briefsarturojimenez72No ratings yet

- A Novel Edge-Based Multi-Layer Hierarchical Architecture For Federated LearningDocument5 pagesA Novel Edge-Based Multi-Layer Hierarchical Architecture For Federated LearningAmardip Kumar SinghNo ratings yet

- Law and Agriculture Final DraftDocument15 pagesLaw and Agriculture Final DraftVipul GautamNo ratings yet

- Khanh Do Bridging+Sustaiable+Development+and+Hypersurface Fall+2010Document103 pagesKhanh Do Bridging+Sustaiable+Development+and+Hypersurface Fall+2010Anonymous XQy14k7No ratings yet

- Column - Statistical Techniques - MedTech IntelligenceDocument1 pageColumn - Statistical Techniques - MedTech Intelligencehanipe2979No ratings yet

- International Journal of Intelligent Systems and Applications in EngineeringDocument6 pagesInternational Journal of Intelligent Systems and Applications in EngineeringJorge JiménezNo ratings yet

- Data Protection Impact Assessment Student Research: 1. Project Outline - What and WhyDocument6 pagesData Protection Impact Assessment Student Research: 1. Project Outline - What and WhyHerdade, Martini de CamposNo ratings yet

- MBA ProjectDocument32 pagesMBA ProjectHemali RameshNo ratings yet

- Khosla Ventures: On Disrupting Healthcare SectorDocument110 pagesKhosla Ventures: On Disrupting Healthcare SectorMohammed KhairyNo ratings yet

- M&E Plan for Organizational DevelopmentDocument18 pagesM&E Plan for Organizational DevelopmentTARE FOUNDATIONNo ratings yet

- Int Endodontic J - 2022 - Chaniotis - Present Status and Future Directions Management of Curved and Calcified Root CanalsDocument29 pagesInt Endodontic J - 2022 - Chaniotis - Present Status and Future Directions Management of Curved and Calcified Root CanalsLaura AmayaNo ratings yet

- Hospital Information-QuizDocument2 pagesHospital Information-QuizGeeyan Marlchest B NavarroNo ratings yet

- Q2 - PoWi GK - Google and Android Handout - Simran, Emilie, KiraDocument2 pagesQ2 - PoWi GK - Google and Android Handout - Simran, Emilie, KiraSimranNo ratings yet

- Technical Guidance: For The Development of The Growing Area Aspects of Bivalve Mollusc Sanitation ProgrammesDocument292 pagesTechnical Guidance: For The Development of The Growing Area Aspects of Bivalve Mollusc Sanitation Programmesmelisa saNo ratings yet

- Goldman Sachs CertificateDocument1 pageGoldman Sachs CertificateSAI PRANEETH REDDY DHADINo ratings yet

- Deep Semantic Segmentation of Natural and Medical ImagesDocument47 pagesDeep Semantic Segmentation of Natural and Medical ImagesMario Galindo QueraltNo ratings yet

- Optimizing Differentially Private Release of Hierarchical Census Data GroupsDocument17 pagesOptimizing Differentially Private Release of Hierarchical Census Data GroupsJohn SmithNo ratings yet

- Biomimicry Innovation Toolkit GuideDocument3 pagesBiomimicry Innovation Toolkit GuideNadav BagimNo ratings yet

- SAFC Biosciences Scientific Posters - Optimization of Serum-Free Media For EB14 Cell Growth and Viral ProductionDocument1 pageSAFC Biosciences Scientific Posters - Optimization of Serum-Free Media For EB14 Cell Growth and Viral ProductionSAFC-GlobalNo ratings yet

- BOSA: Binary Orientation Search Algorithm: ArticleDocument6 pagesBOSA: Binary Orientation Search Algorithm: ArticleSampath AnbuNo ratings yet

- Project ProposalDocument3 pagesProject ProposalBharathNo ratings yet

- Sejal Divekar Resume1Document2 pagesSejal Divekar Resume1Jason StanleyNo ratings yet

- Dubai HSE LAwDocument103 pagesDubai HSE LAwnoufalhse100% (2)

- School Counseling Example Action Plan TemplateDocument1 pageSchool Counseling Example Action Plan TemplateMJNo ratings yet

- Tugas7MetPenJI - Ridhallah Bil Fauzan - 20101154250027 - Ti2Document1 pageTugas7MetPenJI - Ridhallah Bil Fauzan - 20101154250027 - Ti2ridhallah bil fauzan 20-27No ratings yet

- Rapid Object Detection Using A Boosted Cascade of Simple FeaturesDocument9 pagesRapid Object Detection Using A Boosted Cascade of Simple FeaturesYuvraj NegiNo ratings yet

- Road Safety Forecasts in Five European Countries Using Structural Time-Series ModelsDocument1 pageRoad Safety Forecasts in Five European Countries Using Structural Time-Series ModelsEndashu KeneaNo ratings yet

- Question-Bank Cloud ComputingDocument3 pagesQuestion-Bank Cloud ComputingHanar AhmedNo ratings yet

- Smart Cameras As Embedded Systems-REPORTDocument17 pagesSmart Cameras As Embedded Systems-REPORTRajalaxmi Devagirkar Kangokar100% (1)

- Sat ImagesDocument1 pageSat ImagesJaniVacaPerezNo ratings yet

- Hes 008 - Sas 20Document1 pageHes 008 - Sas 20Juliannah ColinaresNo ratings yet

- 3.2.2 Building Program and Site EvaluationDocument4 pages3.2.2 Building Program and Site EvaluationshepiaekaNo ratings yet

- MIS - Wipro LimitedDocument7 pagesMIS - Wipro LimitedMehul DevatwalNo ratings yet

- Fertility Williams Estradiol 2005Document4 pagesFertility Williams Estradiol 2005Fouad RahiouyNo ratings yet

- Geoinformatics 2010 Vol02Document60 pagesGeoinformatics 2010 Vol02protogeografoNo ratings yet

- BiotechnologyDocument1 pageBiotechnologyNathan Stuart The Retarded idiotNo ratings yet

- 1 Interim ReportDocument29 pages1 Interim ReportPiyush sharmaNo ratings yet

- Goldman Sachs CertificateDocument1 pageGoldman Sachs CertificateAjjuNo ratings yet

- Summative Criterion D 2022 3 1Document4 pagesSummative Criterion D 2022 3 1Agus SulfiantoNo ratings yet

- Research Associate AdDocument7 pagesResearch Associate AdNarendra TrivediNo ratings yet

- Situation Analysis On Digital Learning in IndonesiaDocument112 pagesSituation Analysis On Digital Learning in IndonesiaAam YuliaNo ratings yet

- NPdeQ43o8P9HJmJzg - Goldman Sachs - CLSQjsjwQ4wkjGxkE - 1628572050403 - Completion - CertificateDocument1 pageNPdeQ43o8P9HJmJzg - Goldman Sachs - CLSQjsjwQ4wkjGxkE - 1628572050403 - Completion - CertificateVishal BidariNo ratings yet

- IT0005-Laboratory-Exercise-6 - Backup Data To External StorageDocument4 pagesIT0005-Laboratory-Exercise-6 - Backup Data To External StorageRhea KimNo ratings yet

- Research: Basis For Comparison Primary Data Secondary DataDocument6 pagesResearch: Basis For Comparison Primary Data Secondary DataMr ProductNo ratings yet

- SEBoKv1.0 FinalDocument852 pagesSEBoKv1.0 Finalh_bekhitNo ratings yet

- PIPAc Technical Note2Document1 pagePIPAc Technical Note2Jonayed Hossain SarkerNo ratings yet

- Smart Healthcare ApplicationDocument13 pagesSmart Healthcare ApplicationGaurav SuryavanshiNo ratings yet

- Robotic Telemanipulation SystemsDocument70 pagesRobotic Telemanipulation SystemsAbhishek DharNo ratings yet

- Goal-oriented communication reduces errors in real-time trackingDocument5 pagesGoal-oriented communication reduces errors in real-time trackingBhargav ChiruNo ratings yet

- ISEA Workshop on IoT EcosystemDocument2 pagesISEA Workshop on IoT EcosystemHarpreet Singh BaggaNo ratings yet

- Microbiology University Questions Paper GuideDocument6 pagesMicrobiology University Questions Paper GuideJENNIFER JOHN MBBS2020No ratings yet

- Manual Handling Policy 2018Document35 pagesManual Handling Policy 2018QNo ratings yet

- Learning-Based Practical Smartphone-SlidesDocument26 pagesLearning-Based Practical Smartphone-SlidesliyliNo ratings yet

- Microsoft Project Gantt Chart TutorialDocument7 pagesMicrosoft Project Gantt Chart Tutorialgopika hariNo ratings yet

- EJMCM - Volume 7 - Issue 5 - Pages 160-176Document17 pagesEJMCM - Volume 7 - Issue 5 - Pages 160-176gopika hariNo ratings yet

- A Hybrid of Watermark Scheme With Encryption To Improve Security of Medical ImagesDocument8 pagesA Hybrid of Watermark Scheme With Encryption To Improve Security of Medical Imagesgopika hariNo ratings yet

- CENG 3264 Lab Report InstructionDocument2 pagesCENG 3264 Lab Report Instructiongopika hariNo ratings yet

- CENG 3264: Engin Des and Project MGMT - Lab 3 Matlab Tutorial II - Image ProcessingDocument5 pagesCENG 3264: Engin Des and Project MGMT - Lab 3 Matlab Tutorial II - Image Processinggopika hariNo ratings yet

- Gestión Del Riesgo EmpresarialDocument5 pagesGestión Del Riesgo EmpresarialLuis YanayacoNo ratings yet

- Chapter 4 Chapter 4:: Topics: Digital Design and Computer Architecture, 2Document13 pagesChapter 4 Chapter 4:: Topics: Digital Design and Computer Architecture, 2gopika hariNo ratings yet

- CENG 5133: Computer Architecture Design: Sequential CircuitsDocument22 pagesCENG 5133: Computer Architecture Design: Sequential Circuitsgopika hariNo ratings yet

- Design For Electrical and Computer EngineersDocument323 pagesDesign For Electrical and Computer EngineersKyle Chang67% (3)

- Chapter 3 Chapter 3:: Topics: Digital Design and Computer Architecture, 2Document26 pagesChapter 3 Chapter 3:: Topics: Digital Design and Computer Architecture, 2gopika hariNo ratings yet

- 7Document12 pages7gopika hariNo ratings yet

- Chapter 1 Chapter 1:: Topics: Digital Design and Computer Architecture, 2Document25 pagesChapter 1 Chapter 1:: Topics: Digital Design and Computer Architecture, 2gopika hariNo ratings yet

- 1Document18 pages1gopika hariNo ratings yet

- 1Document18 pages1gopika hariNo ratings yet

- Lmx2370/Lmx2371/Lmx2372 Pllatinum Dual Frequency Synthesizer For RF Personal CommunicationsDocument16 pagesLmx2370/Lmx2371/Lmx2372 Pllatinum Dual Frequency Synthesizer For RF Personal Communications40818248No ratings yet

- Assignment in English Plus Core 11 Full CircleDocument76 pagesAssignment in English Plus Core 11 Full CircleRitu Maan68% (38)

- GypsumDocument79 pagesGypsumMansi GirotraNo ratings yet

- Bipolar I Disorder Case ExampleDocument6 pagesBipolar I Disorder Case ExampleGrape JuiceNo ratings yet

- CHAPT 12a PDFDocument2 pagesCHAPT 12a PDFindocode100% (1)

- Physical Activity Guidelines Advisory Committee ReportDocument683 pagesPhysical Activity Guidelines Advisory Committee Reportyitz22100% (1)

- SE 276B Syllabus Winter 2018Document2 pagesSE 276B Syllabus Winter 2018Manu VegaNo ratings yet

- Char Lynn 104 2000 Series Motor Data SheetDocument28 pagesChar Lynn 104 2000 Series Motor Data Sheetsyahril boonieNo ratings yet

- Mitsubishi Colt 2007 Rear AxleDocument2 pagesMitsubishi Colt 2007 Rear AxlenalokinNo ratings yet

- Friends of Hursley School: Late Summer NewsletterDocument6 pagesFriends of Hursley School: Late Summer Newsletterapi-25947758No ratings yet

- AstigmatismDocument1 pageAstigmatismAmmellya PutriNo ratings yet

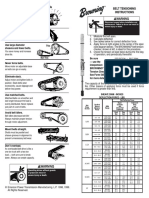

- Browning Belt Tension GaugeDocument2 pagesBrowning Belt Tension GaugeJasperken2xNo ratings yet

- Types of swords from around the worldDocument4 pagesTypes of swords from around the worldДмитрий МихальчукNo ratings yet

- Problem Set 3_Cross-Text ConnectionDocument31 pagesProblem Set 3_Cross-Text Connectiontrinhdat11012010No ratings yet

- Column FootingDocument57 pagesColumn Footingnuwan01100% (7)

- Nursing TheoriesDocument9 pagesNursing TheoriesMichelleneChenTadleNo ratings yet

- Journal of The Folk Song Society No.8Document82 pagesJournal of The Folk Song Society No.8jackmcfrenzieNo ratings yet

- B+G+2 Boq - (367-625)Document116 pagesB+G+2 Boq - (367-625)Amy Fitzpatrick100% (3)

- Bathymetry and Its Applications PDFDocument158 pagesBathymetry and Its Applications PDFArseni MaximNo ratings yet

- Solar Collectors Final WordDocument14 pagesSolar Collectors Final WordVaibhav Vithoba NaikNo ratings yet

- Foundation of EducationDocument31 pagesFoundation of EducationM T Ząřřąř100% (1)

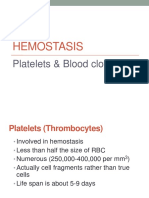

- Platelets & Blood Clotting: The Hemostasis ProcessDocument34 pagesPlatelets & Blood Clotting: The Hemostasis ProcesssamayaNo ratings yet

- MUSCULAR SYSTEM WORKSHEET Slides 1 To 4Document4 pagesMUSCULAR SYSTEM WORKSHEET Slides 1 To 4kwaiyuen ohnNo ratings yet

- Catalogo Tecnico Gb-S v07Document29 pagesCatalogo Tecnico Gb-S v07farou9 bmzNo ratings yet

- 9th Mole Concept and Problems Based On PDFDocument2 pages9th Mole Concept and Problems Based On PDFMintu KhanNo ratings yet

- Solitaire Premier - Presentation (Small File)Document18 pagesSolitaire Premier - Presentation (Small File)Shrikant BadheNo ratings yet

- Algebra 1 FINAL EXAM REVIEW 2Document2 pagesAlgebra 1 FINAL EXAM REVIEW 2Makala DarwoodNo ratings yet

- Final Seminar ReportDocument35 pagesFinal Seminar ReportHrutik BhandareNo ratings yet

- Analog Layout Design (Industrial Training)Document10 pagesAnalog Layout Design (Industrial Training)Shivaksh SharmaNo ratings yet

- Tcgbutopia G8Document216 pagesTcgbutopia G8faffsNo ratings yet