Volume 5, Issue 8, August – 2020 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165

[AI-Machine Learning] Optimized Sensorless Human Heartrate Estimation for a Dance Workout Application

G. Jeong1; N. Freitas2

1 Gyumin Jeong, Hansung University, Devunlimit

2 Núria Freitas, Catholic University of Korea, Devunlimit

Seoul Business Agency, Seoul Innovation Challenge 2019

Abstract:- Over the last decade, there has been a great effort to use technology to make exercise more interactive, measurable and gamified. However, to improve the accuracy of the required detections and measurements, these efforts have always relied on multiple sensors, including purpose-specific hardware, which adds expense and limits the mobility of the user. In this paper we aim to optimize a sensorless system that estimates the user's heartrate in real time and performs better than current wearable technology, so that calories and other vital indicators can be calculated from it. The findings will be applied to a posture correction system for a dance and fitness application.

Keywords:- Sensorless heartrate estimation – Artificial Intelligence (A.I.) – Machine learning – Posenet – DenseNet – Real-time heartrate estimation – Heartrate at a distance – Dance – K-pop – E-sports – South Korea.

I. INTRODUCTION

Heartrate is a well-known indicator of the intensity of exercise. Other indicators, such as calories burnt, can be derived from it. Currently, most commercial products on the fitness and diet market require sensors to estimate the heartrate. Common examples of these sensors are smart watches and chest bands.

Due to the nature of our platform, we aim to eliminate the need for wearable sensors, leaving the user free to move around and perform fast movements safely.

Recently, a growing number of studies have demonstrated the feasibility and accuracy of remote heartrate estimation, showing that video can capture minute skin color changes that follow the heart pulse and cannot be seen with the naked eye.

In this paper we aim to extract vital data by estimating the user's pulse through machine learning from RGB signals, using the minute skin color changes that a face camera can capture, and to achieve an accuracy equal to or higher than that of the most commonly used smart watch. We also changed the convolutional neural network (CNN) from ResNet to DenseNet in an attempt to improve on the accuracy of the previously researched system.

II. OBSERVATION METHODS AND INDICATORS

The observations in this paper were made on real-time video taken with a single regular RGB camera, instead of infrared cameras or the photoplethysmography sensors of smart watches.

For further calculations, we measured the number of frames per second that produce a data output, in order to set the actual service frame rate (frames per second, FPS).

Since fast and diverse motion, as well as high illuminance (lux), could be problematic for measuring the data, we experimented with illuminance above the regular values and tested over the dataset to determine whether normal operation is possible under those conditions.
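To make the idea of reading a pulse from skin color changes concrete, here is a minimal sketch. It is not the CNN-based method used in this paper: it is a plain frequency search, swapped in for illustration. It assumes a hypothetical list of per-frame mean green-channel values from the face region, and the 0.7 to 4.0 Hz search band, the function name and the synthetic signal are all assumptions.

```python
import math


def estimate_bpm(green_means, fps, low_hz=0.7, high_hz=4.0, step_hz=0.01):
    """Return the dominant frequency of the signal, in beats per minute.

    green_means: per-frame mean green value of the face region (hypothetical input).
    fps: frames per second of the video.
    The 0.7-4.0 Hz band (42-240 bpm) is an assumed search range.
    """
    n = len(green_means)
    mean = sum(green_means) / n
    centered = [v - mean for v in green_means]  # remove the constant skin tone

    best_hz, best_power = low_hz, -1.0
    steps = int(round((high_hz - low_hz) / step_hz))
    for k in range(steps + 1):
        hz = low_hz + k * step_hz
        # Correlate the signal with a cosine and a sine at this frequency.
        re = sum(c * math.cos(2 * math.pi * hz * i / fps) for i, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * hz * i / fps) for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_hz, best_power = hz, power
    return best_hz * 60.0


# Synthetic example: a 1.5 Hz (90 bpm) pulse riding on a constant skin tone,
# 10 seconds of video at 30 fps.
fps = 30
signal = [120.0 + 0.5 * math.sin(2 * math.pi * 1.5 * i / fps) for i in range(10 * fps)]
bpm = estimate_bpm(signal, fps)
```

Real video adds motion and lighting noise that this sketch does not handle, which is one reason a learned model is attractive for a dance workout setting.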

IJISRT20AUG002 www.ijisrt.com 888


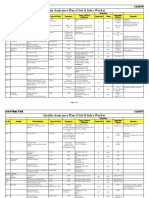

< Overview of Major Performance Indicators >

| Performance Indicator | Unit | Final objective | World's highest level (holding company / holding country) | Percentage (%) | Measuring organization |
|---|---|---|---|---|---|
| 1. Data output speed | fps | More than 24 fps | 15~30 fps (Microsoft, US) | 30 | TTA accredited certification test |
| 2. Illuminance | lux | More than 5,000 lux | Less than 5,000 lux (Microsoft, US) | 30 | TTA accredited certification test |
| 3. Heart rate match rate | % | More than 90% | 90% (Apple, US) | 20 | TTA accredited certification test |

< Sample Definition and Measurement Method >

| Performance Indicator | Sample definition | Number of samples (n ≥ 5) | Measurement method (standard, environment, result calculation, etc.) |
|---|---|---|---|
| 1. Data output speed | 1 per System | 5 | Measures frames per second (fps) in the result data value image taken through the deep learning machine when shooting in real time |
| 2. Illuminance | 1 per System | 5 | Measures illuminance (lux) in the driving environment, and executes in the environment above the standard illuminance value |
| 3. Heart rate match rate | 1 per System | 5 | Measures the match rate (%) between the predicted value and the actual heart rate using artificial neural network technology through real-time image data (frames with accurate heart rate / full frame) |
| 4. Observation position error | 1 per System | 5 | Measures the accuracy of the skeleton value obtained by the deep learning machine when shooting in real time |

Table 1:- Performance indicators, objectives and observation methods.
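The heart rate match rate in Table 1 is defined as the frames with an accurate heart rate divided by all frames. The table does not say how close a prediction must be to count as accurate, so the sketch below takes that tolerance as a hypothetical parameter (`tolerance_bpm`). The function names and sample values are illustrative assumptions too.

```python
def match_rate(predicted_bpm, actual_bpm, tolerance_bpm=0.0):
    """Percentage of frames whose predicted heart rate matches the reference.

    tolerance_bpm is an assumption: Table 1 does not define what counts
    as an accurate frame.
    """
    if len(predicted_bpm) != len(actual_bpm) or not actual_bpm:
        raise ValueError("inputs must be non-empty and of equal length")
    matched = sum(
        1 for p, a in zip(predicted_bpm, actual_bpm) if abs(p - a) <= tolerance_bpm
    )
    return 100.0 * matched / len(actual_bpm)


def meets_targets(fps, lux, rate_percent):
    """Check measurements against the final objectives listed in Table 1."""
    return {
        "data_output_speed": fps > 24,               # more than 24 fps
        "illuminance": lux > 5000,                   # more than 5,000 lux
        "heart_rate_match_rate": rate_percent > 90,  # more than 90%
    }


# Made-up values for illustration only.
rate = match_rate([72, 75, 80, 91], [72, 75, 81, 91])
report = meets_targets(fps=30, lux=6000, rate_percent=rate)
```

With these made-up inputs three of four frames match, so the match rate objective is reported as not met while the other two pass.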

III. PROCESS AND SCHEDULES

It took 3 months to design and develop a new artificial neural network algorithm for heart rate measurement, which provides heart rate information to application users from image data captured by an RGB camera without a dedicated sensor. Another month was needed to verify the algorithm and correct errors.

IV. EXPERIMENT

A. Select Data Set

For this experiment we chose to use the VIPL-HR Database (Pure Database). The first reason is that it is a large multi-modal database, with 2,378 visible light videos (VIS) and 752 near infrared videos (NIR) recorded from 107 different people, and it includes variations such as head movements, lighting changes, and changes of recording device. The second is that we could learn from the proposed spatio-temporal representation used to monitor and estimate the heart rate (RhythmNet), which has shown very promising results on both public domain and VIPL-HR estimation databases. Lastly, the VIPL-HR Dataset is distributed to universities and research institutes for research purposes and its license is free.

The other databases that were considered but not used in the experiment, and the reasons why we did not choose them, are:

HCI Tagging Database: The license is free, but under the license agreement the end user cannot use the database for non-academic purposes.

BU-3DFE (Binghamton University 3D Facial Expression) Database: Available to external parties only under the agreement of the licensing offices of Binghamton University and Pittsburgh University, and only to external parties pursuing research for non-profit purposes.

Oulu Bio-Face (OBF) database: No download link is available.

Cohface database: Only for scientific research.


B. Data Set Pre-processing

Cropped face image classification

The AVI files in the Pure Database, each containing a large number of frames, are first converted into individual PNG files. Running detect_face.exe on the PNG files detects the human face in each frame, and the face coordinates are stored in a CSV file. Using these coordinates, face_cropper.exe cuts out only the face region at 256x256 pixels and saves it as a PNG file. The CSV file containing the time stamp of each frame is then compared with the CSV file containing the heart rate for each time stamp, so that every frame is linked to a heart rate value. Finally, each 256x256 face image is moved into a folder defined by its heart rate.
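The linking and sorting steps could be sketched as follows. This is only an illustration under stated assumptions: the text says the two CSV files are compared but not how, so nearest-time-stamp matching is assumed here, and the function names, file names and sample values are hypothetical.

```python
import bisect
import shutil
from pathlib import Path


def nearest_heart_rate(frame_ts, hr_ts, hr_bpm):
    """Return the heart rate whose time stamp is closest to frame_ts.

    hr_ts must be sorted in ascending order and aligned with hr_bpm.
    Nearest-time-stamp matching is an assumption, not the paper's stated rule.
    """
    i = bisect.bisect_left(hr_ts, frame_ts)
    if i == 0:
        return hr_bpm[0]
    if i == len(hr_ts):
        return hr_bpm[-1]
    before, after = hr_ts[i - 1], hr_ts[i]
    return hr_bpm[i] if after - frame_ts < frame_ts - before else hr_bpm[i - 1]


def label_frames(frames, hr_ts, hr_bpm):
    """Map each (file_name, time_stamp) pair to a rounded heart rate label."""
    return {name: round(nearest_heart_rate(ts, hr_ts, hr_bpm)) for name, ts in frames}


def sort_into_folders(labels, src_dir, dst_dir):
    """Move each cropped face image into dst_dir/<heart rate>/."""
    for name, bpm in labels.items():
        target = Path(dst_dir) / str(bpm)
        target.mkdir(parents=True, exist_ok=True)
        shutil.move(str(Path(src_dir) / name), str(target / name))


# Made-up time stamps (milliseconds) and heart rates for illustration.
frames = [("0001.png", 0), ("0002.png", 40), ("0003.png", 980), ("0004.png", 1500)]
hr_ts = [0, 1000, 2000]
hr_bpm = [71.6, 74.2, 77.0]
labels = label_frames(frames, hr_ts, hr_bpm)
```

Calling `sort_into_folders(labels, "cropped", "by_heart_rate")` would then produce one folder per heart rate value, which turns the estimation task into image classification with the folder name as the label.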

Fig 1:- Cloud software flow chart for heart rate extraction

Seetaface Face

SeetaFace is a facial recognition engine, used here for heart rate extraction, that works with three core modules. The FaceDetector module detects faces. The FaceLandmarker module identifies the key positions of the face. The FaceRecognizer module extracts and compares facial features. Using this three-module engine, the face in the video is first detected and its position is determined. Thereafter, only the face portion is cut out for analysis from the determined face position. The cropper used with the engine was produced by SeetaTech and can be obtained from the links below as open source under the BSD 2-Clause license.

https://github.com/seetafaceengine/SeetaFace2/blob/master/README_en.md,

https://github.com/seetaface/SeetaFaceEngine2/tree/master/FaceCropper

C. Training

Execution Environment (Hardware / software)

CPU: Intel i7-7700k

Memory: DDR4 32GB

Graphic Card: Nvidia GTX1660Ti 6GB

SSD: 256 GB

Execution Count / Time

Based on the GPU memory capacity, the batch size was set to 2. Training was repeated 400 times and took one week in total.

D. Verification

Fig 2

V. PROCEDURES

The test procedure for extracting heart rate is as follows. After installing Ubuntu, install the utilities required by the development source by running the following commands.

(1) > sudo add-apt-repository ppa:deadsnakes/ppa

(2) > sudo apt-get update

(3) > sudo apt-get -y upgrade

(4) > sudo apt-get install -y vim python3.6 python3.6-dev htop gparted make openssh-server curl git

(5) > curl https://bootstrap.pypa.io/get-pip.py | sudo -H python3.6

(6) > sudo update-alternatives --install /usr/bin/python python /usr/bin/python3.6 1

(7) > sudo update-alternatives --install /usr/bin/pip pip /usr/bin/pip3.6 1

(8) > sudo su (get root privileges)

(9) > passwd root (set the root password)

(10) > wget http://download.jetbrains.com/python/pycharm-community-2019.1.1.tar.gz

(11) > tar -zxvf pycharm-community-2019.1.1.tar.gz


Next, install the GPU driver (including CUDA) on Linux. As above, run the following commands.

(1) > sh -c 'echo "deb http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64 /" >> /etc/apt/sources.list.d/cuda.list'

(2) > sh -c 'echo "deb http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1604/x86_64 /" >> /etc/apt/sources.list.d/cuda.list'

(3) > apt-get update

(4) > apt-cache search nvidia

(5) > apt-get install nvidia-410

(6) > apt-key adv --fetch-keys http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/7fa2af80.pub

(7) > apt-get update

(8) > apt-get install cuda-9-0 nvidia-cuda-toolkit

(9) > cat /usr/local/cuda/version.txt

(10) > wget http://developer.download.nvidia.com/compute/redist/cudnn/v7.1.4/cudnn-9.0-linux-x64-v7.1.tgz

(11) > tar xvfz cudnn-9.0-linux-x64-v7.1.tgz

(12) > cp -P cuda/include/cudnn.h /usr/local/cuda/include

(13) > cp -P cuda/lib64/libcudnn* /usr/local/cuda/lib64

(14) > chmod a+r /usr/local/cuda/include/cudnn.h /usr/local/cuda/lib64/libcudnn*

(15) > apt-get install -y libcupti-dev

(16) > vi /home/user/.bashrc

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/usr/local/cuda/lib64:/usr/local/cuda/extras/CUPTI/lib64"

export CUDA_HOME=/usr/local/cuda

(17) > vi ~/.bashrc

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/usr/local/cuda/lib64:/usr/local/cuda/extras/CUPTI/lib64"

export CUDA_HOME=/usr/local/cuda

(18) > source ~/.bashrc

(19) > nvcc --version
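As a complement to checking the compiler version, the environment variables written to .bashrc can be checked from Python. The sketch below is an illustration only: the function name is hypothetical, and it assumes the same /usr/local/cuda location used in the steps above.

```python
import os


def cuda_env_problems(env, cuda_home="/usr/local/cuda"):
    """List what is missing from the environment variables set in .bashrc.

    env is a mapping such as os.environ; cuda_home mirrors the path used in
    the installation steps (an assumption if CUDA lives elsewhere).
    """
    problems = []
    if env.get("CUDA_HOME") != cuda_home:
        problems.append("CUDA_HOME is not " + cuda_home)
    if cuda_home + "/bin" not in env.get("PATH", "").split(":"):
        problems.append("PATH lacks " + cuda_home + "/bin")
    lib_dirs = env.get("LD_LIBRARY_PATH", "").split(":")
    for needed in (cuda_home + "/lib64", cuda_home + "/extras/CUPTI/lib64"):
        if needed not in lib_dirs:
            problems.append("LD_LIBRARY_PATH lacks " + needed)
    return problems


# Check a hand-written environment first, then the real one.
example_env = {
    "PATH": "/usr/local/cuda/bin:/usr/bin",
    "LD_LIBRARY_PATH": "/usr/local/cuda/lib64",
    "CUDA_HOME": "/usr/local/cuda",
}
example_problems = cuda_env_problems(example_env)
current_problems = cuda_env_problems(os.environ)
```

An empty list means all three variables contain the expected entries; the hand-written example deliberately omits the CUPTI directory to show a reported problem.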

When the installation is complete, install the Python development environment (PyCharm) on Linux. Download the PyCharm distribution package from https://www.jetbrains.com/pycharm/download/#section=windows and extract the compressed file.

VI. RESULTS AND DISCUSSION

The experiment tested two items: the accuracy of the measured heart rate against the heart rate reported in the dataset, and the heart rate analysis FPS.

The test was conducted five times for each item, and we confirmed whether all of the tests met the final development goals presented in Section II, 'Observation methods and indicators'. As shown in the test results below, the measured heart rate accuracy was 93.28%, which met the target value of 90% or higher taken from the commonly used smartwatch standard and even surpassed the current ResNet model by 0.66%. The heart rate analysis FPS ranged from 69.704 to 70.660 fps, and every run met the target value of 24 fps or higher.

| Index | Tested Images | Successfully Tested Images | Accuracy |
|---|---|---|---|
| 1 | 15,141 | 14,123 | 93.28% |
| 2 | 15,141 | 14,123 | 93.28% |
| 3 | 15,141 | 14,123 | 93.28% |
| 4 | 15,141 | 14,123 | 93.28% |
| 5 | 15,141 | 14,123 | 93.28% |
| Average | | | 93.28% |

Table 2:- Heartrate Accuracy with DenseNet.


| Index | Tested Images | Analysis Time | Heartrate Image Analysis FPS |
|---|---|---|---|
| 1 | 15,141 | 214.71 sec | 70.522 FPS |
| 2 | 15,141 | 217.02 sec | 69.768 FPS |
| 3 | 15,141 | 214.28 sec | 70.660 FPS |
| 4 | 15,141 | 215.36 sec | 70.306 FPS |
| 5 | 15,141 | 217.22 sec | 69.704 FPS |
| Average | | | 70.192 FPS |

Table 3:- Heartrate Analysis FPS
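The figures in Table 2 and Table 3 can be recomputed from the raw counts and times. The short sketch below does so, assuming accuracy is successfully tested images divided by tested images and FPS is tested images divided by analysis time; the function and variable names are illustrative.

```python
def accuracy_percent(successful, tested):
    """Share of successfully tested images, in percent."""
    return 100.0 * successful / tested


def analysis_fps(tested, seconds):
    """Images analysed per second."""
    return tested / seconds


# Values copied from Table 2 and Table 3.
tested_images = 15141
successful_images = 14123
analysis_seconds = [214.71, 217.02, 214.28, 215.36, 217.22]
reported_fps = [70.522, 69.768, 70.660, 70.306, 69.704]

accuracy = round(accuracy_percent(successful_images, tested_images), 2)
fps_runs = [round(analysis_fps(tested_images, s), 3) for s in analysis_seconds]
all_runs_meet_target = all(fps > 24 for fps in fps_runs)
max_gap = max(abs(a - b) for a, b in zip(fps_runs, reported_fps))
```

The recomputed accuracy is 93.28%, and the recomputed FPS values agree with the reported ones to within 0.01 fps.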

