
Ed Aaaaaaa

Uploaded by Lady Lykah de Guzman


Parametric Statistics

• Parametric statistical procedures are inferential procedures that rely on testing claims regarding parameters such as the population mean, the population standard deviation, or the population proportion.
• They apply to data in a ratio scale, and some apply to data in an interval scale.

Two Common Forms of Statistical Inference

• Estimation - It is used to approximate the value of an unknown population parameter.
• Hypothesis Testing - It is a procedure, based on sample evidence and probability, used to test claims regarding a characteristic of one or more populations.

Procedures for Testing Hypotheses

1. State the null and alternative hypotheses.
2. Set the level of significance or alpha level (α).
3. Determine the appropriate test to use.
4. Determine the p-value.
5. Make a statistical decision.
6. Draw a conclusion.

HYPOTHESIS - a preconceived idea, assumed to be true, that has to be tested for its truth or falsity.

Two Types of Hypotheses

1. Null Hypothesis
• Denoted by H0.
• The statement being tested.
• Assumed true until evidence indicates otherwise.
• Must contain the condition of equality and must be written with the symbol =, ≤, or ≥.

2. Alternative Hypothesis
• Denoted by Ha or H1.
• The statement that must be true if the null hypothesis is false.
• Sometimes referred to as the research hypothesis.
• Must not contain the condition of equality; it must be written with the symbol ≠, <, or >.

Set the level of significance or alpha level (α)

You should establish a predetermined level of significance before testing. If the p-value is at or below this level, you reject the null hypothesis. The generally accepted levels are 0.10, 0.05, and 0.01.

Determine the appropriate test to use

Parametric Test
1. One-Sample T-test
2. Dependent Sample T-test
3. Independent Sample T-test
4. One-Way Analysis of Variance
5. Pearson Product Moment Correlation

Note: Make sure to verify that the assumptions of every statistical test are satisfied.
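The six steps can be strung together in R as a quick sketch. This is an illustration under stated assumptions, not the reviewer's own worked example. The `score` vector is simulated (hypothetical data), and the claim H0: μ ≤ 70 vs. Ha: μ > 70 is assumed for illustration. `shapiro.test()` is used as one possible way to check the normality assumption before running the one-sample t-test. The decision step applies the rule of rejecting H0 when the p-value is less than or equal to α.

```r
# Minimal sketch of the six-step procedure (hypothetical data).
set.seed(42)
# Hypothetical scores: simulated, NOT taken from SAMPLE_DATAV2
score <- round(rnorm(30, mean = 74, sd = 8))

# Step 1: H0: mu <= 70 (contains equality), Ha: mu > 70 (strict inequality)
mu0 <- 70

# Step 2: level of significance
alpha <- 0.05

# Assumption check (one assumed approach): Shapiro-Wilk test for normality
normality_p <- shapiro.test(score)$p.value
normal_ok <- normality_p > alpha  # p above alpha: no evidence against normality
# If normal_ok were FALSE, a non-parametric test would be considered instead.

# Step 3: appropriate test - right-tailed one-sample t-test
result <- t.test(score, mu = mu0, alternative = "greater",
                 conf.level = 1 - alpha)

# Step 4: p-value
p_value <- result$p.value

# Step 5: statistical decision (reject H0 when p-value <= alpha)
decision <- if (p_value <= alpha) "Reject H0" else "Fail to reject H0"

# Step 6: conclusion in plain words
conclusion <- if (p_value <= alpha) {
  "There is sufficient evidence that the mean score is greater than 70."
} else {
  "There is insufficient evidence that the mean score is greater than 70."
}

cat("Normality assumption plausible:", normal_ok, "\n")
cat("One-sample t-test p-value:", signif(p_value, 4), "->", decision, "\n")
cat(conclusion, "\n")
```

To see how the decision in step 5 depends on the level of significance set in step 2, change `alpha` or `mu0` and re-run the script.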

In stating your decision, you can use:
• Fail to reject the null hypothesis / Do not reject the null hypothesis / Retain the null hypothesis.
• Reject the null hypothesis.

Note: It is important to recognize that we never accept the null hypothesis. We are merely saying that the sample evidence is not strong enough to warrant rejection of the null hypothesis.

Decision Rule:
If the p-value is less than or equal to the level of significance, reject H0; otherwise, fail to reject H0.
➢ If the p-value is higher than the alpha level, fail to reject H0.
➢ If the p-value is lower than (or equal to) the alpha level, reject H0.

Draw Conclusion
Record conclusions and recommendations in a report, and attach interpretations that justify your conclusions or recommendations.
➢ There is insufficient evidence - fail to reject H0.
➢ There is sufficient evidence - reject H0.

Assumptions of Parametric Statistics

Common Assumptions
• Approximately normally distributed
• Homogeneity of variances
• Samples must be independent of each other.

Testing Normality of the Data
To determine whether the data follow a normal distribution, we can use a graphical or a numerical method.

Graphical
1. Histogram
2. Normal Q-Q Plot

Numerical
1. Kolmogorov-Smirnov Test
2. Lilliefors Test
3. Anderson-Darling Test
4. Shapiro-Wilk Test

Histogram
A histogram plots the observed values against their frequency and gives a visual estimate of whether the distribution is bell-shaped. If the histogram forms a bell shape, the data are considered to follow a normal distribution.

Syntax:
hist(SAMPLE_DATAV2$GRADE_MATH, probability = T, col = 'GREEN')
lines(density(SAMPLE_DATAV2$GRADE_MATH), col = 'RED')

Normal Q-Q Plot
A Q-Q (quantile-quantile) probability plot displays the observed values against normally distributed data (represented by the line). If the points are close to the diagonal line, the data are considered to follow a normal distribution.

Syntax:
qqnorm(SAMPLE_DATAV2$GRADE_MATH)
qqline(SAMPLE_DATAV2$GRADE_MATH)

Example: Use the four numerical tests for normality on the math grades (GRADE_MATH) in SAMPLE_DATAV2 and determine whether the data are normal.
• shapiro.test(SAMPLE_DATAV2$GRADE_MATH) - Shapiro-Wilk Test
• lillie.test(SAMPLE_DATAV2$GRADE_MATH) - Lilliefors Test
• ad.test(SAMPLE_DATAV2$GRADE_MATH) - Anderson-Darling Test
• ks.test(SAMPLE_DATAV2$GRADE_MATH, "pnorm", mean = mean(SAMPLE_DATAV2$GRADE_MATH), sd = sd(SAMPLE_DATAV2$GRADE_MATH)) - Kolmogorov-Smirnov Test

lillie.test() and ad.test() come from the nortest package (load it with library(nortest)). Without the mean and sd arguments, ks.test() compares the data with the standard normal distribution (mean 0, sd 1), which is not appropriate for grades. Because the mean and sd are estimated from the same data, the Kolmogorov-Smirnov p-value is conservative; the Lilliefors test corrects for this.

Interpretation
➢ p-value less than or equal to alpha: the data do not follow a normal distribution.
➢ p-value more than alpha: the data can be treated as following a normal distribution.

Testing the Homogeneity of Variances
There are many ways of testing data for homogeneity of variance. Two methods are shown here.

1. Bartlett's test
• If the data are normally distributed, this is the best test to use. However, it is sensitive to non-normal data: it is more likely to return a "false positive" when the data are non-normal.
• Syntax:
bartlett.test(SAMPLE_DATAV2$GRADE_MATH ~ factor(SAMPLE_DATAV2$FAVORITE_SUBJECT))

2. Levene's test
• More robust to departures from normality than Bartlett's test.
• Syntax (leveneTest() comes from the car package; load it with library(car)):
leveneTest(SAMPLE_DATAV2$GRADE_MATH ~ factor(SAMPLE_DATAV2$FAVORITE_SUBJECT))

Null Hypothesis: Equal variances assumed.
Alternative Hypothesis: Equal variances not assumed.

A one-sample t-test is used to compare the mean of one sample to a known standard (theoretical or hypothetical) mean (μ).

Assumptions
• Observations must be independent of each other.
• Approximately normally distributed.

Syntax: t.test(score, mu = 70, alternative = 'greater', conf.level = 1 - 0.05)

To perform inference on the difference of two population means, we must first determine whether the data come from an independent or a dependent sample.

Distinguish between Independent and Dependent Samples
• A sampling method is independent when the individuals selected for one sample do not dictate which individuals are to be in a second sample.

• A sampling method is dependent when the individuals selected to be in one sample are used to determine the individuals to be in the second sample.

Dependent Sample T-test (also called the paired sample t-test) compares the means of two related groups to determine whether there is a statistically significant difference between these means.

Assumptions
• Your dependent variable should be measured at the interval or ratio level (i.e., it is continuous).
• Your independent variable should consist of two categorical "related groups" or "matched pairs".
• There should be no significant outliers in the differences between the two related groups.
• The distribution of the differences in the dependent variable between the two related groups should be approximately normally distributed.

Syntax: t.test(pre, post, alternative = 'less', paired = T, conf.level = 1 - 0.05)

Independent Sample T-test is used to test whether population means are significantly different from each other, using the means from randomly drawn samples.

Assumptions:
• Your dependent variable should be measured on a continuous scale (i.e., it is measured at the interval or ratio level).
• Your independent variable should consist of two categorical, independent groups.
• You should have independence of observations, which means that there is no relationship between the observations in each group or between the groups themselves.
• There should be no significant outliers.
• Your dependent variable should be approximately normally distributed for each group of the independent variable.
• There needs to be homogeneity of variances. If the variances of the two independent groups are not equal, use var.equal = F (the default in R's t.test()), which runs the Welch two-sample t-test instead of the pooled independent sample t-test.

Syntax: t.test(shallow, deep, alternative = 'two.sided', var.equal = T, conf.level = 1 - 0.10)

One-way analysis of variance (ANOVA) is a method of testing the equality of two or more population means by analyzing sample variances. It is a more general form of the t-test that is appropriate to use with three or more data groups.
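The phrase "analyzing sample variances" can be made concrete. The sketch below computes the one-way ANOVA F statistic by hand, as the between-group mean square divided by the within-group mean square. It then checks the result against base R's `oneway.test()` with `var.equal = TRUE`. The response `y` and the group labels are hypothetical, simulated values. The final call, with `var.equal = FALSE`, is Welch's ANOVA for unequal variances.

```r
# One-way ANOVA computed by hand and checked against oneway.test().
set.seed(1)
# Hypothetical data: 6 simulated observations per group
group <- factor(rep(c("compact", "midsize", "fullsize"), each = 6))
y <- c(rnorm(6, mean = 30, sd = 3),
       rnorm(6, mean = 27, sd = 3),
       rnorm(6, mean = 24, sd = 3))

k <- nlevels(group)   # number of groups
n <- length(y)        # total number of observations
grand_mean <- mean(y)
group_means <- tapply(y, group, mean)    # one mean per group
group_sizes <- tapply(y, group, length)  # one count per group

# Between-group sum of squares: spread of group means around the grand mean
ss_between <- sum(group_sizes * (group_means - grand_mean)^2)
# Within-group sum of squares: spread of observations around their group mean
ss_within <- sum((y - ave(y, group))^2)  # ave() returns each value's group mean

ms_between <- ss_between / (k - 1)  # between-group variance estimate
ms_within <- ss_within / (n - k)    # within-group (pooled) variance estimate
f_stat <- ms_between / ms_within
p_manual <- pf(f_stat, df1 = k - 1, df2 = n - k, lower.tail = FALSE)

# Classic one-way ANOVA (equal variances assumed)
classic <- oneway.test(y ~ group, var.equal = TRUE)
# Welch's ANOVA (equal variances not assumed)
welch <- oneway.test(y ~ group, var.equal = FALSE)

cat("Manual F:", round(f_stat, 4), " p-value:", signif(p_manual, 4), "\n")
cat("oneway.test F:", round(unname(classic$statistic), 4),
    " p-value:", signif(classic$p.value, 4), "\n")
cat("Welch p-value:", signif(welch$p.value, 4), "\n")
```

A large F means the group means differ by more than the within-group noise would suggest. This is why a test about means is carried out by comparing two variance estimates.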

Assumptions:
• Your dependent variable should be measured at the interval or ratio level (i.e., it is continuous).
• Your independent variable should consist of two or more categorical, independent groups.
• You should have independence of observations, which means that there is no relationship between the observations in each group or between the groups themselves.
• There should be no significant outliers.
• Your dependent variable should be approximately normally distributed for each group of the independent variable.
• There needs to be homogeneity of variances. If the variances of the independent groups are not equal, set var.equal = F in oneway.test() so that R calculates Welch's ANOVA for unequal variances.

Syntax:
• factor1 <- c(replicate(6, 'compact'), replicate(6, 'midsize'), replicate(6, 'fullsize'))
• leveneTest(car1 ~ factor(factor1))
• oneway.test(car1 ~ factor(factor1), var.equal = F)

Pearson product moment correlation coefficient (Pearson r) is a measure of the strength and direction of a linear association between two variables and is denoted by r.

Syntax: cor.test(<x>, <y>, method = "pearson", conf.level = 1 - α)
x: independent variable
y: dependent variable

cor.test(number_of_person, no_of_cups, method = "pearson", conf.level = 1 - 0.05)

Assumptions:
• Your two variables should be measured at the interval or ratio level (i.e., they are continuous).
• There is a linear relationship between your two variables.
• There should be no significant outliers.
• Your variables should be approximately normally distributed.

Non-Parametric Statistics

• It refers to a statistical method in which the data are not required to fit a normal distribution. For this reason, non-parametric tests are sometimes referred to as distribution-free tests.
• Nonparametric tests serve as an alternative to parametric tests.
• Most non-parametric tests apply to data in an ordinal scale, and some apply to data in a nominal scale.

Note: Do not use non-parametric procedures if parametric procedures can be used.

Advantages of Non-Parametric Statistical Procedures

• Most non- parametric tests have very • Your dependent variable should be

few requirements, it is unlikely that measured at the ordinal or

these tests will be used improperly. continuous level.

• For some non-parametric procedures, • Your independent variable should

the computations are fairly easy. consist of two categorical,” related

• The procedures can be used for count groups” or” matched pairs”.

data or rank data such as the rankings

Syntax: wilcox.test(Year_2009, Year_1979,

of a movie as excellent, good, fair, or

alternative = "two.sided", paired = TRUE,

poor.

conf.level= 1-0.05, exact = FALSE)

Disadvantages of Non - Parametric

Mann Whitney U-Test is a non-parametric

Statistical Procedures

procedure that is used to test the equality of

• Nonparametric procedures are less two population means from independent

efficient than parametric procedures. samples. Non-parametric equivalent of

• The results may or may not provide independent sample t-test.

an accurate answer because they are Assumptions:

distribution free.

• Your dependent variable should be

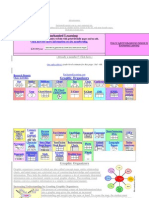

Non-Parametric Tests

measured at the ordinal or

• One-Sample Sign Test continuous level.

• Wilcoxon Signed Rank Test • Your independent variable should

• Mann Whitney U - Test consist of two categorical,”

• Kruskal Wallis H - Test independent groups”.

• Spearman Rank Correlation Test Syntax: wilcox.test(ill, healthy, alternative =

• Chi - square Test "greater", conf.level = 1-0.10, exact = F)

One Sample Sign Test is a nonparametric Kruskal Wallis H-Test is a rank based non-

equivalent of tests regarding a single parametric test that can be used to

population mean. determine if there are statistically significant

Assumption: differences between two or more groups of

an independent variable on continuous or

• The samples must be independent. ordinal dependent variable. It is a non-

Syntax: signmean.test(<numeric vector>, mu parametric equivalent to one way ANOVA.

= <known mean>, alternative=’<condition>’, Assumptions:

conf.level=1 – α)

• One independent variable with two

Wilcoxon Signed Rank Test is a non- or more levels (independent groups).

parametric equivalent to t-test for two The test is more commonly used

related samples. when you have three or more levels.

Assumptions:

• The level of measurement of the dependent variable is ordinal, interval, or ratio.
• Your observations should be independent.

Syntax:
city <- c(replicate(8, "makati"), replicate(8, "mnl"), replicate(8, "qc"))
kruskal.test(scores ~ factor(city))

Spearman Rank Correlation (Spearman Rho) is used to measure the strength and direction of association between two ordinal or continuous variables. It is a non-parametric version of the Pearson Product-Moment correlation.

Assumptions:
• The two variables should be measured on an ordinal or continuous scale.
• There needs to be a monotonic relationship between the two variables.

Syntax: cor.test(<numeric vector (independent)>, <numeric vector (dependent)>, method = 'spearman', conf.level = 0.95)

cor.test(swimming_rank, cycling_rank, method = "spearman", conf.level = .95)

Chi-Square Test for Independence is used to discover whether there is an association between two categorical variables.

Command for Chi-Square Test:
chisq.test(<x>, <y>)
x: numeric vector or matrix
y: numeric vector; ignore if x is a matrix

Assumptions:
• There are 2 variables, and both are measured as categories, usually at the nominal level. However, categories may be ordinal. Interval or ratio data that have been collapsed into ordinal categories may also be used.
• The two variables should consist of two or more categorical, independent groups.
• The data in the cells should be frequencies or counts of cases rather than percentages or some other transformation of the data.
• For a 2 by 2 table, all expected frequencies > 5.
• For a larger table, all expected frequencies > 1 and no more than 20% of all cells may have expected frequencies < 5.

Syntax: matrix(<numeric vector>, nrow = <n>, ncol = <m>, byrow = <bool>, dimnames = list(<vector>, <vector>))
chisq.test(<matrix>)

data <- c(202, 294, 300, 17, 250, 302, 295, 17, 102, 110, 103, 8)
rowname <- c("Obese", "overweight", "normal weight", "underweight")
colname <- c("Thriving", "struggling", "Suffering")
bmi <- matrix(data, nrow = 4, ncol = 3, byrow = F, dimnames = list(rowname, colname))
chisq.test(bmi)
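The expected-frequency rules in the chi-square assumptions can be checked directly from the result object, because `chisq.test()` returns the expected counts in `$expected`. This sketch rebuilds the document's BMI table and compares those counts with row total × column total / grand total computed by hand. The 20% threshold used here follows the rule stated for tables larger than 2 by 2; the variable names other than the document's own are illustrative.

```r
# Rebuild the document's BMI table and check the expected-frequency rules.
data <- c(202, 294, 300, 17, 250, 302, 295, 17, 102, 110, 103, 8)
rowname <- c("Obese", "overweight", "normal weight", "underweight")
colname <- c("Thriving", "struggling", "Suffering")
bmi <- matrix(data, nrow = 4, ncol = 3, byrow = FALSE,
              dimnames = list(rowname, colname))

res <- chisq.test(bmi)
expected <- res$expected  # expected count for every cell under independence

# Same counts by hand: row total * column total / grand total
manual_expected <- outer(rowSums(bmi), colSums(bmi)) / sum(bmi)

# Rule for tables larger than 2 by 2: all expected > 1 and at most 20% below 5
all_above_1 <- all(expected > 1)
share_below_5 <- mean(expected < 5)
rules_ok <- all_above_1 && share_below_5 <= 0.20

print(round(expected, 2))
cat("Expected-frequency rules satisfied:", rules_ok, "\n")
print(res)
```

The smallest expected count belongs to the smallest row total paired with the smallest column total (underweight and Suffering). Checking that single cell is therefore a quick first test of the rule.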
