Continuous Gesture Recognition for Flexible Human-Robot Interaction

Presented By

Muhammad Bilal 16-ECT-50

Muhammad Yasin 16-ECT-78

Bilal Mustafa 16-ECT-08

Project Supervisor: Dr. Furqan Shaukat

Outline of Presentation

Timeline

Progress in Vacations

Department of Electronics Engineering

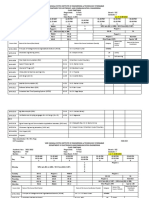

Timeline (1/3)

7th Semester (2019-20)

| Phase No. | Phase | Estimated Time | Dates (DD-MM, 2019-20) | Status |
|---|---|---|---|---|
| 1 | Literature review | 6 weeks | 01-09 to 12-10 | Completed |
| 2 | Algorithm for gesture recognition (CNN) | 6 weeks | 13-10 to 24-11 | Completed |
| 3 | Algorithm for gesture classification (LSTM) | 4 weeks | 25-11 to 23-12 | Completed |


Timeline (2/3)

8th Semester (2020)

| Phase No. | Phase | Estimated Time | Dates (DD-MM, 2020) | Status |
|---|---|---|---|---|
| 1 | Testing, verification and modification of algorithm | 2 weeks | 01-02 to 15-02 | Completed |
| 2 | Purchase of hardware (robot) | 2 weeks | 16-02 to 29-02 | Completed |
| 3 | Design of robot hardware | 3 weeks | 01-03 to 21-03 | Completed |
| 4 | Integration of hardware and software | 2 weeks | 22-03 to 04-04 | In progress |
| 5 | Testing and debugging | 2 weeks | From 05-04 | In progress |

Timeline (3/3)

8th Semester (2020)

| Phase No. | Phase | Estimated Time | Dates (DD-MM, 2020) | Status |
|---|---|---|---|---|
| 6 | Documentation | 2 weeks | - | - |
| 7 | Documentation review | 1 week | - | - |
| 8 | Submission | - | - | - |


Progress in Vacations

We interfaced the Raspberry Pi with a camera module designed specifically for the Raspberry Pi.

We tested our pre-trained model on the Raspberry Pi. (The hardware setup is not yet complete: the robotic arm is still in the hostel, because when the vacations were announced we did not know how long they would last.)

Initially, the accuracy was very poor: the model rarely detected the correct gesture.
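To put a number on "very poor" and see which gestures fail most often, predictions can be compared against hand-labelled samples. The sketch below is a minimal, hypothetical helper (not part of the project code); the gesture names used in the example are placeholders.

```python
from collections import Counter, defaultdict


def per_gesture_accuracy(true_labels, predicted_labels):
    """Return (overall accuracy, {gesture: accuracy}) for paired label lists."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("need at least one sample")
    totals = Counter(true_labels)          # samples per true gesture
    correct = defaultdict(int)             # correct predictions per true gesture
    for truth, pred in zip(true_labels, predicted_labels):
        if truth == pred:
            correct[truth] += 1
    overall = sum(correct.values()) / len(true_labels)
    per_class = {g: correct[g] / n for g, n in totals.items()}
    return overall, per_class


def confusion_counts(true_labels, predicted_labels):
    """Count (true, predicted) pairs to show which gestures get mixed up."""
    return Counter(zip(true_labels, predicted_labels))


# Example with hypothetical labels.
truth = ["fist", "palm", "palm", "two", "fist"]
pred = ["fist", "fist", "palm", "palm", "palm"]
overall, per_class = per_gesture_accuracy(truth, pred)
print(overall, per_class)
print(confusion_counts(truth, pred).most_common(3))
```

The confusion counts are often more informative than the single accuracy figure, because they show whether errors come from one or two similar-looking gestures.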


Progress in Vacations

We made small changes to the code: it was originally designed to control the keyboard, whereas we need to generate different signals for the servo motors.
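Replacing keyboard events with servo commands essentially means mapping each recognized gesture to a target angle and converting that angle to a PWM duty cycle. The sketch below assumes a typical hobby servo driven at 50 Hz with a 1 ms to 2 ms pulse range (many servos use 0.5 ms to 2.5 ms, so the datasheet should be checked); the gesture-to-angle table is purely hypothetical. On the Raspberry Pi the resulting percentage could be passed to RPi.GPIO's `PWM.ChangeDutyCycle`, which is left out here so the sketch runs without hardware.

```python
# Hypothetical gesture-to-angle table; real labels and angles depend on the arm.
GESTURE_TO_ANGLE = {
    "fist": 0,
    "palm": 90,
    "point": 180,
}


def angle_to_duty_cycle(angle, min_pulse_ms=1.0, max_pulse_ms=2.0, frequency_hz=50):
    """Convert a servo angle (0-180 degrees) to a PWM duty cycle in percent.

    Assumes the pulse width varies linearly from min_pulse_ms at 0 degrees
    to max_pulse_ms at 180 degrees.
    """
    if not 0 <= angle <= 180:
        raise ValueError("angle must be between 0 and 180 degrees")
    period_ms = 1000.0 / frequency_hz  # 20 ms at 50 Hz
    pulse_ms = min_pulse_ms + (max_pulse_ms - min_pulse_ms) * angle / 180.0
    return 100.0 * pulse_ms / period_ms


def gesture_to_duty_cycle(gesture):
    """Return the duty cycle for a recognized gesture, or None if unmapped."""
    angle = GESTURE_TO_ANGLE.get(gesture)
    if angle is None:
        return None
    return angle_to_duty_cycle(angle)


for g in ["fist", "palm", "point", "unknown"]:
    print(g, gesture_to_duty_cycle(g))
```

Returning `None` for unmapped gestures lets the control loop simply hold the current servo position when the classifier outputs something the arm should ignore.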

We wrote code using OpenCV and basic image-processing techniques to detect gestures. It detects gestures on the basis of convexity defect points. In good lighting conditions it can detect static gestures.
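In a typical OpenCV pipeline, the hand contour is found with `cv2.findContours`, then `cv2.convexHull(contour, returnPoints=False)` and `cv2.convexityDefects(contour, hull)` give rows of (start index, end index, farthest-point index, fixed-point depth), where the depth in pixels is the stored value divided by 256. The sketch below shows only the filtering step that turns defects into "gaps between fingers", written in plain Python so it can be checked without a camera. The 90 degree angle limit and 20 px depth threshold are assumptions, not values from our code.

```python
import math


def count_finger_gaps(contour, defects, max_angle_deg=90.0, min_depth_px=20.0):
    """Count convexity defects that look like gaps between extended fingers.

    contour: sequence of (x, y) points along the hand outline.
    defects: iterable of (start_idx, end_idx, far_idx, fixpt_depth) rows, the
             layout produced by cv2.convexityDefects (depth_px = fixpt_depth / 256).
    """
    gaps = 0
    for start_idx, end_idx, far_idx, fixpt_depth in defects:
        if fixpt_depth / 256.0 < min_depth_px:
            continue  # shallow dent in the outline, not a finger gap
        start, end, far = contour[start_idx], contour[end_idx], contour[far_idx]
        a = math.dist(start, end)  # fingertip to fingertip
        b = math.dist(start, far)  # first fingertip to valley
        c = math.dist(end, far)    # second fingertip to valley
        if b == 0 or c == 0:
            continue
        # Law of cosines: angle at the valley point between the two fingertips.
        cos_angle = (b * b + c * c - a * a) / (2 * b * c)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
        if angle <= max_angle_deg:
            gaps += 1
    return gaps


# Synthetic outline: one narrow, deep valley and one wide valley.
contour = [(0, 0), (10, 50), (20, 0), (70, 10), (120, 0)]
defects = [
    (0, 2, 1, 50 * 256),  # deep (50 px) and narrow: counted
    (2, 4, 3, 30 * 256),  # deep enough but about 157 degrees wide: ignored
    (0, 2, 1, 1 * 256),   # only 1 px deep: ignored
]
print(count_finger_gaps(contour, defects))
```

With real OpenCV output, `contour.reshape(-1, 2)` and `defects.reshape(-1, 4)` give the expected layout, and `cv2.convexityDefects` returns `None` when there are no defects, which needs a separate check. A common heuristic is fingers = gaps + 1 when at least one gap is found; zero gaps cannot distinguish a fist from a single raised finger.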

We also tested several other gesture-detection models from GitHub.

Sir, we have now decided to train the CNN model on our own dataset; we are recording videos of only five gestures.
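When frames are extracted from recorded videos, consecutive frames are nearly identical, so splitting at the frame level can make validation accuracy look much better than real performance. One way to avoid this is to split by whole video within each gesture class. The sketch below is a hypothetical helper; the 20% validation fraction, the file names and the five class names are assumptions for illustration.

```python
import random
from collections import defaultdict


def split_videos_by_gesture(video_labels, val_fraction=0.2, seed=0):
    """Split recordings into train/validation lists per gesture, by whole video.

    video_labels: dict mapping video file name -> gesture label.
    Every frame of a video stays on one side of the split, so near-identical
    frames never appear in both training and validation data.
    """
    by_gesture = defaultdict(list)
    for video, gesture in video_labels.items():
        by_gesture[gesture].append(video)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, val = [], []
    for gesture in sorted(by_gesture):
        videos = sorted(by_gesture[gesture])
        rng.shuffle(videos)
        if len(videos) > 1:
            # At least one validation video, but always keep one for training.
            n_val = min(len(videos) - 1, max(1, round(len(videos) * val_fraction)))
        else:
            n_val = 0
        val.extend(videos[:n_val])
        train.extend(videos[n_val:])
    return train, val


# Hypothetical dataset: five gestures, five videos each.
gestures = ["g1", "g2", "g3", "g4", "g5"]
videos = {f"{g}_{i}.mp4": g for g in gestures for i in range(5)}
train, val = split_videos_by_gesture(videos)
print(len(train), len(val))
```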

