
Real Numbers IEEE 754 Floating Point Arithmetic

Computer Systems Architecture

http://cs.nott.ac.uk/~txa/g51csa/

Thorsten Altenkirch and Liyang Hu

School of Computer Science

University of Nottingham

Lecture 08: Real Numbers and IEEE 754 Arithmetic


Representing Real Numbers

So far we can compute with a subset of numbers:

Unsigned integers $[0, 2^{32}) \subset \mathbb{N}$

Signed integers $[-2^{31}, 2^{31}) \subset \mathbb{Z}$

What about numbers such as

3.1415926 . . .

1/3 = 0.33333333 . . .

299792458

How do we represent quantities smaller than 1?


Shifting the Point

Use decimal point to separate integer and fractional parts

Digits after the point denote tenths, hundredths, ...

Similarly, we can use the binary point

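| Bit    | 3rd   | 2nd   | 1st   | 0th   | . | -1st     | -2nd     | -3rd     | -4th     |
|--------|-------|-------|-------|-------|---|----------|----------|----------|----------|
| Weight | $2^3$ | $2^2$ | $2^1$ | $2^0$ | . | $2^{-1}$ | $2^{-2}$ | $2^{-3}$ | $2^{-4}$ |

Bits left of the point carry integer weights; bits right of it carry fractional weights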

What is $0.101_2$ in decimal?

$0.101_2 = 2^{-1} + 2^{-3} = 0.5 + 0.125 = 0.625$

What is $0.1_{10}$ in binary?

$0.1_{10} = 0.0001100110011\ldots_2$

Digits repeat forever – no exact finite representation!
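The two conversions above can be checked mechanically. The following is a minimal Python sketch, not part of the original slides; the function names `binary_fraction_to_decimal` and `decimal_fraction_to_binary` are hypothetical, and exact `Fraction` arithmetic is assumed so that no rounding interferes.

```python
from fractions import Fraction

def binary_fraction_to_decimal(bits):
    """Exact value of a binary string such as '0.101'."""
    int_part, _, frac_part = bits.partition(".")
    value = Fraction(int(int_part, 2)) if int_part else Fraction(0)
    for i, bit in enumerate(frac_part, start=1):
        value += int(bit) * Fraction(1, 2 ** i)   # i-th bit after the point weighs 2^-i
    return value

def decimal_fraction_to_binary(x, max_bits):
    """First max_bits binary digits after the point, for 0 <= x < 1."""
    digits = []
    for _ in range(max_bits):
        x *= 2              # doubling moves the next bit in front of the point
        bit = int(x)        # the integer part is that bit (0 or 1)
        digits.append(str(bit))
        x -= bit
    return "0." + "".join(digits)

print(float(binary_fraction_to_decimal("0.101")))        # 0.625
print(decimal_fraction_to_binary(Fraction(1, 10), 13))   # 0.0001100110011
```

Asking for more digits of 1/10 only extends the repeating 0011 pattern, which is why no finite binary string represents it exactly.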


Fixed Point Arithmetic

How do we represent non-integer numbers on computers?

Use binary scaling: e.g. let one increment represent 1/16th

Hence, the stored integer 1 represents 1/16: $0000.0001_2 = 1 \cong 1/16 = 0.0625$

Likewise, the stored integer 24 represents 24/16: $0001.1000_2 = 24 \cong 24/16 = 1.5$

Fixed position for binary point

Addition same as integers: a/c + b/c = (a + b)/c

Multiplication mostly unchanged, but must scale after

$\frac{a}{c} \times \frac{b}{c} = \frac{(a \times b)/c}{c}$

e.g. 1.5 × 2.5 = 3.75

$0001.1000_2 \times 0010.1000_2 = 0011.1100\,0000_2$

Thankfully division by $2^e$ is fast!
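As an illustration of the scaling rule, here is a small Python sketch of a fixed-point format with four fractional bits, so c = 16. The names `FRAC_BITS`, `to_fixed`, `fx_add` and `fx_mul` are assumptions made for this example, and overflow of the word size is not modelled.

```python
FRAC_BITS = 4                  # one increment represents 1/16
SCALE = 1 << FRAC_BITS         # c = 16

def to_fixed(x):
    """Scale a real value to its stored integer."""
    return round(x * SCALE)

def to_real(a):
    """Interpret a stored integer as the value a/c."""
    return a / SCALE

def fx_add(a, b):
    return a + b               # a/c + b/c = (a + b)/c, plain integer addition

def fx_mul(a, b):
    # a*b carries a factor of c twice; dividing by c = 2^4 is a right shift,
    # which also discards the low fractional bits of the product.
    return (a * b) >> FRAC_BITS

a, b = to_fixed(1.5), to_fixed(2.5)   # stored as 24 and 40
print(to_real(fx_add(a, b)))          # 4.0
print(to_real(fx_mul(a, b)))          # 3.75
```

The unshifted product 24 × 40 = 960 is the bit pattern 0011 1100 0000 from the slide; shifting right by four bits restores the binary point to its fixed position.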


Overview of Fixed Point

Integer arithmetic is simple and fast

Games often used fixed point for performance;

Digital Signal Processors still do, for accuracy

No need for a separate floating point coprocessor

Limited range

A 32-bit Q24 word can only represent $[-2^7, 2^7 - 2^{-24}]$

Insufficient range for physical constants, e.g.

Speed of light $c = 2.9979246 \times 10^{8}$ m/s

Planck’s constant $h = 6.6260693 \times 10^{-34}$ N·m·s

Exact representation only for (multiples of) powers of two

Cannot represent certain numbers exactly,

e.g. 0.01 for financial calculations


Scientific Notation

We use scientific notation for very large/small numbers

e.g. $2.998 \times 10^{8}$, $6.626 \times 10^{-34}$

General form $\pm m \times b^e$ where

The mantissa $m$ contains a decimal point

The exponent is an integer $e \in \mathbb{Z}$

The base can be any positive integer $b \in \mathbb{N}^*$

A number is normalised when 1 ≤ m < b

Normalise a number by adjusting the exponent e

$10 \times 10^0$ is not normalised; but $1.0 \times 10^1$ is

In general, which number cannot be normalised?

Zero can never be normalised

NaN and ±∞ are also not normalised
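Normalisation by adjusting the exponent can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `normalise` is hypothetical, and with ordinary floats the repeated division may introduce small rounding errors, so `Fraction` inputs are a better choice when an exact mantissa matters.

```python
from fractions import Fraction

def normalise(x, base=10):
    """Return (m, e) with x == m * base**e and 1 <= |m| < base."""
    if x == 0:
        raise ValueError("zero can never be normalised")
    sign = -1 if x < 0 else 1
    m, e = abs(x), 0
    while m >= base:      # mantissa too large: move the point left
        m /= base
        e += 1
    while m < 1:          # mantissa too small: move the point right
        m *= base
        e -= 1
    return sign * m, e

print(normalise(10))                    # (1.0, 1)
print(normalise(0.75, base=2))          # (1.5, -1)
print(normalise(Fraction(299792458)))   # (Fraction(149896229, 50000000), 8)
```

Zero is rejected because no exponent can bring a zero mantissa into the range 1 ≤ m < b.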


Floating Point Notation

Floating point – scientific notation in base 2

In the past, manufacturers had incompatible hardware

Different bit layouts, rounding, and representable values

Programs gave different results on different machines

IEEE 754: Standard for Binary Floating-Point Arithmetic

IEEE – Institute of Electrical and Electronics Engineers

Exact definitions for arithmetic operations

– the same wrong answers, everywhere

IEEE Single (32-bit) and Double (64-bit) precision

Gives exact layout of bits, and defines basic arithmetic

Implemented on coprocessor 1 of the MIPS architecture

Part of Java language definition

Real Numbers IEEE 754 Floating Point Arithmetic

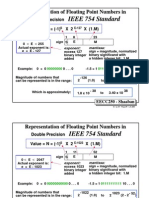

IEEE 754 Floating Point Format

Computer representations are finite

IEEE 754 is a sign and magnitude format

Here, magnitude consists of the exponent and mantissa

Single Precision (32 bits)

| sign  | exponent | mantissa |
|-------|----------|----------|
| 1 bit | 8 bits   | 23 bits  |

Double Precision (64 bits)

| sign  | exponent | mantissa |
|-------|----------|----------|
| 1 bit | 11 bits  | 52 bits  |


IEEE 754 Encoding (Single Precision)

Sign: 0 for positive, 1 for negative

Exponent: 8-bit excess-127, between $01_{16}$ and $\mathrm{FE}_{16}$

$00_{16}$ and $\mathrm{FF}_{16}$ are reserved – see later

Mantissa: 23-bit binary fraction

No need to store leading bit – the hidden bit

Normalised binary numbers always begin with 1

cf. normalised decimal numbers begin with a digit from 1 to 9

Reading the fields as unsigned integers, the value is

$(-1)^s \times 1.m \times 2^{e-127}$

Special numbers contain $00_{16}$ or $\mathrm{FF}_{16}$ in the exponent field

±∞, NaN, 0 and some very small values
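The field layout and the value formula can be tried out with Python's standard `struct` module, which packs a float into its 32-bit single-precision pattern. The helper names `decode_single` and `value_of` are hypothetical, and the sketch only handles the normalised case (exponent field between 01 and FE in hex).

```python
import struct

def decode_single(x):
    """Split x, stored as a 32-bit single, into (sign, exponent, mantissa) fields."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    s = bits >> 31              # 1 bit
    e = (bits >> 23) & 0xFF     # 8 bits, excess-127
    m = bits & 0x7FFFFF         # 23 bits, hidden bit not stored
    return s, e, m

def value_of(s, e, m):
    """Evaluate (-1)^s * 1.m * 2^(e-127) for a normalised number."""
    if not 0x01 <= e <= 0xFE:
        raise ValueError("exponent field is reserved for special values")
    return (-1) ** s * (1 + m / 2 ** 23) * 2.0 ** (e - 127)

print(decode_single(1.5))                 # (0, 127, 4194304)
print(value_of(*decode_single(-100.0)))   # -100.0
```

The `1 +` in `value_of` is the hidden bit: it is never stored, but always contributes to a normalised value.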


IEEE 754 Special Values

Largest and smallest normalised 32-bit number?

Largest: $1.111\ldots_2 \times 2^{127} \approx 3.403 \times 10^{38}$

Smallest: $1.000\ldots_2 \times 2^{-126} \approx 1.175 \times 10^{-38}$

We can still represent some values less than $2^{-126}$

Denormalised numbers have $00_{16}$ in the exponent field

The hidden bit is then read as 0 rather than 1

Conveniently, $0000\,0000_{16}$ represents (positive) zero

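IEEE 754 Single Precision Summary

| Exponent                             | Mantissa | Value                        | Description  |
|--------------------------------------|----------|------------------------------|--------------|
| $00_{16}$                            | = 0      | 0                            | Zero         |
| $00_{16}$                            | ≠ 0      | $\pm 0.m \times 2^{-126}$    | Denormalised |
| $01_{16}$ to $\mathrm{FE}_{16}$      | any      | $\pm 1.m \times 2^{e-127}$   | Normalised   |
| $\mathrm{FF}_{16}$                   | = 0      | $\pm\infty$                  | Infinities   |
| $\mathrm{FF}_{16}$                   | ≠ 0      | NaN                          | Not a Number |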
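The summary table translates directly into a case analysis on the exponent and mantissa fields. The sketch below is illustrative only; `classify` and `denormalised_value` are hypothetical names, and the input is assumed to be a 32-bit pattern given as a Python integer.

```python
def classify(bits):
    """Name the table row that a 32-bit single-precision pattern falls into."""
    e = (bits >> 23) & 0xFF
    m = bits & 0x7FFFFF
    if e == 0x00:
        return "zero" if m == 0 else "denormalised"
    if e == 0xFF:
        return "infinity" if m == 0 else "NaN"
    return "normalised"

def denormalised_value(bits):
    """Evaluate +/- 0.m * 2^-126; the hidden bit is read as 0."""
    sign = -1 if bits >> 31 else 1
    m = bits & 0x7FFFFF
    return sign * (m / 2 ** 23) * 2.0 ** -126

print(classify(0x00000000))   # zero
print(classify(0x00000001))   # denormalised
print(classify(0x3F800000))   # normalised
print(classify(0x7F800000))   # infinity
print(classify(0x7FC00000))   # NaN
```

The smallest positive denormalised pattern, `0x00000001`, evaluates to $2^{-23} \times 2^{-126} = 2^{-149}$.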


Overflow, Underflow, Infinities and NaN

Underflow: magnitude of result < smallest normalised number

Overflow: magnitude of result > largest representable number

Why do we want infinities and NaN?

Alternative is to give a wrong value, or raise an exception

Overflow gives ±∞ (underflow gives denormalised)

In long computations, only a few results may be wrong

Raising exceptions would abort entire process

Giving a misleading result is just plain dangerous

Infinities and NaN propagate through calculations, e.g.

1 + (1 ÷ 0) = 1 + ∞ = ∞

(0 ÷ 0) + 1 = NaN + 1 = NaN
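Propagation is easy to observe in Python, whose `float` is an IEEE 754 double on mainstream platforms. One caveat: Python itself raises `ZeroDivisionError` for `1.0 / 0.0` rather than returning ∞, so this sketch starts from `math.inf` and `math.nan` instead of dividing by zero. The variable names are arbitrary.

```python
import math

inf, nan = math.inf, math.nan

sum_with_inf = 1 + inf        # infinity propagates through addition
sum_with_nan = nan + 1        # NaN propagates through addition
undefined = inf - inf         # no meaningful answer, so the result is NaN
overflowed = 1e308 * 10       # exceeds the largest double: overflow to infinity
underflowed = 5e-324 / 2      # below the smallest denormalised double: rounds to zero

print(sum_with_inf, sum_with_nan, undefined)   # inf nan nan
print(overflowed, underflowed)                 # inf 0.0
print(nan == nan)                              # False: NaN compares unequal to everything
```

Because NaN is not equal even to itself, code should test for it with `math.isnan` rather than `==`.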


Examples

Convert the following to 32-bit IEEE 754 format

$1.0_{10} = 1.0_2 \times 2^0$ = 0 0111 1111 00000...

$1.5_{10} = 1.1_2 \times 2^0$ = 0 0111 1111 10000...

$100_{10} = 1.1001_2 \times 2^6$ = 0 1000 0101 10010...

$0.1_{10} \approx 1.10011_2 \times 2^{-4}$ = 0 0111 1011 100110...

Check your answers using

http://www.h-schmidt.net/FloatApplet/IEEE754.html

Convert to a hypothetical 12-bit format,

4 bits excess-7 exponent, 7 bits mantissa:

$3.1416_{10} \approx 1.1001001_2 \times 2^1$ = 0 1000 1001001
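The conversions above follow one recipe: normalise, bias the exponent, and keep the fraction bits. The Python sketch below applies that recipe to any exponent and mantissa width, so it covers both the 32-bit examples and the hypothetical 12-bit format. The function `encode` and its parameters are assumptions for illustration; it rounds the mantissa to nearest and does not handle zero, denormalised numbers, infinities or NaN.

```python
import math

def encode(x, exp_bits=8, man_bits=23):
    """Encode a normalised value as a 'sign exponent mantissa' bit string."""
    if x == 0 or math.isinf(x) or math.isnan(x):
        raise ValueError("only normalised values are handled in this sketch")
    bias = 2 ** (exp_bits - 1) - 1          # 127 for 8 bits, 7 for 4 bits
    sign = 1 if x < 0 else 0
    frac, exp = math.frexp(abs(x))          # abs(x) == frac * 2**exp, 0.5 <= frac < 1
    m, e = frac * 2, exp - 1                # rescale so that 1 <= m < 2
    mantissa = round((m - 1) * 2 ** man_bits)   # drop the hidden bit, keep man_bits bits
    if mantissa == 2 ** man_bits:           # rounding carried into the hidden bit
        mantissa, e = 0, e + 1
    biased = e + bias
    if not 1 <= biased <= 2 ** exp_bits - 2:
        raise ValueError("exponent outside the normalised range")
    return f"{sign} {biased:0{exp_bits}b} {mantissa:0{man_bits}b}"

print(encode(100.0))           # 0 10000101 10010000000000000000000
print(encode(0.1))             # 0 01111011 10011001100110011001101
print(encode(3.1416, 4, 7))    # 0 1000 1001001
```

Note that the 23-bit mantissa of 0.1 ends in ...1101 rather than continuing the 0011 pattern, because the infinite expansion has been rounded to the available bits.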


Floating Point Addition

Suppose $f_0 = m_0 \times 2^{e_0}$, $f_1 = m_1 \times 2^{e_1}$ and $e_0 \ge e_1$

Then $f_0 + f_1 = (m_0 + m_1 \times 2^{e_1 - e_0}) \times 2^{e_0}$

1. Shift the smaller number right until the exponents match

2. Add/subtract the mantissas, depending on sign

3. Normalise the sum by adjusting the exponent

4. Check for overflow

5. Round to available bits

6. Result may need further normalisation; if so, go to step 3


Floating Point Multiplication

Suppose $f_0 = m_0 \times 2^{e_0}$ and $f_1 = m_1 \times 2^{e_1}$

Then $f_0 \times f_1 = m_0 \times m_1 \times 2^{e_0 + e_1}$

1. Add the exponents (be careful, excess-n encoding!)

2. Multiply the mantissas, setting the sign of the product

3. Normalise the product by adjusting the exponent

4. Check for overflow

5. Round to available bits

6. Result may need further normalisation; if so, go to step 3
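To make the excess-127 caveat concrete, the following Python sketch multiplies two single-precision numbers using only their integer fields. It is a simplified illustration: `fields` and `fp_mul` are hypothetical helpers, operands and result are assumed to be normalised (no zeros, denormalised numbers, infinities or NaN), and rounding is round to nearest even.

```python
import struct

def fields(x):
    """(sign, biased exponent, 23-bit fraction) of x as a 32-bit single."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return bits >> 31, (bits >> 23) & 0xFF, bits & 0x7FFFFF

def fp_mul(x, y):
    s0, e0, f0 = fields(x)
    s1, e1, f1 = fields(y)
    # Step 1: each exponent field includes the bias, so subtract it once.
    exp = e0 + e1 - 127
    # Step 2: multiply the 24-bit mantissas (hidden bit restored); XOR the signs.
    sign = s0 ^ s1
    prod = (f0 | (1 << 23)) * (f1 | (1 << 23))   # 47 or 48 bits wide
    # Step 3: a product in [2, 4) needs one extra right shift.
    shift = 23
    if prod >> 47:
        shift, exp = 24, exp + 1
    # Step 5: round the dropped bits to nearest even.
    kept, dropped = prod >> shift, prod & ((1 << shift) - 1)
    half = 1 << (shift - 1)
    if dropped > half or (dropped == half and kept & 1):
        kept += 1
        if kept >> 24:                            # Step 6: rounding carried out
            kept, exp = kept >> 1, exp + 1
    # Step 4: only the normalised exponent range is supported here.
    if not 1 <= exp <= 254:
        raise OverflowError("result is not a normalised single")
    bits = (sign << 31) | (exp << 23) | (kept & 0x7FFFFF)
    return struct.unpack(">f", struct.pack(">I", bits))[0]

print(fp_mul(1.5, 2.5))    # 3.75
print(fp_mul(-1.5, 2.0))   # -3.0
```

Forgetting the `- 127` in step 1 would count the bias twice and produce an exponent field 127 too large.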


IEEE 754 Rounding

Hardware needs two extra bits (round, guard) for rounding

IEEE 754 defines four rounding modes

Round Up: always toward +∞

Round Down: always toward −∞

Towards Zero: round down if positive, up if negative

Round to Even: round to the nearest value; in a tie, pick the closest ‘even’ number: e.g. 1.5 rounds to 2.0, but 4.5 rounds to 4.0

MIPS and Java use round to even by default


Exercise: Rounding

Round off the last two digits from the following

Interpret the numbers as 6-bit sign and magnitude

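| Number   | To +∞ | To −∞ | To Zero | To Even |
|----------|-------|-------|---------|---------|
| +0001.01 | +0010 | +0001 | +0001   | +0001   |
| -0001.11 | -0001 | -0010 | -0001   | -0010   |
| +0101.10 | +0110 | +0101 | +0101   | +0110   |
| +0100.10 | +0101 | +0100 | +0100   | +0100   |
| -0011.10 | -0011 | -0100 | -0011   | -0100   |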

Give 2.2 to two bits after the binary point: $10.01_2$

Round 1.375 and 1.125 to two places: $1.10_2$ and $1.00_2$
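The exercise table can be reproduced with a short Python sketch that rounds each value to an integer under all four modes. The names `MODES`, `parse` and `round_all` are hypothetical; the sketch relies on Python's built-in `round`, which rounds ties to even when applied to a `Fraction`.

```python
from fractions import Fraction
import math

# One rounding function per mode, in the column order of the exercise table.
MODES = {
    "to +inf": math.ceil,
    "to -inf": math.floor,
    "to zero": math.trunc,
    "to even": round,        # nearest integer, ties go to the even neighbour
}

def parse(s):
    """Parse a sign-and-magnitude binary string such as '-0001.11' exactly."""
    sign = -1 if s[0] == "-" else 1
    int_part, _, frac_part = s.lstrip("+-").partition(".")
    magnitude = Fraction(int(int_part + frac_part, 2), 2 ** len(frac_part))
    return sign * magnitude

def round_all(s):
    """Round off the fractional bits of s under each mode."""
    x = parse(s)
    return [mode(x) for mode in MODES.values()]

for number in ["+0001.01", "-0001.11", "+0101.10", "+0100.10", "-0011.10"]:
    print(number, round_all(number))
# +0001.01 [2, 1, 1, 1]
# -0001.11 [-1, -2, -1, -2]
# +0101.10 [6, 5, 5, 6]
# +0100.10 [5, 4, 4, 4]
# -0011.10 [-3, -4, -3, -4]
```

The rows for 5.5 and 4.5 show the tie rule: both are exactly halfway, and each goes to its even neighbour (6 and 4).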

You might also like

- Digital Electronics For Engineering and Diploma CoursesFrom EverandDigital Electronics For Engineering and Diploma CoursesNo ratings yet

- L02 Numerical ComputationDocument43 pagesL02 Numerical ComputationMuhammad Ali RazaNo ratings yet

- IEEE Standard 754 Floating-PointDocument9 pagesIEEE Standard 754 Floating-PointLucifer StarkNo ratings yet

- Computations in Mechanical Engineering: Numbers and VectorsDocument18 pagesComputations in Mechanical Engineering: Numbers and VectorsTanaka OkadaNo ratings yet

- Lec07 - Computer Arithmetic - Floating-Point Representation and ArithmeticDocument42 pagesLec07 - Computer Arithmetic - Floating-Point Representation and ArithmeticTAN SOO CHINNo ratings yet

- CEF352 Lect2Document18 pagesCEF352 Lect2Tabi JosephNo ratings yet

- What Are Floating Point Numbers?Document7 pagesWhat Are Floating Point Numbers?gsrashu19No ratings yet

- Implementation of Floating Point MultiplierDocument4 pagesImplementation of Floating Point MultiplierRavi KumarNo ratings yet

- COA UNIT-III PPTs Dr.G.Bhaskar ECEDocument64 pagesCOA UNIT-III PPTs Dr.G.Bhaskar ECECharan Sai ReddyNo ratings yet

- Pooja VashisthDocument35 pagesPooja VashisthApurva SinghNo ratings yet

- GSC-320 Numerical Computing: Lecturer:Fasiha IkramDocument17 pagesGSC-320 Numerical Computing: Lecturer:Fasiha IkramYunus Sh Mustafa ZanzibarNo ratings yet

- Single Precision Floating-Point ConversionDocument6 pagesSingle Precision Floating-Point Conversionps_v@hotmail.comNo ratings yet

- The IEEE Standard For Floating Point ArithmeticDocument9 pagesThe IEEE Standard For Floating Point ArithmeticVijay KumarNo ratings yet

- Floating Point Numbers: CS031 September 12, 2011Document22 pagesFloating Point Numbers: CS031 September 12, 2011fmsarwarNo ratings yet

- CA Notes 01Document14 pagesCA Notes 01Akshat AgarwalNo ratings yet

- The World Is Not Just Integers: Programming Languages Support Numbers With FractionDocument51 pagesThe World Is Not Just Integers: Programming Languages Support Numbers With FractionIT PersonNo ratings yet

- COMP0068 Lecture10 High Level Data TypesDocument25 pagesCOMP0068 Lecture10 High Level Data Typesh802651No ratings yet

- UNIT V Finite Word Length Effects Lecture Notes ModifiedDocument11 pagesUNIT V Finite Word Length Effects Lecture Notes ModifiedramuamtNo ratings yet

- Floating PointDocument26 pagesFloating PointdarshanNo ratings yet

- Approximations, Errors and Their Analysis: AML702 Applied Computational MethodsDocument13 pagesApproximations, Errors and Their Analysis: AML702 Applied Computational Methodsprasad_batheNo ratings yet

- IEEE Standard 754 Floating Point Numbers - GeeksforGeeks PDFDocument7 pagesIEEE Standard 754 Floating Point Numbers - GeeksforGeeks PDFMaksat BayramovNo ratings yet

- Lecture 10 (Temp)Document50 pagesLecture 10 (Temp)AntonNo ratings yet

- Lecture 4 - Computer ArithmeticDocument18 pagesLecture 4 - Computer ArithmeticHuzaika MatloobNo ratings yet

- ECE 0142 Floating Point RepsDocument31 pagesECE 0142 Floating Point RepsRaaghav SrinivasanNo ratings yet

- Floating Point ArithmeticDocument30 pagesFloating Point ArithmeticshwetabhagatNo ratings yet

- 32 Bit Floating Point ALUDocument7 pages32 Bit Floating Point ALUTrần Trúc Nam Hải0% (1)

- Computer Organization and Architecture Week 3Document32 pagesComputer Organization and Architecture Week 3Iksan BukhoriNo ratings yet

- Akdeniz University CSE206 Computer Organization Number Systems and Computer ArithmeticDocument39 pagesAkdeniz University CSE206 Computer Organization Number Systems and Computer Arithmetictemhem racuNo ratings yet

- Floating PointDocument26 pagesFloating PointShanmugha Priya BalasubramanianNo ratings yet

- Division: Check For 0 Divisor Long Division ApproachDocument27 pagesDivision: Check For 0 Divisor Long Division ApproachArvind RameshNo ratings yet

- 2 CS1FC16 Information RepresentationDocument4 pages2 CS1FC16 Information RepresentationAnna AbcxyzNo ratings yet

- MATH-206 Assignment 1Document3 pagesMATH-206 Assignment 1Ch M Sami JuttNo ratings yet

- Floating PointDocument33 pagesFloating PointProject Manager IIT Kanpur CPWDNo ratings yet

- Design of Single Precision Floating Point Multiplication Algorithm With Vector SupportDocument8 pagesDesign of Single Precision Floating Point Multiplication Algorithm With Vector SupportInternational Journal of Scientific Research in Science, Engineering and Technology ( IJSRSET )No ratings yet

- FLPTDocument13 pagesFLPTfunny chintuNo ratings yet

- IEEE Single and Double Precision Floating Point FormatsDocument6 pagesIEEE Single and Double Precision Floating Point Formatsomar shadyNo ratings yet

- International Journal of Engineering Research and DevelopmentDocument6 pagesInternational Journal of Engineering Research and DevelopmentIJERDNo ratings yet

- Floating point and fixed point number representationsDocument8 pagesFloating point and fixed point number representationsPramodh PalukuriNo ratings yet

- BCA-Digital Computer OrganizationDocument116 pagesBCA-Digital Computer Organization27072002kuldeepNo ratings yet

- MATH1070 2 Error and Computer Arithmetic PDFDocument60 pagesMATH1070 2 Error and Computer Arithmetic PDFShah Saud AlamNo ratings yet

- MATH1070 2 Error and Computer ArithmeticDocument60 pagesMATH1070 2 Error and Computer ArithmeticphongiswindyNo ratings yet

- The World Is Not Just Integers: Programming Languages Support Numbers With FractionDocument51 pagesThe World Is Not Just Integers: Programming Languages Support Numbers With FractionAyala AlexNo ratings yet

- Floating Point Arithmetic ClassDocument24 pagesFloating Point Arithmetic ClassManisha RajputNo ratings yet

- Floatpoint IntroDocument20 pagesFloatpoint IntroItishree PatiNo ratings yet

- FAC1002 - Binary Number SystemDocument31 pagesFAC1002 - Binary Number Systemmohammad aljihad bin mohd jamal FJ1No ratings yet

- CS37 Lecture 7 Floating Point NumbersDocument39 pagesCS37 Lecture 7 Floating Point NumbersdvfgdfggdfNo ratings yet

- 01 Error-1Document34 pages01 Error-1Dian Hani OktavianaNo ratings yet

- Chapter 3 Arithmetic For Computers-NewDocument32 pagesChapter 3 Arithmetic For Computers-NewStephanieNo ratings yet

- 95% Completely Clueless: " of The Folks Out There Are About Floating-Point."Document33 pages95% Completely Clueless: " of The Folks Out There Are About Floating-Point."svkarthik83No ratings yet

- 01A - Erorr Komp Num 01Document29 pages01A - Erorr Komp Num 01Mess YeahNo ratings yet

- Computer Systems Architecture Assignment 1 ReportDocument15 pagesComputer Systems Architecture Assignment 1 ReportDũng NguyễnNo ratings yet

- 10 MIPS Floating Point ArithmeticDocument28 pages10 MIPS Floating Point ArithmeticHabibullah Khan MazariNo ratings yet

- IEEE Paper On Floating PointDocument28 pagesIEEE Paper On Floating Pointapi-3721660No ratings yet

- Chapter 03Document51 pagesChapter 03abdallahalmaddanNo ratings yet

- Chapter 03 RISC VDocument52 pagesChapter 03 RISC VPushkal MishraNo ratings yet

- Lec 21Document18 pagesLec 21Ahmed Razi UllahNo ratings yet

- CPE 6204 - Number Systems ModuleDocument10 pagesCPE 6204 - Number Systems ModuleBen GwenNo ratings yet

- Floating Point Arithmetic and RepresentationsDocument19 pagesFloating Point Arithmetic and RepresentationsDevika csbsNo ratings yet

- Computer Science Coursebook-9-24Document16 pagesComputer Science Coursebook-9-24api-470106119No ratings yet

- Decision Tree Learning ExplainedDocument16 pagesDecision Tree Learning ExplainedAshish TiwariNo ratings yet

- Snoop Ivades Planet EarthDocument29 pagesSnoop Ivades Planet EarthKoutheir EBNo ratings yet

- Classification: Decision TreesDocument30 pagesClassification: Decision TreesAshish TiwariNo ratings yet

- Decision Trees and Boosting: Helge Voss (MPI-K, Heidelberg) TMVA WorkshopDocument30 pagesDecision Trees and Boosting: Helge Voss (MPI-K, Heidelberg) TMVA WorkshopAshish TiwariNo ratings yet

- Decision Trees CLSDocument43 pagesDecision Trees CLSAshish TiwariNo ratings yet

- Decision Trees: Andrew W. Moore Professor School of Computer Science Carnegie Mellon UniversityDocument101 pagesDecision Trees: Andrew W. Moore Professor School of Computer Science Carnegie Mellon UniversityAshish TiwariNo ratings yet

- Introduction To HDL Day - 3: STC On HDL For Digital System Design 1Document8 pagesIntroduction To HDL Day - 3: STC On HDL For Digital System Design 1Ashish Tiwari50% (2)

- Precise InterruptsDocument17 pagesPrecise InterruptsAshish TiwariNo ratings yet

- TH THDocument3 pagesTH THDeepa Junnarkar DegwekarNo ratings yet

- Web Services: 1. SSL CertificatesDocument9 pagesWeb Services: 1. SSL CertificatesAshish TiwariNo ratings yet

- CheatsheetDocument1 pageCheatsheetAshish TiwariNo ratings yet

- Real World ProblemsDocument15 pagesReal World ProblemsAshish TiwariNo ratings yet

- Uploads Notes Btech 4sem It OS End Term Sample PaperDocument9 pagesUploads Notes Btech 4sem It OS End Term Sample PaperAshish TiwariNo ratings yet

- GPRS and 3G Wireless Systems ArchitectureDocument20 pagesGPRS and 3G Wireless Systems ArchitectureKamaldeep SinghNo ratings yet

- Advt NTDocument3 pagesAdvt NTAshish TiwariNo ratings yet

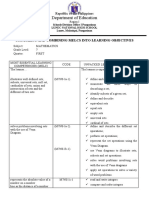

- Department of Education: Unpacking and Combining Melcs Into Learning ObjectivesDocument4 pagesDepartment of Education: Unpacking and Combining Melcs Into Learning ObjectivesEguita Theresa88% (8)

- Total Gadha ProgressionDocument15 pagesTotal Gadha ProgressionSaket Shahi100% (1)

- Don Bosco Technical Institute of MakatiDocument1 pageDon Bosco Technical Institute of MakatiRose Ann YamcoNo ratings yet

- Binary Arithmetic - Negative Numbers and Subtraction: Not All Integers Are PositiveDocument7 pagesBinary Arithmetic - Negative Numbers and Subtraction: Not All Integers Are PositiveBharath ManjeshNo ratings yet

- PRINTF FORMATTINGDocument5 pagesPRINTF FORMATTINGLeandroSousaBrazNo ratings yet

- Easa Dgca Module HandbookDocument148 pagesEasa Dgca Module Handbookkunnann0% (1)

- Q2 Module 6 Grade 4Document8 pagesQ2 Module 6 Grade 4Reyka OlympiaNo ratings yet

- Lecture - 2 PDFDocument7 pagesLecture - 2 PDFTanzir HasanNo ratings yet

- Math 7 Adm Module 9 FinalDocument27 pagesMath 7 Adm Module 9 FinalTheresa EscNo ratings yet

- Map of Barangays in Ormoc City, Leyte, PhilippinesDocument1 pageMap of Barangays in Ormoc City, Leyte, PhilippinesRochie Mae LuceroNo ratings yet

- H. Vic Dannon - Cantor's Set, The Cardinality of The Reals, and The Continuum HypothesisDocument16 pagesH. Vic Dannon - Cantor's Set, The Cardinality of The Reals, and The Continuum HypothesisNine000No ratings yet

- University of Massachusetts Dept. of Electrical & Computer EngineeringDocument39 pagesUniversity of Massachusetts Dept. of Electrical & Computer EngineeringBrim TautanNo ratings yet

- IGCSE (9-1) Maths - Practice Paper 6H Mark SchemeDocument17 pagesIGCSE (9-1) Maths - Practice Paper 6H Mark SchemeHan ZhangNo ratings yet

- Lec 09 111Document246 pagesLec 09 111Anonymous eWMnRr70qNo ratings yet

- Technical description of land parcel boundaries and coordinatesDocument1 pageTechnical description of land parcel boundaries and coordinatesCirilo Jr. LagnasonNo ratings yet

- Expectations: Mathematics 7 Quarter 1 Week 6Document9 pagesExpectations: Mathematics 7 Quarter 1 Week 6Shiela Mae NuquiNo ratings yet

- Powers of 2 Table - Vaughn's SummariesDocument2 pagesPowers of 2 Table - Vaughn's SummariesAnonymous NEqv0Uy7KNo ratings yet

- Verdaderos Numeros HipercomplejosDocument46 pagesVerdaderos Numeros HipercomplejosDaniel AlejandroNo ratings yet

- Final Presentation (Persentage, Base and Rate)Document29 pagesFinal Presentation (Persentage, Base and Rate)Julie Ann Sanchez100% (1)

- MATH-QUARTER 1, WEEK 3 Day 1-4Document58 pagesMATH-QUARTER 1, WEEK 3 Day 1-4Rhodz Cacho-Egango FerrerNo ratings yet

- Ch-9 RD MathsDocument55 pagesCh-9 RD MathsThe VRAJ GAMESNo ratings yet

- Q - 1 Fill in The Blanks. (3M)Document2 pagesQ - 1 Fill in The Blanks. (3M)Amit AnandNo ratings yet

- Math Quiz Bee Grades 3-4Document26 pagesMath Quiz Bee Grades 3-4julie guansingNo ratings yet

- 3 IntroductoryDocument8 pages3 IntroductoryRahul GuptaNo ratings yet

- Digital Electronics 1Document266 pagesDigital Electronics 1Teqip SharmaNo ratings yet

- Prediction Paper - Non Calculator Paper 1 MSDocument4 pagesPrediction Paper - Non Calculator Paper 1 MSnastase_maryanaNo ratings yet

- Fraction Beed2a Elem5Document27 pagesFraction Beed2a Elem5Christine CamaraNo ratings yet

- Topics in Contemporary Mathematics 10th Edition Bello Test Bank 1Document35 pagesTopics in Contemporary Mathematics 10th Edition Bello Test Bank 1Howard Goforth100% (41)

- Number PatternDocument7 pagesNumber PatternYong ChunNo ratings yet

- Lesson 9 5 Multiplication Division of Radical ExpressionsDocument17 pagesLesson 9 5 Multiplication Division of Radical Expressionsapi-233527181100% (1)