The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLII-2/W17, 2019

6th International Workshop LowCost 3D – Sensors, Algorithms, Applications, 2–3 December 2019, Strasbourg, France

STEREO VISION APPLYING OPENCV AND RASPBERRY PI

G. Pomaska

University of Applied Sciences Bielefeld, Germany, gp@imagefact.de

Commission II

KEY WORDS: Stereo Vision, OpenCV, Python, Raspberry Pi, Camera Module, Infrared Photography, Image Processing

ABSTRACT:

This article presents the single-board computer Raspberry Pi and its camera modules for image acquisition. Particular attention is paid to stereoscopic image recording and to post-processing software based on OpenCV. A camera network is designed and applied in the field. Script samples using the OpenCV computer vision library and its Python binding are provided to encourage users to develop their own custom-tailored scripts. Stereoscopic recording is intended for extended baselines without a mechanical bar. Image series are taken in order to remove moving objects from the frames. Finally, the NoIR camera makes infrared photography possible with little effort. Computer, battery pack and lens board are assembled in a 3D-printed housing and operated from a mobile device.

This contribution has been peer-reviewed.

https://doi.org/10.5194/isprs-archives-XLII-2-W17-265-2019 | © Authors 2019. CC BY 4.0 License.

1. INTRODUCTION

1.1 Stereo Vision

Visualizing and extracting spatial information from digital images taken from two vantage points is referred to as stereo vision. Comparing the relative positions of objects in the two frames enables the extraction of 3D information, just as in the biological process. The normal case of stereo vision arranges two cameras horizontally, separated by a base distance and pointing in the same direction. This arrangement results in two different perspectives. A human may compare the half-pictures of the stereogram in a stereoscopic device. A computer applies algorithms to automatically match corresponding points and stores the depth information in a disparity map. The processing chain starts with camera calibration, followed by image refinement and stereoscopic image rectification. The stereoscopic window should be adjusted for convenient viewing. A disparity map may be converted into a point cloud, represented by 3D coordinates with a known scale, or used to present spatial scenes in a single image that responds to mouse movement or to screen rotation on mobile devices.

1.2 OpenCV and Python

The application programmer has access to the necessary algorithms through OpenCV, an API for solving computer vision problems. OpenCV incorporates methods for acquiring, processing and analyzing image data from real scenes. Interfaces to languages such as C++, Java and Python are implemented on different operating systems. The OpenCV library was originally developed by Intel Russia from 1999 onwards. The cross-platform software is now available under the BSD license, free for academic and commercial use. Since 2012 OpenCV has been under continuous development by the non-profit organization OpenCV.org. For beginning programmers, the OpenCV Python binding on a Raspberry Pi is a suitable tool for an introduction to image processing and machine vision.

2. RASPBERRY PI HARDWARE

2.1 Raspberry Pi Boards

Without going too much into detail, some remarks about the computer hardware are given here. The single-board computer Raspberry Pi was originally invented to promote computer science in schools and education. Today this little computer is found in industrial applications as well. By now about 20 million units have been sold. Current devices are Version 3, followed by Version 4 (since June 2019), the Zero and the compute modules. A compute module comes as a plug-in board with connection pins only on the board itself. A Raspberry Pi 3B with 1.2 GHz clock frequency, 1 GB RAM, WLAN and common interfaces has a street price of around 35 Euro. The officially supported OS is the free Raspbian Linux. A micro SD card is used as mass storage. Because of its compactness and the integrated WLAN adapter, the camera equipment presented in this paper is driven by the RPi Zero W.

Figure 1: Raspberry Pi Zero W and camera module V1.3

2.2 RPi Camera Modules

The Raspberry Pi Foundation provides matching camera modules for image acquisition. The successor of the V1.3 module, with its OmniVision sensor and 5 MP (2592 x 1944 pixels) image resolution, is version 2, based on a Sony IMX219 sensor with an extended image resolution of 8 MP (3280 x 2464 pixels). Night vision versions without an infrared filter are also available. The board measures only 25 x 20 mm, and the shortest focus distance is stated as approx. 80 cm. M12 mounts can be attached in order to use different lenses. Figure 2 shows the direct view onto the sensor without a lens and the M12 mount for carrying interchangeable lenses. Beside the original dismounted lens, a tele lens and a 120 degree wide-angle lens are depicted. Camera and computer are connected by a ribbon cable plugged into the CSI interface. The CSI port on the Zero is smaller, so its cable differs from the standard shape. ZeroCam NoIR is a special camera variant for the Zero.
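As a quick arithmetic check of the sensor figures quoted for the two camera modules, the following Python sketch computes the pixel count and the aspect ratio from the stated resolutions. The function names are illustrative only and do not come from the paper.

```python
# Sensor resolutions as quoted in the text for the two camera modules.
SENSORS = {
    "V1.3": (2592, 1944),
    "V2": (3280, 2464),
}


def megapixels(width, height):
    """Return the pixel count in millions."""
    return width * height / 1e6


def aspect_ratio(width, height):
    """Return image width divided by image height."""
    return width / height


for name, (w, h) in SENSORS.items():
    print(f"{name}: {megapixels(w, h):.2f} MP, aspect ratio {aspect_ratio(w, h):.3f}")
```

Both modules come out close to the nominal 5 MP and 8 MP, and both full-resolution frames are (approximately) 4:3.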

Shell commands raspistill, raspiyuv and raspivid, raspividyuv def viewPicture(file):

can drive the camera for still photography and video recording. view = cv2.resize(cv2.imread(file),\

The yuv extension don't use an encoder and writes directly to (320,240))

the disk. Camera format is 4:3 or 16:9 according to the selected cv2.imshow(file,view)

cv2.waitKey(0)

mode. The camera produces previews only on directly

connected HDMI displays. Small displays beginning from 3.5

inch with low resolutions are offered for monitoring the camera def main():

image. A command for testing the camera may use the cam = PiCamera(resolution=(2592,1944),\

following options: framerate = 15)

raspstill -k -t 0 -vf -hf cam.iso = 200

K switches to keyboard mode, t defines the preview time in ms, time.sleep(2)#wait automatic control

0 is infinitely, vf and hf flip the image vertically and cam.shutter_speed = cam.exposure_speed

horizontally. One can quit the mode by pressing the x key. cam.exposure_mode = 'off'

g = cam.awb_gains

cam.awb_mode = 'off'

cam.awb_gains = g

for i in range (n): # take n picture

imName = BASEDIR + '%02d.jpg' %i

take_picture (cam,imName)

viewPicture(imName)

time.sleep(delay)

Figure 2: Lens modification accessories cv2.destroyAllWindows()

main()

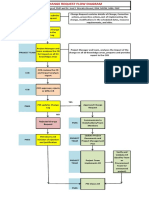

3. IMAGE ACQUISITION 3.2 A Camera Network

3.1 Camera Settings A basic network configuration for stereoscopic imaging is given

as illustrated in figure 3. A mobile router handles the fix IP

The PiCamera class is a Python API for driving the camera via addresses of two RPis and connects to a mobile computer

software commands. Focusing on stereo vision two cameras named host here. Additional hardware in this configuration is a

should fire exactly at the same time. The characteristics of a HDMI display and a small thermal printer. The host computer

rolling shutter system do not meet a high demand on takes use of a Web interface for further image processing. Image

synchronization. Since we consider here a very long base line files are named by a random code. Catching the according QR

and series for image stacking the latency is a minor problem. codes with a smartphone enables direct download. Random

codes are stored and printed for later downloading from the

If a sync time within 1/1000 s is required, the crowd funding internet.

project StereoPi will comply. StereoPi is an interface board

with two CSI sockets connected to a RPi compute module. The

end user benefits from the application software SLP (StereoPi

Live stream Playground), distributed as a Raspian Linux image

file. For more details about StereoPi visit the projects web site

http://stereopi.com.

As applied in time laps photography images should display

equivalent lightning conditions in brightness, saturation and

color because of intending the later stacking process. A recipe

for capturing consistent images runs as follows: Fix the shutter

speed, define the ISO value, set analog and digital gain to fixed

values, switch exposure mode and white balance off and set

fixed values for automatic white balance gains. First let the

camera warm up 2 seconds and wait for automatic control. A

python script that takes n images within a time delay reads as

follows:

from picamera import PiCamera

import time Figure 3: Camera network for taking stereograms

import cv2

#

BASEDIR = 'photo/' Virtually sync of the cameras can be implemented by GPIO

n = 7 # number of pictures request or wireless by SSH secure shell protocol or TCP socket

delay = 10 # time between pictures programming. Talking about socket programming is outside the

def take_picture(cam,file): scope of this paper. Sending a command to a remote computer

cam.capture(file) via SSH is in python a simple call of a sub process:


```python
import subprocess

# IPremoteComputer is a placeholder for the address of the remote RPi
cmdLine = ['ssh', 'pi@IPremoteComputer', 'raspistill', '-o', 'image.jpg']
subprocess.run(cmdLine)
```

To avoid the password prompt, the sshpass tool should be added to the command like

```python
# remaining ssh arguments as above
cmdLine = ['sshpass', '-p', 'passwordRemoteComputer', 'ssh', ...]
```

4. IMAGE PROCESSING APPLYING OPENCV

Because of the limited computing power of the RPi Zero, the following procedures run on the so-called host computer. The host is a mobile device such as a tablet or notebook, or may be an RPi 4. Whereas camera calibration is a pre-process, all other functions follow as post-production.

4.1 Camera Calibration

OpenCV camera calibration is a standard procedure, carried out by taking pictures of a regular point pattern or a checkerboard. Points, or intersections on the checkerboard, are detected in the images and stored in the array of image coordinates, while the corresponding object coordinates are given by the pattern and a constant z-value. Comparing the measurements with the object points yields the camera matrix and the lens distortion for the camera or, in the case of stereo-rig calibration, for both cameras. In addition, the relative position of the vantage points is given by the rotation matrix and the translation vector. Following calibration, the images can be refined by correcting the image coordinates for the principal point offset and removing the lens distortion. After this step the images appear as if they had been taken by a pinhole camera.

Figure 4: Calibrating a camera rig with OpenCV

4.2 Image Pre-processing

4.2.1 Erase moving objects

Both cameras are calibrated and provide, upon request, refined images based on the camera matrix and distortion coefficients known from a previous calibration. Grabbing the images from the cameras into a predefined data structure is the first step in the workflow, before the stacking process follows.

Moving objects have to be removed from multiple images taken from a fixed camera position. We do this by calculating the median of the images, as shown in the script. The code also gives an impression of the power of Python. The directory server/ is passed to the function. The glob module lists all PNG files. Looping through the list, each image is read into a numpy array; calling the methods stack and median then yields the extracted background frame, which is saved.

```python
import glob

import numpy as np
from PIL import Image


def stackServer(target='server/'):
    photos = glob.glob(target + '*.png')
    img = []
    for filename in photos:
        img.append(np.array(Image.open(filename)))
    # color images (H x W x channels) are stacked along a new fourth axis
    sequence = np.stack(img, axis=3)
    # per-pixel median over the series suppresses moving objects
    result = np.median(sequence, axis=3).astype(np.uint8)
    Image.fromarray(result).save('serverStack.png')
    return
```

From now on we continue processing with only two images, the left and right image of the stereogram.

4.3 Histogram Equalization

Histogram equalization is a pre-processing step for balancing images. It makes dark images less dark and bright images less bright. OpenCV provides contrast limited adaptive histogram equalization (CLAHE) through a CLAHE object. A color image must first be transformed into the YUV or LAB format. The L-channel is then processed by the apply method. Subsequently the channels are merged back, and the conversion from LAB to BGR finishes the procedure. Even for landscape shots a significant improvement is obtainable. The following code snippet, applied to an infrared image (figure 5), demonstrates the improvement.

```python
import cv2


def histogramAdjust(imgFile):
    img1 = cv2.imread(imgFile)
    img1_lab = cv2.cvtColor(img1, cv2.COLOR_BGR2LAB)
    clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8))
    # equalize only the lightness channel
    img1_lab[:, :, 0] = clahe.apply(img1_lab[:, :, 0])
    imgRes = cv2.cvtColor(img1_lab, cv2.COLOR_LAB2BGR)
    cv2.imwrite('clahe' + imgFile, imgRes)
    return
```

Figure 5: Histogram equalization applying the CLAHE method

4.4 Stereo Image Rectification

With regard to the geometric conditions, an image pair should show the same image content with a minimum of hidden parts, and perfect alignment is required as well. Camera calibration, or stereo-rig calibration respectively, has to be performed in advance. The process used to project images onto a common


image plane is called image rectification. A distinction is made between the calibrated and the uncalibrated case. The former takes the calibration data as input values, the latter needs corresponding points in the images. The characteristic of rectified images is that all corresponding lines are parallel to the horizontal axis and have the same vertical values. Vertical parallaxes are thus eliminated. Figure 6 illustrates the situation for an image pair taken freehand. Key points are detected by the ORB operator and matched with the Brute-Force Matcher. Both algorithms are again provided by OpenCV. The corresponding points show different y-coordinates. The parallel-view image pair underneath in figure 6 is a rectified version and no longer shows vertical parallaxes. The colours result from saturation enhancement. The uncalibrated rectification algorithm used here is taken from (Spizhevoy, Rybnikov, 2018) and assumes calibrated images recorded from well-positioned cameras.

Figure 6: Stereo image rectification, the uncalibrated case

4.5 Stereo Formatting

A stereoscopic image pair has to be converted into a stereoscopic format for viewing: side-by-side, cross, or anaglyph are the alternatives. ImageMagick is a professional tool for editing or composing bitmap images. If it is installed on the machine, a system command can be called like

```python
import subprocess

# leftImage, rightImage and targetFile are placeholders for file names
cmdLine = ['magick', 'montage', '-mode', 'concatenate',
           'leftImage', 'rightImage', 'targetFile']
subprocess.run(cmdLine)
```

The combination of left and right image is stored in targetFile; figures 10 and 11 are samples of ImageMagick's montage command.

Setting the stereoscopic window, the plane of zero parallax, and cropping the image are outside the scope of this paper. Finally we calculate the depth map by instantiating a StereoSGBM object with StereoSGBM_create and calling its compute method, again taking advantage of the OpenCV library.

5. FROM CONCEPT TO APPLICATION

Based on the network configuration described above, a hyper-base stereoscopic application for taking photographs on site was to be developed. Characteristics of the procedure are the extremely long baseline without a physical base and the removal of moving objects from the images. This requires taking sequences of pictures followed by a stacking procedure. To ensure equal lighting conditions, exposure is set as described in section 3.1.

5.1 Camera for Field Usage

Figure 7 shows the CAD design and the 3D-printed parts of a camera housing. The lens board carries two cameras. One is equipped with an RGB sensor and an M12 mount for changing lenses from telephoto to wide angle. The other is the NoIR camera, equipped with a step-up ring for exchanging infrared filters with different cut-off wavelengths. The left camera (called Backbord, German for port) acts as the server, the right camera (called Steuerbord, German for starboard) as the client. The server is used for pointing and delivers pictures at intervals as requested by the client. The computer is powered via USB by the battery inside the carrier. In the first step the camera platform has to be levelled with a bubble level. Pointing at 90 degrees to the baseline is supported by software. First, both cameras are pointed at each other. A subsequent rotation by 90 degrees then targets the object. This principle follows the well-known procedure of former phototheodolites. The accuracy of the adjustment depends on the available hardware and should satisfy the requirements of the uncalibrated rectification case. Incidentally, our camera calibration yielded a focal length of 2596 pixels at 3280 x 2464 resolution, which gives a horizontal field of view of about 65 degrees.

Figure 7: Camera housing design fabricated by a 3D printer

5.2 User Interface

Since there are no mechanical operating switches, the entire photography runs under software control. These are headless systems. Booting includes starting the VNC server and the application software. The computers are connected to a network with fixed IP addresses. No keyboard input is intended. Starting the VNC viewer on a mobile device gives access to a GUI. If necessary, the camera settings may be predefined in a separate panel that is used for both cameras.
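The field of view quoted in Section 5.1 can be cross-checked with the pinhole relation FOV = 2 * atan(w / (2 * f)), where w is the image width and f the focal length, both in pixels. The following Python sketch assumes an ideal pinhole camera without lens distortion and reads the calibrated focal length as 2596 pixels. The function name is illustrative and not part of the author's scripts.

```python
import math


def horizontal_fov_deg(image_width_px, focal_length_px):
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    half_angle = math.atan(image_width_px / (2.0 * focal_length_px))
    return math.degrees(2.0 * half_angle)


# values from the calibration quoted in Section 5.1 (assumed to be in pixels)
fov = horizontal_fov_deg(3280, 2596)
print(f"horizontal field of view: {fov:.1f} degrees")
```

The result lies close to the 65 degrees stated in the text, which supports reading the focal length as roughly 2600 pixels at full sensor resolution.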


Figure 8: VNC viewer window showing the camera settings panel

The server script enables pointing along the baseline and checking the images. After the camera has been pointed at the target object, the server hands over control to the client. Note that the horizontal setting is easy to control. For distant objects, controlling the parallel direction is more difficult. Other functions are provided for taking separate series of photos or for backing up the current photos to a randomly named directory. Photo taking is started by the client script and performed quasi-simultaneously. Since several photos are taken for stacking, there is no need for a very short latency. The photos should be backed up before starting a new job. File management is outside the scope of this paper. While the RPi Zero has limited power, post-processing and stereoscopic calculations run on a host computer and do not affect the field work.

Figure 9: GUI interface for taking photographs

5.3 Infrared Photography

A digital camera sensor collects light at wavelengths between approx. 400 nm and 1000 nm. To convert the energy into colour information, the light is separated by colour filters. Well known is the Bayer pattern, with two green filters and one red and one blue filter, used to derive a pixel's colour. In the Foveon sensor the pixels are layered instead of arranged in a chessboard design. To protect the sensor from light outside the visible range, beginning at approx. 600 nm, an IR blocking filter is mounted in front of the lens. This filter is missing on the Raspberry Pi NoIR camera module. Photos taken in daylight with the NoIR sensor appear purple. To keep out visible colour, near-infrared filters block the incoming light up to a certain wavelength. For example, 530 nm, 650 nm or 720 nm are customary for outdoor scenes. In landscape photography it is common to enhance the sky by mixing the colour channels, for example by swapping blue and red. Figure 10 shows an image taken with a 720 nm filter, with the blue and red channels swapped.

Figure 10: Infrared stereo image after channel mixing

The side-by-side stereo format in figure 10 looks like a greyscale image, but it is not. Colour information is still available. Finally, the saturation has to be increased to obtain the desired false-colour effect. The final images are strongly influenced by the existing light conditions as well as by the image manipulations. Observe figure 11 and enjoy the artificial rendering of the scene.

Figure 11: Saturation extended, cross view stereo format

REFERENCES

Image editing software. https://imagemagick.org

Infrared and ultraviolet photography. http://www.astrosurf.com/luxorion/photo-ir-uv.htm

OpenCV-Python Tutorials. https://docs.opencv.org/3.4.7/d6/d00/tutorial_py_root.html

Open source stereoscopic camera based on Raspberry Pi. http://stereopi.com/

Picamera documentation. https://picamera.readthedocs.io/en/release-1.13/

Raspberry Pi Foundation. https://www.raspberrypi.org/

Spizhevoy, Alexey, Rybnikov, Aleksandr: OpenCV 3 Computer Vision with Python Cookbook. Packt Publishing, Birmingham, 2018
