1 Dobot

Uploaded by rk krishna

The Dobot Magician is a multi-task robot arm with a black-and-white unibody design and consistent quality. It can be unboxed and used right away, and it is designed for the desktop: safe and easy to lift. It ships with a vacuum pump kit, a gripper, a writing and drawing kit, a 3D printing kit, and other accessories. Its capabilities include writing, drawing, 3D printing, and even playing chess against a human. The Dobot Magician is an all-in-one robot arm for education. It can be controlled by PC, phone, gesture, or voice, since it communicates over USB, Wi-Fi, and Bluetooth. The arm has 4 axes, a maximum reach of 320 mm, and a position repeatability (control) of 0.2 mm. It also has 13 extension ports, 1 programmable key, and 2 MB of offline command storage. One of the Dobot's main characteristics is its variety of end effectors, such as the 3D printer kit, pen holder, vacuum suction cup, gripper, and laser.

The software for the Dobot Magician includes Dobot Studio (for Windows XP, Win7 SP1, Win8/Win10, and Mac OS X 10.10, 10.11, and 10.12), Repetier Host, GrblController3.6, and DobotBlockly (a visual programming editor), while the software development kit consists of the Communication Protocol and the Dobot Program Library. In ROS, the Dobot offers little functionality beyond controlling the arm from the keyboard using a package.

1. Motion Planning of Humanoid Robot Arm for grasping task [3]

This paper demonstrates a new method for computing a numerical solution to the motion planning problem of a humanoid robot arm. The method is based on a combination of two nonlinear programming techniques: the forward recursion formula and the FBS method.

2. Autonomous vision-guided bi-manual grasping and manipulation [4]

This paper describes the implementation, demonstration, and evaluation of a variety of autonomous, vision-guided manipulation capabilities using a dual-arm Baxter robot. It starts from the case in which a human operator moves the master arm and the slave arm follows it. It then combines an image-based visual servoing scheme with that case to perform dual-arm manipulation without human intervention.
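To make the idea of image-based visual servoing concrete, here is a minimal sketch of the classical control law for a single point feature. It is not the scheme from the paper, whose details are not given here. It assumes a camera that only translates parallel to the image plane, a known constant depth, and normalised image coordinates; the function names, gain, and depth values are hypothetical.

```python
def ibvs_step(s, s_star, depth, gain):
    """Camera velocity (vx, vy) for one point feature, translation only.

    For in-plane translation the interaction matrix is L = -(1/Z) * I,
    so the classical law v = -gain * L^-1 * e reduces to v = gain * Z * e.
    """
    ex, ey = s[0] - s_star[0], s[1] - s_star[1]
    return gain * depth * ex, gain * depth * ey


def simulate(s, s_star, depth=0.5, gain=0.5, dt=0.1, steps=50):
    """Integrate the feature motion s_dot = L * v under the control law."""
    s = list(s)
    for _ in range(steps):
        vx, vy = ibvs_step(s, s_star, depth, gain)
        # A camera moving by +v makes the observed point move by -v / Z.
        s[0] += dt * (-vx / depth)
        s[1] += dt * (-vy / depth)
    return tuple(s)


# Hypothetical start: the feature is offset from the image centre.
final = simulate((0.20, -0.10), (0.0, 0.0))
```

Under these assumptions the feature error shrinks by a constant factor at every step, which is the exponential decay that image-based visual servoing aims for.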

3. Arm grasping for mobile robot transportation using Kinect sensor and kinematic analysis [1]

This paper describes how the grasping and placing strategies for the 6-DOF arms of an H20 mobile robot can be supported to achieve high-precision performance for safe transportation. An accurate kinematic model is used to find a safe path from one pose to another precisely.

4. A new method for mobile robot arm blind grasping using ultrasonic sensors and Artificial Neural Networks [2]

The paper presents a new method for mobile robot arm grasping in indoor laboratory environments. The method adopts a blind strategy, which does not require any sensors to be mounted on the robot arms and avoids calculating the complex kinematic equations of the arms. The method includes:

(a) two on-board ultrasonic sensors in the robot base are used to measure the distances between the robot base and the grasping tables in front of the arm;

(b) an Artificial Neural Network (ANN) is proposed to learn the nonlinear relationship between the ultrasonic distances and the joint control values. After the training step is executed on sampled data, the ANN can generate the next-step joint control values quickly and accurately from a new pair of real-time ultrasonic distance measurements;

(c) to match the blind strategy to the transportation process, an arm control component with user interfaces is developed;

(d) a method named training arm is adopted to prepare the training data for the ANN training procedure.

Finally, an experiment shows that the proposed strategy performs well in both accuracy and real-time computation, and that it can be applied to real-time arm operations for mobile robot transportation in laboratory automation.
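The mapping in step (b) can be illustrated with a very small network. The sketch below is an assumption-laden toy, not the paper's model: the network size, the learning rate, and the synthetic training set (two normalised distances mapped to two joint values by a made-up smooth function) are all hypothetical stand-ins for data that the training arm procedure would supply.

```python
import math
import random


def init_net(n_in, n_hidden, n_out, rng):
    """One tanh hidden layer and a linear output layer; last weight is a bias."""
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]
    return w1, w2


def forward(net, x):
    w1, w2 = net
    xb = list(x) + [1.0]
    h = [math.tanh(sum(w * v for w, v in zip(row, xb))) for row in w1]
    hb = h + [1.0]
    y = [sum(w * v for w, v in zip(row, hb)) for row in w2]
    return h, y


def train_step(net, x, target, lr):
    """One stochastic gradient step on the squared error for a single sample."""
    w1, w2 = net
    h, y = forward(net, x)
    xb = list(x) + [1.0]
    hb = h + [1.0]
    err = [yi - ti for yi, ti in zip(y, target)]
    # Back-propagate through the output weights before they are updated.
    grad_h = [sum(err[k] * w2[k][j] for k in range(len(w2))) * (1.0 - h[j] ** 2)
              for j in range(len(h))]
    for k, row in enumerate(w2):
        for j in range(len(row)):
            row[j] -= lr * err[k] * hb[j]
    for j, row in enumerate(w1):
        for i in range(len(row)):
            row[i] -= lr * grad_h[j] * xb[i]


def mse(net, data):
    total = 0.0
    for x, t in data:
        _, y = forward(net, x)
        total += sum((yi - ti) ** 2 for yi, ti in zip(y, t))
    return total / len(data)


# Hypothetical training set: (distance_1, distance_2) -> (joint_1, joint_2).
grid = [0.0, 0.5, 1.0]
data = [((d1, d2), (0.5 * d1 - 0.3 * d2 + 0.2, 0.4 * d2 + 0.1))
        for d1 in grid for d2 in grid]

rng = random.Random(0)
net = init_net(2, 4, 2, rng)
loss_before = mse(net, data)
for _ in range(500):
    for x, t in data:
        train_step(net, x, t, lr=0.1)
loss_after = mse(net, data)

# Predict joint values for a new pair of distance readings.
joint_values = forward(net, (0.25, 0.75))[1]
```

Once trained, a single forward pass produces the joint values, which is why this kind of approach can avoid solving the arm's kinematic equations at run time.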

5. Find-object

Find-object is a simple Qt interface for trying OpenCV implementations of SIFT, SURF, FAST, BRIEF, and other feature detectors and descriptors. Its features include:

(a) Any parameter can be changed at runtime, which makes it easier to test feature detectors and descriptors without recompiling each time.

(b) Detectors/descriptors supported (from OpenCV): BRIEF, Dense, FAST, GoodFeaturesToTrack, MSER, ORB, SIFT, STAR, SURF, FREAK, and BRISK.

(c) Sample code using the OpenCV C++ interface is available.

(d) For an example of an application using SURF descriptors, see the Find-object author's project RTAB-Map (an appearance-based loop closure detector for SLAM).
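Several of the descriptors listed above (BRIEF, ORB, BRISK, FREAK) are binary, and binary descriptors are usually compared by Hamming distance. The sketch below shows brute-force matching with a ratio test in plain Python. It illustrates the general technique only and does not reproduce Find-object's own matching code; the 8-bit descriptors are made-up values, far shorter than real ones.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match(query, train, ratio=0.8):
    """Brute-force matching with a ratio test.

    Returns (query_index, train_index, distance) for each query descriptor
    whose best match is clearly better than its second-best match.
    Assumes `train` holds at least two descriptors.
    """
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        best, second = ranked[0], ranked[1]
        if best[0] < ratio * second[0]:
            matches.append((qi, best[1], best[0]))
    return matches


# Hypothetical 8-bit descriptors for a scene image and an object image.
scene = [0b10110010, 0b01001101, 0b11110000]
obj = [0b10110011, 0b00001111]

matches = match(obj, scene)
# matches == [(0, 0, 1), (1, 1, 2)]
```

The ratio threshold trades recall for precision: lowering it rejects more ambiguous matches.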

2.2. Yi Yang

1. Dynamic Sensor-Based Control of Robots with Visual Feedback

This paper describes the formulation of sensory feedback models for systems that incorporate complex mappings between robot, sensor, and world coordinate frames. These models explicitly address the use of sensory features to define hierarchical control structures, and the definition of control strategies that achieve consistent dynamic performance. Specific simulation studies examine how adaptive control may be used to control a robot based on image feature reference and feedback signals.

2. Vision-based Motion Planning For A Robot Arm Using Topology Representing Networks

This paper describes the concepts of the Perceptual Control Manifold (PCM) and the Topology Representing Network (TRN). By exploiting the topology-preserving features of the neural network, path planning strategies defined on the TRN lead to flexible obstacle avoidance. The practical feasibility of the approach is demonstrated by the results of simulations with a PUMA robot and experiments with a Mitsubishi robot.

3. A Framework for Robot Motion Planning with Sensor Constraints

This paper proposes a motion planning framework that accounts for sensor constraints with the help of a space called the perceptual control manifold (PCM), defined on the product of the robot configuration space and an image-based feature space. The authors show how the task of intercepting a moving target can be mapped to the PCM, using image feature trajectories of the robot end-effector and the moving target. This leads to the generation of motion plans that satisfy various constraints and optimality criteria derived from the robot kinematics, the control system, and the sensing mechanism. Specific interception tasks are analyzed to illustrate this vision-based planning technique.
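As a rough illustration of what a point on the PCM looks like, the sketch below pairs each joint configuration of a two-link planar arm with the image coordinates of its end-effector. Everything specific here is an assumption made for the example: the link lengths, the overhead camera with a simple scale-and-offset projection, and the function names. The paper's own robot and camera model are not described in this summary.

```python
import math


def end_effector(theta1, theta2, l1=0.3, l2=0.2):
    """Forward kinematics of a hypothetical two-link planar arm (metres)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def image_feature(x, y, scale=500.0, cx=320.0, cy=240.0):
    """Assumed overhead camera: scale in pixels per metre, image y axis down."""
    return cx + scale * x, cy - scale * y


def pcm_point(theta1, theta2):
    """A PCM sample: joint configuration paired with its image feature."""
    u, v = image_feature(*end_effector(theta1, theta2))
    return (theta1, theta2, u, v)


def sample_pcm(n=5):
    """Sample the manifold on an n-by-n grid of joint angles in [-pi/2, pi/2]."""
    step = math.pi / (n - 1)
    return [pcm_point(i * step - math.pi / 2, j * step - math.pi / 2)
            for i in range(n) for j in range(n)]


samples = sample_pcm()
```

Each sample lives in a four-dimensional product space, but the set of samples only covers a two-dimensional surface in it, because the image feature is determined by the joint angles.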

4. Motion Planning of a Pneumatic Robot Using a Neural Network

This paper presents a framework for sensor-based robot motion planning that uses learning to handle arbitrarily configured sensors and robots. Autonomous robotics requires the generation of motion plans for achieving goals while satisfying environmental constraints. Classical motion planning is defined on a configuration space that is generally assumed to be known, implying complete knowledge of both the robot kinematics and the obstacles in the configuration space. Uncertainty, however, is prevalent, which makes such motion planning techniques inadequate for practical purposes. Sensors such as cameras can help in overcoming uncertainties but require proper utilization of sensor feedback for this purpose. A robot motion plan should incorporate constraints from the sensor system as well as criteria for optimizing the sensor feedback. However, in most motion planning approaches, sensing is decoupled from planning.

A framework for motion planning was proposed that considers sensors as an integral part of the definition of the motion goal. The approach is based on the concept of a Perceptual Control Manifold (PCM), defined on the product of the robot configuration space and sensor space. The PCM provides a flexible way of developing motion plans that exploit sensors effectively. However, there are robotic systems where the PCM cannot be derived analytically, since the exact mathematical relationship between configuration space, sensor space, and control signals is not known. Even if the PCM is known analytically, motion planning may require the tedious and error-prone process of calibrating both the kinematic and imaging parameters of the system.

Instead of using analytical expressions to derive the PCM, the authors therefore propose the use of a self-organizing neural network to learn the topology of this manifold. They first develop the general PCM concept, then describe the Topology Representing Network (TRN) algorithm they use to approximate the PCM and a diffusion-based path planning strategy that can be employed in conjunction with the TRN. Path control and flexible obstacle avoidance demonstrate the feasibility of this approach for motion planning in a realistic environment and illustrate the potential for further robotic applications.
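A diffusion-based planner on a graph can be sketched in a few lines. The version below is a generic illustration, not the algorithm from the paper: a small grid graph stands in for the nodes and edges a TRN would learn, the goal is held at a fixed value, that value spreads to neighbours with a decay factor, and the path climbs the resulting field. The graph, decay factor, and function names are hypothetical.

```python
def grid_graph(rows, cols):
    """4-connected grid used here as a stand-in for a learned TRN graph."""
    graph = {}
    for r in range(rows):
        for c in range(cols):
            graph[(r, c)] = [(r + dr, c + dc)
                             for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
                             if 0 <= r + dr < rows and 0 <= c + dc < cols]
    return graph


def diffuse(graph, goal, blocked=(), decay=0.9, sweeps=50):
    """Spread a value of 1.0 from the goal; blocked nodes stay at 0.0."""
    value = {node: 0.0 for node in graph}
    value[goal] = 1.0
    for _ in range(sweeps):
        for node in graph:
            if node == goal or node in blocked:
                continue
            best = max((value[nb] for nb in graph[node] if nb not in blocked),
                       default=0.0)
            value[node] = decay * best
    return value


def follow_gradient(graph, value, start, goal, blocked=()):
    """Climb the diffused field from start to goal; None if no route exists."""
    path = [start]
    while path[-1] != goal:
        options = [nb for nb in graph[path[-1]] if nb not in blocked]
        nxt = max(options, key=lambda nb: value[nb], default=None)
        if nxt is None or value[nxt] <= value[path[-1]]:
            return None
        path.append(nxt)
    return path


graph = grid_graph(3, 3)
blocked = {(0, 1)}  # hypothetical obstacle between start and goal
field = diffuse(graph, goal=(0, 2), blocked=blocked)
path = follow_gradient(graph, field, start=(0, 0), goal=(0, 2), blocked=blocked)
# path == [(0, 0), (1, 0), (1, 1), (1, 2), (0, 2)]
```

Because obstacles are simply nodes excluded from the diffusion, moving an obstacle only requires re-running `diffuse`, which is one way to read the flexible obstacle avoidance mentioned above.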

You might also like

- 3Document6 pages3Samuel HamiltonNo ratings yet

- 11-Mechatronics Robot Navigation Using Machine Learning and AIDocument4 pages11-Mechatronics Robot Navigation Using Machine Learning and AIsanjayshelarNo ratings yet

- Base PapDocument6 pagesBase PapAnonymous ovq7UE2WzNo ratings yet

- 1 PBDocument7 pages1 PBFyndi Aw AwNo ratings yet

- Intelligent Mobility: Autonomous Outdoor Robotics at The DFKIDocument7 pagesIntelligent Mobility: Autonomous Outdoor Robotics at The DFKIFernando MartinezNo ratings yet

- 1 s2.0 S0921889016302512 MainDocument15 pages1 s2.0 S0921889016302512 Mainfaith_khp73301No ratings yet

- VCRA Improved.Document15 pagesVCRA Improved.adwait naikNo ratings yet

- Term Paper EE 105Document11 pagesTerm Paper EE 105shaktishoaibNo ratings yet

- Accelerometer-Based Control of An Industrial Robotic ArmDocument6 pagesAccelerometer-Based Control of An Industrial Robotic ArmsanjusanjanareddyNo ratings yet

- 2005 Path Planning Protocol For Collaborative Multi-Robot SystemsDocument6 pages2005 Path Planning Protocol For Collaborative Multi-Robot SystemsEx6TenZNo ratings yet

- SM3075Document15 pagesSM3075eir235dNo ratings yet

- Cooperative Robots Architecture For An Assistive ScenarioDocument2 pagesCooperative Robots Architecture For An Assistive ScenarioAndreaNo ratings yet

- Virtual Camera Perspectives Within A 3-D Interface For Robotic Search and RescueDocument5 pagesVirtual Camera Perspectives Within A 3-D Interface For Robotic Search and RescueVishu KumarNo ratings yet

- Imtc RobotNavigation 7594Document5 pagesImtc RobotNavigation 7594flv_91No ratings yet

- Isie 2012Document6 pagesIsie 2012rfm61No ratings yet

- A sEMG-Based Shared Control System With No-Target Obstacle Avoidance For Omnidirectional Mobile RobotsDocument11 pagesA sEMG-Based Shared Control System With No-Target Obstacle Avoidance For Omnidirectional Mobile Robotsabinaya_359109181No ratings yet

- Shadow RobotDocument29 pagesShadow Robotteentalksopedia100% (1)

- Introduction ..: Implementation of A Stereo Vision System For Visual Feedback Control of Robotic Arm ManipulationsDocument17 pagesIntroduction ..: Implementation of A Stereo Vision System For Visual Feedback Control of Robotic Arm Manipulationsmostafa elazabNo ratings yet

- WsRobotVision Gori Etal2013Document6 pagesWsRobotVision Gori Etal2013mounamalarNo ratings yet

- Object Handling in Cluttered Indoor Environment With A Mobile ManipulatorDocument6 pagesObject Handling in Cluttered Indoor Environment With A Mobile ManipulatorSomeone LolNo ratings yet

- Teleoperation of Humanoid Motion Using 3G Communication NetworkDocument6 pagesTeleoperation of Humanoid Motion Using 3G Communication NetworkDr Awais YasinNo ratings yet

- A Method of Indoor Mobile Robot Navigation by Using ControlDocument6 pagesA Method of Indoor Mobile Robot Navigation by Using ControlengrodeNo ratings yet

- Wall-Following Using A Kinect Sensor For Corridor Coverage NavigationDocument6 pagesWall-Following Using A Kinect Sensor For Corridor Coverage NavigationRafiudin DarmawanNo ratings yet

- Navigation For An Intelligent Mobile RobotDocument11 pagesNavigation For An Intelligent Mobile RobotOnkar ViralekarNo ratings yet

- Collision Avoidance in Multi-Robot Systems: Chengtao Cai and Chunsheng Yang Qidan Zhu and Yanhua LiangDocument6 pagesCollision Avoidance in Multi-Robot Systems: Chengtao Cai and Chunsheng Yang Qidan Zhu and Yanhua Liangshyam SaravananNo ratings yet

- Mobile Robots For Search and Rescue: Niramon Ruangpayoongsak Hubert Roth Jan ChudobaDocument6 pagesMobile Robots For Search and Rescue: Niramon Ruangpayoongsak Hubert Roth Jan ChudobaNemitha LakshanNo ratings yet

- Final Report On Autonomous Mobile Robot NavigationDocument30 pagesFinal Report On Autonomous Mobile Robot NavigationChanderbhan GoyalNo ratings yet

- Computers: State Estimation and Localization Based On Sensor Fusion For Autonomous Robots in Indoor EnvironmentDocument15 pagesComputers: State Estimation and Localization Based On Sensor Fusion For Autonomous Robots in Indoor EnvironmentsowNo ratings yet

- Integration of Open Source Platform Duckietown and Gesture Recognition As An Interactive Interface For The Museum Robotic GuideDocument5 pagesIntegration of Open Source Platform Duckietown and Gesture Recognition As An Interactive Interface For The Museum Robotic GuideandreNo ratings yet

- Wireless Robot Control With Robotic Arm Using Mems and ZigbeeDocument5 pagesWireless Robot Control With Robotic Arm Using Mems and ZigbeeCh RamanaNo ratings yet

- Automatic Generation of Control Programs For Walking Robots Using Genetic ProgrammingDocument10 pagesAutomatic Generation of Control Programs For Walking Robots Using Genetic ProgrammingManju PriyaNo ratings yet

- FinalYearProjectExecutiveSummary 2012Document41 pagesFinalYearProjectExecutiveSummary 2012Syahmi AzharNo ratings yet

- Removed PapersDocument6 pagesRemoved Papersghulam mohi ud dinNo ratings yet

- Implementation of Visionbased Autonomous Mobile Platform To Cont 2018Document6 pagesImplementation of Visionbased Autonomous Mobile Platform To Cont 2018tou kaiNo ratings yet

- Automated Navigation Using Snake-Like RobotDocument6 pagesAutomated Navigation Using Snake-Like RobotAfzal AhmadNo ratings yet

- High-Level Programming and Control For Industrial Robotics: Using A Hand-Held Accelerometer-Based Input Device For Gesture and Posture RecognitionDocument21 pagesHigh-Level Programming and Control For Industrial Robotics: Using A Hand-Held Accelerometer-Based Input Device For Gesture and Posture RecognitionIvan TrubeljaNo ratings yet

- Mobile Robot Obstacle Avoidance in Labyrinth Environment Using Fuzzy Logic ApproachDocument5 pagesMobile Robot Obstacle Avoidance in Labyrinth Environment Using Fuzzy Logic ApproachHarsha Vardhan BaleNo ratings yet

- PT Engleza (Trebuie Tradus Tot)Document10 pagesPT Engleza (Trebuie Tradus Tot)Spring ReportNo ratings yet

- Indoor Intelligent Mobile Robot Localization Using Fuzzy Compensation and Kalman Filter To Fuse The Data of Gyroscope and MagnetometerDocument12 pagesIndoor Intelligent Mobile Robot Localization Using Fuzzy Compensation and Kalman Filter To Fuse The Data of Gyroscope and MagnetometerWheel ChairNo ratings yet

- Path Planning of Mobile Robot Using ROSDocument8 pagesPath Planning of Mobile Robot Using ROSInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Development of A Dynamic Intelligent Recognition System For A Real-Time Tracking RobotDocument9 pagesDevelopment of A Dynamic Intelligent Recognition System For A Real-Time Tracking RobotIAES International Journal of Robotics and AutomationNo ratings yet

- Object Distance MeasuremetnDocument22 pagesObject Distance MeasuremetnRavi ChanderNo ratings yet

- Robot Control ThesisDocument5 pagesRobot Control Thesisafloaaffbkmmbx100% (1)

- Bet'S Basavakalyan Engineering College BASAVAKALYAN-585327: "RF Controlled Multi-Terrain Robot"Document20 pagesBet'S Basavakalyan Engineering College BASAVAKALYAN-585327: "RF Controlled Multi-Terrain Robot"Abhay BhujNo ratings yet

- 1I11-IJAET1111203 Novel 3dDocument12 pages1I11-IJAET1111203 Novel 3dIJAET JournalNo ratings yet

- Feature Extraction For Autonomous Ro Bot Navigation in Urban Environme NtsDocument8 pagesFeature Extraction For Autonomous Ro Bot Navigation in Urban Environme NtsDouglas IsraelNo ratings yet

- FYP Review PaperDocument6 pagesFYP Review PaperMatthew MontebelloNo ratings yet

- Gesture Controlled Robot With Robotic ArmDocument10 pagesGesture Controlled Robot With Robotic ArmIJRASETPublicationsNo ratings yet

- A Modular Control Architecture For Semi-Autonomous NavigationDocument4 pagesA Modular Control Architecture For Semi-Autonomous NavigationKai GustavNo ratings yet

- Ijett V14P237 PDFDocument4 pagesIjett V14P237 PDFRahul RajNo ratings yet

- JETIRFA06001Document5 pagesJETIRFA06001Trần ĐạtNo ratings yet

- Integrated Image Processing and Path Planning For Robotic SketchingDocument6 pagesIntegrated Image Processing and Path Planning For Robotic SketchingĐào Văn HưngNo ratings yet

- Three Axis Kinematics Study For Motion Capture Using Augmented RealityDocument37 pagesThree Axis Kinematics Study For Motion Capture Using Augmented RealityINNOVATIVE COMPUTING REVIEWNo ratings yet

- Automatic Target Recognition: Advances in Computer Vision Techniques for Target RecognitionFrom EverandAutomatic Target Recognition: Advances in Computer Vision Techniques for Target RecognitionNo ratings yet

- A User Interface For Assistive Grasping: Jonathan Weisz, Carmine Elvezio, and Peter K. AllenDocument6 pagesA User Interface For Assistive Grasping: Jonathan Weisz, Carmine Elvezio, and Peter K. AllenBmn85No ratings yet

- Human Pose Estimation Using Superpixels and SVM ClassifierDocument18 pagesHuman Pose Estimation Using Superpixels and SVM ClassifierNikitaNo ratings yet

- Articulated Body Pose Estimation: Unlocking Human Motion in Computer VisionFrom EverandArticulated Body Pose Estimation: Unlocking Human Motion in Computer VisionNo ratings yet

- Visual Sensor Network: Exploring the Power of Visual Sensor Networks in Computer VisionFrom EverandVisual Sensor Network: Exploring the Power of Visual Sensor Networks in Computer VisionNo ratings yet

- Name PlateDocument8 pagesName Platerk krishnaNo ratings yet

- Skill Development CentreDocument2 pagesSkill Development Centrerk krishnaNo ratings yet

- Add Lab EquipmentsDocument2 pagesAdd Lab Equipmentsrk krishnaNo ratings yet

- Free EnergyDocument12 pagesFree Energyrk krishnaNo ratings yet

- The RoleDocument3 pagesThe Rolerk krishnaNo ratings yet

- PneumaticsDocument7 pagesPneumaticsrk krishnaNo ratings yet

- Pipe Friction 1Document3 pagesPipe Friction 1rk krishnaNo ratings yet

- Aravind Unit 2Document37 pagesAravind Unit 2rk krishnaNo ratings yet

- Centrifugal PumpDocument4 pagesCentrifugal Pumprk krishnaNo ratings yet

- SAU1603 Automotive Safety: School of Mechanical Engineering Department of Automobile EngineeringDocument59 pagesSAU1603 Automotive Safety: School of Mechanical Engineering Department of Automobile Engineeringrk krishnaNo ratings yet

- 2 - VenturimeterDocument4 pages2 - Venturimeterrk krishnaNo ratings yet

- Chemistry April 15Document3 pagesChemistry April 15rk krishnaNo ratings yet

- Bernoullis TheoremDocument5 pagesBernoullis Theoremrk krishnaNo ratings yet

- ADV Communication Skills LAB QPDocument4 pagesADV Communication Skills LAB QPrk krishnaNo ratings yet

- Kom QB (R20)Document6 pagesKom QB (R20)rk krishnaNo ratings yet

- SM Instruction Guide With Screenshots SLED Self MaintainerDocument5 pagesSM Instruction Guide With Screenshots SLED Self MaintainerNoel MendozaNo ratings yet

- Bayer Clinitek-500-Operator-Manual PDFDocument77 pagesBayer Clinitek-500-Operator-Manual PDFSergio Iván CasillasNo ratings yet

- Catalogo Lowara DomoDocument6 pagesCatalogo Lowara DomoSergio ZegarraNo ratings yet

- Ebook Sobotta Atlas of Anatomy Vol 1 General Anatomy and Musculoskeletal System English Latin16Th Ed PDF Full Chapter PDFDocument68 pagesEbook Sobotta Atlas of Anatomy Vol 1 General Anatomy and Musculoskeletal System English Latin16Th Ed PDF Full Chapter PDFkarren.brown188100% (25)

- MBA Project Report of DY Patil Distance MBADocument4 pagesMBA Project Report of DY Patil Distance MBAPrakashB144No ratings yet

- Endodontic and Restorative Management of A Lower Molar With A Calcified Pulp Chamber.Document7 pagesEndodontic and Restorative Management of A Lower Molar With A Calcified Pulp Chamber.Nicolas SantanderNo ratings yet

- Milk Production Trend - Dairy Development - Outlook PDFDocument10 pagesMilk Production Trend - Dairy Development - Outlook PDFrubel_nsuNo ratings yet

- Human Activities Notes For Form TwoDocument3 pagesHuman Activities Notes For Form TwoedwinmasaiNo ratings yet

- Decision Tree PrimerDocument42 pagesDecision Tree PrimerMuzzamil JanjuaNo ratings yet

- Federico Giusfredi - Syntax of The Luwian LanguageDocument229 pagesFederico Giusfredi - Syntax of The Luwian LanguageLucySky7No ratings yet

- Manual Ga Ma785gt Ud3hDocument104 pagesManual Ga Ma785gt Ud3htrasviviNo ratings yet

- Sustainable Transportation and Electric VehiclesDocument17 pagesSustainable Transportation and Electric Vehicless131744No ratings yet

- Anh 7 Cum 1 (2017-2018)Document6 pagesAnh 7 Cum 1 (2017-2018)anhthunoban2011No ratings yet

- CH 1 MCQ ETIDocument14 pagesCH 1 MCQ ETIShlok PurohitNo ratings yet

- Imanager U2000 ME Release Notes 01 PDFDocument180 pagesImanager U2000 ME Release Notes 01 PDFCamilo Andres OrozcoNo ratings yet

- Mathematical LanguageDocument9 pagesMathematical Languagejrqagua00332No ratings yet

- Settlement Chart: Foundation Settlement Analysis - ElasticDocument2 pagesSettlement Chart: Foundation Settlement Analysis - ElasticCSEC Uganda Ltd.No ratings yet

- NewJaisa Corporate ProfileDocument14 pagesNewJaisa Corporate ProfileCRAZY ಕನ್ನಡಿಗNo ratings yet

- For Students ModuleDocument44 pagesFor Students ModuleJohn vincent VellezaNo ratings yet

- Detailed Lesson PlanDocument10 pagesDetailed Lesson PlanNyca PostradoNo ratings yet

- Comparative and Superlative Adjectives Fun Activities Games Grammar Guides 10529Document14 pagesComparative and Superlative Adjectives Fun Activities Games Grammar Guides 10529Nhung Mai ThịNo ratings yet

- Statistics With The Ti-83 Plus (And Silver Edition) : Edit (1) 1:edit 5:setupeditor ProtDocument10 pagesStatistics With The Ti-83 Plus (And Silver Edition) : Edit (1) 1:edit 5:setupeditor ProtkkathrynannaNo ratings yet

- Zachary Evans ResumeDocument2 pagesZachary Evans Resumeapi-248576943No ratings yet

- Wound Repair and RegenerationDocument8 pagesWound Repair and RegenerationdrsamnNo ratings yet

- Rocna and Vulcan Anchor DimensionsDocument2 pagesRocna and Vulcan Anchor DimensionsJoseph PintoNo ratings yet

- Panasonic SC BT200Document60 pagesPanasonic SC BT200Mark CoatesNo ratings yet

- 731 Mitendra Pratap SinghDocument2 pages731 Mitendra Pratap Singhmitendra pratap singhNo ratings yet

- Vietnam Maritime UniversityDocument36 pagesVietnam Maritime UniversitySơn BáchNo ratings yet

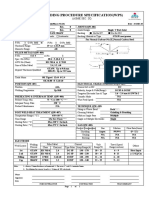

- Welding Procedure Specification (WPS) : (Asme Sec. Ix)Document1 pageWelding Procedure Specification (WPS) : (Asme Sec. Ix)Ahmed Lepda100% (1)

- BCA Syllabus 2016-17 CBCS RevisedDocument83 pagesBCA Syllabus 2016-17 CBCS Revisedmalliknl50% (2)