Calibration Methods and Reference Standards in Ultrasonic Testing

Uploaded by Kevin Huang

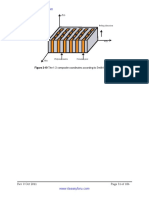

If the material is relatively thick or the crack is relatively short, the crack base echo and the crack tip diffraction echo can appear on the scope display simultaneously (as seen in the figure). This happens because the sound beam diverges until it is wide enough to cover the entire crack length. In such a case, although the angle at which the beam strikes the base of the crack differs slightly from the angle at which it strikes the tip, the previous equation remains reasonably accurate and can be used for estimating the crack length.

Calibration Methods

Calibration refers to the act of evaluating and adjusting the precision and accuracy of measurement equipment. In ultrasonic testing, several forms of calibration must occur. First, the electronics of the equipment must be calibrated to ensure that they are performing as designed. This operation is usually performed by the equipment manufacturer and will not be discussed further in this material. It is also usually necessary for the operator to perform a "user calibration" of the equipment. This user calibration is necessary because most ultrasonic equipment can be reconfigured for use in a large variety of applications. The user must "calibrate" the system, which includes the equipment settings, the transducer, and the test setup, to validate that the desired level of precision and accuracy is achieved.

In ultrasonic testing, reference standards are used to establish a general level of consistency in measurements and to help interpret and quantify the information contained in the received signal. The figure shows some of the most commonly used reference standards for the calibration of ultrasonic equipment. Reference standards are used to validate that the equipment and the setup provide similar results from one day to the next and that similar results are produced by different systems. Reference standards also help the inspector to estimate the size of flaws. In a pulse-echo type setup, signal strength depends on both the size of the flaw and the distance between the flaw and the transducer. The inspector can use a reference standard with an artificially induced flaw of known size, at approximately the same distance from the transducer, to produce a signal. By comparing the signal from the reference standard to that received from the actual flaw, the inspector can estimate the flaw size.

Introduction to Non-Destructive Testing Techniques

Ultrasonic Testing Page 33 of 36
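The comparison between a reference echo and a flaw echo can be sketched numerically. The example below is a minimal illustration, not a sizing procedure: the function names and the amplitude values are hypothetical, and the size estimate assumes that echo amplitude is proportional to reflector area (a small, flat, disc-like reflector in the far field at the same distance as the reference reflector). Real flaws are usually poorer reflectors than machined ones, so the result is only an equivalent reflector size.

```python
import math


def db_difference(flaw_amplitude, reference_amplitude):
    """Level of the flaw echo relative to the reference echo, in dB."""
    if flaw_amplitude <= 0 or reference_amplitude <= 0:
        raise ValueError("amplitudes must be positive")
    return 20.0 * math.log10(flaw_amplitude / reference_amplitude)


def equivalent_reflector_diameter(flaw_amplitude, reference_amplitude,
                                  reference_diameter_mm):
    """Equivalent disc diameter of the flaw, in mm.

    ASSUMPTION: amplitude is proportional to reflector area, and both
    reflectors sit at the same distance from the transducer.
    """
    if flaw_amplitude <= 0 or reference_amplitude <= 0:
        raise ValueError("amplitudes must be positive")
    area_ratio = flaw_amplitude / reference_amplitude
    return reference_diameter_mm * math.sqrt(area_ratio)


if __name__ == "__main__":
    # Hypothetical readings in percent of full screen height.
    reference_echo = 80.0   # from a 3 mm flat-bottom hole in the standard
    flaw_echo = 40.0        # from the indication in the test piece

    print(round(db_difference(flaw_echo, reference_echo), 2))   # about -6.02 dB
    print(round(equivalent_reflector_diameter(flaw_echo, reference_echo, 3.0), 2))  # about 2.12 mm
```

Halving the amplitude corresponds to roughly a 6 dB drop, which under the stated proportionality assumption means half the reflecting area.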

The material of the reference standard should be the same as the material being inspected, and the artificially induced flaw should closely resemble the actual flaw. This second requirement is a major limitation of most standard reference samples. Most use drilled holes and notches that do not closely represent real flaws. In most cases the artificially induced defects in reference standards are better reflectors of sound energy (due to their flatter and smoother surfaces) and produce indications that are larger than those that a similarly sized flaw would produce. Producing more "realistic" defects is cost prohibitive in most cases and, therefore, the inspector can only make an estimate of the flaw size.

Reference standards are mainly used to calibrate instruments prior to performing the inspection and, in general, they are also useful for:

- Checking the performance of both angle-beam and normal-beam transducers (sensitivity, resolution, beam spread, etc.)
- Determining the sound beam exit point of angle-beam transducers
- Determining the refracted angle produced
- Calibrating sound path distance
- Evaluating instrument performance (time base, linearity, etc.)

Introduction to Some of the Common Standards

A wide variety of standard calibration blocks of different designs, sizes and systems of units (mm or inch) are available. The type of standard calibration block used depends on the NDT application and the form and shape of the object being evaluated. The most commonly used standard calibration blocks are those of the International Institute of Welding (IIW), the American Welding Society (AWS) and the American Society for Testing and Materials (ASTM). Only two of the most commonly used standard calibration blocks are introduced here.

IIW Type US-1 Calibration Block

This block is a general-purpose calibration block that can be used for calibrating angle-beam transducers as well as normal-beam transducers. The material from which IIW blocks are prepared is specified as killed, open hearth or electric furnace, low-carbon steel in the normalized condition and with a grain size of McQuaid-Ehn No. 8. Official IIW blocks are dimensioned in the metric system of units.

The block has several features that facilitate checking and calibrating many of the parameters and functions of the transducer as well as the instrument, including: angle-beam exit (index) point, beam angle, beam spread, time base, linearity, resolution, dead zone, sensitivity and range setting.

The figure below shows some of the uses of the block.

ASTM - Miniature Angle-Beam Calibration Block (V2)

The miniature angle-beam block is used in much the same way as the IIW block, but is smaller and lighter. The miniature angle-beam block is primarily used in the field for checking the characteristics of angle-beam transducers.

With the miniature block, beam angle and exit point can be checked for an angle-beam transducer. Both the 25 and 50 mm radius surfaces provide a means of checking the location of the exit point of the transducer and of calibrating the time base of the instrument in terms of metal distance. The small hole provides a reflector for checking beam angle and for setting the instrument gain.
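Calibrating the time base in terms of metal distance amounts to relating echo arrival time to sound path length. The sketch below is illustrative only: it assumes a simple model in which arrival time equals a fixed zero offset (for example, delay in the probe wedge) plus the round-trip time in the metal, and it uses an approximate shear wave velocity for steel of 3240 m/s. The function names and the 6 microsecond offset are hypothetical.

```python
def round_trip_time_us(metal_distance_mm, velocity_m_per_s):
    """Round-trip travel time in microseconds for a one-way metal distance."""
    if velocity_m_per_s <= 0:
        raise ValueError("velocity must be positive")
    # mm -> m is /1000, s -> us is *1e6, so the net factor is *1000.
    return 2.0 * metal_distance_mm * 1000.0 / velocity_m_per_s


def two_point_calibration(d1_mm, t1_us, d2_mm, t2_us):
    """Solve t = t0 + 2*d/v for velocity (m/s) and zero offset t0 (us).

    ASSUMPTION: the two echoes come from reflectors at known metal
    distances, such as the 25 mm and 50 mm radius surfaces.
    """
    if t2_us == t1_us or d2_mm == d1_mm:
        raise ValueError("the two calibration points must differ")
    velocity_mm_per_us = 2.0 * (d2_mm - d1_mm) / (t2_us - t1_us)
    zero_offset_us = t1_us - 2.0 * d1_mm / velocity_mm_per_us
    return velocity_mm_per_us * 1000.0, zero_offset_us


if __name__ == "__main__":
    shear_velocity = 3240.0  # m/s, approximate value for steel (assumed)
    wedge_delay = 6.0        # us, hypothetical zero offset

    # Simulated echo arrival times from the two radius surfaces.
    t_25 = wedge_delay + round_trip_time_us(25.0, shear_velocity)
    t_50 = wedge_delay + round_trip_time_us(50.0, shear_velocity)

    velocity, offset = two_point_calibration(25.0, t_25, 50.0, t_50)
    print(round(velocity, 1), round(offset, 3))
```

Using two known distances rather than one lets the velocity and the zero offset be separated, which is why a block with two radii is convenient for this check.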

Distance Amplitude Correction (DAC)

Acoustic signals from the same reflecting surface will have different amplitudes at different distances from the transducer. A distance amplitude correction (DAC) curve provides a means of establishing a graphic "reference level sensitivity" as a function of the distance to the reflector (i.e., time on the A-scan display). With DAC, signals reflected from similar discontinuities at different depths can be evaluated against a common reference, because the loss of signal amplitude with depth has been accounted for.

DAC allows the loss in amplitude over material depth (time) to be represented graphically on the A-scan display. Because near field length and beam spread vary according to transducer size and frequency, and materials vary in attenuation and velocity, a DAC curve must be established for each different situation. DAC may be employed in both longitudinal and shear modes of operation, and with either contact or immersion inspection techniques.
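How strongly near field length and beam spread depend on transducer size and frequency can be seen with the standard relations for a circular transducer: wavelength equals velocity divided by frequency, near field length is N = D^2 / (4 * wavelength), and the half-angle to the first null of the beam satisfies sin(theta) = 1.22 * wavelength / D. The sketch below assumes a flat circular element and uses an approximate longitudinal velocity for steel of 5900 m/s. The function names and probe values are hypothetical.

```python
import math


def wavelength_mm(velocity_m_per_s, frequency_mhz):
    """Wavelength in mm from velocity (m/s) and frequency (MHz)."""
    if velocity_m_per_s <= 0 or frequency_mhz <= 0:
        raise ValueError("velocity and frequency must be positive")
    return velocity_m_per_s / (frequency_mhz * 1e6) * 1000.0


def near_field_length_mm(diameter_mm, frequency_mhz, velocity_m_per_s):
    """Near field length N = D^2 / (4 * wavelength) for a circular element."""
    lam = wavelength_mm(velocity_m_per_s, frequency_mhz)
    return diameter_mm ** 2 / (4.0 * lam)


def half_angle_beam_spread_deg(diameter_mm, frequency_mhz, velocity_m_per_s):
    """Half-angle to the first null: sin(theta) = 1.22 * wavelength / D."""
    lam = wavelength_mm(velocity_m_per_s, frequency_mhz)
    sine = 1.22 * lam / diameter_mm
    if sine >= 1.0:
        raise ValueError("element is too small relative to the wavelength")
    return math.degrees(math.asin(sine))


if __name__ == "__main__":
    velocity = 5900.0  # m/s, approximate longitudinal velocity in steel (assumed)
    # Hypothetical probes: (element diameter in mm, frequency in MHz).
    for diameter, frequency in [(10.0, 5.0), (10.0, 2.25), (20.0, 5.0)]:
        n = near_field_length_mm(diameter, frequency, velocity)
        theta = half_angle_beam_spread_deg(diameter, frequency, velocity)
        print(diameter, frequency, round(n, 1), round(theta, 1))
```

Doubling the element diameter at a fixed frequency quadruples the near field length and roughly halves the beam spread, which is one reason a DAC curve recorded with one probe does not transfer to another.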

A DAC curve is constructed from the peak amplitude responses from reflectors of equal area at different distances in the same material. Reference standards that incorporate side-drilled holes (SDH), flat-bottom holes (FBH), or notches located at varying depths are commonly used. A-scan echoes are displayed at their uncompensated height (that is, without electronic compensation) and the peak amplitude of each signal is marked to construct the DAC curve as shown in the figure. It is important to recognize that regardless of the type of reflector used, the size and shape of the reflector must be constant.

The same method is used for constructing DAC curves for angle-beam transducers. In that case, however, both the first and second leg reflections can be used for constructing the DAC curve.
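The construction described above can be sketched as a small calculation: record the peak amplitude of each equal-size reflector at its depth, join the points into a curve, and express an indication's amplitude relative to the curve at the same depth. This is a minimal sketch under stated assumptions. The calibration points are hypothetical, and linear interpolation between points is an assumed simplification, since an instrument may draw a smoother curve.

```python
import math
from bisect import bisect_right


def dac_amplitude(points, depth_mm):
    """DAC reference amplitude at a depth, by linear interpolation.

    points: (depth_mm, peak_amplitude) pairs recorded from reflectors of
    equal size at different depths in the same material.
    """
    pts = sorted(points)
    depths = [d for d, _ in pts]
    if not depths[0] <= depth_mm <= depths[-1]:
        raise ValueError("depth is outside the calibrated DAC range")
    i = bisect_right(depths, depth_mm)
    if i == len(pts):
        return pts[-1][1]  # exactly at the deepest calibration point
    (d0, a0), (d1, a1) = pts[i - 1], pts[i]
    return a0 + (a1 - a0) * (depth_mm - d0) / (d1 - d0)


def db_relative_to_dac(points, depth_mm, amplitude):
    """Indication level relative to the DAC curve at the same depth, in dB."""
    if amplitude <= 0:
        raise ValueError("amplitude must be positive")
    return 20.0 * math.log10(amplitude / dac_amplitude(points, depth_mm))


if __name__ == "__main__":
    # Hypothetical peak amplitudes (percent of full screen height) from
    # side-drilled holes of one diameter at four depths.
    calibration = [(10.0, 80.0), (20.0, 60.0), (40.0, 35.0), (60.0, 20.0)]

    print(dac_amplitude(calibration, 30.0))                         # 47.5
    print(round(db_relative_to_dac(calibration, 30.0, 23.75), 2))   # about -6.02
```

An indication that sits on the curve reads 0 dB regardless of its depth, which is the sense in which the curve acts as a reference level.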
