Very Deep Learning

Lecture 02

Dr. Muhammad Zeshan Afzal, Prof. Didier Stricker

MindGarage, University of Kaiserslautern

afzal.tukl@gmail.com

M. Zeshan Afzal, Very Deep Learning Ch. 2

Recap

M. Zeshan Afzal, Very Deep Learning Ch. 2 3

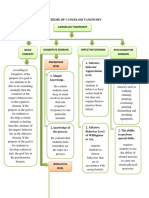

[Figure: course roadmap. Hierarchy: Artificial Intelligence → Machine Learning → Shallow Learning and Deep Learning. Types of learning: Supervised, Self-supervised, Unsupervised, Reinforcement. Supervised learning tasks: Regression (Linear Regression), Classification, Structured Prediction.]

Supervised Learning

Input $x$ → Model $f_w$ → Output $y$

Learning: estimating the parameters $w$ from the training data $\{(x_i, y_i)\}_{i=1}^{N}$

Inference: making a prediction for an unseen input $x$, i.e., $y = f_w(x)$
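
As an illustration of the two phases, here is a minimal sketch assuming a one-dimensional linear model $f_w(x) = w_0 + w_1 x$ fit by ordinary least squares. The helpers `fit` and `predict` are hypothetical names, not from the lecture.

```python
def fit(xs, ys):
    """Learning: estimate w = (w0, w1) from training pairs (x_i, y_i) by least squares."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    w1 = sxy / sxx
    w0 = my - w1 * mx
    return w0, w1


def predict(w, x):
    """Inference: y = f_w(x) for a new input x."""
    w0, w1 = w
    return w0 + w1 * x


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]   # toy data lying exactly on y = 1 + 2x
w = fit(xs, ys)
print(w)                    # (1.0, 2.0)
print(predict(w, 10.0))     # 21.0
```

Learning runs once over the training set; inference only needs the stored parameters `w`.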

Examples of Supervised Learning (Regression)

Input → Model $f_w$ → Output: 31.45

Mapping: input → $\mathbb{R}$ (a real-valued output)

Capacity, Overfitting, Underfitting

◼ Terminology

^ Capacity: the complexity of the functions that can be represented by the model f

^ Underfitting: the model is too simple and does not achieve a low error on the training set

^ Overfitting: the training error is small, but the test error (= generalization error) is large

[Figure panels: capacity too low, almost there, about right, capacity too high]

Capacity, Overfitting, Underfitting

◼ General approach: split the dataset into a training, a validation and a test set

Choose the values of hyperparameters (e.g., the degree of the polynomial, the learning rate of a neural net, ...) using the validation set.

Important: evaluate only once on the test set

◼ It is very (!!) important to use independent test data

^ Typically 50% for training, 20% for validation and 30% for testing

^ Alternatively 60% / 20% / 20%

◼ However, the split might change

^ depending on the amount of data available

◼ With less data, we use cross-validation

[Figure: pie chart "Data split": training 50, validation 20, testing 30]
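
The 50/20/30 split and k-fold cross-validation (one common form of cross-validation) can be sketched as follows, assuming the whole dataset fits in a Python list. `split_dataset` and `k_fold_indices` are hypothetical helpers, not from the lecture.

```python
import random


def split_dataset(data, train_frac=0.5, val_frac=0.2, seed=0):
    """Shuffle the data, then cut it into training, validation and test sets."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_train = round(train_frac * len(items))
    n_val = round(val_frac * len(items))
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]   # with the defaults, the remaining 30% is held out
    return train, val, test


def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs; each of the k folds is the validation set once."""
    folds = [list(range(i, n, k)) for i in range(k)]   # strided folds; shuffle the data first in practice
    for i in range(k):
        train_idx = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train_idx, folds[i]


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))   # 50 20 30

for train_idx, val_idx in k_fold_indices(10, 5):
    print(val_idx)                        # [0, 5], then [1, 6], ...
```

With k folds every sample is used for validation exactly once, and the k validation scores are averaged to pick hyperparameters; the test set stays untouched until the single final evaluation.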

[Figure: course roadmap. Hierarchy: Artificial Intelligence → Machine Learning → Shallow Learning and Deep Learning. Types of learning: Supervised, Self-supervised, Unsupervised, Reinforcement. Supervised learning tasks: Regression (Linear Regression, Ridge Regression), Classification, Structured Prediction.]

Shallow Learning

Ridge Regression

Ridge Regression

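◼ Polynomial curve model: $f_w(x) = \sum_{j=0}^{M} w_j x^j = w^\top \phi(x)$, with $\phi(x) = (1, x, x^2, \dots, x^M)^\top$

◼ Ridge regression: $\hat{w}_R = \arg\min_w \sum_{i=1}^{N} \left( w^\top \phi(x_i) - y_i \right)^2 + \lambda \lVert w \rVert_2^2$

◼ The regularization term, weighted by $\lambda \geq 0$, discourages large parameters

◼ It has a closed-form solution: $\hat{w}_R = (\Phi^\top \Phi + \lambda I)^{-1} \Phi^\top y$, where the $i$-th row of $\Phi$ is $\phi(x_i)^\top$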
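
The closed-form ridge solution for a polynomial model can be sketched in dependency-free Python. This assumes the standard objective $\sum_i (w^\top \phi(x_i) - y_i)^2 + \lambda \lVert w \rVert_2^2$ with $\phi(x) = (1, x, \dots, x^M)$, whose minimizer is $(\Phi^\top \Phi + \lambda I)^{-1} \Phi^\top y$. The helper names and the toy sine data are hypothetical, and the linear system is solved by Gaussian elimination rather than by forming the inverse.

```python
import math


def poly_features(x, M):
    """phi(x) = (1, x, x^2, ..., x^M)."""
    return [x ** j for j in range(M + 1)]


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    aug = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):   # back substitution
        s = sum(aug[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (aug[r][n] - s) / aug[r][r]
    return w


def ridge_fit(xs, ys, M, lam):
    """Closed-form ridge regression: solve (Phi^T Phi + lam * I) w = Phi^T y."""
    Phi = [poly_features(x, M) for x in xs]
    d = M + 1
    A = [[sum(row[i] * row[j] for row in Phi) + (lam if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(row[i] * y for row, y in zip(Phi, ys)) for i in range(d)]
    return solve(A, b)


def norm2(w):
    return math.sqrt(sum(wi * wi for wi in w))


# Toy data: 10 points of sin(2*pi*x) on [0, 1], polynomial degree M = 3.
xs = [i / 9 for i in range(10)]
ys = [math.sin(2 * math.pi * x) for x in xs]
for lam in (1e-6, 1e-2, 1.0):
    w = ridge_fit(xs, ys, M=3, lam=lam)
    print(lam, round(norm2(w), 3))   # ||w|| shrinks as lambda grows
```

Setting `lam=0.0` recovers plain least squares on the polynomial features; any positive `lam` also makes the system better conditioned.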

Ridge Regression

◼ Polynomial degree M = 15

◼ Leftmost: linear regression without regularization ($\lambda = 0$)

◼ Others, from left to right: weak to strong regularization

Estimators, Bias and Variance

Estimators, Bias and Variance

◼ Point Estimator

^ A point estimator is a function $g$ that maps a dataset $\mathcal{X}$ to model parameters: $\hat{w} = g(\mathcal{X})$, where $g$ is the estimator and $\hat{w}$ the estimate

^ Example: the estimator of ridge regression

^ The hat on w ($\hat{w}$) signifies that it is an estimate

^ A good estimator returns an estimate close to the true parameters

^ The data is drawn from a random process: $(x_i, y_i) \sim p_{data}(\cdot)$

^ Thus any function of the data, including the estimate $\hat{w}$, is a random variable
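
To see that an estimate is itself a random variable, the sketch below draws many datasets from the same process and applies the same estimator to each. For brevity it swaps in a simpler estimator than ridge regression: the sample mean of Gaussian data $x_i \sim \mathcal{N}(\mu, 1)$. The names, seed and sizes are illustrative assumptions.

```python
import random
import statistics


def sample_mean_estimator(dataset):
    """Point estimator g: maps a whole dataset to one estimate of mu."""
    return sum(dataset) / len(dataset)


rng = random.Random(0)
mu_true = 5.0
# 1000 independent datasets of size 20, all drawn from N(mu_true, 1).
estimates = [sample_mean_estimator([rng.gauss(mu_true, 1.0) for _ in range(20)])
             for _ in range(1000)]
print(min(estimates), max(estimates))   # the estimate differs from dataset to dataset
print(statistics.mean(estimates))       # close to mu_true
print(statistics.pstdev(estimates))     # close to 1 / sqrt(20), about 0.224
```

The spread of `estimates` across datasets is exactly what the variance of an estimator measures.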

Estimators, Bias and Variance

Estimators, Bias, Variance

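► Bias: $\mathrm{Bias}(\hat{w}) = \mathbb{E}(\hat{w}) - w$, where the expectation is taken over datasets drawn from $p_{data}$

► $\hat{w}$ is unbiased ⇔ $\mathrm{Bias}(\hat{w}) = 0$

► A good estimator has little bias

► Variance: $\mathrm{Var}(\hat{w}) = \mathbb{E}\big[(\hat{w} - \mathbb{E}(\hat{w}))^2\big]$

► A good estimator has low variance

◼ Bias-Variance Dilemma:

► Statistical learning theory tells us that we generally cannot have both ⇒ there is a trade-off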
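
The trade-off is often made precise through the mean squared error of an estimator. As an added worked step (not on the original slide), for a scalar parameter $w$ with $\mathrm{Bias}(\hat{w}) = \mathbb{E}(\hat{w}) - w$ and $\mathrm{Var}(\hat{w}) = \mathbb{E}[(\hat{w} - \mathbb{E}(\hat{w}))^2]$:

```latex
\begin{aligned}
\mathrm{MSE}(\hat{w}) &= \mathbb{E}\big[(\hat{w} - w)^2\big] \\
  &= \mathbb{E}\big[(\hat{w} - \mathbb{E}(\hat{w}) + \mathbb{E}(\hat{w}) - w)^2\big] \\
  &= \mathbb{E}\big[(\hat{w} - \mathbb{E}(\hat{w}))^2\big]
     + 2\,\big(\mathbb{E}(\hat{w}) - w\big)\,\underbrace{\mathbb{E}\big[\hat{w} - \mathbb{E}(\hat{w})\big]}_{=\,0}
     + \big(\mathbb{E}(\hat{w}) - w\big)^2 \\
  &= \mathrm{Var}(\hat{w}) + \mathrm{Bias}(\hat{w})^2
\end{aligned}
```

Lowering one term typically raises the other; for example, stronger regularization (larger λ) usually reduces the variance at the price of a larger bias.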

Bias, Variance

◼ Number of datasets = 100

◼ True model = green line

◼ Variance refers to an algorithm's sensitivity to the specific set of training data

◼ Bias occurs when the model has limited flexibility to learn the true signal

[Figure panels: λ = 0.0000001, λ = 5]
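
The experiment on this slide can be mimicked with a small simulation. It keeps the 100 datasets and the two values λ = 0.0000001 and λ = 5, but assumes a much simpler hypothetical setup than the figure: a one-parameter model $y = w x$ (true $w = 2$), 20 fixed inputs, Gaussian noise, and the closed-form 1D ridge estimate $\hat{w} = \sum_i x_i y_i / (\sum_i x_i^2 + \lambda)$.

```python
import random


def ridge_1d(xs, ys, lam):
    """Ridge estimate for the model y = w * x (no intercept): sum(xy) / (sum(x^2) + lam)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def bias_variance(lam, w_true=2.0, n_datasets=100, noise=1.0, seed=0):
    """Draw n_datasets training sets, estimate w on each, return (bias, variance, mse)."""
    rng = random.Random(seed)
    xs = [i / 10 for i in range(1, 21)]
    estimates = []
    for _ in range(n_datasets):
        ys = [w_true * x + rng.gauss(0.0, noise) for x in xs]
        estimates.append(ridge_1d(xs, ys, lam))
    mean = sum(estimates) / n_datasets
    bias = mean - w_true
    var = sum((e - mean) ** 2 for e in estimates) / n_datasets
    mse = sum((e - w_true) ** 2 for e in estimates) / n_datasets
    return bias, var, mse


for lam in (1e-7, 5.0):
    bias, var, mse = bias_variance(lam)
    print(f"lambda={lam}: bias={bias:.3f}, variance={var:.4f}, mse={mse:.4f}")
```

Both settings see exactly the same 100 noisy datasets (same seed), so the large λ shrinks every estimate by the same factor: the variance drops while the bias grows, and in both cases the empirical MSE equals bias² + variance.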

Estimators, Bias, Variance

The bias-variance trade-off:

Terms

◼ Last lecture

^ What is AI

^ Types of AI

^ What is machine learning

^ What is deep learning

^ What are the types of learning

^ Linear Regression, Polynomial Fitting

^ Capacity, Overfitting, Underfitting

◼ This Lecture

^ Ridge regression

^ Estimators, Bias, Variance

Maximum Likelihood Estimation

Maximum Likelihood Estimation

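◼ We now reinterpret our results by taking a probabilistic point of view

◼ Let $\mathcal{X} = \{(x_i, y_i)\}_{i=1}^{N}$ be a set of samples drawn i.i.d. from $p_{data}(x, y)$

◼ Let the model $p_{model}(y \mid x, w)$ be a parametric family of probability distributions

◼ The conditional maximum likelihood estimator for w is given by

$\hat{w}_{ML} = \arg\max_w \prod_{i=1}^{N} p_{model}(y_i \mid x_i, w) = \arg\max_w \sum_{i=1}^{N} \log p_{model}(y_i \mid x_i, w)$

where the product follows from the i.i.d. assumption, and taking the logarithm does not change the maximizer because the logarithm is monotonically increasing.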

Maximum Likelihood Estimation

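◼ Example

^ Assuming $p_{model}(y \mid x, w) = \mathcal{N}(y \mid f_w(x), \sigma^2)$, we obtain

$$
\begin{aligned}
\hat{w}_{ML} &= \arg\max_w \sum_{i=1}^{N} \log \left[ \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{(f_w(x_i) - y_i)^2}{2\sigma^2} \right) \right] \\
&= \arg\max_w \left[ -\frac{N}{2} \log(2\pi\sigma^2) - \frac{1}{2\sigma^2} \sum_{i=1}^{N} (f_w(x_i) - y_i)^2 \right] \\
&= \arg\min_w \sum_{i=1}^{N} (f_w(x_i) - y_i)^2
\end{aligned}
$$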

Maximum Likelihood Estimation

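◼ We see that choosing $p_{model}$ as a Gaussian distribution causes maximum likelihood to yield exactly the same least-squares estimator that has been derived before

◼ Variations:

^ If we choose $p_{model}$ as a Laplace distribution, $p_{model}(y \mid x, w) = \frac{1}{2b} \exp\left( -\frac{|y - f_w(x)|}{b} \right)$ with scale $b > 0$, we obtain the $\ell_1$ norm and the expression becomes

$\hat{w}_{ML} = \arg\min_w \sum_{i=1}^{N} |f_w(x_i) - y_i|$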
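
The practical difference between the two choices shows up in a minimal sketch with a hypothetical constant model $f_w(x) = w$ (so only the targets matter), fit by a simple grid search. Minimizing the Gaussian negative log-likelihood gives the mean (least squares), while the Laplace negative log-likelihood gives the median ($\ell_1$), which is far less affected by the outlier 100.0. The data and grid are illustrative assumptions.

```python
import math


def gaussian_nll(ys, preds, sigma=1.0):
    """-sum_i log N(y_i | f_w(x_i), sigma^2)."""
    return sum(0.5 * math.log(2 * math.pi * sigma ** 2)
               + (y - p) ** 2 / (2 * sigma ** 2) for y, p in zip(ys, preds))


def laplace_nll(ys, preds, b=1.0):
    """-sum_i log Laplace(y_i | f_w(x_i), b) = sum_i [log(2b) + |y_i - f_w(x_i)| / b]."""
    return sum(math.log(2 * b) + abs(y - p) / b for y, p in zip(ys, preds))


# Hypothetical constant model f_w(x) = w, fit by grid search over w.
ys = [1.0, 2.0, 3.0, 4.0, 100.0]
grid = [i * 0.5 for i in range(61)]   # w in {0.0, 0.5, ..., 30.0}
w_gauss = min(grid, key=lambda w: gaussian_nll(ys, [w] * len(ys)))
w_laplace = min(grid, key=lambda w: laplace_nll(ys, [w] * len(ys)))
print(w_gauss, w_laplace)             # 22.0 3.0 (mean vs. median)
```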

Maximum Likelihood Estimation

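◼ Consistency: as the number of training samples approaches infinity ($N \to \infty$), the maximum likelihood estimate converges to the true parameters (under mild conditions, e.g., the true distribution lies within the model family)

◼ Efficiency: the ML estimate converges most quickly as N increases; for large N, no consistent estimator has a lower mean squared error

◼ These theoretical considerations make ML an appealing choice of estimator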
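
Consistency can be observed empirically. A minimal sketch, assuming Bernoulli data, whose ML estimate is the sample mean (maximizing $\sum_i [y_i \log p + (1 - y_i) \log(1 - p)]$ over $p$ gives $\hat{p} = \frac{1}{N}\sum_i y_i$). The seed, true parameter and sample sizes are arbitrary choices.

```python
import random


def bernoulli_mle(samples):
    """ML estimate of a Bernoulli parameter p: the fraction of ones in the sample."""
    return sum(samples) / len(samples)


rng = random.Random(0)
p_true = 0.3
for n in (10, 1_000, 100_000):
    samples = [1 if rng.random() < p_true else 0 for _ in range(n)]
    p_hat = bernoulli_mle(samples)
    print(n, p_hat, abs(p_hat - p_true))   # the error tends to shrink as n grows
```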

Examples of Supervised Learning (Classification)

Input → Model $f_w$ → Output: cat

Mapping: input → $\{0, 1\}$

[Figure: course roadmap. Hierarchy: Artificial Intelligence → Machine Learning → Shallow Learning and Deep Learning. Types of learning: Supervised, Self-supervised, Unsupervised, Reinforcement. Supervised learning tasks: Regression (Linear Regression, Ridge Regression), Classification (Logistic Regression), Structured Prediction.]

Logistic Regression

Logistic Regression

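◼ We have already seen the maximum likelihood estimator

◼ We now perform binary classification, i.e., $y_i \in \{0, 1\}$

◼ How should we choose $p_{model}(y \mid x, w)$ in this case?

◼ Answer: a Bernoulli distribution

$p_{model}(y \mid x, w) = \hat{y}^{\,y} (1 - \hat{y})^{1 - y}$, where $\hat{y} = f_w(x)$ is predicted by the model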

Logistic Regression

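◼ In summary, we have assumed the Bernoulli distribution

$p_{model}(y \mid x, w) = \hat{y}^{\,y} (1 - \hat{y})^{1 - y}$

where $\hat{y} = f_w(x)$

◼ The question is how to choose $f_w(x)$

◼ We are working with a discrete distribution, i.e., $\hat{y} \in [0, 1]$ must be a valid probability

◼ We can choose $f_w(x) = \sigma(w^\top x)$, where $\sigma$ is the sigmoid function

The sigmoid is given as follows: $\sigma(x) = \frac{1}{1 + e^{-x}}$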
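
Both ingredients can be written down directly. A minimal sketch, assuming each input is augmented with a leading constant 1 so that $w^\top x$ includes a bias term; the helper names are hypothetical. The sigmoid is evaluated in two branches so that `math.exp` is never called with a large positive argument, which would raise `OverflowError`.

```python
import math


def sigmoid(z):
    """sigma(z) = 1 / (1 + exp(-z)), split into two branches to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def bernoulli_pmf(y, y_hat):
    """p(y | x, w) = y_hat^y * (1 - y_hat)^(1 - y) for y in {0, 1}."""
    return y_hat ** y * (1.0 - y_hat) ** (1 - y)


def predict_proba(w, x):
    """y_hat = f_w(x) = sigma(w^T x); x already contains a leading 1 for the bias."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))


y_hat = predict_proba([0.5, -1.0], [1.0, 2.0])   # sigma(0.5 - 2.0) = sigma(-1.5)
print(round(y_hat, 4))                            # 0.1824
print(bernoulli_pmf(1, y_hat), bernoulli_pmf(0, y_hat))
```

The two printed probabilities are $\hat{y}$ and $1 - \hat{y}$, so they always sum to one.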

Logistic Regression

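◼ Putting it together:

$\hat{w}_{ML} = \arg\max_w \sum_{i=1}^{N} \log\left[ \hat{y}_i^{\,y_i} (1 - \hat{y}_i)^{1 - y_i} \right] = \arg\min_w \sum_{i=1}^{N} \left[ -y_i \log \hat{y}_i - (1 - y_i) \log(1 - \hat{y}_i) \right]$, with $\hat{y}_i = \sigma(w^\top x_i)$

◼ In machine learning, we use the more general term ‘loss function’ rather than ‘error function’

◼ We minimize the dissimilarity between the empirical data distribution (defined by the training set) and the model distribution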

Logistic Regression

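◼ Binary Cross Entropy Loss: $L(\hat{y}_i, y_i) = -y_i \log \hat{y}_i - (1 - y_i) \log(1 - \hat{y}_i)$

◼ For $y_i = 1$ the loss L is minimized if $\hat{y}_i = 1$

◼ For $y_i = 0$ the loss L is minimized if $\hat{y}_i = 0$

◼ Thus, L is minimal if $\hat{y}_i = y_i$

◼ Can be extended to > 2 classes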
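
A direct transcription of the loss, assuming predictions are clipped away from 0 and 1. The clipping is an added numerical safeguard (since $\log 0$ is undefined), and the constant `eps` is an arbitrary choice.

```python
import math


def bce(y_hat, y, eps=1e-12):
    """Binary cross-entropy -y log(y_hat) - (1 - y) log(1 - y_hat).

    y_hat is clipped to [eps, 1 - eps] so that log(0) is never evaluated.
    """
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    return -y * math.log(y_hat) - (1 - y) * math.log(1.0 - y_hat)


for y in (1, 0):
    print(y, [round(bce(p, y), 3) for p in (0.01, 0.5, 0.99)])
# 1 [4.605, 0.693, 0.01]
# 0 [0.01, 0.693, 4.605]
```

The rows show the behavior described above: for $y_i = 1$ the loss falls toward 0 as $\hat{y}_i \to 1$, and for $y_i = 0$ it falls as $\hat{y}_i \to 0$, while confident wrong predictions are penalized heavily.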

Logistic Regression

◼ A simple 1D example

◼ Dataset $\mathcal{X}$ with positive ($y_i = 1$) and negative ($y_i = 0$) samples

Source: https://towardsdatascience.com/understanding-binary-cross-entropy-log-loss-a-visual-explanation-a3ac6025181a

Logistic Regression

◼ A simple 1D example

► Logistic regressor $f_w(x) = \sigma(w_0 + w_1 x)$ fit to dataset $\mathcal{X}$

Source: https://towardsdatascience.com/understanding-binary-cross-entropy-log-loss-a-visual-explanation-a3ac6025181a

Logistic Regression

◼ A simple 1D example

◼ Probabilities of classifier $f_w(x_i)$ for positive samples ($y_i = 1$)

Source: https://towardsdatascience.com/understanding-binary-cross-entropy-log-loss-a-visual-explanation-a3ac6025181a

Logistic Regression

◼ A simple 1D example

► Probabilities of classifier $f_w(x_i)$ for negative samples ($y_i = 0$)

Source: https://towardsdatascience.com/understanding-binary-cross-entropy-log-loss-a-visual-explanation-a3ac6025181a

Logistic Regression

◼ A simple 1D example

◼ Putting both together

Source: https://towardsdatascience.com/understanding-binary-cross-entropy-log-loss-a-visual-explanation-a3ac6025181a

Logistic Regression

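◼ Maximum Likelihood for Logistic Regression:

$\hat{w}_{ML} = \arg\min_w L(w)$, $\quad L(w) = \sum_{i=1}^{N} \left[ -y_i \log \hat{y}_i - (1 - y_i) \log(1 - \hat{y}_i) \right]$

with $\hat{y}_i = \sigma(w^\top x_i)$ and $\sigma(x) = \frac{1}{1 + e^{-x}}$

◼ How do we find the minimizer $\hat{w}_{ML}$?

◼ In contrast to linear regression, the loss is not quadratic in w, so there is no closed-form solution

◼ We must apply iterative gradient-based optimization. The gradient is

$\nabla_w L(w) = \sum_{i=1}^{N} (\hat{y}_i - y_i)\, x_i$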
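
The gradient formula can be checked numerically. A minimal sketch, assuming a small hypothetical dataset with a constant 1 prepended to each input (bias term); it compares the analytic gradient $\sum_i (\hat{y}_i - y_i)\, x_i$ with central finite differences of the loss $L(w)$.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, X, ys):
    """L(w) = sum_i -y_i log(y_hat_i) - (1 - y_i) log(1 - y_hat_i), y_hat_i = sigmoid(w^T x_i)."""
    total = 0.0
    for x, y in zip(X, ys):
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)))
        total += -y * math.log(p) - (1 - y) * math.log(1 - p)
    return total


def grad(w, X, ys):
    """Analytic gradient: sum_i (y_hat_i - y_i) x_i."""
    g = [0.0] * len(w)
    for x, y in zip(X, ys):
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)))
        for j, xj in enumerate(x):
            g[j] += (p - y) * xj
    return g


def numerical_grad(w, X, ys, h=1e-6):
    """Central finite differences of L(w), used only as a check."""
    g = []
    for j in range(len(w)):
        w_plus, w_minus = list(w), list(w)
        w_plus[j] += h
        w_minus[j] -= h
        g.append((loss(w_plus, X, ys) - loss(w_minus, X, ys)) / (2 * h))
    return g


# Hypothetical toy data; the first feature is the constant 1 (bias term).
X = [[1.0, -2.0], [1.0, -0.5], [1.0, 0.5], [1.0, 2.0]]
ys = [0, 1, 0, 1]
w = [0.1, -0.3]
print(grad(w, X, ys))
print(numerical_grad(w, X, ys))   # agrees with grad() up to small finite-difference error
```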

Logistic Regression

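◼ Gradient Descent

^ Pick the step size $\eta$ and the tolerance $\epsilon$

^ Initialize $w^0$

^ Repeat $w^{t+1} = w^t - \eta \nabla_w L(w^t)$ until $\lVert \nabla_w L(w^t) \rVert < \epsilon$

◼ Variants

^ Line search

^ Conjugate gradients

^ L-BFGS

Source: https://en.wikipedia.org/wiki/Conjugate_gradient_method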
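
Plain gradient descent applied to the 1D logistic regression $f_w(x) = \sigma(w_0 + w_1 x)$ fits in a few lines. This is a sketch with an arbitrary step size and tolerance and a hypothetical toy dataset. The labels overlap (the data is not linearly separable); for separable data no finite minimizer exists and the weights keep growing.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gradient(w, X, ys):
    """grad L(w) = sum_i (sigmoid(w^T x_i) - y_i) x_i."""
    g = [0.0] * len(w)
    for x, y in zip(X, ys):
        err = sigmoid(sum(wj * xj for wj, xj in zip(w, x))) - y
        for j, xj in enumerate(x):
            g[j] += err * xj
    return g


def gradient_descent(X, ys, eta=0.1, eps=1e-6, max_iter=10_000):
    """w <- w - eta * grad until ||grad|| < eps; returns (w, iterations used)."""
    w = [0.0] * len(X[0])          # initialize w^0 = 0
    for t in range(max_iter):
        g = gradient(w, X, ys)
        if math.sqrt(sum(gj * gj for gj in g)) < eps:
            return w, t
        w = [wj - eta * gj for wj, gj in zip(w, g)]
    return w, max_iter


xs = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 1, 0, 1, 1, 1, 1]
X = [[1.0, x] for x in xs]         # f_w(x) = sigmoid(w0 + w1 * x)
w, iters = gradient_descent(X, ys)
print(w, iters)
```

At convergence the bias component of the gradient is numerically zero, which means the predicted probabilities sum to the number of positive labels, $\sum_i \hat{y}_i = \sum_i y_i$.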

Logistic Regression

◼ Examples in 2D

◼ Logistic Regression model: $f_w(x) = \sigma(w_0 + w_1 x_1 + w_2 x_2)$

Logistic Regression

◼ Maximizing the Log-Likelihood is equivalent to

minimizing the Cross Entropy or KL divergence
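
The equivalence rests on the identity $H(p, q) = H(p) + D_{KL}(p \,\|\, q)$. The entropy $H(p)$ of the empirical data distribution does not depend on $w$, so minimizing the cross-entropy and minimizing the KL divergence select the same model, and the average negative log-likelihood equals the cross-entropy between the empirical distribution and the model. The sketch below checks the identity for a hypothetical empirical label distribution over $\{0, 1\}$.

```python
import math


def entropy(p):
    """H(p) = -sum_k p_k log p_k (terms with p_k = 0 contribute 0)."""
    return -sum(pk * math.log(pk) for pk in p if pk > 0)


def cross_entropy(p, q):
    """H(p, q) = -sum_k p_k log q_k."""
    return -sum(pk * math.log(qk) for pk, qk in zip(p, q) if pk > 0)


def kl_divergence(p, q):
    """D_KL(p || q) = sum_k p_k log(p_k / q_k)."""
    return sum(pk * math.log(pk / qk) for pk, qk in zip(p, q) if pk > 0)


p_data = [0.7, 0.3]                 # hypothetical empirical P(y = 0), P(y = 1)
for q1 in (0.1, 0.3, 0.5):
    q_model = [1 - q1, q1]          # model probabilities for y = 0 and y = 1
    print(q1, round(cross_entropy(p_data, q_model), 4),
          round(entropy(p_data) + kl_divergence(p_data, q_model), 4))
```

Both columns agree for every candidate model, and both are smallest when the model matches the empirical distribution (`q1 = 0.3`), where the KL divergence is zero.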

Summary (Second Part)

Maximum Likelihood Estimation

• Maximum likelihood estimation is equivalent to least-squares estimation when a Gaussian distribution is assumed

Summary (Second Part)

Maximum Likelihood Estimation

• Logistic Regression

• Maximum Likelihood Estimation of Bernoulli

• Example of Binary Classification using Logistic

Regression

• Optimization

• Gradient Descent

• Relation to Information theory

• Maximizing the Log-Likelihood is equivalent to

minimizing the Cross Entropy or KL divergence

Computational Graphs

Thanks a lot for your Attention
