Site Update for Oakforest-PACS

at JCAHPC

Taisuke Boku

Vice Director, JCAHPC

University of Tsukuba

IXPUG ME 2018 (Site Update), 2018/04/24

Center for Computational Sciences, Univ. of Tsukuba

Towards Exascale Computing

Tier-1 and tier-2 supercomputers form HPCI and move forward to Exascale computing like two wheels.

[Figure: performance roadmap, 2008 to 2020, vertical axis in PF (1, 10, 100, 1000). Systems shown: T2K (U. of Tsukuba, U. of Tokyo, Kyoto U.); TSUBAME2.0 (Tokyo Tech.); OFP (JCAHPC: U. Tsukuba and U. Tokyo); Post K Computer (AICS -> Riken R-CCS); future Exascale.]


Deployment plan of 9 supercomputing centers (Feb. 2017)

Power consumption indicates maximum of power supply (includes cooling facility). Fiscal years covered: 2014 to 2025.

• Hokkaido: HITACHI SR16000/M1 (172 TF, 22 TB); Cloud System BS2000 (44 TF, 14 TB); Data Science Cloud / Storage HA8000 / WOS7000 (10 TF, 1.96 PB); 3.2 PF (UCC + CFL/M), 0.96 MW; 0.3 PF (Cloud), 0.36 MW; 30 PF (UCC + CFL-M), 2 MW
• Tohoku: NEC SX-9 and others (60 TF); SX-ACE (707 TF, 160 TB, 655 TB/s); LX406e (31 TF), Storage (4 PB), 3D Vis, 2 MW; ~30 PF, ~30 PB/s Mem BW (CFL-D/CFL-M), ~3 MW
• Tsukuba: HA-PACS (1166 TF); COMA (PACS-IX) (1001 TF); PACS-X 10 PF (TPF), 2 MW; Oakforest-PACS 25 PF (UCC + TPF), 4.2 MW (shared with Tokyo); 100+ PF (UCC + TPF), 4.5 MW
• Tokyo: Fujitsu FX10 (1 PFlops, 150 TiB, 408 TB/s); Hitachi SR16K/M1 (54.9 TF, 10.9 TiB, 28.7 TB/s); Reedbush 1.8~1.9 PF, 0.7 MW; 50+ PF (FAC), 3.5 MW; 200+ PF (FAC), 6.5 MW
• Tokyo Tech.: TSUBAME 2.5 (5.7 PF, 110+ TB, 1160 TB/s), 1.4 MW; TSUBAME 2.5 (3~4 PF, extended); TSUBAME 3.0 12 PF, UCC/TPF, 2.0 MW; TSUBAME 4.0 (100+ PF, >10 PB/s, ~2.0 MW)
• Nagoya: FX10 (90 TF); CX400 (470 TF); Fujitsu FX100 (2.9 PF, 81 TiB); Fujitsu CX400 (774 TF, 71 TiB); SGI UV2000 (24 TF, 20 TiB), 2 MW in total; 50+ PF (FAC/UCC + CFL-M), up to 4 MW; 100+ PF (FAC/UCC + CFL-M), up to 4 MW
• Kyoto: Cray XE6 + GB8K + XC30 (983 TF); Cray XC30 (584 TF); 5 PF (FAC/TPF), 1.5 MW; 50-100+ PF (FAC/TPF + UCC), 1.8-2.4 MW
• Osaka: NEC SX-ACE (423 TF); NEC Express5800 (22.4 TF); 0.7-1 PF (UCC); 3.2 PB/s, 5-10 Pflop/s, 1.0-1.5 MW (CFL-M); 25.6 PB/s, 50-100 Pflop/s, 1.5-2.0 MW
• Kyushu: HA8000 (712 TF, 242 TB); SR16000 (8.2 TF, 6 TB); FX10 (272.4 TF, 36 TB); CX400 (966.2 TF, 183 TB), 2.0 MW; FX10 (90.8 TFLOPS); 15-20 PF (UCC/TPF), 2.6 MW; 100-150 PF (FAC/TPF + UCC/TPF), 3 MW
• K Computer (10 PF, 13 MW); ????; Post-K Computer (??, ??)


JCAHPC

• Joint Center for Advanced High Performance Computing (http://jcahpc.jp)
• Very tight collaboration between two universities for “post-T2K”
• For main supercomputer resources, a uniform specification for a single shared system
• Each university is financially responsible for introducing the machine and for its operation
  -> unified procurement toward a single system with the largest scale in Japan
• To manage everything smoothly, a joint organization was established
  -> JCAHPC


Machine location: Kashiwa Campus of U. Tokyo

[Map: U. Tsukuba, Kashiwa Campus of U. Tokyo, and Hongo Campus of U. Tokyo]


Oakforest-PACS (OFP)

“Oakforest” follows the U. Tokyo naming convention and “PACS” the U. Tsukuba convention ⇒ Don’t call it just “Oakforest”! “OFP” is much better.

• 25 PFLOPS peak
• 8208 KNL CPUs
• FBB Fat-Tree by OmniPath
• HPL 13.55 PFLOPS: #1 in Japan, TOP500 #6➙#7
• HPCG #3➙#5
• Green500 #6➙#21
• Full operation started Dec. 2016
• Official Program started in April 2017


Computation node & chassis

[Photos: water cooling wheel & pipe; chassis with 8 nodes, 2U size]

Computation node (Fujitsu next generation PRIMERGY) with single chip Intel Xeon Phi (Knights Landing, 3+ TFLOPS) and Intel Omni-Path Architecture card (100 Gbps)


Water cooling pipes and rear panel radiator

[Photos: direct water cooling pipe for CPU; rear-panel indirect water cooling for others]


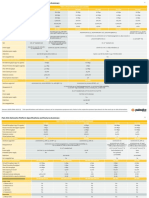

Specification of Oakforest-PACS

| Category | Item | Specification |
|---|---|---|
| System | Total peak performance | 25 PFLOPS |
| System | Total number of compute nodes | 8,208 |
| Compute node | Product | Fujitsu Next-generation PRIMERGY server for HPC (under development) |
| Compute node | Processor | Intel® Xeon Phi™ (Knights Landing), Xeon Phi 7250 (1.4 GHz TDP) with 68 cores |
| Compute node | Memory (high BW) | 16 GB, > 400 GB/sec (MCDRAM, effective rate) |
| Compute node | Memory (low BW) | 96 GB, 115.2 GB/sec (DDR4-2400 x 6ch, peak rate) |
| Interconnect | Product | Intel® Omni-Path Architecture |
| Interconnect | Link speed | 100 Gbps |
| Interconnect | Topology | Fat-tree with full-bisection bandwidth |
| Login node | Product | Fujitsu PRIMERGY RX2530 M2 server |
| Login node | # of servers | 20 |
| Login node | Processor | Intel Xeon E5-2690v4 (2.6 GHz 14 core x 2 socket) |
| Login node | Memory | 256 GB, 153 GB/sec (DDR4-2400 x 4ch x 2 socket) |
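The headline figures in this specification can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes 32 double-precision FLOPs per core per cycle for Knights Landing (two AVX-512 units, 8 doubles each, fused multiply-add) and 8 bytes per DDR4 channel transfer; the function names are hypothetical.

```python
# Sanity check of the quoted peak numbers (illustrative sketch).
# Assumption: a KNL core retires 2 AVX-512 FMAs per cycle,
# i.e. 2 units x 8 doubles x 2 FLOPs (multiply + add) = 32 FLOPs/cycle.
FLOPS_PER_CYCLE = 2 * 8 * 2


def node_peak_gflops(cores: int, clock_ghz: float) -> float:
    """Peak double-precision GFLOPS of one node."""
    return cores * clock_ghz * FLOPS_PER_CYCLE


def ddr_peak_gbs(mega_transfers: int, channels: int, sockets: int = 1) -> float:
    """Peak DDR bandwidth in GB/s; each channel is 64 bits (8 bytes) wide."""
    return mega_transfers * 8 * channels * sockets / 1000.0


node_gflops = node_peak_gflops(cores=68, clock_ghz=1.4)
system_pflops = node_gflops * 8208 / 1e6
compute_ddr_gbs = ddr_peak_gbs(2400, channels=6)
login_ddr_gbs = ddr_peak_gbs(2400, channels=4, sockets=2)

print(f"node peak    : {node_gflops:.1f} GFLOPS")
print(f"system peak  : {system_pflops:.3f} PFLOPS")
print(f"compute DDR4 : {compute_ddr_gbs:.1f} GB/s")
print(f"login DDR4   : {login_ddr_gbs:.1f} GB/s")
```

Under these assumptions a node comes out slightly above 3 TFLOPS and the full system at about 25.0 PFLOPS, in line with the "3+ TFLOPS" and "25 PFLOPS" figures quoted on the slides.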


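Specification of Oakforest-PACS (I/O)

| Category | Item | Specification |
|---|---|---|
| Parallel File System | Type | Lustre File System |
| Parallel File System | Total capacity | 26.2 PB |
| Parallel File System, metadata | Product | DataDirect Networks MDS server + SFA7700X |
| Parallel File System, metadata | # of MDS | 4 servers x 3 set |
| Parallel File System, metadata | MDT | 7.7 TB (SAS SSD) x 3 set |
| Parallel File System, object storage | Product | DataDirect Networks SFA14KE |
| Parallel File System, object storage | # of OSS (Nodes) | 10 (20) |
| Parallel File System, object storage | Aggregate BW | ~500 GB/sec |
| Fast File Cache System | Type | Burst Buffer, Infinite Memory Engine (by DDN) |
| Fast File Cache System | Total capacity | 940 TB (NVMe SSD, including parity data by erasure coding) |
| Fast File Cache System | Product | DataDirect Networks IME14K |
| Fast File Cache System | # of servers (Nodes) | 25 (50) |
| Fast File Cache System | Aggregate BW | ~1,560 GB/sec |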
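To get a feel for what these bandwidths mean, the following back-of-the-envelope sketch estimates how long a full-memory dump of all compute nodes would take. It is a rough illustration, not a measurement: it assumes decimal units, that every node writes its entire MCDRAM and DDR4 contents, and that the quoted aggregate bandwidth is fully achieved. The helper name `dump_seconds` is hypothetical.

```python
# Rough full-system checkpoint estimate (illustrative assumptions only).
NODES = 8208
MCDRAM_GB = 16        # high-bandwidth memory per node
DDR4_GB = 96          # DDR4 memory per node
IME_BW_GBS = 1560     # burst buffer aggregate bandwidth (approximate)
LUSTRE_BW_GBS = 500   # parallel file system aggregate bandwidth (approximate)


def dump_seconds(total_gb: float, bandwidth_gbs: float) -> float:
    """Time to write total_gb at a sustained bandwidth_gbs."""
    return total_gb / bandwidth_gbs


total_memory_gb = NODES * (MCDRAM_GB + DDR4_GB)
total_memory_tb = total_memory_gb / 1000
ime_seconds = dump_seconds(total_memory_gb, IME_BW_GBS)
lustre_seconds = dump_seconds(total_memory_gb, LUSTRE_BW_GBS)

print(f"total node memory : {total_memory_tb:.1f} TB")
print(f"dump to IME       : {ime_seconds / 60:.1f} min")
print(f"dump to Lustre    : {lustre_seconds / 60:.1f} min")
```

The total node memory (about 919 TB) is of the same order as the 940 TB burst buffer capacity, although the latter figure includes parity data, so the usable capacity is lower.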


Full bisection bandwidth Fat-tree by Intel® Omni-Path Architecture

[Figure (source: Intel): 12 Director Switches with 768 ports each at the top; 362 Edge Switches with 48 ports each below, each with Uplink: 24 and Downlink: 24; links labeled 2 between an Edge Switch and each Director Switch; compute nodes attached in groups of 24 (1 ... 24, 25 ... 48, 49 ... 72, ...)]

| Endpoint | Count |
|---|---|
| Compute Nodes | 8208 |
| Login Nodes | 20 |
| Parallel FS | 64 |
| IME | 300 |
| Mgmt, etc. | 8 |
| Total | 8600 |

Firstly, to reduce switches & cables, we considered:

• All the nodes in each subgroup are connected with an FBB Fat-tree
• Subgroups are connected with each other with >20% of FBB

But the HW quantity is not so different from globally FBB, and globally FBB is preferred for flexible job management.
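The switch and endpoint counts above can be checked for consistency with a short port-counting sketch. The numbers are taken from the slide; the variable names are illustrative, and the full-bisection test used here (uplinks at least equal to downlinks on every edge switch) is a simplified criterion for a two-level fat-tree.

```python
import math

# Endpoint counts as listed on the slide.
endpoints = {
    "Compute Nodes": 8208,
    "Login Nodes": 20,
    "Parallel FS": 64,
    "IME": 300,
    "Mgmt, etc.": 8,
}

EDGE_SWITCHES = 362
EDGE_DOWNLINKS = 24       # ports facing endpoints, per edge switch
EDGE_UPLINKS = 24         # ports facing director switches, per edge switch
DIRECTOR_SWITCHES = 12
DIRECTOR_PORTS = 768

total_endpoints = sum(endpoints.values())
downlink_ports = EDGE_SWITCHES * EDGE_DOWNLINKS
uplink_ports = EDGE_SWITCHES * EDGE_UPLINKS
director_ports = DIRECTOR_SWITCHES * DIRECTOR_PORTS

# Simplified full-bisection criterion: no oversubscription at the edge.
is_full_bisection = EDGE_UPLINKS >= EDGE_DOWNLINKS
# Uplinks of one edge switch spread evenly over all director switches.
links_per_edge_per_director = EDGE_UPLINKS // DIRECTOR_SWITCHES
min_edge_switches = math.ceil(total_endpoints / EDGE_DOWNLINKS)

print(f"endpoints: {total_endpoints}, edge downlink ports: {downlink_ports}")
print(f"edge uplinks: {uplink_ports}, director ports: {director_ports}")
print(f"links per edge/director pair: {links_per_edge_per_director}")
print(f"minimum edge switches for these endpoints: {min_edge_switches}")
```

With these figures the 8600 endpoints fit into the edge downlink ports, the edge uplinks fit into the director ports, and each edge switch has 2 links to each of the 12 director switches.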


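Facility of Oakforest-PACS system

| Category | Item | Specification |
|---|---|---|
| Power consumption | | 4.2 MW (including cooling) ➙ actually around 3.0 MW |
| # of racks | | 102 |
| Cooling system, compute node | Type | Warm-water cooling: direct cooling (CPU), rear door cooling (except CPU) |
| Cooling system, compute node | Facility | Cooling tower & Chiller |
| Cooling system, others | Type | Air cooling |
| Cooling system, others | Facility | PAC |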


Software of Oakforest-PACS

| Item | Software |
|---|---|
| OS | Compute node: CentOS 7, McKernel. Login node: Red Hat Enterprise Linux 7 |
| Compiler | gcc, Intel compiler (C, C++, Fortran) |
| MPI | Intel MPI, MVAPICH2 |
| Library | Intel MKL, LAPACK, FFTW, SuperLU, PETSc, METIS, Scotch, ScaLAPACK, GNU Scientific Library, NetCDF, Parallel netCDF, Xabclib, ppOpen-HPC, ppOpen-AT, MassiveThreads |
| Application | mpijava, XcalableMP, OpenFOAM, ABINIT-MP, PHASE system, FrontFlow/blue, FrontISTR, REVOCAP, OpenMX, xTAPP, AkaiKKR, MODYLAS, ALPS, feram, GROMACS, BLAST, R packages, Bioconductor, BioPerl, BioRuby |
| Distributed FS | Globus Toolkit, Gfarm |
| Job Scheduler | Fujitsu Technical Computing Suite |
| Debugger | Allinea DDT |
| Profiler | Intel VTune Amplifier, Trace Analyzer & Collector |


TOP500 list on Nov. 2017 (#50)

| # | Machine | Architecture | Country | Rmax (TFLOPS) | Rpeak (TFLOPS) | MFLOPS/W |
|---|---|---|---|---|---|---|
| 1 | TaihuLight, NSCW | MPP (Sunway, SW26010) | China | 93,014.6 | 125,435.9 | 6051.3 |
| 2 | Tianhe-2 (MilkyWay-2), NSCG | Cluster (NUDT, CPU + KNC) | China | 33,862.7 | 54,902.4 | 1901.5 |
| 3 | Piz Daint, CSCS | MPP (Cray, XC50: CPU + GPU) | Switzerland | 19,590.0 | 25,326.3 | 10398.0 |
| 4 | Gyoukou, JAMSTEC | MPP (Exascaler, PEZY-SC2) | Japan | 19,125.8 | 28,192.0 | 14167.3 |
| 5 | Titan, ORNL | MPP (Cray, XK7: CPU + GPU) | United States | 17,590.0 | 27,112.5 | 2142.8 |
| 6 | Sequoia, LLNL | MPP (IBM, BlueGene/Q) | United States | 17,173.2 | 20,132.7 | 2176.6 |
| 7 | Trinity, NNSA/LANL/SNL | MPP (Cray, XC40: MIC) | United States | 14,137.3 | 43,902.6 | 3667.8 |
| 8 | Cori, NERSC-LBNL | MPP (Cray, XC40: KNL) | United States | 14,014.7 | 27,880.7 | 3556.7 |
| 9 | Oakforest-PACS, JCAHPC | Cluster (Fujitsu, KNL) | Japan | 13,554.6 | 25,004.9 | 4985.1 |
| 10 | K Computer, RIKEN AICS | MPP (Fujitsu) | Japan | 10,510.0 | 11,280.4 | 830.2 |
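Two derived quantities help when reading this list: the HPL efficiency (Rmax / Rpeak) and the power implied by the MFLOPS/W column. The sketch below computes both for three of the rows; the function names are illustrative, and the implied power is only an estimate of the power drawn during the HPL run, not a facility figure.

```python
# Derived metrics from selected rows of the list above (illustrative sketch).
# Each tuple: (machine, Rmax in TFLOPS, Rpeak in TFLOPS, MFLOPS/W).
rows = [
    ("Cori", 14014.7, 27880.7, 3556.7),
    ("Oakforest-PACS", 13554.6, 25004.9, 4985.1),
    ("K Computer", 10510.0, 11280.4, 830.2),
]


def hpl_efficiency(rmax_tflops: float, rpeak_tflops: float) -> float:
    """Fraction of theoretical peak achieved by HPL."""
    return rmax_tflops / rpeak_tflops


def implied_power_mw(rmax_tflops: float, mflops_per_watt: float) -> float:
    """Power implied by Rmax and MFLOPS/W.

    TFLOPS * 1e6 = MFLOPS; MFLOPS / (MFLOPS/W) = W; W / 1e6 = MW.
    """
    return rmax_tflops * 1e6 / mflops_per_watt / 1e6


results = {
    name: (hpl_efficiency(rmax, rpeak), implied_power_mw(rmax, mfw))
    for name, rmax, rpeak, mfw in rows
}

for name, (eff, power) in results.items():
    print(f"{name:15s} efficiency {eff:6.1%}  implied power {power:5.2f} MW")
```

For Oakforest-PACS this gives an HPL efficiency of roughly 54% and an implied power of about 2.7 MW.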


Post-K Computer and OFP

• OFP fills the gap between K Computer and Post-K Computer
  • Post-K Computer is planned to be installed in the 2020-2021 time frame
  • K Computer will be shut down around 2018-2019 ??
• Two pieces of system software developed at AICS RIKEN for Post-K Computer
  • McKernel
    • OS for the many-core era, for a large number of thin cores, without OS jitter and with core binding
    • Primary OS (based on Linux) on Post-K, and application development goes ahead
  • XcalableMP (XMP) (in collaboration with U. Tsukuba)
    • Parallel programming language for directive-based easy coding on distributed memory systems
    • Not like explicit message passing with MPI


OFP resource sharing program (nation-wide)

• JCAHPC (20%)
  • HPCI – HPC Infrastructure program in Japan to share all supercomputers (free!)
  • Big challenge special use (full system size) – opportunity to use the entire 8208 CPUs by just one project for 24 hours, at the end of every month
• U. Tsukuba (23.5%)
  • Interdisciplinary Academic Program (free!)
  • Large scale general use
• U. Tokyo (56.5%)
  • General use
  • Industrial trial use
  • Educational use
  • Young & Female special use
• Ordinary jobs can use up to 2048 nodes/job


Research Area based on CPU Hours

Oakforest-PACS in FY.2017 (TENTATIVE: 2017.4~2017.9)

[Pie chart. Research areas: Engineering, Earth/Space, Material, Energy/Physics, Information Sci., Education, Industry, Bio, Social Sci. & Economics, Data. Applications labeled on the chart: NICAM-COCO, GHYDRA, Seism3D, SALMON/ARTED, Lattice QCD.]


Performance variation between nodes

• most of the time is consumed by the Hamiltonian calculation
  • not including communication time
  • domain size is equal for all nodes
• root cause of strong scaling saturation
• performance gap exists on any material
• Non-algorithmic load imbalance
  ➢ dynamic clock adjustment (DVFS) on turbo boost is applied individually on all processors
  ➢ it is observed under the same condition of nodes
  ➢ on KNL, more sensitive than on Xeon
  ➢ serious performance degradation on a synchronized large scale system

[Stacked bar chart: normalized elapsed time per iteration (lower is faster), normalized to best case, for the Best and Worst nodes. Legend: Hamiltonian, Current, Misc. computation, Communication. Hamiltonian segment: 0.764 (Best) vs 0.925 (Worst). Other segment labels: 0.127, 0.109, 0.095, 0.126.]
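Why a per-node clock difference hurts a synchronized job can be illustrated with a toy model: in a bulk-synchronous iteration every node waits for the slowest one, so the step time is the maximum over nodes, not the average. The sketch below uses the two Hamiltonian values from the chart (0.764 for the best node, 0.925 for the worst); the 2048-node job with exactly one slow node is a hypothetical scenario chosen for illustration.

```python
# Toy model of non-algorithmic load imbalance (illustrative sketch).
HAMILTONIAN_BEST = 0.764    # normalized time on the best node (from the chart)
HAMILTONIAN_WORST = 0.925   # normalized time on the worst node (from the chart)


def synchronized_step_time(per_node_times: list[float]) -> float:
    """All nodes synchronize each iteration, so the slowest node sets the pace."""
    return max(per_node_times)


def parallel_efficiency(per_node_times: list[float]) -> float:
    """Average useful work time divided by the synchronized step time."""
    mean_time = sum(per_node_times) / len(per_node_times)
    return mean_time / synchronized_step_time(per_node_times)


# Hypothetical job: 2047 nodes run at the best speed, one node at the worst.
times = [HAMILTONIAN_BEST] * 2047 + [HAMILTONIAN_WORST]

slowdown = HAMILTONIAN_WORST / HAMILTONIAN_BEST
step_time = synchronized_step_time(times)
efficiency = parallel_efficiency(times)

print(f"worst/best slowdown  : {slowdown:.3f}x")
print(f"synchronized step    : {step_time:.3f}")
print(f"parallel efficiency  : {efficiency:.1%}")
```

In this toy scenario a single slow node is enough to stretch every iteration by about 21%, even though the domain size is identical on all nodes.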


Operation summary

• Memory model
  • basically 50:50 for cache:flat modes
  • started watching queue conditions to “gently” change the ratio (~15%)
  • planning to introduce “dynamic on-demand switching” on a job-by-job basis
• KNL CPU
  • mostly good; the failure rate is well below the estimate by Fujitsu
  • stable enough to support jobs of up to 2048 nodes
• OPA network
  • at first there was a problem at boot time, but it is now almost fixed
    -> this was the main obstacle to dynamic memory mode changes
  • hundreds of links were replaced due to initial failures, but the network is now stable
• Special operation
  • every month, a 24-hour operation in which just one project occupies the entire system


New machine planned at

CCS, U. Tsukuba

“PACS-X” with GPU+FPGA


CCS at University of Tsukuba

• Center for Computational Sciences
• Established in 1992
  • 12 years as Center for Computational Physics
  • Reorganized as Center for Computational Sciences in 2004
• Daily collaborative research with two kinds of faculty researchers (about 35 in total)
  • Computational Scientists, who have NEEDS (applications)
  • Computer Scientists, who have SEEDS (system & solution)


PAX (PACS) series history in U. Tsukuba

• Started in 1977 (by Hoshino and Kawai)
• 1st generation PACS in 1978 with 9 CPUs
• 6th generation CP-PACS awarded #1 in TOP500

[Timeline photos: 1978, 1st PACS-9; 1980, 2nd PAX-32; 1989, 5th QCDPAX; 1996, 6th CP-PACS (#1 in the world); 2006, PACS-CS (7th), first PC cluster solution; 2012~2013, HA-PACS (8th), introducing GPU/FPGA]

| Year | Name | Performance |
|---|---|---|
| 1978 | PACS-9 | 7 KFLOPS |
| 1980 | PACS-32 | 500 KFLOPS |
| 1983 | PAX-128 | 4 MFLOPS |
| 1984 | PAX-32J | 3 MFLOPS |
| 1989 | QCDPAX | 14 GFLOPS |
| 1996 | CP-PACS | 614 GFLOPS |
| 2006 | PACS-CS | 14.3 TFLOPS |
| 2012~13 | HA-PACS (PACS-VIII) | 1.166 PFLOPS |
| 2014 | COMA (PACS-IX) | 1.001 PFLOPS |

• Co-design by computational scientists and computer scientists
• Application-driven development
• Accumulation of experience through continuous development


AiS

• AiS: Accelerator in Switch
• Using FPGA not only for computation offloading but also for communication
• Combining computation offloading and communication among FPGAs for ultra-low latency on FPGA computing
• Especially effective on communication-related small/medium computation (such as collective communication)
• Covering GPU non-suited computation by FPGA
• OpenCL-enabled programming for application users

[Diagram: a node with CPU, GPU, and FPGA connected via PCIe; the FPGA performs both comp. and comm. and attaches to the high speed interconnect]


AiS computation model

[Diagram: two nodes, each with CPU, GPU, and FPGA connected via PCIe. The CPU invokes GPU/FPGA kernels; data transfer via PCIe (invoked from FPGA). Each FPGA performs comp. and comm.: collective or specialized communication with computation. The FPGAs are linked by a QSFP28 interconnect through an Ethernet Switch.]


Evaluation test-bed

• Pre-PACS-X (PPX)
  • CCS, U. Tsukuba
  • PACS-X prototype

[Diagram: comp. node with two CPUs (Xeon E5-2660 v4 each); GPU: NVIDIA P100 x2; FPGA: Bittware A10PL4 with QSFP+ : 40Gbps x2; HCA: Mellanox IB/EDR (IB/EDR : 100Gbps)]

| Item | Software |
|---|---|
| Host OS | CentOS 7.3 |
| Host Compiler | gcc 4.8.5 |
| FPGA Compiler | Intel FPGA SDK for OpenCL, Intel Quartus Prime Pro Version 17.0.0 Build 289 |


Time Line

• Feb. 2018: Request for Information
• Apr. 2018: Request for Comment (the following are just requirements)
  • basic specification: AiS-based large cluster with up to 256 nodes
  • V100 class of GPU x2
  • Stratix10 or UltraScale class of FPGA x1 (25% of total count of nodes)
  • OPA x2 or InfiniBand HDR class interconnection
• Aug. 2018: Request for Proposal
  • Bidding closes at the beginning of Sep. 2018
• Mar. 2019: Deployment
• Apr. 2019: Start of official operation
