DAL Experiment Outputs 6to10

Uploaded by

sujaykulkarni755

10/20/23, 3:18 PM Experiment_6

In [2]: import pandas as pd

import scipy

import numpy as np

from sklearn.preprocessing import MinMaxScaler

import seaborn as sns

import matplotlib.pyplot as plt

df = pd.read_csv('diabetes.csv')

In [3]: print(df.head())

Pregnancies Glucose BloodPressure SkinThickness Insulin BMI \

0 6 148 72 35 0 33.6

1 1 85 66 29 0 26.6

2 8 183 64 0 0 23.3

3 1 89 66 23 94 28.1

4 0 137 40 35 168 43.1

DiabetesPedigreeFunction Age Outcome

0 0.627 50 1

1 0.351 31 0

2 0.672 32 1

3 0.167 21 0

4 2.288 33 1

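In [4]: df.info  # without parentheses this echoes the bound method and the frame's repr; df.info() prints dtypes and non-null counts

Out[4]: <bound method DataFrame.info of Pregnancies Glucose BloodPressure SkinThickness Insulin BMI \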

0 6 148 72 35 0 33.6

1 1 85 66 29 0 26.6

2 8 183 64 0 0 23.3

3 1 89 66 23 94 28.1

4 0 137 40 35 168 43.1

.. ... ... ... ... ... ...

763 10 101 76 48 180 32.9

764 2 122 70 27 0 36.8

765 5 121 72 23 112 26.2

766 1 126 60 0 0 30.1

767 1 93 70 31 0 30.4

DiabetesPedigreeFunction Age Outcome

0 0.627 50 1

1 0.351 31 0

2 0.672 32 1

3 0.167 21 0

4 2.288 33 1

.. ... ... ...

763 0.171 63 0

764 0.340 27 0

765 0.245 30 0

766 0.349 47 1

767 0.315 23 0

[768 rows x 9 columns]>

In [5]: df.isnull().sum()

Out[5]: Pregnancies 0

Glucose 0

BloodPressure 0

SkinThickness 0

Insulin 0

BMI 0

DiabetesPedigreeFunction 0

Age 0

Outcome 0

dtype: int64

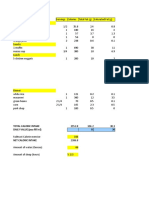

In [6]: df.describe()

Out[6]: Pregnancies Glucose BloodPressure SkinThickness Insulin BMI DiabetesPedigre

count 768.000000 768.000000 768.000000 768.000000 768.000000 768.000000 7

mean 3.845052 120.894531 69.105469 20.536458 79.799479 31.992578

std 3.369578 31.972618 19.355807 15.952218 115.244002 7.884160

min 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000

25% 1.000000 99.000000 62.000000 0.000000 0.000000 27.300000

50% 3.000000 117.000000 72.000000 23.000000 30.500000 32.000000

75% 6.000000 140.250000 80.000000 32.000000 127.250000 36.600000

max 17.000000 199.000000 122.000000 99.000000 846.000000 67.100000

In [24]: fig, axs = plt.subplots(9, 1, dpi=95, figsize=(7, 17))

# one horizontal boxplot per column, stacked vertically
for ax, col in zip(axs, df.columns):
    ax.boxplot(df[col], vert=False)
    ax.set_ylabel(col)

plt.show()

In [25]: # Identify the quartiles

q1, q3 = np.percentile(df['Insulin'], [25, 75])

# Calculate the interquartile range

iqr = q3 - q1

# Calculate the lower and upper bounds

lower_bound = q1 - (1.5 * iqr)

upper_bound = q3 + (1.5 * iqr)

# Drop the outliers

clean_data = df[(df['Insulin'] >= lower_bound)

& (df['Insulin'] <= upper_bound)]

# Identify the quartiles

q1, q3 = np.percentile(clean_data['Pregnancies'], [25, 75])

# Calculate the interquartile range

iqr = q3 - q1

# Calculate the lower and upper bounds

lower_bound = q1 - (1.5 * iqr)

upper_bound = q3 + (1.5 * iqr)

# Drop the outliers

clean_data = clean_data[(clean_data['Pregnancies'] >= lower_bound)

& (clean_data['Pregnancies'] <= upper_bound)]

# Identify the quartiles

q1, q3 = np.percentile(clean_data['Age'], [25, 75])

# Calculate the interquartile range

iqr = q3 - q1

# Calculate the lower and upper bounds

lower_bound = q1 - (1.5 * iqr)

upper_bound = q3 + (1.5 * iqr)

# Drop the outliers

clean_data = clean_data[(clean_data['Age'] >= lower_bound)

& (clean_data['Age'] <= upper_bound)]

# Identify the quartiles

q1, q3 = np.percentile(clean_data['Glucose'], [25, 75])

# Calculate the interquartile range

iqr = q3 - q1

# Calculate the lower and upper bounds

lower_bound = q1 - (1.5 * iqr)

upper_bound = q3 + (1.5 * iqr)

# Drop the outliers

clean_data = clean_data[(clean_data['Glucose'] >= lower_bound)

& (clean_data['Glucose'] <= upper_bound)]

# Identify the quartiles

q1, q3 = np.percentile(clean_data['BloodPressure'], [25, 75])

# Calculate the interquartile range

iqr = q3 - q1

# Calculate the lower and upper bounds

lower_bound = q1 - (0.75 * iqr)

upper_bound = q3 + (0.75 * iqr)

# Drop the outliers

clean_data = clean_data[(clean_data['BloodPressure'] >= lower_bound)

& (clean_data['BloodPressure'] <= upper_bound)]

# Identify the quartiles

q1, q3 = np.percentile(clean_data['BMI'], [25, 75])

# Calculate the interquartile range

iqr = q3 - q1

# Calculate the lower and upper bounds

lower_bound = q1 - (1.5 * iqr)

upper_bound = q3 + (1.5 * iqr)

# Drop the outliers

clean_data = clean_data[(clean_data['BMI'] >= lower_bound)

& (clean_data['BMI'] <= upper_bound)]

# Identify the quartiles

q1, q3 = np.percentile(clean_data['DiabetesPedigreeFunction'], [25, 75])

# Calculate the interquartile range

iqr = q3 - q1

# Calculate the lower and upper bounds

lower_bound = q1 - (1.5 * iqr)

upper_bound = q3 + (1.5 * iqr)

# Drop the outliers

clean_data = clean_data[(clean_data['DiabetesPedigreeFunction'] >= lower_bound)

& (clean_data['DiabetesPedigreeFunction'] <= upper_bound)]
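
The cell above repeats the same four steps (quartiles, IQR, bounds, filter) for each column. A minimal sketch of how that could be factored into one helper is shown here. The function name `drop_iqr_outliers`, the parameter `k`, and the small `toy` frame are assumptions made for illustration and are not part of the notebook; `k` stands in for the 1.5 multiplier (the notebook uses 0.75 for BloodPressure).

```python
import numpy as np
import pandas as pd


def drop_iqr_outliers(frame, column, k=1.5):
    """Keep rows whose `column` value lies within [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = np.percentile(frame[column], [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return frame[(frame[column] >= lower) & (frame[column] <= upper)]


# Hypothetical stand-in for diabetes.csv so the sketch runs on its own.
toy = pd.DataFrame({
    "Insulin": [0, 30, 35, 40, 45, 50, 846],
    "BMI": [22.0, 25.0, 27.5, 30.0, 31.0, 33.0, 34.0],
})

# Filter column by column, as the notebook does, each pass working on
# the rows that survived the previous one.
clean = toy
for column, k in {"Insulin": 1.5, "BMI": 1.5}.items():
    clean = drop_iqr_outliers(clean, column, k)
```

Because each pass recomputes the quartiles on the already-filtered rows, the order of the columns can change which rows survive. Note also that the later cells in this notebook keep working on `df`, so the filtered `clean_data` only takes effect if it is passed on explicitly.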

In [26]: #correlation

corr = df.corr()

plt.figure(dpi=130)

sns.heatmap(corr, annot=True, fmt='.2f')

plt.show()

In [27]: corr['Outcome'].sort_values(ascending = False)

Out[27]: Outcome 1.000000

Glucose 0.466581

BMI 0.292695

Age 0.238356

Pregnancies 0.221898

DiabetesPedigreeFunction 0.173844

Insulin 0.130548

SkinThickness 0.074752

BloodPressure 0.065068

Name: Outcome, dtype: float64

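In [28]: # value_counts() sorts by frequency, so take the labels from its index
counts = df.Outcome.value_counts()
plt.pie(counts,
        labels=counts.index.map({1: 'Diabetes', 0: 'Not Diabetes'}),
        autopct='%.f', shadow=True)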

plt.title('Outcome Proportionality')

plt.show()

In [29]: # separate array into input and output components

X = df.drop(columns =['Outcome'])

Y = df.Outcome

In [30]: # initialising the MinMaxScaler

scaler = MinMaxScaler(feature_range=(0, 1))

# learn each column's minimum and maximum, then rescale every feature to [0, 1]

rescaledX = scaler.fit_transform(X)

rescaledX[:5]

Out[30]: array([[0.35294118, 0.74371859, 0.59016393, 0.35353535, 0. ,

0.50074516, 0.23441503, 0.48333333],

[0.05882353, 0.42713568, 0.54098361, 0.29292929, 0. ,

0.39642325, 0.11656704, 0.16666667],

[0.47058824, 0.91959799, 0.52459016, 0. , 0. ,

0.34724292, 0.25362938, 0.18333333],

[0.05882353, 0.44723618, 0.54098361, 0.23232323, 0.11111111,

0.41877794, 0.03800171, 0. ],

[0. , 0.68844221, 0.32786885, 0.35353535, 0.19858156,

0.64232489, 0.94363792, 0.2 ]])

In [31]: from sklearn.preprocessing import StandardScaler

scaler = StandardScaler().fit(X)

rescaledX = scaler.transform(X)

rescaledX[:5]

Out[31]: array([[ 0.63994726, 0.84832379, 0.14964075, 0.90726993, -0.69289057,

0.20401277, 0.46849198, 1.4259954 ],

[-0.84488505, -1.12339636, -0.16054575, 0.53090156, -0.69289057,

-0.68442195, -0.36506078, -0.19067191],

[ 1.23388019, 1.94372388, -0.26394125, -1.28821221, -0.69289057,

-1.10325546, 0.60439732, -0.10558415],

[-0.84488505, -0.99820778, -0.16054575, 0.15453319, 0.12330164,

-0.49404308, -0.92076261, -1.04154944],

[-1.14185152, 0.5040552 , -1.50468724, 0.90726993, 0.76583594,

1.4097456 , 5.4849091 , -0.0204964 ]])
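
The two scalers above apply simple per-column formulas, and a short hand computation may make the outputs easier to read. The sketch below uses a made-up column `x` (an assumption, not data from diabetes.csv) and plain NumPy. Min-max scaling computes (x - min) / (max - min); standardization computes (x - mean) / std with the population standard deviation, which is what `StandardScaler` uses.

```python
import numpy as np

# Hypothetical single feature column; not taken from diabetes.csv.
x = np.array([2.0, 4.0, 6.0, 8.0])

# Min-max scaling: (x - min) / (max - min) maps the column onto [0, 1].
minmax = (x - x.min()) / (x.max() - x.min())

# Standardization: (x - mean) / std gives mean 0 and unit variance.
# NumPy's default std uses ddof=0 (population), matching StandardScaler.
standard = (x - x.mean()) / x.std()
```

The same arithmetic reproduces the first entry of Out[30]: Pregnancies = 6 with a column minimum of 0 and maximum of 17 gives 6 / 17 ≈ 0.3529.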

10/20/23, 3:34 PM Experiment_7

In [2]: # Python code to demonstrate the working of mean()

# importing statistics to handle statistical operations

import statistics

li = [1,2,3,3,2,2,21]

In [3]: print("The average of list values is:", statistics.mean(li))

The average of list values is: 4.857142857142857

In [4]: from statistics import median

In [5]: from fractions import Fraction as fr

In [6]: data1 = (2,3,4,5,7,9,11)

In [7]: print("Median of data-set 1 is %s" %(median(data1)))

Median of data-set 1 is 5

In [8]: from statistics import mode

data1 = (2,3,3,4,5,5,5,5,6,6,6,7)

In [9]: print("Mode of data set 1 is %s"%(mode(data1)))

Mode of data set 1 is 5

In [15]: arr = [1,2,3,4,5]

Maximum = max(arr)

In [16]: Minimum = min(arr)

In [17]: Range = Maximum-Minimum

In [20]: print("Maximum = {}, Minimum = {} and Range = {}".format(Maximum,Minimum,Range))

Maximum = 5, Minimum = 1 and Range = 4

In [21]: from statistics import variance

sample1 = (1,2,5,4,8,9,12)

print("Variance of Sample1 is %s"%(variance(sample1)))

Variance of Sample1 is 15.80952380952381

In [22]: from statistics import stdev

sample1 = (1,2,5,4,8,9,12)

print("The Standard Deviation of Sample1 is %s" %(stdev(sample1)))

The Standard Deviation of Sample1 is 3.9761191895520196
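
`variance` and `stdev` in the `statistics` module are the sample versions (divisor n - 1). As a rough check, the sketch below recomputes both by hand for the same `sample1` and also shows the population variant (divisor n), which `statistics.pvariance` provides. The intermediate names `ss`, `sample_var`, `population_var`, and `sample_sd` are chosen here for illustration.

```python
import math
import statistics

sample1 = (1, 2, 5, 4, 8, 9, 12)
n = len(sample1)
mean = sum(sample1) / n

# Sum of squared deviations from the mean.
ss = sum((x - mean) ** 2 for x in sample1)

sample_var = ss / (n - 1)          # divisor used by statistics.variance
population_var = ss / n            # divisor used by statistics.pvariance
sample_sd = math.sqrt(sample_var)  # corresponds to statistics.stdev
```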

10/20/23, 4:28 PM Experiment_8

In [1]: #importing pandas as pd

import pandas as pd

df=pd.read_csv("nba.csv")

In [2]: print(df.head())

Name Team Number Position Age Height Weight \

0 Avery Bradley Boston Celtics 0.0 PG 25.0 6-2 180.0

1 Jae Crowder Boston Celtics 99.0 SF 25.0 6-6 235.0

2 John Holland Boston Celtics 30.0 SG 27.0 6-5 205.0

3 R.J. Hunter Boston Celtics 28.0 SG 22.0 6-5 185.0

4 Jonas Jerebko Boston Celtics 8.0 PF 29.0 6-10 231.0

College Salary

0 Texas 7730337.0

1 Marquette 6796117.0

2 Boston University NaN

3 Georgia State 1148640.0

4 NaN 5000000.0

In [3]: df1=df.groupby('Team')

In [4]: df1.first()

Out[4]:
Team                    Name                   Number  Position  Age   Height  Weight  College                Salary
Atlanta Hawks           Kent Bazemore          24.0    SF        26.0  6-5     201.0   Old Dominion           2000000
Boston Celtics          Avery Bradley          0.0     PG        25.0  6-2     180.0   Texas                  7730337
Brooklyn Nets           Bojan Bogdanovic       44.0    SG        27.0  6-8     216.0   Oklahoma State         3425510
Charlotte Hornets       Nicolas Batum          5.0     SG        27.0  6-8     200.0   Virginia Commonwealth  13125306
Chicago Bulls           Cameron Bairstow       41.0    PF        25.0  6-9     250.0   New Mexico             845059
Cleveland Cavaliers     Matthew Dellavedova    8.0     PG        25.0  6-4     198.0   Saint Mary's           1147276
Dallas Mavericks        Justin Anderson        1.0     SG        22.0  6-6     228.0   Virginia               1449000
Denver Nuggets          Darrell Arthur         0.0     PF        28.0  6-9     235.0   Kansas                 2814000
Detroit Pistons         Joel Anthony           50.0    C         33.0  6-9     245.0   UNLV                   2500000
Golden State Warriors   Leandro Barbosa        19.0    SG        33.0  6-3     194.0   North Carolina         2500000
Houston Rockets         Trevor Ariza           1.0     SF        30.0  6-8     215.0   UCLA                   8193030
Indiana Pacers          Lavoy Allen            5.0     PF        27.0  6-9     255.0   Temple                 4050000
Los Angeles Clippers    Cole Aldrich           45.0    C         27.0  6-11    250.0   Kansas                 1100602
Los Angeles Lakers      Brandon Bass           2.0     PF        31.0  6-8     250.0   LSU                    3000000
Memphis Grizzlies       Jordan Adams           3.0     SG        21.0  6-5     209.0   UCLA                   1404600
Miami Heat              Chris Bosh             1.0     PF        32.0  6-11    235.0   Georgia Tech           22192730
Milwaukee Bucks         Giannis Antetokounmpo  34.0    SF        21.0  6-11    222.0   Arizona                1953960
Minnesota Timberwolves  Nemanja Bjelica        88.0    PF        28.0  6-10    240.0   Louisville             3950001
New Orleans Pelicans    Alexis Ajinca          42.0    C         28.0  7-2     248.0   California             4389607
New York Knicks         Arron Afflalo          4.0     SG        30.0  6-5     210.0   UCLA                   8000000
Oklahoma City Thunder   Steven Adams           12.0    C         22.0  7-0     255.0   Pittsburgh             2279040
Orlando Magic           Dewayne Dedmon         3.0     C         26.0  7-0     245.0   USC                    947276
Philadelphia 76ers      Elton Brand            42.0    PF        37.0  6-9     254.0   Duke                   947276
Phoenix Suns            Eric Bledsoe           2.0     PG        26.0  6-1     190.0   Kentucky               13500000
Portland Trail Blazers  Cliff Alexander        34.0    PF        20.0  6-8     240.0   Kansas                 525093
Sacramento Kings        Quincy Acy             13.0    SF        25.0  6-7     240.0   Baylor                 981348
San Antonio Spurs       LaMarcus Aldridge      12.0    PF        30.0  6-11    240.0   Texas                  19689000
Toronto Raptors         Bismack Biyombo        8.0     C         23.0  6-9     245.0   Missouri               2814000
Utah Jazz               Trevor Booker          33.0    PF        28.0  6-8     228.0   Clemson                4775000
Washington Wizards      Alan Anderson          6.0     SG        33.0  6-6     220.0   Michigan State         4000000

In [5]: df1.get_group('Boston Celtics')

Out[5]:
    Name             Team            Number  Position  Age   Height  Weight  College            Salary
0   Avery Bradley    Boston Celtics  0.0     PG        25.0  6-2     180.0   Texas              7730337.0
1   Jae Crowder      Boston Celtics  99.0    SF        25.0  6-6     235.0   Marquette          6796117.0
2   John Holland     Boston Celtics  30.0    SG        27.0  6-5     205.0   Boston University  NaN
3   R.J. Hunter      Boston Celtics  28.0    SG        22.0  6-5     185.0   Georgia State      1148640.0
4   Jonas Jerebko    Boston Celtics  8.0     PF        29.0  6-10    231.0   NaN                5000000.0
5   Amir Johnson     Boston Celtics  90.0    PF        29.0  6-9     240.0   NaN                12000000.0
6   Jordan Mickey    Boston Celtics  55.0    PF        21.0  6-8     235.0   LSU                1170960.0
7   Kelly Olynyk     Boston Celtics  41.0    C         25.0  7-0     238.0   Gonzaga            2165160.0
8   Terry Rozier     Boston Celtics  12.0    PG        22.0  6-2     190.0   Louisville         1824360.0
9   Marcus Smart     Boston Celtics  36.0    PG        22.0  6-4     220.0   Oklahoma State     3431040.0
10  Jared Sullinger  Boston Celtics  7.0     C         24.0  6-9     260.0   Ohio State         2569260.0
11  Isaiah Thomas    Boston Celtics  4.0     PG        27.0  5-9     185.0   Washington         6912869.0
12  Evan Turner      Boston Celtics  11.0    SG        27.0  6-7     220.0   Ohio State         3425510.0
13  James Young      Boston Celtics  13.0    SG        20.0  6-6     215.0   Kentucky           1749840.0
14  Tyler Zeller     Boston Celtics  44.0    C         26.0  7-0     253.0   North Carolina     2616975.0

In [6]: import pandas as pd

df = pd.read_csv("nba.csv")

df2=df.groupby(['Team','Position'])

In [7]: df2.first()

Out[7]:
Team                Position  Name               Number  Age   Height  Weight  College               Salary
Atlanta Hawks       C         Al Horford         15.0    30.0  6-10    245.0   Florida               12000000.0
                    PF        Kris Humphries     43.0    31.0  6-9     235.0   Minnesota             1000000.0
                    PG        Dennis Schroder    17.0    22.0  6-1     172.0   Wake Forest           1763400.0
                    SF        Kent Bazemore      24.0    26.0  6-5     201.0   Old Dominion          2000000.0
                    SG        Tim Hardaway Jr.   10.0    24.0  6-6     205.0   Michigan              1304520.0
...                 ...       ...                ...     ...   ...     ...     ...                   ...
Washington Wizards  C         Marcin Gortat      13.0    32.0  6-11    240.0   North Carolina State  11217391.0
                    PF        Drew Gooden        90.0    34.0  6-10    250.0   Kansas                3300000.0
                    PG        Ramon Sessions     7.0     30.0  6-3     190.0   Nevada                2170465.0
                    SF        Jared Dudley       1.0     30.0  6-7     225.0   Boston College        4375000.0
                    SG        Alan Anderson      6.0     33.0  6-6     220.0   Michigan State        4000000.0

149 rows × 7 columns
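
A point that is easy to miss in the `first()` outputs above: `GroupBy.first()` returns the first non-null value of each column per group, so one output row can combine values from different source rows. The sketch below illustrates this on a tiny invented roster (the `toy` frame and its values are assumptions, not rows from nba.csv), alongside `get_group()` and a simple aggregation.

```python
import pandas as pd

# Hypothetical miniature roster; the notebook reads nba.csv instead.
toy = pd.DataFrame({
    "Team": ["A", "A", "B", "B"],
    "Position": ["PG", "SG", "PG", "PG"],
    "College": [None, "Texas", "Duke", "UCLA"],
    "Salary": [100.0, 300.0, None, 500.0],
})

by_team = toy.groupby("Team")

# first() takes the first non-null value in each column, per group,
# so Team A gets College "Texas" even though its first row has none.
first_rows = by_team.first()

# get_group() returns the original rows that belong to one key.
team_a = by_team.get_group("A")

# agg() reduces each group to summary statistics (NaN is skipped).
salary_stats = by_team["Salary"].agg(["mean", "max"])
```

If the literal first row of each group is wanted instead, `by_team.head(1)` or `by_team.nth(0)` are the usual alternatives.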

10/20/23, 4:35 PM Experiment 9 - Jupyter Notebook

In [4]: import matplotlib.pyplot as plt

from scipy import stats

x = [5,7,8,7,2,17,2,9,4,11,12,9,6]

y = [99,86,87,88,111,86,103,87,94,78,77,85,86]

slope, intercept,r,p,std_err=stats.linregress(x,y)

def myfunc(x):
    return slope * x + intercept

mymodel=list(map(myfunc,x))

plt.scatter(x,y)

plt.plot(x,mymodel)

plt.show()
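
For reference, the slope, intercept, and correlation coefficient that `stats.linregress` returns for this data can be reproduced from the ordinary least-squares formulas. The sketch below does so with NumPy only, on the same `x` and `y`; the helper name `predict` and the intermediates `dx`, `dy` are chosen here for illustration.

```python
import numpy as np

x = np.array([5, 7, 8, 7, 2, 17, 2, 9, 4, 11, 12, 9, 6], dtype=float)
y = np.array([99, 86, 87, 88, 111, 86, 103, 87, 94, 78, 77, 85, 86], dtype=float)

# Deviations from the means.
dx, dy = x - x.mean(), y - y.mean()

# Least-squares slope = Sxy / Sxx; the fitted line passes through the means.
slope = (dx * dy).sum() / (dx ** 2).sum()
intercept = y.mean() - slope * x.mean()

# Pearson correlation coefficient r = Sxy / sqrt(Sxx * Syy).
r = (dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum())


def predict(value):
    """Evaluate the fitted line at `value`."""
    return slope * value + intercept
```

The slope comes out at roughly -1.75 and r at roughly -0.76, so the fitted line falls as x grows and the linear relationship is fairly strong.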

10/20/23, 4:54 PM Experiment 10 - Jupyter Notebook

In [14]: import pandas as pd

from sklearn import linear_model

# Read the CSV file into a DataFrame

df = pd.read_csv("data1.csv")

# Drop rows with non-numeric values in the 'Weight' and 'Volume' columns

df = df[pd.to_numeric(df['Weight'], errors='coerce').notna()]

df = df[pd.to_numeric(df['Volume'], errors='coerce').notna()]

# Convert 'Weight' and 'Volume' columns to numeric type

df['Weight'] = pd.to_numeric(df['Weight'])

df['Volume'] = pd.to_numeric(df['Volume'])

# Define the independent variables (features) X and the dependent variable (target) Y

X = df[['Weight', 'Volume']]

Y = df['CO2']

# Create a linear regression model

regr = linear_model.LinearRegression()

# Fit the linear regression model to the data

regr.fit(X, Y)

# Predict the CO2 emissions for a new data point with 'Weight' = 2300 and 'Volume' = 1300

predictedCO2 = regr.predict([[2300, 1300]])

# Print the predicted CO2 emissions

print(predictedCO2)

[107.2087328]

C:\Users\Lenovo\anaconda3\Lib\site-packages\sklearn\base.py:464: UserWarning: X does not have valid feature names, but LinearRegression was fitted with feature names

warnings.warn(
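
The warning above appears because the model was fitted on a DataFrame with named columns but asked to predict from a plain nested list. One way to keep the two consistent is to wrap the new observation in a DataFrame with the same column names. The sketch below is a possible approach, not the notebook's code: the `cars` rows are invented stand-ins for data1.csv, and the names `features`, `model`, and `new_car` are illustrative.

```python
import pandas as pd
from sklearn.linear_model import LinearRegression

# Hypothetical rows standing in for data1.csv.
cars = pd.DataFrame({
    "Weight": [800, 1000, 1200, 1400, 1600],
    "Volume": [1000, 1200, 1500, 1600, 2000],
    "CO2": [92, 95, 99, 104, 110],
})

features = ["Weight", "Volume"]
model = LinearRegression().fit(cars[features], cars["CO2"])

# Wrapping the new observation in a DataFrame with the same column names
# keeps the input consistent with what the model was fitted on.
new_car = pd.DataFrame([[2300, 1300]], columns=features)
predicted = model.predict(new_car)
```

The predicted value itself does not depend on whether a list or a DataFrame is passed; only the warning does.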
